Archaea

[H]F Junkie · Joined Oct 19, 2004 · 11,826 messages

Vega multi-monitor bezel correction is still broken two months after release.

FreeSync is finally working for me after either a Windows reformat or a driver update; I'm not sure which. (It worked before, but driver updates broke it on two separate occasions on my system. Currently it's working.)

I've seriously just about lost my patience with this card and its buggy software.

I've really experienced a LOT of hassle with my AMD Vega cards. Quite honestly, I wouldn't recommend them to anyone at this point in time.

Someday they'll get it squared away, but that day has not yet come.

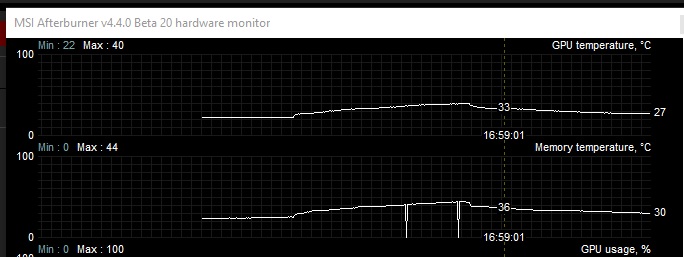

Right after I took this picture, the computer bluescreened and rebooted to a crazy upside-down image at a low resolution. Then it was in a funk for a while, blinking on and off and generally bugging out, until I hit reset again.

So far, after loading my OS fresh tonight, I've had to hard reboot four times with these stupid Vega cards and my three-monitor setup. If you just want a single monitor for gaming, it seems to work OK... but that's not why I bought Vega.

