defaultluser

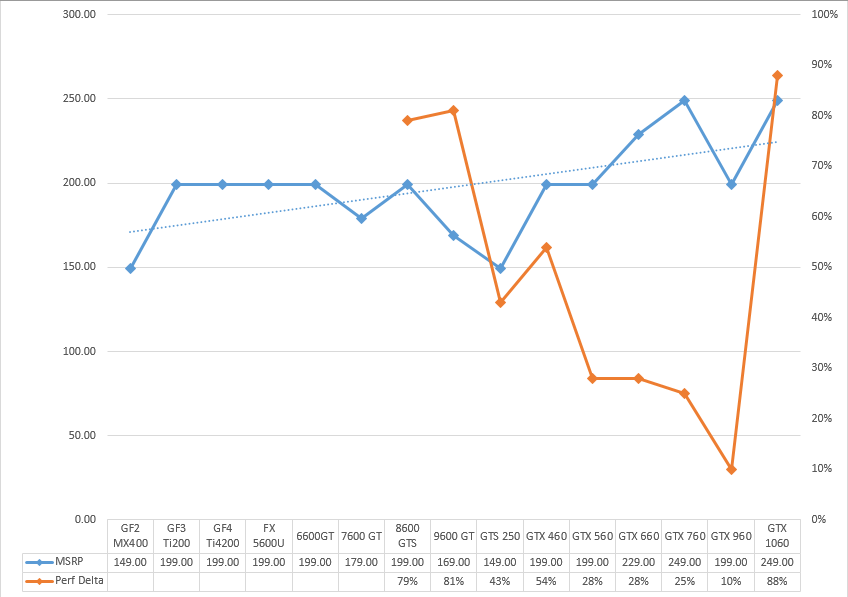

It could just be that it's getting harder to squeeze performance out of each process, especially now that die shrinks don't come as often as they used to.

During the golden age of video cards (1998 to 2008), companies relied on FOUR things to improve performance, in descending order of importance:

1. Die Shrinks (allowing you to add more functional units, OR lower power, allowing higher clocks at same power level)

2. Increasing power consumption (higher clocks beyond what the die shrink bought you). We went from the 16 W GeForce 256 to the 75 W 7800 GTX to the 150 W 8800 GTX to the 220 W GTX 280 in the span of ten years.

3. Increasing die size from one generation to the next (more functional units), as the industry got more experienced.

4. Architectural improvements. It's actually pretty rare to see a part like Maxwell, where the architectural improvements are AS important as the added functional units. Most of the time this is ~5-10% of the total performance. Sometimes it was hard to validate the efficiency of new features because no games used them for several years.

High-end graphics cards have not always been huge 600 mm² monsters; they started out rather small. The GeForce 256 was a paltry 111 mm², and die sizes grew with each generation after that. This growth, combined with a die shrink AND a power increase, allowed some next-generation cards to more than double performance:

GeForce 3: 128 mm²

FX 5800 XT: 200 mm²

7800 GTX: 333 mm²

8800 GTX: 484 mm²

GTX 280: 575 mm²
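To put rough numbers on that growth, here is a quick sketch that works out how much each die grew over the previous one in the list. The die sizes are copied straight from this post and not independently verified; the names `die_sizes_mm2`, `growth_factors`, and `total_growth` are just made up for the illustration.

```python
# Die sizes (mm^2) exactly as listed in the post; not independently verified.
die_sizes_mm2 = [
    ("GeForce 256", 111),
    ("GeForce 3", 128),
    ("FX 5800 XT", 200),
    ("7800 GTX", 333),
    ("8800 GTX", 484),
    ("GTX 280", 575),
]


def growth_factors(sizes):
    """Return (name, factor) pairs: each die's area relative to the previous entry."""
    return [
        (name, area / prev_area)
        for (_, prev_area), (name, area) in zip(sizes, sizes[1:])
    ]


def total_growth(sizes):
    """Return the last die's area divided by the first die's area."""
    return sizes[-1][1] / sizes[0][1]


if __name__ == "__main__":
    for name, factor in growth_factors(die_sizes_mm2):
        print(f"{name}: {factor:.2f}x the previous die")
    print(f"Overall: {total_growth(die_sizes_mm2):.2f}x")
```

Going by these figures, the flagship die grew a bit more than 5x in area from the GeForce 256 to the GTX 280, and that is before counting whatever the die shrinks added on top.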

And Nvidia has been stuck in the die size AND power consumption (250 W) corner ever since, dependent on die shrinks and architectural improvements ALONE. And those die shrinks have slowed down as well.
