Rasterizer (n00b, joined Aug 4, 2017, 40 messages)

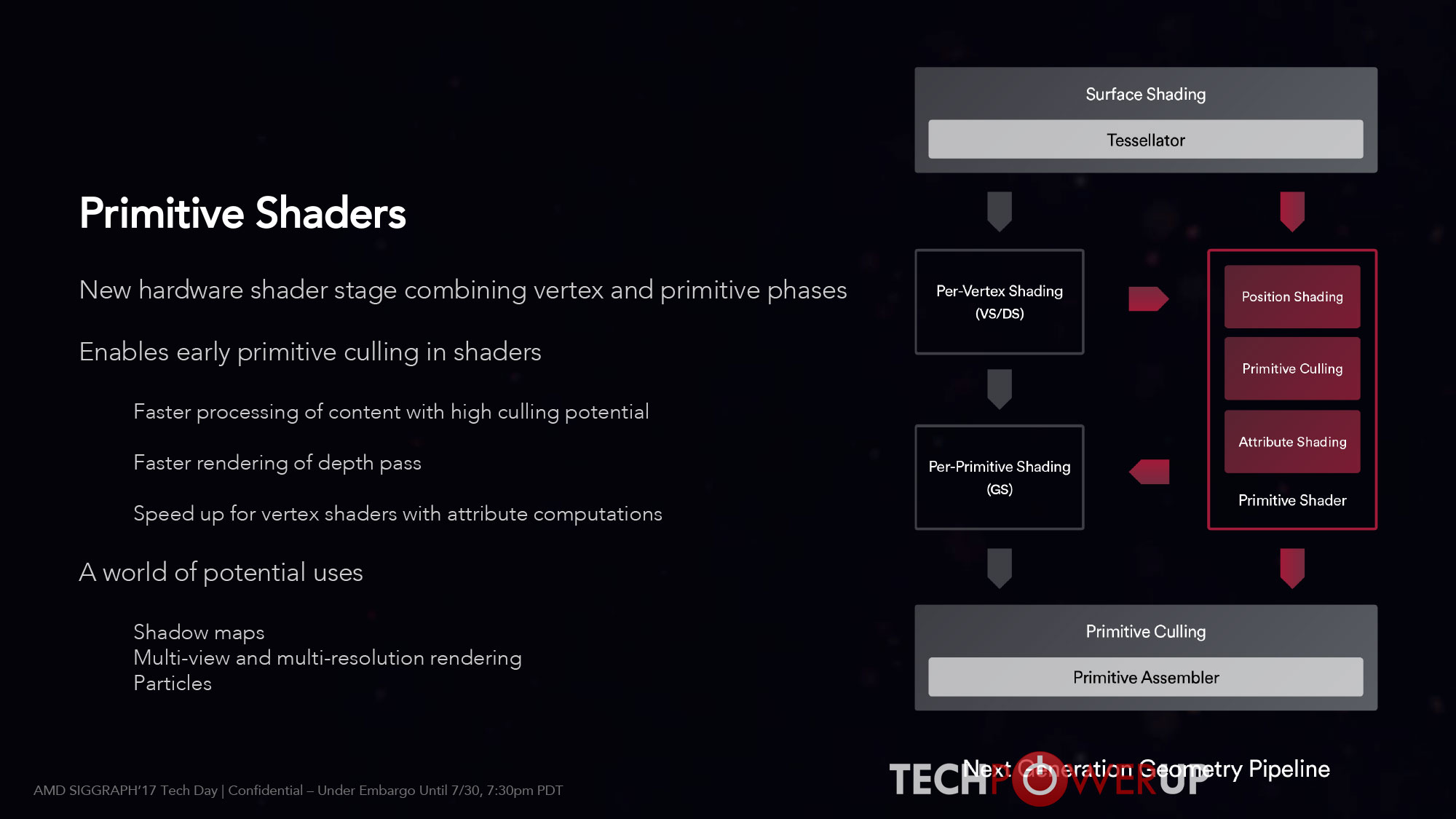

> By definition, if you replace the fixed-function shaders in the geometry engines with generalized non-compute shaders, they become programmable, and thus of course they can be arbitrarily switched between behaving like Fiji's geometry engine and behaving like primitive shaders as needed.
>
> Come on Rasterizer, it was right there. Page 7

Look, here is a slide from the Polaris slide deck:

and here is where primitive discard is discussed in the Polaris whitepaper:

> The Polaris geometry engines use a new filtering algorithm to more efficiently discard primitives. As figure 5 illustrates, it is common that small or very thin triangles do not intersect any pixels on the screen and therefore cannot influence the rendered scene. The new geometry engines will detect such triangles and automatically discard them prior to rasterization

This makes it very clear that Polaris' Primitive Discard Accelerators are inside the geometry engines, ahead of the rasterizers, yes?
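The whitepaper does not describe the actual filtering algorithm, so the following is only a hypothetical sketch of the kind of test it describes, not AMD's hardware logic. It assumes screen-space vertex positions in pixels with pixel sample centers at k + 0.5, and the function names are illustrative. The check is conservative: it discards a triangle only when it is degenerate or when its bounding box contains no pixel center on some axis, so it can never drop a triangle that would have produced a pixel.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]  # screen-space position in pixels (assumed)


def signed_area2(a: Vec2, b: Vec2, c: Vec2) -> float:
    """Twice the signed area of triangle abc (2D cross product)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def axis_has_sample(lo: float, hi: float) -> bool:
    """True if some pixel-center coordinate k + 0.5 lies in [lo, hi]."""
    first_center = math.ceil(lo - 0.5) + 0.5  # smallest k + 0.5 >= lo
    return first_center <= hi


def can_discard(a: Vec2, b: Vec2, c: Vec2) -> bool:
    """Hypothetical conservative pre-rasterization discard test.

    Returns True only when the triangle provably covers no pixel center,
    so discarding it cannot change the rendered image.
    """
    # Degenerate (zero-area) triangles cover no pixels.
    if signed_area2(a, b, c) == 0.0:
        return True
    xs = (a[0], b[0], c[0])
    ys = (a[1], b[1], c[1])
    # The triangle lies inside its bounding box, so if the box holds no
    # pixel center along either axis, the triangle cannot hold one either.
    return not (axis_has_sample(min(xs), max(xs)) and
                axis_has_sample(min(ys), max(ys)))
```

For example, a tiny triangle such as (0.6, 0.6), (0.9, 0.6), (0.6, 0.9) and a long, very thin sliver such as (0.1, 0.0), (10.0, 0.2), (0.1, 0.3) both fall between rows of pixel centers and are discarded, while (0, 0), (4, 0), (0, 4) is kept. A bounding-box test like this misses some thin diagonal triangles that still cover no centers; catching those would need a more precise (and more expensive) edge test.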

Now go back and look at the diagram used for primitive shaders:

This shows primitive shaders sitting earlier in the rendering pipeline than fixed-function PDA culling, and both come before the draw stream binning rasterizers on the Vega block diagram. What you are suggesting would mean that geometry is sent to the CUs for processing and then brought back through the chip to pass through fixed-function PDA culling and the four draw stream binning rasterizers. That doesn't make any sense, and no one would even try such a thing.

Incidentally, the Polaris slide deck also conveniently proves that MSAA performance is a known geometry throughput issue for GCN.
