Araxie

Supreme [H]ardness

- Joined: Feb 11, 2013
- Messages: 6,463

How was the Fury X's Fire Strike score vs. the 980 Ti? Were they also roughly equal?

AMD has always scored higher in 3DMark. The Fury X scored better than a vanilla 980 Ti but way lower than AIB 980 Tis.



I see they've foregone wood screws and gone for some high-end cardboard boxes instead.

OMGGGGG IT'S HAPPENING!!!!

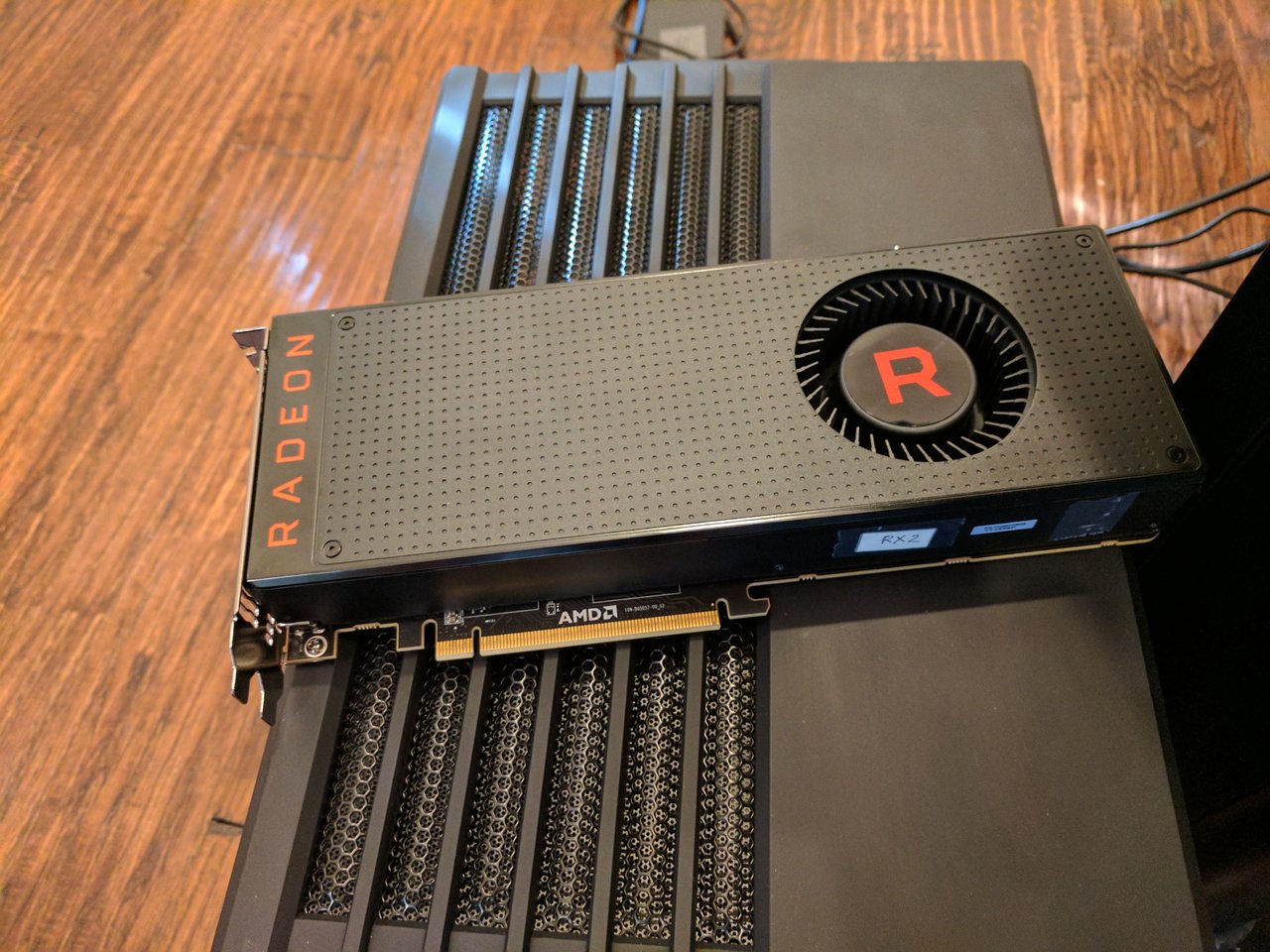


oh wait... =( it isn't Vega, it's Volta...

The Fury X gave the 980 Ti a run for its money and often did better in CrossFire.

Vega only matches a 1080 despite being a year newer. Not a good scenario at all.

Still up in the air whether Vega or X299 is the bigger failure...

Still, I would love to pair a 7900X clocked at 4.8 GHz with tri-fire Vegas. I always wanted to open a dimensional portal to hell.

You need a 7980X at 4.6 GHz (to account for the silicon lottery, you know) and quad-fire Vegas for the portal to hell to open, actually. Of course, with Prime95 and FurMark running at the same time.

Source: am literally devil.


Officially one generation behind.

Does anyone here have a nagging feeling that AMD should have just gone with GDDR5X instead of being delayed by HBM2?

We brought in two teens and one guy in his 20s who is a phenom as well. Wanted to blanket the age range.

Must be old people. It's OK, age comes with experience and wisdom.

Does somebody already have their grubby little fingers on a RX Vega?

Hang on, only 1 CPU?

You need a 7980X at 4.6 GHz (to account for the silicon lottery, you know) and quad-fire Vegas for the portal to hell to open, actually. Of course, with Prime95 and FurMark running at the same time.

Source: am literally devil.

Was really hoping they would keep the aluminum shroud with the R cube in the corner.

How is the build quality on 'em?


If this is a Pepsi Challenge, will we get the video before launch, I wonder?

Earlier you mentioned your sources put the performance between the 1080 Ti and the 1080, but current news and rumors have it at 1080 level. What do you think now?

Reviews @ July 31?

And what's with the RX2 sticker?

I can't tell if he's being serious, taking a jab at AMD's ridiculous marketing stunt of the past week, or both.

AMD hand-delivered this card to my house on Saturday morning and took it with them Saturday evening. That said, this card was an engineering sample, but I was specifically told that it was representative of the retail product. So basically a "reference" card built outside of mass production.

So no review soon? Is the card on loan from AMD just for the blind test?

No, we were packed in VERY tight for what we were doing. Had to narrow it down to one game. :\

Were you able to conduct any VR demos, or was it too time-constrained?

The power of Kyle. Here I have a problem convincing the post office to deliver something at all.

Please let there be numbers.

OMGGGGG IT'S HAPPENING!!!!

THE BIG V, it's HERE!!


oh wait... =( it isn't Vega, it's Volta...

Sorry, there will be ZERO objective data. But that is exactly what this was all about. Should be fun.

The power of Kyle. Here I have a problem convincing the post office to deliver something at all.

Please let there be numbers.

LOL! That is an idea... I like it.

I'm guessing Kyle's blind test is two systems with different monitors but the same hardware. That would be hilarious.

One game: DOOM running under Vulkan, Ultra settings at 3440x1440. We used the preset so no one could claim "funny business."

Can't we at least hope for your "max playable settings" methodology to be present in this comparison?


SP.

Single player or multiplayer?

Call Asus and have them bring a card to you so you can run your own testing methods.

Why Doom? Surely there are better, more taxing games out there that could have been picked?

Because I wanted to.

Why Doom? Surely there are better, more taxing games out there that could have been picked?

Well, using Doom will really give us an idea of how much drivers have changed performance over the last 6 months.

Then this fully subjective preview we pulled off will in no way interest you. Your thoughts are noted.

The problem with Doom is that it already runs so fast even on Maxwell that a blind challenge wouldn't show any difference. Using a more taxing game where fps matters would certainly be more realistic, since these are the best from both companies.

The problem with Doom is that it already runs so fast even on Maxwell that a blind challenge wouldn't show any difference. Using a more taxing game where fps matters would certainly be more realistic, since these are the best from both companies.

No FRAG-HARDER lights on this one.

Knew it. The picture you posted didn't have enough LEDs or look like some sort of stealth military hardware to be ASUS.

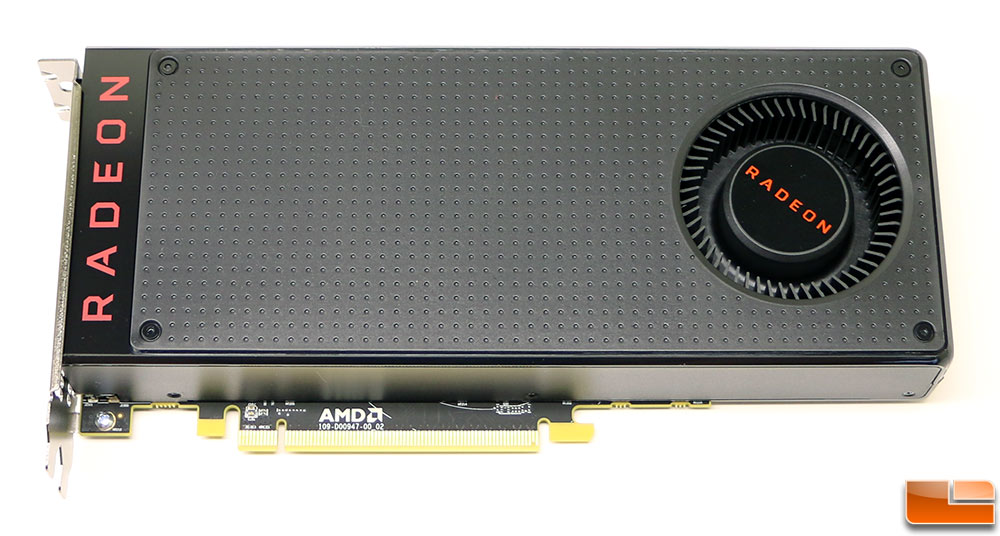

Just messing with you, no one else. And yes, I do know what an RX 480 looks like; I bought plenty of those for testing.

Is this really Radeon RX Vega, or are you just messing with us?

Radeon RX 480 reference
