$200 is a good price, but it's disappointing they don't have anything that competes with at least the 1070. Nvidia's prices suck. If they announced a $300 1070 competitor, I'd probably wait and pick up two.

I'm sure they'll sell a bunch of these.

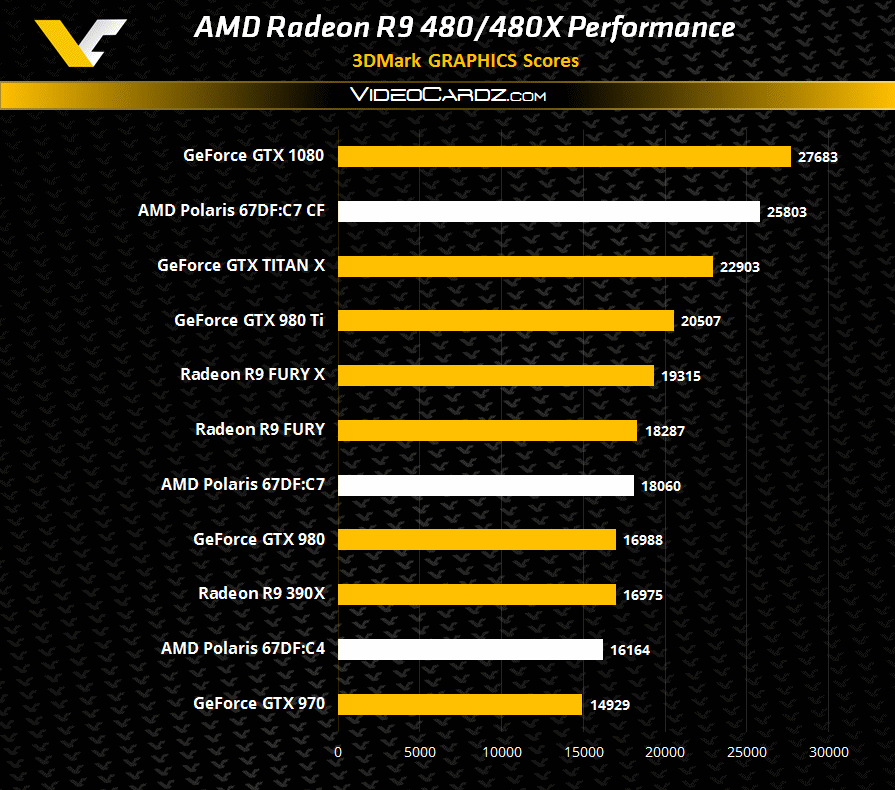

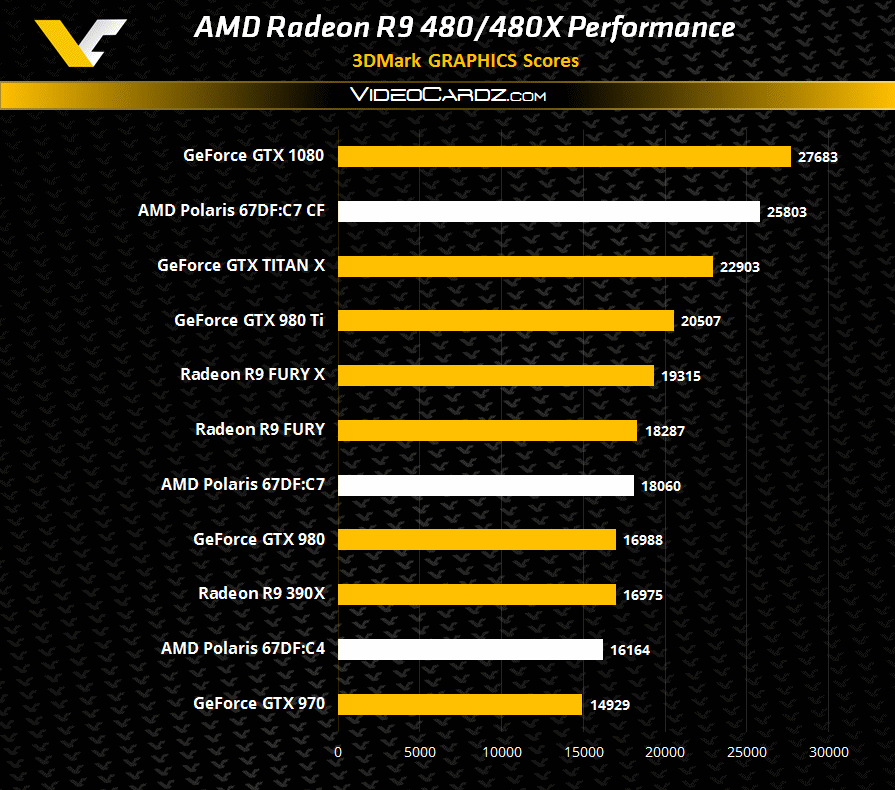

Oh, I don't know if this has already been posted. They were right about the GTX 1080 and GTX 1070, and it matches up with what AMD has said. The top variant (67DF:C7) is supposedly the 480x and the lower (67DF:C4) the 480. Slower than the Fury, which is a little disappointing.
