How can I identify how much input lag a monitor has? I can't find any info when I look at specs. Is it the contrast ratio that measures this?

I am on my knowledge quest regarding monitors. I will be getting a new monitor soon and I am just trying to learn everything I can about them to make a better choice when I buy.

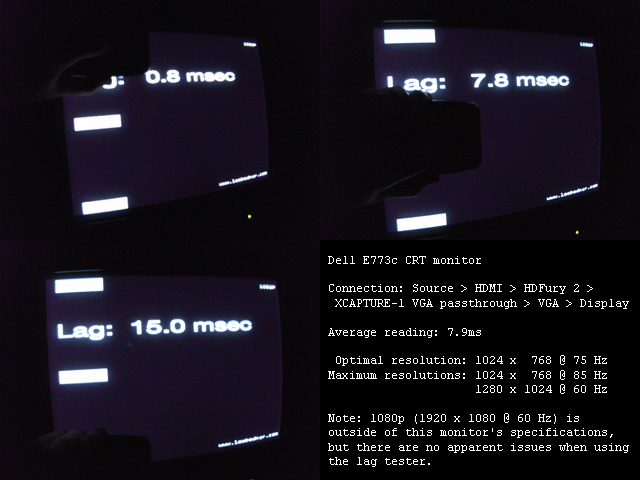

Input lag is becoming something I am very concerned about. I see all this talk about response time, but what I do not see is a lot of talk about input lag, which in theory (in my head) should be more important to me.

I see lots of specs on monitors, but no one seems to mention input lag. So what if you have a monitor with a 1ms response time but very high input lag vs. a monitor with a 5ms response time and very low input lag? Hope you see where I am going with this.

So just generally, where can I find specs regarding input lag when I am looking at monitors? Is it the contrast ratio? If so, what values are good and bad?

What else should I know about input lag?


