Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,857

Hey all,

I just set up SLI for the first time with two 980 Tis.

I haven't started overclocking yet (I want to get some custom cooling going first) but I have experimented with just bumping the power limits, temp limits and fans up to max, and have made some observations.

With a single card (EVGA Superclocked 980 Ti ACX 2.0+) installed, or with two cards installed but running in single-GPU mode, it clocks itself up to 1349 MHz (!?) and stays there for the duration of the game/bench.

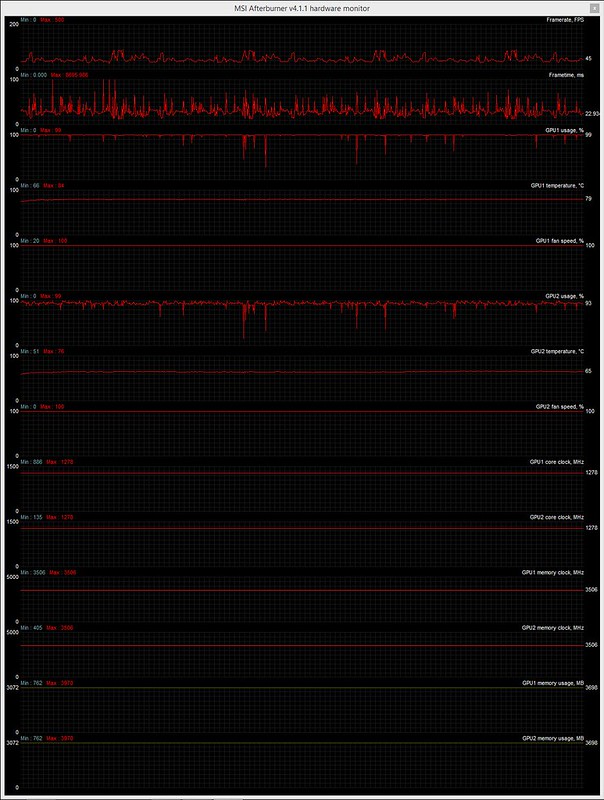

Once both cards are installed and in SLI mode, with all the same settings, they only clock themselves up to 1278 MHz and stay there for the duration of the game/bench.

I would blame heat, as there are now two heat-producing cards next to each other rather than one, but they don't seem to be running any hotter, and my case has pretty good airflow.
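For anyone who wants to compare numbers the same way, here is a rough sketch of how the per-card readings could be logged in both modes. It assumes `nvidia-smi` is on the PATH and that the driver reports the `clocks.sm`, `temperature.gpu` and `power.draw` query fields for these cards (some may come back as not supported). The helper names are made up for illustration.

```python
import csv
import io
import subprocess

# Query fields passed to nvidia-smi; availability can vary by driver and card.
FIELDS = ["index", "clocks.sm", "temperature.gpu", "power.draw"]


def parse_readings(csv_text):
    """Turn nvidia-smi CSV output (no header, no units) into a list of dicts."""
    readings = []
    for row in csv.reader(io.StringIO(csv_text)):
        if not row:
            continue  # skip blank lines
        values = [cell.strip() for cell in row]
        readings.append(dict(zip(FIELDS, values)))
    return readings


def query_gpus():
    """Run nvidia-smi once and return one reading per GPU."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=" + ",".join(FIELDS),
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_readings(result.stdout)


def clock_drop_percent(single_mhz, sli_mhz):
    """Relative drop from the single-GPU clock to the SLI clock, in percent."""
    return 100.0 * (single_mhz - sli_mhz) / single_mhz


if __name__ == "__main__":
    # The two clocks observed above: 1349 MHz single, 1278 MHz in SLI.
    print(f"SLI clock drop: {clock_drop_percent(1349, 1278):.1f}%")
    for gpu in query_gpus():
        print(gpu)
```

Running it a few times during the same bench, once in single-GPU mode and once in SLI, would show whether temperature or power draw differs between the two cases when the clocks settle.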

Is there an automatic protection mode that kicks in under SLI, making it harder to overclock than a single card, or should I just proceed as usual?

Appreciate any thoughts, or links where I can read up on this.

Thanks,

Matt

