HardOCP News

[H] News

The Chinese-language website GamerSky has posted a handful of pics of what they claim is the upcoming GeForce GTX 880. You'll need a translator to read the post, but the pics are universal.

Is that an 8-pin and 2x 6-pin power setup? Is this common on preproduction samples, or is it really going to be that much of a hog?

I'm already building a new system for X99 and Haswell-E this year. And I definitely need a new video card cuz I skipped my regularly scheduled upgrade last year. C'mon nVidia, release this shit already. And I need to know what AMD/ATI has up their sleeves. When all the new 2014 GPUs are out, then I can make my decision of who to go with. Liking the sound of Maxwell so far though. Power efficiency, runs cooler, and I heard the whole series is gonna be cheaper on the whole, though I have no idea how true that is. I really hope so. GPU prices are absolutely insane.

Engineering sample. I would assume 8+6 pin will be the final result.

Oh please, let it coincide with Broadwell and GTA V for PC!

Anybody know what size those memory chips are? Looking like either 4GB or 8GB total.

GTA 5 will run maxed out on a 580, much less an 880.

I find it funny that Nvidia would release the 880M before the desktop 800 series.

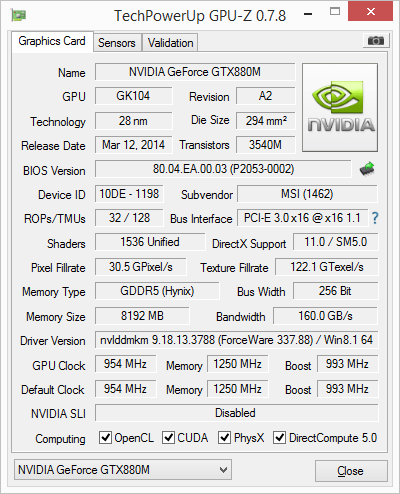

[IMG]http://gpuz.techpowerup.com/14/07/03/hk5.png[/IMG]

It's a rebrand with higher clocks

I want bitcoiners to get off video cards and onto the SoC (system on a chip) hardware I'm seeing all over, such as ASIC miners. That way they can leave the rest of us alone and the market can recover.

It's like how 290Xs are finally coming onto the market because bitcoin miners stopped buying them in anticipation of the next video card gen, and in Canada alone prices have dropped nearly $200 on both 290Xs AND 780 Tis.

GTA 4 was a horrible, unoptimized POS, and I personally don't have faith in Rockstar to do better this time around.

Didn't they already do a GTX880?

Good for you

I skipped last year as well. Bitcoin miners destroyed the pricing on all video cards: it was impossible to get 290Xs, which drove up their price, and because you couldn't buy a 290X, the price on 780 Tis went through the roof, etc.

I don't get why it's an 8-pin + 6-pin + 6-pin. An 8-pin provides 150 watts of power; a 6-pin is 75 watts. So why 150+75+75=300 watts when they could just use two 8-pins, 150+150=300? And don't forget the slot also provides another 75 watts, if I'm correct, so the card is 375 watts if it uses it all.
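For anyone who wants to check the math in the post above, here is a rough sketch that just adds up the nominal connector limits. The wattage figures are the ones quoted in the thread (75 W for the slot, 75 W per 6-pin, 150 W per 8-pin); the names `CONNECTOR_WATTS` and `power_budget` are made up for illustration, and the result is a spec ceiling, not a guess at what this card actually draws.

```python
# Nominal per-connector limits as quoted in the thread.
# These are upper bounds, not measured power draw.
CONNECTOR_WATTS = {"slot": 75, "6pin": 75, "8pin": 150}


def power_budget(connectors, include_slot=True):
    """Sum the nominal wattage of the aux connectors, optionally adding the slot."""
    total = sum(CONNECTOR_WATTS[c] for c in connectors)
    if include_slot:
        total += CONNECTOR_WATTS["slot"]
    return total


# Layout seen in the leaked pics: one 8-pin plus two 6-pins.
pictured = power_budget(["8pin", "6pin", "6pin"])
# Alternative suggested in the post: two 8-pins.
dual_8pin = power_budget(["8pin", "8pin"])

print(pictured, dual_8pin)  # 375 375
```

Both layouts come out to the same ceiling, which is the poster's point: the extra connector doesn't add headroom on paper compared with two 8-pins.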

It's probably just for development purposes.

If you are bitcoin mining on GPUs still, I've got a bridge to sell ya.

Besides, nVidia cards aren't that good for mining (though great for gaming).

Those mining altcoins will be using ATI's offerings, at least until some good ASICs come out.

That said...."GTX 880"....man...one digit shy of nostalgia

I still have my 8800 somewhere.

Oh man. I only retired my 8800 GTX last year because I thought, "Surely it'd get smashed by modern cards now," only to buy as cheap a 670 as I could and find out that it's the same or slower in some applications. Talk about a letdown. At the time I put it in a second backup system to play with Win8, and one day, out of the blue, it shat itself. Really disappointed!

Uh...what? A GTX 670 should have kicked an 8800 GTX's teeth in.