maverick786us

2[H]4U

- Joined

- Aug 24, 2006

- Messages

- 2,118

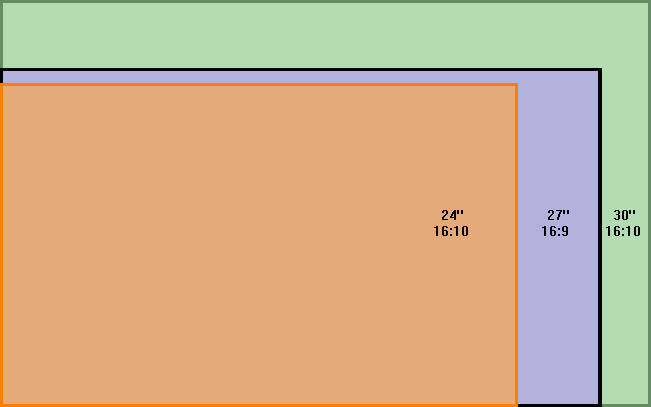

With 4K TVs on the horizon and 4K monitors in the pipeline, can we expect a steep decline in the prices of 30-inch displays? That is something all the hardcore enthusiasts on this forum have been anticipating.
