Michaelius

Supreme [H]ardness

- Joined: Sep 8, 2003
- Messages: 4,684

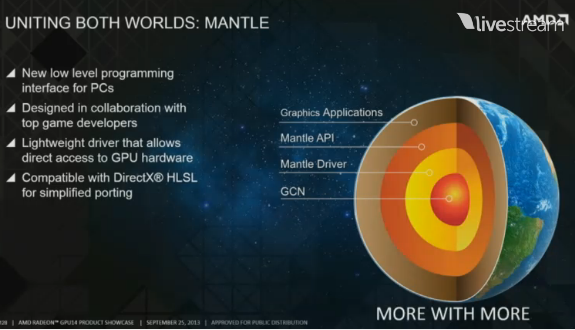

Nvidia won't be competing in games that support Mantle; that's pretty much a given. The advantages of a low-level API are just too great for graphics-intensive games. So the only real question is how many game engines will actually use it. I suspect all the big ones will, and depending on how hard it is to work with (not very, I suspect), even some smaller titles might use it. Supporting another API in a game really isn't that much work.

Are there any benchmarks out already?

For all the talk about low-level APIs, the Xbox One still has a ridiculous number of games not running at 1080p, with a 7770 on board.
