Hello,

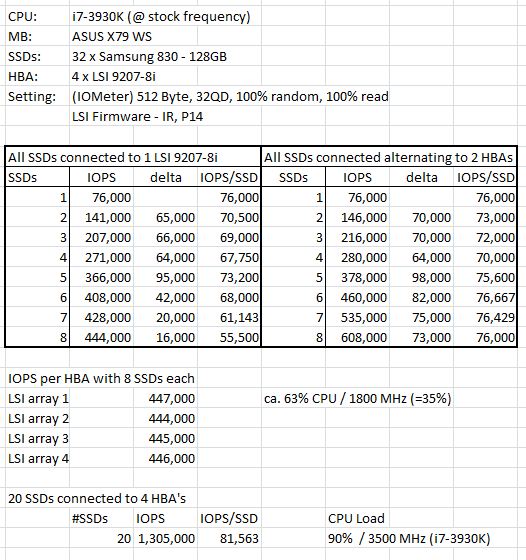

I am currently looking into setting up a decent-size RAID 0 array with 8 to 16 SSDs, most likely Samsung 840 Pro or OCZ Vector drives, on an Adaptec RAID 71605E or a MegaRAID SAS 9286CV-8eCC. I have looked at HBA cards as well, but I would like the option to boot Windows off the array at some stage. From my understanding, no software RAID allows booting, and LSI FastPath is similar to software RAID in that it draws extra performance from your CPU, but without that limitation. What RAID card and SSDs do you recommend? From my understanding, most cards will be able to deliver the MB/s but not the IOPS.

Thank you.
