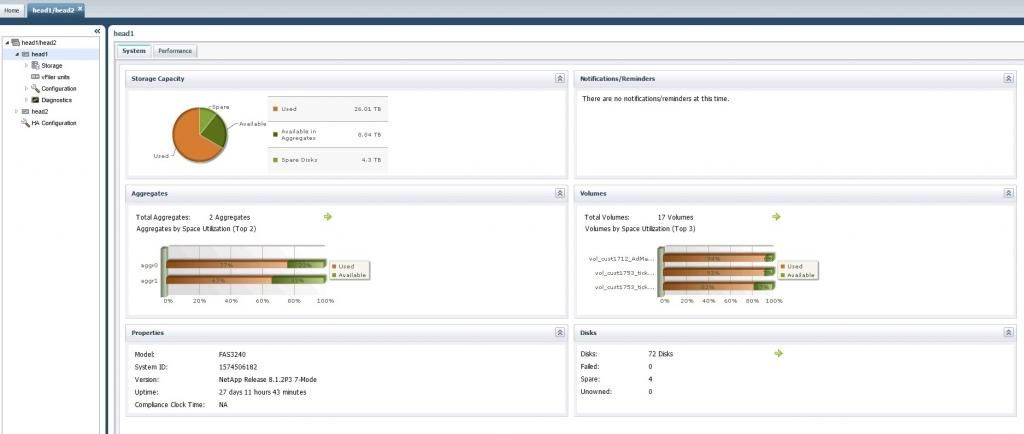

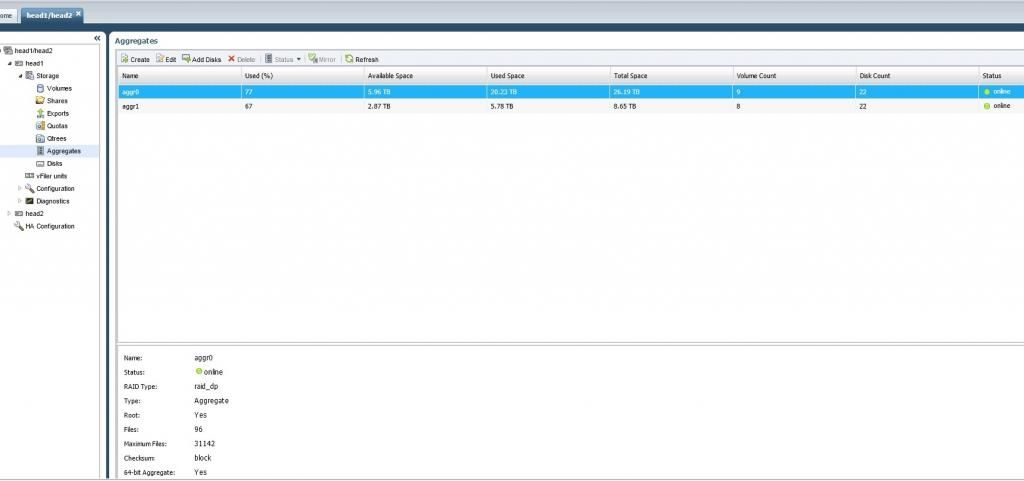

One of our datacenters has two FAS3240 heads and three DS4323 shelves. Head 1 serves a mix of tier 1 and tier 2 storage, while head 2 serves only tier 2. We also have 12 ESXi hosts running about 200 VMs. Of the VMs currently running, I would say roughly 85 are on head 1 and another 85 are on head 2.

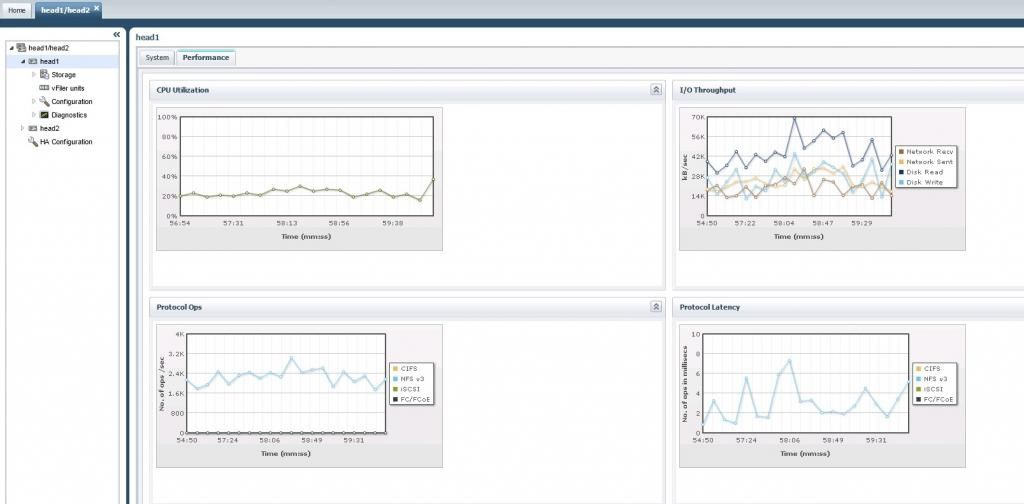

Each head has only three 1 Gb NIC connections set up for production. Performance has always seemed a bit slow, so I ran a simple file-copy test. I logged into a server with a 1 Gb connection to tier 1 storage and copied a 7 GB file to another server, also with a 1 Gb connection to tier 1 storage. The peak speed was 20 MB/s, but the average was lower, about 13 MB/s. The copy took about 8.5 minutes, which works out to just barely faster than 100 Mb/s.
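For reference, here is a quick back-of-the-envelope check of those numbers. It is only a sketch that assumes decimal units (1 GB = 1000 MB) and treats 125 MB/s (1000 Mb/s divided by 8) as the raw 1 GbE line rate; real TCP/SMB/NFS overhead lowers the achievable figure somewhat, so the percentage is approximate.

```python
# Back-of-the-envelope throughput check for the 7 GB / 8.5 minute copy test.
# Assumption: decimal units (1 GB = 1000 MB); raw 1 GbE line rate = 125 MB/s,
# ignoring protocol overhead.

LINE_RATE_1GBE_MBYTES = 1000 / 8  # 125 MB/s raw


def throughput_mbytes_per_s(size_gb: float, minutes: float) -> float:
    """Average transfer rate in MB/s for size_gb gigabytes copied in `minutes` minutes."""
    return size_gb * 1000 / (minutes * 60)


def mbytes_to_mbits(rate_mbytes: float) -> float:
    """Convert MB/s to Mb/s (8 bits per byte)."""
    return rate_mbytes * 8


avg = throughput_mbytes_per_s(7, 8.5)
print(f"average: {avg:.1f} MB/s = {mbytes_to_mbits(avg):.0f} Mb/s")  # average: 13.7 MB/s = 110 Mb/s
print(f"share of 1 GbE line rate: {avg / LINE_RATE_1GBE_MBYTES:.0%}")  # share of 1 GbE line rate: 11%
```

Even the 20 MB/s peak is only about 16% of what a single gigabit link can carry before overhead.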

Isn't that kind of slow for tier 1 storage? Our tier 1 is 15k SAS drives.