Unigine "Heaven" DirectX 11 Benchmark

- Thread starter HardOCP News

- Start date

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

Well, it's not working on my PC. I'm having a driver error with my ATI 5770 card, on Win7 x64.

Engine:init(): clear video settings for "ATI Radeon HD 5700 Series 8.660.6.0"

ALSound::require_extension(): required extension

AL_EXT_LINEAR_DISTANCE is not supported.

I get the same problem running XP 32, exactly the same error message.

I downloaded and installed the latest OpenAL and rebooted, but it makes no difference.

I am running a GTX260 with the 191.07 WHQL video driver.

Very different setups, so it looks like it is hardware and OS agnostic.

It looks great to me.

Why is it these demos always look better than the games we play?

I'd love to play games that had that much detail and ambience.

When the average game looks like this then I think we'll have arrived.

That's the thing about all these DirectX updates. It's going to be a while before we see it really leveraged. If you basically build a DX9 game and throw in a few DX11 effects, it's kind of hokey. I see a lot of that in games these days.

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

I just hope Rage uses DX11. And I hope you are all aware that id Software is now owned by Bethesda, or rather by Bethesda's parent company.

My point is, an Elder Scrolls game using an id engine like the one for Rage :|:| would be slick!

Every idTech engine uses OpenGL as the primary renderer, and D3D hardware rendering has never been an option. The only exceptions are the Doom 3 engine-based Xbox/360 ports.

I hope devs don't think that those roads look good. Other than that it was ok, with amazing shadows.

I was mostly impressed with the lighting.

Am I the only person who kept thinking of Goldeneye 64 when the music first kicked in? (about :26ish in)

It was nice and purty, though I'll hop on the bandwagon that thinks this doesn't seem to have much standout fanciness. Then again DX10 only really wowed me in Crysis with the lighting through trees, and I didn't exactly spend much time playing the game looking up in the air. And as much as I hope this gets optioned for another Elder Scrolls game, chances are they already have something of their own going, considering their development cycles.

Kaldskryke

[H]ard|Gawd

- Joined

- Aug 1, 2004

- Messages

- 1,346

It's not about how bumpy the roads are, it's about how much detail can be added to an otherwise flat surface in real time with dynamic LOD. And yes, the performance hit from tessellation for this demo is large, but that's because it's purposefully overdone. I'm sure that future games using tessellation will include a slider to determine the extent to which surfaces are subdivided. It's exciting that we'll finally have performance-friendly "displacement mapping" that works well with shadows, SSAO, and anisotropic filtering.
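To make the slider idea concrete, here is a minimal sketch of a distance-based subdivision factor scaled by a user quality setting. Everything in it is an assumption made for illustration: the function name `tess_factor`, the `near`/`far` distances, and the linear falloff are not taken from Unigine or from any shipping game. The only outside fact used is that Direct3D 11 caps tessellation factors at 64.

```python
def tess_factor(distance, slider, near=5.0, far=100.0, max_factor=64.0):
    """Return a subdivision factor for a patch `distance` units from the camera.

    slider is a hypothetical user setting in [0, 1]: 0 disables extra
    subdivision, 1 allows the full max_factor up close.
    """
    slider = min(max(slider, 0.0), 1.0)
    # 1.0 at or inside `near`, falling linearly to 0.0 at or beyond `far`.
    t = (far - distance) / (far - near)
    t = min(max(t, 0.0), 1.0)
    # A factor of 1 means "leave the patch unsubdivided".
    return 1.0 + (max_factor - 1.0) * t * slider


for d in (5.0, 52.5, 100.0):
    print(d, tess_factor(d, slider=1.0), tess_factor(d, slider=0.5))
```

Dragging the slider down lowers the factor everywhere at once, which is the kind of knob that would let a slower card trade surface detail for frame rate.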

[Retaliation]

Limp Gawd

- Joined

- Dec 7, 2004

- Messages

- 505

Well, that sure was interesting. My back is still sore from just thinking of walking down that road.

Every idTech engine uses OpenGL as the primary renderer, and D3D hardware rendering has never been an option. The only exceptions are the Doom 3 engine-based Xbox/360 ports.

It's off topic, but 'Rage' is Direct3d, id is dropping OpenGL support (and, thus, Linux).

Oh, damn, and here I go being outdated with my news.

Diablo2K

Supreme [H]ardness

- Joined

- Aug 10, 2000

- Messages

- 6,794

xXxDieselxXx

Gawd

- Joined

- Sep 1, 2004

- Messages

- 763

guitarguy6

[H]ard|Gawd

- Joined

- Oct 29, 2007

- Messages

- 1,948

It looks so damn good! I spent 20min walking around and checking everything out. Was pretty smooth at 1080p with 4X AF on one 5850. I would definitely buy another for xfire if games would use this.

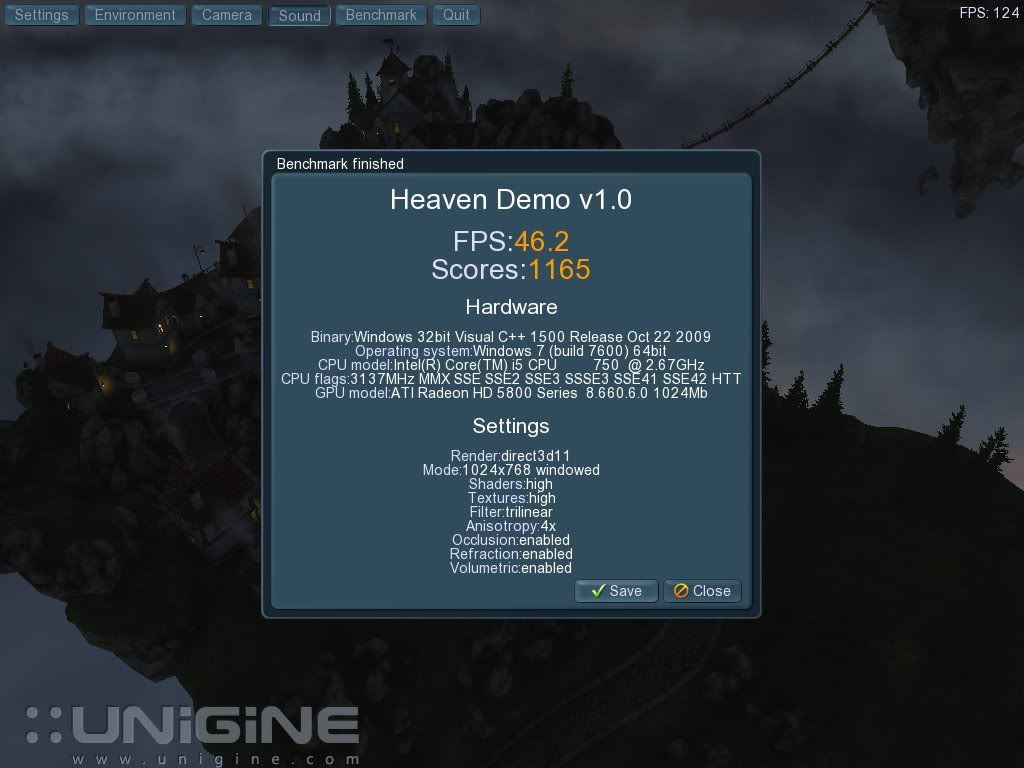

Unigine

Heaven Demo v1.0

FPS: 30.7

Scores: 773

Hardware

Binary: Windows 32bit Visual C++ 1500 Release Oct 22 2009

Operating system: Windows 7 (build 7600) 64bit

CPU model: Intel(R) Core(TM) i7 CPU 920 @ 2.67GHz

CPU flags: 3600MHz MMX SSE SSE2 SSE3 SSSE3 SSE41 SSE42 HTT

GPU model: ATI Radeon HD 5800 Series 8.670.0.0 1024Mb

Settings

Render: direct3d11

Mode: 1920x1080 fullscreen

Shaders: high

Textures: high

Filter: trilinear

Anisotropy: 4x

Occlusion: enabled

Refraction: enabled

Volumetric: enabled
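For anyone collecting these pasted results to compare them, a rough sketch of turning one into a dictionary follows. The function name `parse_heaven_result` is made up for this example, and the parsing rule (split each line at the first colon) is an assumption based only on how the results look in this thread, not on any documented Heaven output format.

```python
def parse_heaven_result(text):
    """Turn 'Key: value' lines into a dict; lines without a colon are skipped."""
    result = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip():
            result[key.strip()] = value.strip()
    return result


# A few lines copied from the result posted above.
sample = """\
Unigine
Heaven Demo v1.0
FPS: 30.7
Scores: 773
Render: direct3d11
Mode: 1920x1080 fullscreen
Anisotropy: 4x
"""

run = parse_heaven_result(sample)
fps = float(run["FPS"])
score = int(run["Scores"])
print(fps, score, run["Mode"])
```

Splitting at the first colon only means it also copes with results pasted without a space after the colon.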

__hollywood|meow

[H]ard|Gawd

- Joined

- Feb 20, 2006

- Messages

- 1,500

I walked around in the demo checking out tessellation on/off.

I was under the impression that the added triangles were generated by hardware only. In this demo it almost looks like they are programmed.

It's hardware accelerated, but to use the feature properly, and to deform a surface to the extent of the dragon, I believe you need to create a custom height map for the textures you're modifying... sort of like relief mapping, but way better because it's efficient and yet more robust. Anyway, it's up to the dev to implement, and in this instance it was done with much thought and effort to generate hype.
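A rough CPU-side sketch of that idea: subdivide a flat patch, then lift each new vertex by a value read from a height map. This is only an illustration of the concept. On DX11 hardware the subdivision is done by the tessellator and the displacement would normally happen in a domain shader; the name `displace_patch`, the nearest-texel lookup, and the tiny 2x2 "texture" are all assumptions made for the example.

```python
def displace_patch(height_map, subdivisions, scale=1.0):
    """Subdivide a flat unit square and lift each vertex by a height sample.

    height_map: list of rows of floats in [0, 1] (a stand-in for a texture).
    subdivisions: number of quads along each edge after subdivision.
    Returns a list of (x, y, z) vertices, with y as the displaced height.
    """
    rows, cols = len(height_map), len(height_map[0])
    vertices = []
    for j in range(subdivisions + 1):
        for i in range(subdivisions + 1):
            u, v = i / subdivisions, j / subdivisions
            # Nearest-texel lookup; real hardware would filter the texture.
            tx = min(int(u * cols), cols - 1)
            ty = min(int(v * rows), rows - 1)
            vertices.append((u, height_map[ty][tx] * scale, v))
    return vertices


bumps = [[0.0, 1.0],
         [1.0, 0.0]]
coarse = displace_patch(bumps, subdivisions=1)
fine = displace_patch(bumps, subdivisions=4)
print(len(coarse), len(fine))
```

The extra triangles come from the subdivision step, but what they look like comes entirely from the height map, which is why the result depends on the artist authoring one.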

snaggletooth

Supreme [H]ardness

- Joined

- Aug 16, 2005

- Messages

- 4,767

All you folks running i7's @ 4GHz and 5800 series graphics cards but for some reason are not enabling at least 4xAA and 16xAF should be ashamed of yourselves.

It's hardware accelerated, but to use the feature properly, and to deform a surface to the extent of the dragon, I believe you need to create a custom height map for the textures you're modifying... sort of like relief mapping, but way better because it's efficient and yet more robust. Anyway, it's up to the dev to implement, and in this instance it was done with much thought and effort to generate hype.

Thanks for the response.

I've gone and done some reading and that seems to be the explanation.

I guess it is impressive, but it sucks that we still have to depend on someone doing their job properly to reap the benefits of the feature.

FPS will prob go up a bit more once ATI gets their driver hax out for it.

I also found it interesting that the clouds below the city are seemingly harder to render than the city itself.

Just looking up and away from the clouds alone is enough to skyrocket my FPS from 60 to 200.

Also the engine doesn't seem to occlude hidden geometry in this benchmark. Blocking off the entire view with a house or something didn't affect FPS.

I'd guess it's purposely more stressful than a real gameplay scenario would be.

Can someone also take an FPS comparison between tessellation on / off? I don't have a DX11 card.

heatlesssun

Extremely [H]

- Joined

- Nov 5, 2005

- Messages

- 44,154

Also the engine doesn't seem to occlude hidden geometry in this benchmark. Blocking off the entire view with a house or something didn't affect FPS.

I'd guess it's purposely more stressful than a real gameplay scenario would be.

Can someone also take an FPS comparison between tessellation on / off? I don't have a DX11 card.

Ask and ye shall receive!

Both tests were run on the sig rig with 3x SLI GTX 280s. Performance for the DX11 version was good but a lot choppier than the DX10 run. Also, this engine seems to love SLI. Did test single card performance but my SLI indicators were at max in both runs. So this engine looks to be multi-GPU friendly. AA was off.

compuguy1088

Limp Gawd

- Joined

- Jan 1, 2008

- Messages

- 317

Well, it's not working on my PC. I'm having a driver error with my ATI 5770 card, on Win7 x64.

Engine:init(): clear video settings for "ATI Radeon HD 5700 Series 8.660.6.0"

ALSound::require_extension(): required extension

AL_EXT_LINEAR_DISTANCE is not supported.

Having the same problem........on a 5850.....

Thanks for the response.

I've gone and done some reading and that seems to be the explanation.

I guess it is impressive, but it sucks that we still have to depend on someone doing their job properly to reap the benefits of the feature.

But that's always been the case.

Where are the 2560x1600 benchmarks?

Mine from rig in sig.

Vsync disabled

Unigine

Heaven Demo v1.0

FPS: 25.4

Scores: 640

Hardware

Binary: Windows 32bit Visual C++ 1500 Release Oct 22 2009

Operating system: Windows 7 (build 7600) 64bit

CPU model: Intel(R) Core(TM)2 Quad CPU Q6600 @ 2.40GHz

CPU flags: 3001MHz MMX SSE SSE2 SSE3 SSSE3 HTT

GPU model: NVIDIA GeForce GTX 280 8.16.11.9107 1024Mb

Settings

Render: direct3d11

Mode: 2560x1600 fullscreen

Shaders: high

Textures: high

Filter: trilinear

Anisotropy: 16x

Occlusion: enabled

Refraction: enabled

Volumetric: enabled

------------------------

Vsync enabled

Unigine

Heaven Demo v1.0

FPS: 21.2

Scores: 533

Hardware

Binary: Windows 32bit Visual C++ 1500 Release Oct 22 2009

Operating system: Windows 7 (build 7600) 64bit

CPU model: Intel(R) Core(TM)2 Quad CPU Q6600 @ 2.40GHz

CPU flags: 3001MHz MMX SSE SSE2 SSE3 SSSE3 HTT

GPU model: NVIDIA GeForce GTX 280 8.16.11.9107 1024Mb

Settings

Render: direct3d11

Mode: 2560x1600 fullscreen

Shaders: high

Textures: high

Filter: trilinear

Anisotropy: 16x

Occlusion: enabled

Refraction: enabled

Volumetric: enabled
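To put a number on the Vsync difference between the two runs above, here is a small sketch that computes the relative change. The helper name `percent_change` is made up for this example; the FPS and score values are copied from the two results.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# FPS and score values copied from the two runs above.
vsync_off = {"fps": 25.4, "score": 640}
vsync_on = {"fps": 21.2, "score": 533}

fps_delta = percent_change(vsync_off["fps"], vsync_on["fps"])
score_delta = percent_change(vsync_off["score"], vsync_on["score"])
print(f"FPS: {fps_delta:.1f}%  score: {score_delta:.1f}%")
```

Both figures come out as a drop of roughly one sixth with Vsync enabled on this rig.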

Anyone else creeped out by the town?

Yeah, I felt the same way--the ambience would be perfect for a survival/horror or mystery game. Gorgeous!

This reminds me far too much of the Myst games.

Intel_Hydralisk

Supreme [H]ardness

- Joined

- Feb 6, 2005

- Messages

- 4,743

Ask and ye shall receive!

Both tests were run on the sig rig with 3x SLI GTX 280s. Performance for the DX11 version was good but a lot choppier than the DX10 run. Also, this engine seems to love SLI. Did test single card performance but my SLI indicators were at max in both runs. So this engine looks to be multi-GPU friendly. AA was off.

Funny how you can run in Dx11 without a Dx11 card.

SeegsElite

[H]ard|Gawd

- Joined

- Oct 6, 2007

- Messages

- 1,105

WHY CANT I EDIT?

1280x1024 resolution^

Can't edit posts in the news section of the forums.

Simply amazing. I am def getting another 5870 when these games start rolling out... but I must wonder: is my CPU the bottleneck here? Where is the line drawn on knowing if your CPU is slowing down your 5870? Which CPU and whatnot, any of ya know?

FPS: 31.7

Scores: 798

Hardware

Binary: Windows 32bit Visual C++ 1500 Release Oct 22 2009

Operating system: Windows 7 (build 7600) 64bit

CPU model: Intel(R) Core(TM)2 Duo CPU E8500 @ 3.16GHz

CPU flags: 3318MHz MMX SSE SSE2 SSE3 SSSE3 SSE41 HTT

GPU model: ATI Radeon HD 5800 Series 8.660.6.0 1024Mb

Settings

Render: direct3d11

Mode: 1920x1080 2xAA fullscreen

Shaders: high

Textures: high

Filter: trilinear

Anisotropy: 8x

Occlusion: enabled

Refraction: enabled

Volumetric: enabled

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

It's worth clocking it a bit to see. I definitely see good gains while gaming with a fast CPU (4GHz+) on a GTX260.

Faster cards require more CPU so you will hopefully get some return.
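One rough way to read the result of that experiment is to compare how much the FPS moved against how much the CPU clock moved. The sketch below is only a rule of thumb, not an established metric: the name `cpu_scaling` and the interpretation of the ratio are assumptions made for this example, and it takes for granted that nothing else (settings, drivers, GPU clocks) changed between the two runs.

```python
def cpu_scaling(fps_low, fps_high, clock_low, clock_high):
    """Fraction of a CPU clock increase that showed up as an FPS increase.

    Near 1.0: FPS rose about as much as the clock did, suggesting the CPU
    is the limit. Near 0.0: the extra clock changed little, suggesting the
    GPU (or something else) is the limit.
    """
    clock_gain = clock_high / clock_low - 1.0
    if clock_gain <= 0:
        raise ValueError("clock_high must be greater than clock_low")
    fps_gain = fps_high / fps_low - 1.0
    return fps_gain / clock_gain
```

To use it, run the benchmark at stock clocks and again with the overclock, then pass in the two FPS figures and the two clock speeds.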

TechLarry

RIP [H] Brother - June 1, 2022

- Joined

- Aug 9, 2005

- Messages

- 30,481

Wow. Major flashbacks to MYST !

Is this a real game in any way?

The Benchmark reports the GTX280 for some reason, I'm rendering through the ATI card. Strange. I think it finds the GTX280 as the first card?

Ha!

That's AMD's secret!

They made a "real" 295 with two Nvidia 280's!

It's industrial espionage I tell you!
