Deadjasper (2[H]4U; joined Oct 28, 2001; 2,584 messages)

Been using Samba but wondering if NFS or some other protocol would be better. Samba is kinda slow.

TIA


NFS is faster and works well *ix to *ix, but not really for Windows.

Deadjasper, maybe a dumb question, but is your network 100 Mbps or 1000 Mbps?
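To put numbers on that question: link speeds are rated in megabits per second, so dividing by 8 gives the best-case rate in megabytes per second. This is a rough sketch that ignores Ethernet, TCP/IP and SMB/NFS overhead, so real copies land somewhat below these figures. The helper name is just for illustration.

```python
def max_throughput_mb_s(link_mbps: float) -> float:
    """Best-case payload rate in MB/s (1 MB = 10**6 bytes) for a link rated in Mbit/s.

    Ignores Ethernet, TCP/IP and file-sharing protocol overhead, so real
    transfers will come in somewhat lower.
    """
    return link_mbps / 8  # 8 bits per byte


if __name__ == "__main__":
    for speed in (100, 1000, 10_000):
        print(f"{speed:>6} Mbps -> at most {max_throughput_mb_s(speed):.1f} MB/s")
```

So a copy that stalls around 11-12 MB/s points to a 100 Mbps link somewhere in the path, while gigabit can typically get past 100 MB/s if the disks on both ends keep up.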


Correct me if I'm wrong, but I think NFS still doesn't have an easy way to authenticate users. The minimum you should do is restrict your NFS shares so they can only be accessed from the IP addresses of the devices you'll be using, and configure your router so those devices never change their addresses (e.g. with DHCP reservations). It's still quite poor security, but if you trust all the devices on your LAN, it's not a big deal. One good way to secure NFS is to put all your trusted devices into a VLAN, but that can be pretty hard to configure as well...
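On a Linux NFS server, that IP restriction lives in `/etc/exports`: each line is the exported path followed by the allowed clients, each with its options in parentheses. The sketch below only builds such a line. The share path, the addresses and the helper name `exports_line` are made up for illustration, and `rw,sync,no_subtree_check` are common options, not the only sensible choice.

```python
import ipaddress


def exports_line(path: str, clients: list[str],
                 options: str = "rw,sync,no_subtree_check") -> str:
    """Build an /etc/exports entry that grants access only to the given client IPs."""
    entries = []
    for client in clients:
        # Validate each address so a typo raises ValueError instead of
        # silently exporting the share to the wrong host.
        ip = ipaddress.ip_address(client)
        entries.append(f"{ip}({options})")
    return f"{path} " + " ".join(entries)


if __name__ == "__main__":
    # Hypothetical share path and client addresses.
    print(exports_line("/srv/share", ["192.168.1.10", "192.168.1.11"]))
```

After adding the line to `/etc/exports` on the server, `exportfs -ra` re-exports everything so the change takes effect. Listing individual hosts rather than a whole subnet (such as `192.168.1.0/24`) is what keeps the other devices on the LAN out.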

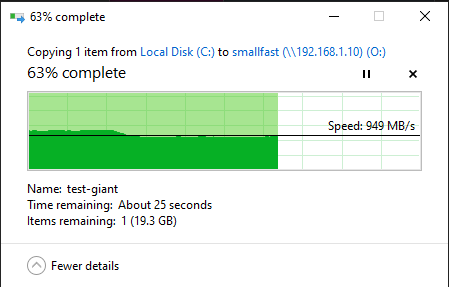

Honestly, SMB should have zero issues with throughput, especially on 1G networks. It keeps up just fine on my 10G:My network is 1G, of course. I looked at NFS years ago and wasn't able to make it work. I was hoping things were different now but it seems they are not. I have manged to figure out how to connect to a Windows share and of course, Linux to Linux is no problem. Guess I'll stick with Samba for now. It's not as fast as Windows but it's better than nothing.

That's hot.

Eulogy, that's cool. Could you please post your setup? What operating systems are on either end of the transfer, what network cards and switch, and what type and length of cabling? Are you using NVMe drives?

Sure.

| NAME | SIZE | ALLOC | FREE | CKPOINT | EXPANDSZ | FRAG | CAP | DEDUP | HEALTH | ALTROOT |
|---|---|---|---|---|---|---|---|---|---|---|
| smallfast | 928G | 295G | 633G | - | - | 0% | 31% | 1.00x | ONLINE | - |
| mirror-0 | 464G | 159G | 305G | - | - | 0% | 34.3% | - | ONLINE | |
| scsi-SATA_CT500MX500SSD1_2252E6968A00 | - | - | - | - | - | - | - | - | ONLINE | |
| scsi-SATA_CT500MX500SSD1_2246E687A7AC | - | - | - | - | - | - | - | - | ONLINE | |
| mirror-1 | 464G | 136G | 328G | - | - | 0% | 29.4% | - | ONLINE | |
| scsi-SATA_CT500MX500SSD1_2246E687B65B | - | - | - | - | - | - | - | - | ONLINE | |
| scsi-SATA_WD_Blue_SA510_2._22431K800256 | - | - | - | - | - | - | - | - | ONLINE | |

Dell R640

2x Intel Xeon Gold 5120s

472GB DDR4 ECC RDIMMs

40Gbps (Mellanox ConnectX-3)

240GB OS SSD (SATA)

Ubuntu 22.04.02

Networking, simplified:

Desktop -> Mikrotik CRS305-1G-4S+ -> 10Gbps OM4 MM Fiber -> Brocade ICX-6610-48P -> 40Gbps QSFP+ -> smallfast server
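The zpool listing above adds up the way a pool of striped mirrors should: each mirror stores only as much as one of its disks, and ZFS spreads data across the top-level vdevs, so the pool's SIZE, ALLOC and FREE are the sums of the two mirrors'. This is a small sanity check using the figures copied from that listing; since `zpool` rounds what it prints, sums from other pools can be off by a unit or so.

```python
# Per-mirror figures as printed by `zpool list -v` for the smallfast pool (G units).
MIRRORS = {
    "mirror-0": {"size": 464, "alloc": 159, "free": 305},
    "mirror-1": {"size": 464, "alloc": 136, "free": 328},
}


def pool_totals(vdevs: dict) -> dict:
    """Sum SIZE/ALLOC/FREE over top-level vdevs, which ZFS stripes data across."""
    return {key: sum(v[key] for v in vdevs.values()) for key in ("size", "alloc", "free")}


if __name__ == "__main__":
    totals = pool_totals(MIRRORS)
    print(totals)  # {'size': 928, 'alloc': 295, 'free': 633}
```

These match the smallfast line (928G / 295G / 633G). About 464G per mirror is also roughly what a nominal 500 GB SSD comes to in binary units (500 × 10^9 bytes ≈ 465.7 GiB), presumably minus a little for partitioning.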

I have almost this exact setup. Only difference is that I went with single mode fiber.

Why SM? Unless you're running extreme distance, doesn't seem worth the extra cost to me.

When I first started getting the cables and optics, I bought SMF, so I have just stuck with that. SMF for 10Gb was not that much more expensive, and it was much less confusing as I started getting into things. I am not yet using the 40Gb QSFP+ on the 6610, but I would go MMF between that and whatever I end up hooking it to (likely a NAS). The primary goal was to get a 10Gb backbone.