That's the beauty of the statement. Nobody can prove it wrong.

Could they have? Possibly (I doubt it, though), if they'd said damn the efficiency and cost. Also, NVIDIA pulling a rabbit out of their ass and figuring out how to get stock power usage below 450 W probably wasn't expected by AMD. But in all honesty, they don't need to compete with the 4090; there's very little money to be made with cards like that.

AMD Intentionally Held Back from Competing with RTX 4090

- Thread starter sk3tch

- Status

- Not open for further replies.

The company execs also explained why an MCM approach to the GPU die may not be feasible yet, unlike what we've seen with CPUs.

I think that could be the biggest story of that presentation. We already know they failed this generation, but if the translation is correct and complete:

As you know, current high-end GPUs contain more than 10,000 arithmetic cores (floating-point arithmetic units), over 1,000 times the number of CPU cores. If you try to interconnect GPU dies in this state, the number of connection points will be enormous, and reliable electrical signal transmission cannot be guaranteed. So, at the moment, connecting GPU dies with the same glue as CPU dies is difficult not only in man-hours but also in terms of cost.

It can be done, but... rather than doing so, it is currently more efficient and less costly to create a large-scale GPU (core).
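To put rough numbers on "the number of connection points will be enormous": the number of wires a die-to-die link needs scales with the bandwidth it has to carry divided by what each wire can carry. The sketch below assumes a plain ceiling-division model that ignores encoding overhead, power, and bump pitch; `lanes_needed` is a hypothetical helper, and the bandwidth figures are placeholders chosen to show the scaling, not AMD or Nvidia specs.

```python
import math


def lanes_needed(target_gbps: float, per_lane_gbps: float) -> int:
    """Signal lanes required to carry target_gbps when each lane runs at per_lane_gbps.

    Plain ceiling division: ignores encoding overhead, power, and bump pitch.
    """
    if per_lane_gbps <= 0:
        raise ValueError("per_lane_gbps must be positive")
    return math.ceil(target_gbps / per_lane_gbps)


# Placeholder figures in Gb/s, chosen only to show the scaling; not real product specs.
cpu_style_link = lanes_needed(400, 16)      # modest traffic between CPU chiplets
gpu_style_link = lanes_needed(40_000, 16)   # GPU-scale traffic between shader dies
print(cpu_style_link, gpu_style_link)       # 25 2500
```

With the per-lane rate held fixed, a hundredfold jump in required bandwidth means a hundredfold jump in connection points, which is the scaling problem the translated quote describes.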

It doesn't sound like they will promise at this point that the 8xxx series will pull it off. GPU cores are so small, and each does so little of the complete work because the workload is so parallelized, that the cost of putting them on different physical dies and syncing all of that data back can quickly end up outweighing the benefit of better yield.
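The trade-off described above (smaller dies yield better, but every die-to-die interface costs something) can be sketched with the textbook Poisson yield model, Y = exp(-A * D0), where A is die area and D0 is defect density. This is a minimal sketch under stated assumptions: dies are tested before packaging so a defect scraps only one die, each chiplet pays a fixed, hypothetical area overhead for its interconnect, and packaging yield and cost are ignored. The function names, areas, and defect density are all invented for illustration.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Textbook Poisson die-yield model: Y = exp(-A * D0)."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


def wafer_area_per_good_gpu(logic_mm2: float, defects_per_mm2: float,
                            n_dies: int = 1, link_overhead_mm2: float = 0.0) -> float:
    """Wafer area (mm^2) consumed per working GPU.

    Assumes each die is tested before packaging, so a defect only scraps that
    one die, and that every die in a multi-die design spends link_overhead_mm2
    on its die-to-die interface. Packaging yield and cost are ignored.
    """
    overhead = link_overhead_mm2 if n_dies > 1 else 0.0
    die_area = logic_mm2 / n_dies + overhead
    return n_dies * die_area / poisson_yield(die_area, defects_per_mm2)


# Hypothetical inputs: 600 mm^2 of GPU logic, 0.1 defects per cm^2 (0.001 per mm^2).
D0 = 0.001
mono = wafer_area_per_good_gpu(600, D0)
two_cheap_links = wafer_area_per_good_gpu(600, D0, n_dies=2, link_overhead_mm2=30)
two_costly_links = wafer_area_per_good_gpu(600, D0, n_dies=2, link_overhead_mm2=150)
print(f"monolithic: {mono:.0f} mm^2, 2 dies + small links: {two_cheap_links:.0f} mm^2, "
      f"2 dies + big links: {two_costly_links:.0f} mm^2")
```

With these made-up numbers, the split with a small interface overhead consumes less wafer per good GPU than the monolithic die, while the split with a large overhead consumes more; that is the "costing too much for the benefit of better yield" case.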

Obviously, this is 100% reading into the tone of text rather than video or audio, and text that came through Google Translate as well.

There will always be a market for people who want the best regardless of the price because they have the cash. That is a small crowd, and they will not willingly settle for second best.

That’s how you know this is BS: the 7900 XT didn’t launch at $699, it’s dropping from its $899 launch price because no one wants to buy it, so AMD has apparently decided to retcon reality by claiming this was the strategy all along. Give me a break.

AMD is a publicly traded company. They’re watching Nvidia sell a bunch of $1600 cards to gamers who apparently have way too much money to burn. If AMD could play in that market, they would. They could have even launched a competitor at $1300 and still made insane margins siphoning those buyers away from Nvidia.

They want 4K max settings with ray tracing and all that jazz; they’re the same group that was dropping the “big” numbers on SLI rigs. Same demographic.

When selling there, AMD has a few problems: their ray tracing isn’t as good, they often don’t have driver optimizations for a week or three, and FSR is at best as good as DLSS but never better. So AMD is left fighting for customers, and AMD doesn’t like fighting, because when you do, you have to risk margins, and AMD is all about them margins.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,054

Most likely the reason NVIDIA is sticking with monolithic for Lovelace's successor.

There are people in this market who will pay 30% more for a 5% uplift in performance, as you said, just because it's "the best". I'm not part of that demographic, because I think that's insane, but that doesn't mean it's not a market that can easily be served. AMD is well aware of this, and it's clear from their statement that they're trying to find a way to say they haven't "really" fallen behind, when it's clear they have.
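The "30% more for a 5% uplift" trade-off can be made concrete as performance per dollar relative to the cheaper card. A minimal sketch; the 30% and 5% come from the comment above, and `perf_per_dollar_ratio` is a hypothetical helper name.

```python
def perf_per_dollar_ratio(price_premium: float, perf_uplift: float) -> float:
    """Performance per dollar of the pricier card relative to the cheaper one.

    Both arguments are fractions, e.g. 0.30 for a 30% higher price.
    A result below 1.0 means the pricier card delivers less performance per dollar.
    """
    return (1 + perf_uplift) / (1 + price_premium)


ratio = perf_per_dollar_ratio(price_premium=0.30, perf_uplift=0.05)
print(f"{ratio:.3f}")  # 1.05 / 1.30 -> 0.808
```

In other words, the buyer in that example accepts roughly 19% less performance per dollar to own "the best".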

And you know what, it doesn't even really matter if they have; that's the funny part. Considering Nvidia launched the 4080 at $1200, I would have been more than happy to buy something like the 7900XT or 7900XTX for the right price, since either is fine for my use case. Even at their launch prices, the $200-$300 USD price differential would leave you enough to buy a decent FreeSync monitor for good measure. The thing is, I'm not willing to spend this kind of cash on either option (and I live in Canada, the land of crazy, where price declines still haven't happened), because $1000 simply isn't a "reasonable target for high-end gaming" in my opinion when it was $800 last generation with the 3080. Combine that with the fact that most people want to buy $300-$500 GPUs, and very few people ACTUALLY care about this segment, or whether AMD is able to deliver there. I'll take the modern equivalent of 5700XT performance for $399 again all day.

Lmao, why is everything price fixing?

- The prices of their GPUs are arbitrary. They could have made whatever they wanted at the $1k price point. They could also have priced it however they wanted.

- If AMD is holding back, then I'd be more worried about price fixing with Nvidia, since according to AMD they just handed Nvidia the win and the sales.

- As Nvidia has demonstrated, the best GPU sells the most in the current overpriced market. Anything less won't sell as much. So why hold back?

- Nobody cares about their $900 GPUs, as evidenced by the 7900 XT's price dropping to $800. The XTX still remains at $1k.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,792

Because people get salty about GPU prices without wanting to acknowledge that consumers are partially at fault for it.

Autistic consumers will base buying $300 GPUs on who has the best $1500 GPU.

True. The RTX 3050 massively outsold the much more powerful RX 6650 XT, which was selling for the same price.

It's just a stupid argument for literally everything lately.

They make it sound like it is, quite a bit. It could be a bad translation, obviously, but it makes it sound like AMD would have priced it the same as Nvidia just because of how Nvidia priced theirs.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,792

Everything has to be the supply side's fault. It just has to be *checks list* Price Fixing now! The term isn't even being used correctly. Choosing not to compete is not price fixing. Not releasing a product is not price fixing.

Geez, King of the Hill got it more right.

*Edit* Pricing your product similarly to a competing product IS NOT price fixing.

sharknice

2[H]4U

- Joined

- Nov 12, 2012

- Messages

- 3,752

No one can "prove it", but it's pretty clear to me that if they could have, they would have, because the marketing boost alone would make it worth it.

If AMD made the fastest card people would regard AMD as the best and say things like "AMD makes the best cards". Right now people say "NVIDIA makes the best cards".

People say this even when they're not buying "the best" cards. They're buying budget cards but this is still what people talk about and influences what low end card they're buying.

There are of course people that do research and buy whatever is actually the best for them, but a large portion of people don't do that and it's very valuable to be the brand with "the best".

Right now, buying an AMD card is like buying off-brand cereal to a lot of people.

4saken

[H]F Junkie

- Joined

- Sep 14, 2004

- Messages

- 13,165

I'm an NVIDIA homer now, price gouging, etc., etc. aside. I loved the 5870, 9700, and 9800 Pro. My vid card died in my puter in 2008, the day my daughter was born, and one of my memories is my wife telling me to go pick up lunch and get a replacement card at Best Buy, which happened to be the 9800 Pro at the time. ATI/AMD used to be able to do it, and I would buy the better card. But as a much older adult now with more funds to play with, yeah, I'll pay the premium for the better cards, and that just happens to be NVIDIA currently. Just can't buy the story.

III_Slyflyer_III

[H]ard|Gawd

- Joined

- Sep 17, 2019

- Messages

- 1,250

Best part of this article is the fact that my 4090 doesn't even pull more than 500W at full load... lol. Almost happy they over-engineered the cooling; this thing never even passes 52C while gaming with a full overclock at max power and voltage.

Mindshare. It's all about mindshare.

If you have the "best" product in a segment, everyone regards you as the segment leader. As long as AMD does not have the fastest product, they will be regarded as second place. When AMD can claim they have the fastest product UNCONTESTED, they will pull some mindshare away from Nvidia... if they keep doing it. AMD's problem is that Nvidia has had an extremely reliable track record of producing the fastest GPU each generation for years now. That is devastating for mindshare. Nvidia can sell a GPU for $1600+ because they are the uncontested leader of the market. AMD has to compete on value. Nvidia does not.

The other thing AMD needs to do is start researching market-disrupting tech. Recently, it feels like Nvidia creates something, and AMD then releases their own version a generation or 2 later. Nvidia is the market leader because they sink billions of dollars into bleeding-edge R&D each year. AMD just seems to copy them. That's not good for this industry, since Nvidia seems to control all major technological advancements. We need to go back to the time when AMD released the HD 5870, which was able to drive 3 displays natively, and there was a second version that was able to drive 6 displays. At the time, Nvidia was able to do 2, and if you wanted to do 3, you had to get a second GPU for SLI. Nvidia was caught with their pants down, and it took them a couple of years to release a competing tech.

AMD needs to start researching disruptive tech. If they can't catch Nvidia with their pants down again, they will never catch them.

It's like saying Mazda is price fixing because they don't offer a car with a V8. These people are clueless.

sirmonkey1985

[H]ard|DCer of the Month - July 2010

- Joined

- Sep 13, 2008

- Messages

- 22,414

It's the easy answer for people who don't understand basic marketing and pricing against your competition. Nvidia left the door wide open for them to price the way they did. If the 4080 were 900 dollars instead of 1200 dollars, they would have priced accordingly, but since Nvidia didn't, AMD's not going to either. AMD's made a name for themselves over the last 4 years, and enough money along with it, that they no longer have to play the price-undercut game.

True, and they're also willing to sink billions to flood the marketing side any time they feel threatened. They did it with the 5k and 6k series when AMD was quite competitive. As far as AMD copying them, I wouldn't say they are per se; Nvidia just has the budget and manpower to release things quicker than AMD can. AMD has typically been ahead and willing to take risks on the hardware side; it was just a matter of getting Microsoft to actually do anything so they could use it. E.g., GCN had supported ACEs since day one, yet they never gained support until Vega. They took a risk on supporting GDDR4, which turned out to be a mistake since GDDR5 ended up going into production sooner than expected. They forced Microsoft's hand and made them release DX12 when they decided to bring closer-to-metal support to the desktop, which led to Vulkan, which has led to gaming actually being an option on Linux for the masses. What exactly has Nvidia done other than tack on more proprietary crap to justify their insanely high prices? (Not that AMD wouldn't be doing the same thing if they could.) So no, I wouldn't say all they do is copy Nvidia; they just don't have the ability to spend money like Nvidia can.

To be more exact, it's not necessarily price fixing.

Releasing very similar products at very similar times at very similar prices can be price fixing, or it can be the natural result of purely fighting for the most customers and profit. When there are only 2 competitors, prices ending up largely fixed is much more likely to happen than when you are Mazda.

And sometimes what looks a lot like price fixing (Sony and Microsoft in the console world, where they seem to have decided to battle each other on things other than console price and specs) is not necessarily hurting consumers at all.

The same goes for gas prices being almost the same across a region and changing at almost the same time: it doesn't mean any price fixing is going on, but it obviously doesn't mean it isn't partly the case either.

And it could all be PR BS, obviously, but the talk sounded a bit like they knew the competitor's product through some "espionage" and knowingly aligned their own.

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,922

I agree with this statement, see ya in 2027 when the 10900 XTX releases...

AMD would have added a lot more X's by then. Like XTX².

AMD waited to see what Nvidia would launch and priced just behind it. Meanwhile, AMD appeals to almost nobody, since the RTX 4090, which does sell, only does so because it's the best. The rest of the market is focused on $200-$300 GPUs, where neither AMD nor Nvidia has any current-gen products. If AMD could, they would, but they're always behind Nvidia.

For one, they've done it in the past. Secondly, if AMD wanted market share like they should, they wouldn't be selling graphics cards for $900 and $1000. They'd be doing what Intel is doing and pricing them where mainstream gamers would pay for a graphics card. Intel knows they have a bad product, but gave it a good price. AMD pretends they have a good product, and gives it a really bad price. Nobody is buying a 7900 XTX and thinking they're getting 800% value. So either AMD is living in their own little world and unfortunately has to share it with Nvidia, or something's up. A 7900 XTX would sell more at $800 and would be a serious consideration over the RTX 4070, let alone the 4080. Right now you can't compare the 7900 XTX to the RTX 4090. It's a distant second place, which might as well be last place for the consumers they're hoping to attract. It's either price fixing or stupidity.

TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

It's worth noting that AMD is already doing MCM for CDNA2, the non-consumer-grade graphics hardware (Radeon Instinct). But making it affordable enough for consumer-grade cards is likely still an issue. I bet we will see CDNA3 MCM for enterprise and research-grade hardware, though.

HBM memory helps, but isn't enterprise and research-grade hardware mostly for workloads that already get good results running on different cards, sometimes even on different machines, because you don't need to combine all the results and make them talk to each other 60 times per second?

A bit like how SLI continued to work well enough there, even though stutter and other issues in games made it go away, with temporal and whole-screen effects making it harder and harder to split a game's rendering workload.
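Why temporal effects hurt multi-GPU game rendering can be shown with a toy model of alternate-frame rendering (AFR), the classic SLI/CrossFire mode in which GPUs take turns rendering whole frames. This sketch assumes that, with a temporal effect such as TAA enabled, each frame depends only on the previous frame's output; the function name is hypothetical, and real drivers handle far more than this.

```python
def afr_cross_gpu_transfers(num_frames: int, num_gpus: int, temporal: bool) -> int:
    """Count frames that need data from another GPU under alternate-frame rendering.

    Frame f is rendered on GPU f % num_gpus. Without temporal effects every frame
    is independent. With one, frame f also reads frame f - 1's output, and under
    round-robin AFR that previous frame lives on a different GPU whenever num_gpus > 1.
    """
    if not temporal:
        return 0  # independent frames: nothing has to move between GPUs
    transfers = 0
    for f in range(1, num_frames):
        if f % num_gpus != (f - 1) % num_gpus:
            transfers += 1  # previous frame's output must be copied across
    return transfers


print(afr_cross_gpu_transfers(60, 2, temporal=False), afr_cross_gpu_transfers(60, 2, temporal=True))
```

Without the temporal dependency, two GPUs never need to exchange frame data; with it, nearly every frame waits on a copy from the other GPU, which is the kind of serialization that shows up as stutter.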

A GPU chiplet design, if it is ever pulled off, could be exactly this. I'm obviously limited in thinking of anything else, but nothing else comes to mind.

Bankie

2[H]4U

- Joined

- Jul 27, 2004

- Messages

- 2,467

Nvidia has been researching chiplets for years now as well. If it were the right time, we'd already have those cards in hand. IMO AMD blew their load too early.

GoodBoy

2[H]4U

- Joined

- Nov 29, 2004

- Messages

- 2,751

Mmmhmm...

https://www.notebookcheck.net/AMD-i...spend-savings-on-other-PC-parts.700365.0.html

"AMD could have made an RTX 4090 competitor but chose not to in favor of a trade-off between price and competitive performance. AMD's rationale is that a 600 W card with a US$1,600 price tag is not a mainstream option for PC gamers and that the company always strived to price its flagship GPUs in the US$999 bracket. The company execs also explained why an MCM approach to the GPU die may not be feasible yet unlike what we've seen with CPUs."

They could have - but they didn't wanna.

PR Person doing their job.

If they could have, they would have.

Sounds like they are blaming it on the MCM (chiplet) approach, which could very well be true. But CPUs are using MCM designs and run at higher clocks... If MCM were to have any issues once they got the interposer figured out, I thought it would have been the varying clock speeds of each chiplet, and getting them all to talk properly and efficiently (total WAG). If accurate, the GPU should be easier to make into a chiplet design, since GPUs run at about half the clock speed of typical CPUs.

WAG: Wild Ass Guess

Last edited:

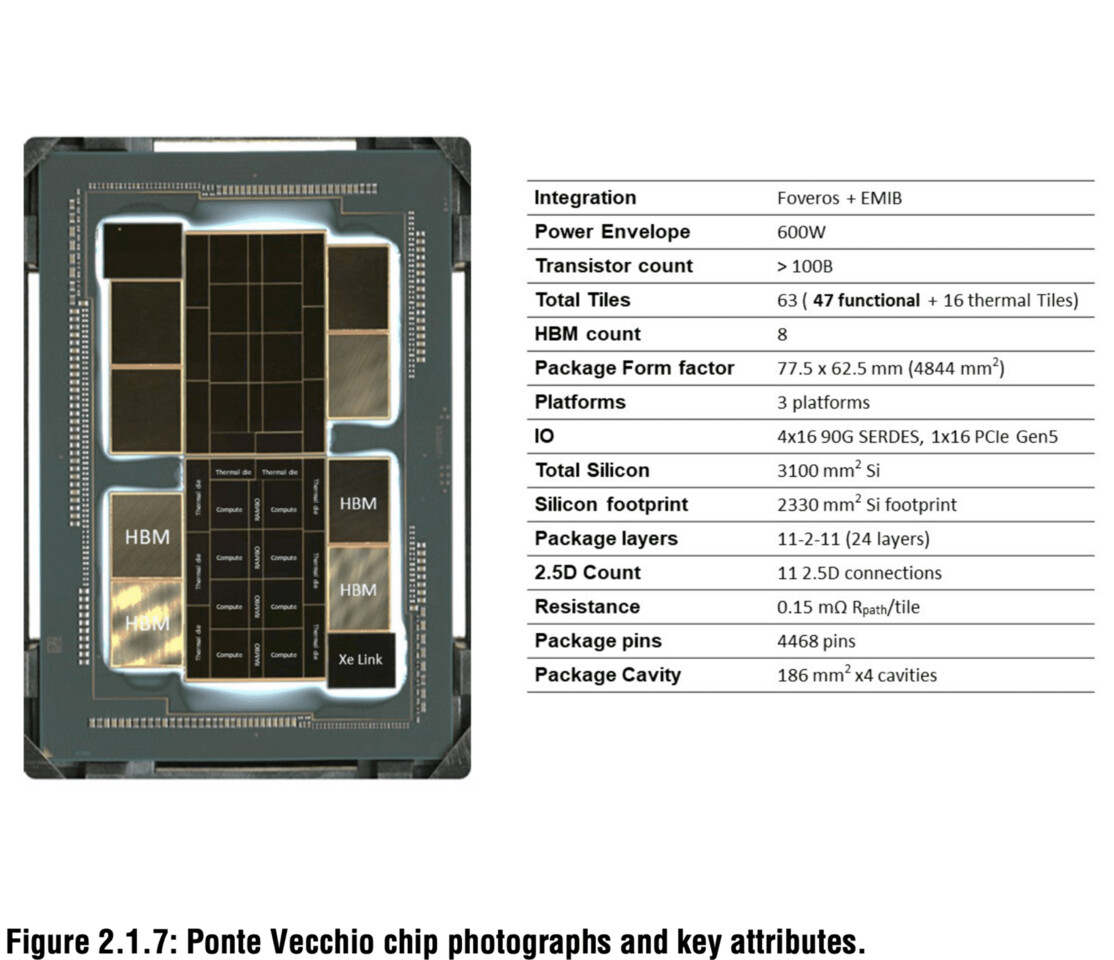

This is where Nvidia’s interest in using Intel Foundry Services comes in: let TSMC make the cores, but have Intel package them.

Intel Foveros has come a long way and on paper works better than TSMC's packaging; TSMC just makes a better chip than Intel does. Ponte Vecchio displays that very well: it has 63 tiles/chiplets that make up its GPU, and it supposedly performs better than expected for what it was meant to do.

EmerilLIVE

Weaksauce

- Joined

- Jan 31, 2008

- Messages

- 111

Capturing the performance crown would be a huge boon to their perception and would increase sales of their lower SKU's. They know this and they didn't do it, so either they can't or they don't want to capture more market share.

It's not so much that they don't as that they can't. AMD sells 100% of what they make, so at this stage any market share gains will need to come directly at the expense of another, more lucrative product segment. AMD makes a killing on custom silicon sales; they can’t and won’t jeopardize that uncontested cash flow to fight for a larger share of a shrinking market.

Sony, Microsoft, Tesla: they all pay more for custom hardware, with far better margins than any of us would be willing to pay for that level of GPU/CPU, so why cut their numbers and risk losing them, or a lawsuit, to gain 3-5% market share of a low-volume, low-margin consumer space?

Every year AMD further positions itself as a boutique silicon designer, they are all about the custom integrated hardware, we are just the side hustle.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

Eh, that's a nice way of saying it. A more cynical view would see gamers as paying beta testers so that they can develop data center hardware like Instinct cards. Well, and also for effectively the custom silicon you bring up. The tech still has to get developed one way or another for them to even be able to semi-custom package it to begin with.

Not beta testers exactly; AMD just doesn't bother spending the money on us to verify their shit works before we get it. A beta test would indicate they have a plan to make it better and a list of things they want to improve on. We get what we get because their investors don't see the value in dedicating resources to getting it right the first time.

TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

The 7900 is already using a chiplet design. The only thing left to do is a consumer-grade MCM video card like the Instinct series.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,580

They could have. 7900 XTX cards from board partners, which have increased power limits, can often overclock really well and more or less match the 4090 in raster.

Which means AMD could have binned chips and released a bigger card.
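"Binning" here means sorting tested dies by how well they perform and reserving the best ones for a higher SKU. A minimal sketch of the idea; the `TestedDie` fields, SKU names, and clock/power thresholds are all invented for illustration and are not AMD's actual criteria.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class TestedDie:
    die_id: int
    max_stable_mhz: int    # highest clock the die passed validation at (hypothetical field)
    power_w_at_max: float  # board power measured at that clock (hypothetical field)


def bin_die(die: TestedDie) -> str:
    """Assign a die to a hypothetical SKU using invented thresholds."""
    if die.max_stable_mhz >= 2800 and die.power_w_at_max <= 400:
        return "halo"      # best dies: candidates for a higher-clocked, bigger card
    if die.max_stable_mhz >= 2500:
        return "flagship"  # good dies: the regular top SKU
    return "cut-down"      # everything else goes to a lower SKU


dies = [TestedDie(1, 2900, 380.0), TestedDie(2, 2850, 430.0),
        TestedDie(3, 2600, 350.0), TestedDie(4, 2300, 300.0)]
counts = Counter(bin_die(d) for d in dies)
print(counts)
```

The catch the comment hints at is volume: the stricter the halo thresholds, the fewer dies qualify, so a binned halo card would likely be a small-run product.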

I think the likely reason they did not do that is that their architecture is not yet where they want it to be. They did make a pretty big improvement in ray tracing, but they are still behind in overall RT performance, and certain RT effects still absolutely tank their performance.

They are also still working on other features, such as FSR with machine learning, frame rate boosting like DLSS 3, etc. But it's very clear that their teams working on this stuff aren't comparatively large. The 7900 cards released, and their driver team apparently doesn't have the size/manpower to make drivers for the new cards AND their previous cards. Not a good look. And that might mean that the teams working on the other features are also relatively small. They need to grow in these areas if they want to get serious.

Once they get their architecture and features more fleshed out, and increase the number of people working on this stuff, I think we will see AMD make whatever GPU products they can. That's what they do on the CPU side, because their CPU side is mature and very well featured. GPU still isn't quite there yet. They aren't exactly matching Nvidia, let alone beating them.

TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

My guess as to why a consumer-grade MCM hasn't been done is that the logic for the 2 GPU cores has to be entirely on-die and be perfect. In enterprise setups, it's not a big deal for the software to be the interaction layer for multiple GPUs, since a lot of research and server software is already coded to address multi-GPU setups. Games, however, are not, and game developers will not have the time to code it. (SLI/CrossFire died with DX12 and Vulkan because the new APIs moved responsibility for multi-GPU from the driver side to the application side.)

So figuring out how to make a multicore GPU MCM design work in a way that appears to, and operates for, the OS/software as a monolithic GPU... is probably pretty challenging.

You are not wrong. Nvidia has a series of papers on the topic going as far back as 2017 (that I can find). What they have always run into, until very recently, is that the scheduler required to move that amount of data at those speeds costs more than the GPU itself; the only space where it was financially viable was very small GPUs, what we would consider budget or entry-level. Apple has successfully implemented it at a prosumer level with the M1 Ultra, which very nicely joins the GPUs of the two M1 Max dies that make up the Ultra, but that highlights its weaknesses as much as its benefits, since those are individually weak (by our standards) GPUs.

Enterprise can afford not to worry about the needed schedulers: the software they run is inherently multi-GPU friendly, NVLink exists for that exact reason, and CUDA as a platform excels here. The consumer space doesn't have that luxury. Nobody here would want to spend $2000 on the latest and greatest MCM GPU from either team, with specs 3x the best of the previous generation, only to watch one core ramp to 100% while the other bounces between 4 and 5%, and game performance averages maybe 25% better with frame stuttering that makes it unplayable. So there is a lot that needs to be lined up to automate the process for us, and that is expensive; the challenge is coordinating the gaming industry to adopt it and patch for it in advance so it works with existing titles at launch.

EmerilLIVE

Weaksauce

- Joined

- Jan 31, 2008

- Messages

- 111

Sony & Microsoft both license the designs from AMD and source production themselves. AMD's margin per unit on Radeon graphics cards is significantly higher than what they make per console, but consoles are a comparatively high-volume product. Just look at Nvidia's massive margins and do the math on the margin from dGPU sales. A major difference is cash flow: Sony & Microsoft pay AMD a significant amount up front for designing their SoCs, whereas with Radeon GPUs AMD pays for R&D entirely themselves and must recoup that cost from selling products. That said, the SoCs they design for Sony & Microsoft are simply derived from their existing product stack, so it is hardly a 1-to-1 comparison. AMD could produce more Radeon GPUs, but they would need to source increased manufacturing capacity, including fab capacity from TSMC.

It's not so much that they don't as that they can't. AMD sells 100% of what they make, so at this stage any market share gains will need to come directly at the expense of another, more lucrative product segment. AMD makes a killing on custom silicon sales; they can't and won't jeopardize that uncontested cash flow to fight for a larger share of a shrinking market.

Sony, Microsoft, Tesla: they all pay more for custom hardware, with far better margins, than any of us would be willing to pay for that level of GPU/CPU. So why cut their numbers and risk losing them, or a lawsuit, to gain 3-5% market share of a low-volume, low-margin consumer space?

Every year AMD further positions itself as a boutique silicon designer. They are all about the custom integrated hardware; we are just the side hustle.

You clearly don't understand how that agreement works. AMD sells them a bundle containing the CPU, RAM, and most of the board components such as capacitors and regulators, and Microsoft and Sony are free to find somebody to solder them to a board and fill in the cheaper components they would have on hand.

AMD makes 89% of what Nvidia does on gaming hardware sales, yet sells drastically less hardware. Consoles make up a huge part of their income and are the only thing that keeps them relevant in gaming; they cannot risk that in any capacity.

You make it sound like AMD can just call TSMC and ask for more time. TSMC is booked, and even with Apple, Nvidia, and AMD decreasing their silicon orders, they are still booked; time on any of TSMC's nodes is booked a year in advance at bare minimum. If TSMC and the other fabs weren't at capacity and unable to meet demand, we wouldn't be in the situation we currently find ourselves in. AMD could manufacture more consumer GPUs, but who is buying them? The only way they gain market share is by slashing their margin. They have promised investors they will surpass Nvidia's margins of 63% by 2025, and they aren't doing that by getting into a price war in a race to the bottom on consumer GPU sales. Say they cut their cards to the point where they only have a 20% margin: could they increase sales 3x to cover that spread? And what if Nvidia follows? You and I would love that price war; their investors and their legal teams would shit a brick. It is actively against their best interest to do that, so they won't.
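For a rough sense of what the "20% margin, 3x sales" trade implies, here is a back-of-the-envelope sketch in Python. It is illustrative only and rests on assumptions that are mine, not the poster's or AMD's: gross margin is taken as (price - cost) / price, the per-unit cost stays fixed when prices are cut, fixed costs like R&D are ignored, and the 63% starting figure is just the number mentioned in the post, not AMD's actual margin.

```python
def gross_profit_per_unit(margin: float, unit_cost: float = 1.0) -> float:
    """Gross profit per card when gross margin = (price - cost) / price.

    Solving for price gives price = cost / (1 - margin), so
    profit per unit = price - cost = cost * margin / (1 - margin).
    """
    if not 0.0 <= margin < 1.0:
        raise ValueError("margin must be in [0, 1)")
    return unit_cost * margin / (1.0 - margin)


def revenue_multiplier(old_margin: float, new_margin: float) -> float:
    """Revenue growth needed to keep total gross profit flat.

    Gross profit = revenue * margin, so revenue must scale by old / new.
    """
    return old_margin / new_margin


def unit_multiplier(old_margin: float, new_margin: float) -> float:
    """Unit-volume growth needed, assuming the per-unit cost does not change."""
    return gross_profit_per_unit(old_margin) / gross_profit_per_unit(new_margin)


if __name__ == "__main__":
    # Hypothetical inputs: 63% is the figure from the post, not AMD's real margin.
    old, new = 0.63, 0.20
    print(f"revenue must grow {revenue_multiplier(old, new):.2f}x")
    print(f"units must grow   {unit_multiplier(old, new):.2f}x")
```

With those inputs it prints 3.15x for revenue and 6.81x for units. The "3x" lines up with the revenue figure, but since each card also sells for less at the lower margin, matching the old gross profit would take a bit more than double that in actual cards shipped.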

Twisted Kidney

Supreme [H]ardness

- Joined

- Mar 18, 2013

- Messages

- 4,099

I'm sure they could, but it's hard to turn a profit on a video card that comes with a power plant.

Maybe that's their new thing: they have AMD chairs, desks, mountain bikes; maybe AMD reactors are up next.

TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

I'm sure they could, but it's hard to turn a profit on a video card that comes with a power plant.

Usual_suspect

Limp Gawd

- Joined

- Apr 15, 2011

- Messages

- 317

That's why I stepped out of the AMD arena: they don't have any "proprietary killer features" that entice me to stick with them. Nvidia has the money and spends it researching things that bring extra value to their cards. When I researched the 7900 XT and XTX and the 40-series cards, I applied the same logic I did when I bought my iPhone. Yes, Android devices are usually spec monsters, open-ended, and customizable to my heart's content, but Apple supports their devices for up to five years, has local Apple stores I can go to for service, etc. Translated to why I chose Nvidia over AMD, it's simple: value. Things like DLSS and Frame Generation add a lot of value to the 4070 Ti, something AMD doesn't have, and looking at the rumored product stack for the 7000 series, it looks like a typical generational improvement with nothing of value added to make them worth sticking around for. I think, by and large, this is what has helped Nvidia stay on top: outside of generally being better-performing cards, they bring value-adds like AI/machine learning, DLSS, NVENC, and CUDA, things AMD hasn't really been able to compete with, and when they did, it was short-lived.

True, and they're also willing to sink billions to flood the marketing side any time they feel threatened. They did it with the 5k and 6k series when AMD was quite competitive. As far as AMD copying them, I wouldn't say they are per se; Nvidia just has the budget and manpower to release things quicker than AMD can. AMD has typically been ahead and willing to take risks on the hardware side; it was just a matter of getting Microsoft to actually do anything so they could use it. E.g. GCN had supported ACE since day one, yet it never gained support until Vega. They took a risk on supporting GDDR4, which turned out to be a mistake since GDDR5 ended up going into production sooner than expected.
They forced Microsoft's hand and made them release DX12 when they decided to bring close-to-metal support to the desktop, which led to Vulkan, which has made gaming on Linux an actual option for the masses. What exactly has Nvidia done other than tack on more proprietary crap to justify their insanely high prices? (Not that AMD wouldn't be doing the same thing if they could.) So no, I wouldn't say all they do is copy Nvidia; they just don't have the ability to spend money like Nvidia can.

Yes, AMD has done a lot of good for the gaming community, but they can't just rest on their past accomplishments; they have nothing outside of them. RSR, AMD's upscaling tech built into the drivers, is great tech, but it's a one-trick pony, and it's inferior to FSR 2, which runs on Nvidia and Intel cards too, so you don't need an AMD card to take advantage of it. DLSS, on the other hand, is driven by AI, requires Nvidia's Tensor cores, produces better image quality at almost the same performance levels, and comes with additional features such as DLAA, DLDSR, Reflex, Frame Generation (DLSS 3.0/40-series), RTX VSR, etc.

Say what you want about G-Sync, but it ushered in the era of adaptive sync. FreeSync, while not a direct clone of G-Sync, was inspired by it, and I will say that in a lot of ways it was a copycat move to try and paint AMD in a positive light. It did, but not enough to move GPUs, especially once Nvidia opened up their hardware to support FreeSync as well as G-Sync. I don't know about you, but smooth, tear-free gameplay has become essential to me, and to a ton of other people as well.

AMD has pretty much played copycat to Nvidia for quite some time. Yes, they took risks, but look where that landed them. Yes, at times they were ahead of Nvidia with newer hardware tech, like currently supporting DP 2.0 and 2.1, and sure, GCN, once utilized, was a great architecture, but none of that translates into a benefit for the end user. First, how many DP 2.0 displays are out there and affordable to the masses? ACE taking so long to finally come around, with GPUs featuring it still getting dominated by Nvidia, didn't bode well whatsoever, but hey, at least they tried. Sure, they motivated MS to push out DX12 and aided Vulkan's development, which helped gaming on Linux, but again, how many people game on Linux? And even with DX12 out, what has AMD really done with it? Ultimately, AMD has done a lot of good behind the scenes, but consumers want their product to work now, not years down the road, and they want the best performance on day 1, not five years later. I'd say Vulkan was probably the most successful thing to come out of AMD for us consumers.

As I've said before on another message board: AMD needs that killer feature, something only their cards can do, because at the end of the day you can't rely solely on the performance of your hardware to move cards. Value is king, especially when people are dropping $800+ on your product.

TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

"Value is king"... that's a pretty hard sentiment to take to heart with today's pricing, especially if you're advocating for Nvidia.
