I really liked the sapphire toxic as well. Too bad they were kinda late to the AIB party. XFX and Powercolor went day one. Asrock and Sapphire lagged this time.

I was very close, it had add to cart for the Merc on BB, I didn't know AIBs were going to be available so I investigated and lost the window to add it to the cart. Yep, you snooze you lose.

AMD 7900XT and 7900 XTX Reviews

- Thread starter LFaWolf

- Start date

> I really liked the sapphire toxic as well. Too bad they were kinda late to the AIB party. XFX and Powercolor went day one. Asrock and Sapphire lagged this time.

Most of my suppliers don’t even deal with ASRock. I’ve never actually handled one of their products as a result, how are they? With Sapphire I have to break my own preconceptions, as I still remember them as the off-brand ATI. Obviously not the case, but that’s just how I remember them.

GoodBoy

2[H]4U

- Joined

- Nov 29, 2004

- Messages

- 2,745

It's good that AMD is finally getting competitive and has some RT prowess. Even if it is just reaching to 3090 level of RT. 3090 is a great card BTW, and even in the heavy-lift games like Cyberpunk, plays quite well as long as you also use G-Sync or adaptive sync tech. Even on a 2080Ti it felt smooth with G-Sync when getting 35fps.

UE5 has full raytracing support I believe. It accelerates lumen performance:

Whitepaper said: "Enabling raytracing in UE5 is a bit different from UE4. In UE4, we enable raytracing in the project settings then control individual raytracing effects in the post process volume. In UE5, several lighting components (e.g., Global Illumination and reflections) are solved in one system (Lumen). To achieve this, Lumen is heavily dependent on raytracing. As a result, Lumen can leverage GPUs with hardware raytracing support to improve and accelerate raytracing. With non-supported hardware, Lumen falls back to “software raytracing” which depends on distance fields instead of meshes.

To benefit from hardware raytracing, we need to enable it for Lumen and other systems as explained below:

1. Hardware Raytracing

The first step is to make sure ‘Use Hardware Ray Tracing when available’ is enabled in the project settings/rendering section. This allows other rendering systems to use hardware in raytr..."

So AMD having some good RT hardware (I think that is how these newest cards work) will be a big benefit.

I think the 2 newest AMD cards along with the 4080 could use $100 price reductions but as long as they just all sell out, prices will stay where they are. The renamed 4070Ti is supposed to get a price cut from the original msrp, let's hope.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

> I really liked the sapphire toxic as well. Too bad they were kinda late to the AIB party. XFX and Powercolor went day one. Asrock and Sapphire lagged this time.

If AIBs are making a premium product, that always takes time to launch. It's a shame about EVGA as an example, I wasn't aware of the lengths they went to get performance out of their Kingpin cards.

Full custom boards, increased lines in power in addition to power unlocks, as well as custom cooling. All that engineering takes time.

Not sure what Sapphire does to get their cards to perform better, but if they're doing any of that, then it makes sense. Custom cooling in particular can take a lot of time to build if they need to do a bunch of fabrication and testing. Toxic looks to have the biggest 7900XTX air cooler, for better or for worse.

Getting to market faster is only a part of it. Being better is another thing entirely.

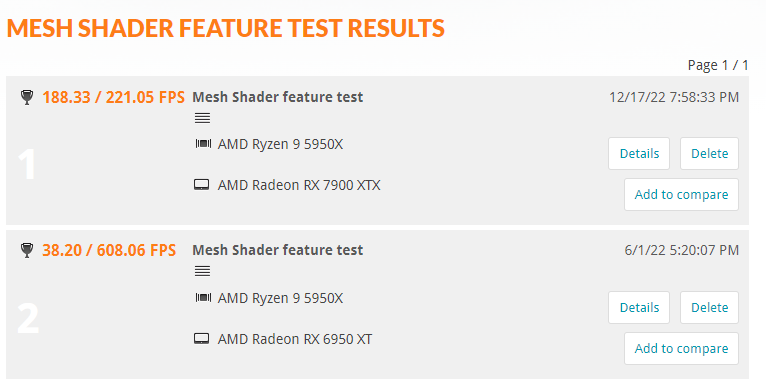

Mesh Shaders Seem Broken on AMD Radeon RX 7900 Series GPUs: Half as Fast as the NVIDIA RTX 3080 Ti in 3DMark MS Benchmark

the GPU ends up slower than every present and last-generation high-end offering, including the RTX 3080, RTX 3080 Ti, and RTX 3090, as well as the RDNA 2-based RX 6800 XT and the 6900 XT. This issue was originally highlighted by @Digidi20 on Twitter, one of the members of the 3DCenter team.

https://www.hardwaretimes.com/mesh-...he-nvidia-rtx-3080-ti-in-3dmark-ms-benchmark/

They lied about the performance, and people are still believing them? Numbers don't lie. Left numbers are without hardware mesh shader. Right is mesh shader acceleration.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,572

> Mesh Shaders Seem Broken on AMD Radeon RX 7900 Series GPUs: Half as Fast as the NVIDIA RTX 3080 Ti in 3DMark MS Benchmark
>
> the GPU ends up slower than every present and last-generation high-end offering, including the RTX 3080, RTX 3080 Ti, and RTX 3090, as well as the RDNA 2-based RX 6800 XT and the 6900 XT. This issue was originally highlighted by @Digidi20 on Twitter, one of the members of the 3DCenter team.
>
> https://www.hardwaretimes.com/mesh-...he-nvidia-rtx-3080-ti-in-3dmark-ms-benchmark/

I would wait for a couple of driver updates before trying to say the 7900 series is broken. The performance is so inconsistent, it really just feels like their driver team didn't have time to get most games optimized. And mesh shaders aren't featured in any games right now. Seems like that's the last thing they need to focus on.

> Mesh Shaders Seem Broken on AMD Radeon RX 7900 Series GPUs: Half as Fast as the NVIDIA RTX 3080 Ti in 3DMark MS Benchmark
>
> the GPU ends up slower than every present and last-generation high-end offering, including the RTX 3080, RTX 3080 Ti, and RTX 3090, as well as the RDNA 2-based RX 6800 XT and the 6900 XT. This issue was originally highlighted by @Digidi20 on Twitter, one of the members of the 3DCenter team.
>
> https://www.hardwaretimes.com/mesh-...he-nvidia-rtx-3080-ti-in-3dmark-ms-benchmark/

I am willing to bet this is drivers. A lot of people are jumping the gun on this, given how there seem to be optimization gaps in a lot of titles and performance is uneven.

Legendary Gamer

[H]ard|Gawd

- Joined

- Jan 14, 2012

- Messages

- 1,584

Here's some video card porn for everyone. Had to include the blurry action shot too. 6900XT on top and 7900XTX on the bottom.

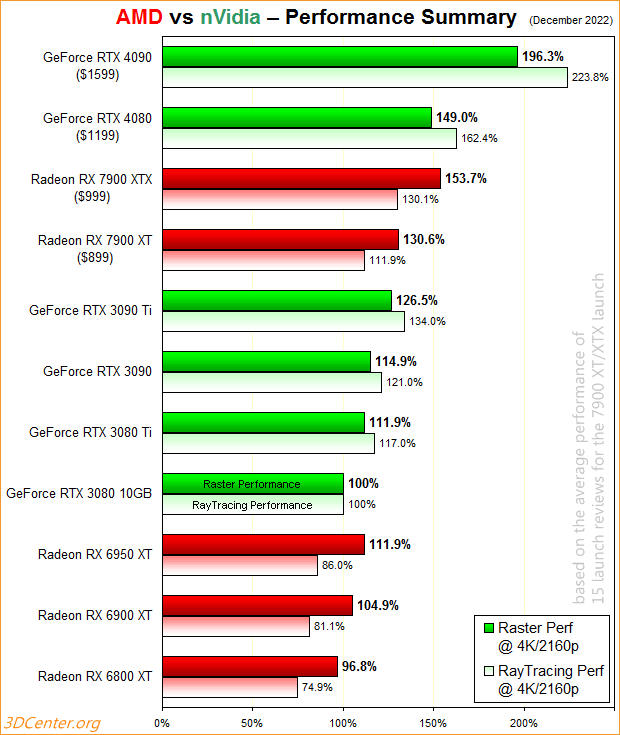

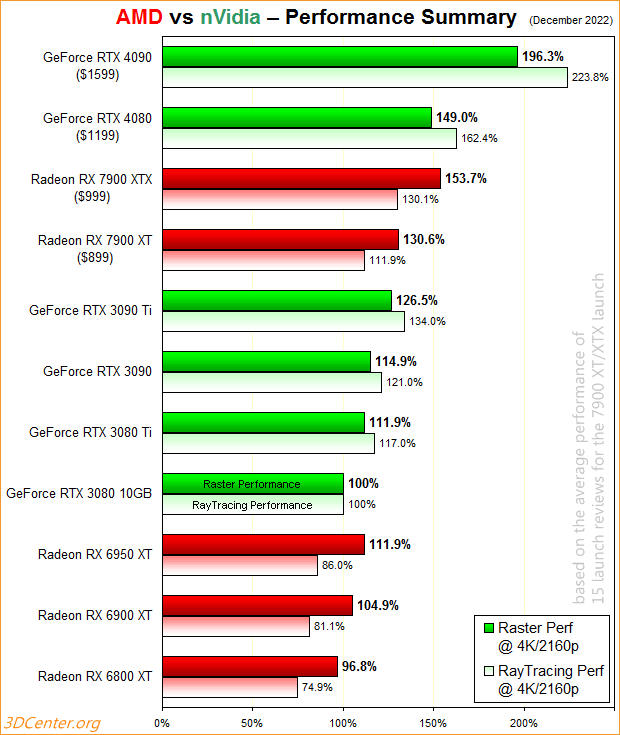

AMD vs nVidia Performance Summary (December 2022): average raster & ray tracing performance @ 2160p based on the work of 15 reviews by CB, HWL, Igor's, PCGH, DF, KitGuru, Paul's, TPU, TS/HWU, Tom's, PurePC, HWUpgrade, Le Comptoir, Tweakers & Quasarzone

https://twitter.com/3DCenter_org/status/1605262002280087552?s=20&t=R3vwxpcLbWFByX1ZuyPfew

> AMD vs nVidia Performance Summary (December 2022): average raster & ray tracing performance @ 2160p based on the work of 15 reviews by CB, HWL, Igor's, PCGH, DF, KitGuru, Paul's, TPU, TS/HWU, Tom's, PurePC, HWUpgrade, Le Comptoir, Tweakers & Quasarzone
>
> https://twitter.com/3DCenter_org/status/1605262002280087552?s=20&t=R3vwxpcLbWFByX1ZuyPfew

So overall we are looking at almost 50% over 6900xt and 40+% over 6950xt. Not sure where people keep getting this only 30% over 6900xt. This is a good estimate from multiple sources I guess. So with drivers still maturing, maybe they will get to that performance/efficiency target over 6950xt.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

> So overall we are looking at almost 50% over 6900xt and 40+% over 6950xt. Not sure where people keep getting this only 30% over 6900xt. This is a good estimate from multiple sources I guess.

People have a habit of cherry picking numbers from a reviewer that got the results they agree with most.

> So overall we are looking at almost 50% over 6900xt and 40+% over 6950xt. Not sure where people keep getting this only 30% over 6900xt. This is a good estimate from multiple sources I guess. So with drivers still maturing, maybe they will get to that performance/efficiency target over 6950xt.

Use of an AMD platform for testing (with Smart Access Memory enabled) makes a fairly significant performance difference - north of 10% in some games and especially at lower resolutions. Thus, if you're comparing a reviewer's numbers with an Intel platform vs AMD, you're going to see wildly different results.

> Use of an AMD platform for testing (with Smart Access Memory enabled) makes a fairly significant performance difference - north of 10% in some games and especially at lower resolutions. Thus, if you're comparing a reviewer's numbers with an Intel platform vs AMD, you're going to see wildly different results.

Yea TPU on 4K ultra reported 60fps on reference. I got 70 on ultra 4K with 7700x. So kinda big discrepancy.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,572

> Use of an AMD platform for testing (with Smart Access Memory enabled) makes a fairly significant performance difference - north of 10% in some games and especially at lower resolutions. Thus, if you're comparing a reviewer's numbers with an Intel platform vs AMD, you're going to see wildly different results.

Prove it?

Hardware Unboxed looked at SAM on a few different platforms and the results don't line up with your post.

That said, the test is a few months old and of course does not include the 7900 cards.

> Yea TPU on 4K ultra reported 60fps on reference. I got 70 on ultra 4K with 7700x. So kinda big discrepancy.

Their test system for the 7900xtx review is a 5800x3D. In some games, Zen 4 blows that CPU away. That's likely the difference, right there, for your specific games.

> Use of an AMD platform for testing (with Smart Access Memory enabled) makes a fairly significant performance difference - north of 10% in some games and especially at lower resolutions. Thus, if you're comparing a reviewer's numbers with an Intel platform vs AMD, you're going to see wildly different results.

Is AMD Smart Access Memory really different from Resizable BAR on an Intel platform, even in late 2022? I think that is something that was maybe true at some point in 2020 and that people repeat now.

With Intel, AMD and Nvidia GPUs all using ReBAR, I would imagine recent Intel platforms got good at it. Intel GPUs themselves seem to absolutely need ReBAR to work correctly.

> Prove it?
>
> Hardware Unboxed looked at SAM on a few different platforms and the results don't line up with your post.
>
> That said, the test is a few months old and of course does not include the 7900 cards.
>
> Their test system for the 7900xtx review is a 5800x3D. In some games, Zen 4 blows that CPU away. That's likely the difference, right there, for your specific games.

Try this: https://www.thefpsreview.com/2022/1...0-xtx-and-xt-smart-access-memory-performance/

This seems to be comparing Resizable BAR on vs off, not AMD vs Intel platform, and it would also require ReBAR to have a bigger effect on RDNA 3 than on RDNA 2 to move that needle, no?

Is there any tester that disables ReBAR in their BIOS, or uses a pre-Ryzen 3xxx / Intel 10xxx system that does not support it? I had the feeling that we have only seen ReBAR-on metrics for a while now.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,572

At the beginning of the article, they ask the question "Are reviewers using Intel systems, hurting Radeon RX 7900 Series GPU performance?"

But they do not answer that question with numbers.

Then in the conclusion, they imply a few times that using an AMD platform has an advantage over using an Intel platform for Resizable BAR with a 7900 series GPU. But they do not show any proof. Here is one example:

> If you are on an Intel platform, you won’t get AMD Smart Access Memory, but you can still enable Resizable BAR on them as well, so at least do that for the best possible experience.

The additional extrapolation here is that AMD is doing something extra/optimized, which gives them a performance boost with their own cards compared to using an Intel system. However, at least going by Hardware Unboxed's tests from a few months ago, that is not true. In the final performance numbers, an Alder Lake system is giving more or less the same performance boost from Resizable BAR as a Zen 3 system.

And actually, it seems to be more based on whether or not the motherboard vendor spent time tuning the BIOS to properly utilize Resizable BAR and/or Smart Access Memory. In HU's video, at that time, it seems like their Zen 2 3950x was not well supported for it, even though they could technically enable the feature.

> At the beginning of the article, they ask the question "Are reviewers using Intel systems, hurting Radeon RX 7900 Series GPU performance?"
>
> But they do not answer that question with numbers.
>
> Then in the conclusion, they imply a few times that using an AMD platform has an advantage over using an Intel platform for Resizable BAR with a 7900 series GPU. But they do not show any proof. Here is one example:
>
> > If you are on an Intel platform, you won’t get AMD Smart Access Memory, but you can still enable Resizable BAR on them as well, so at least do that for the best possible experience.
>
> The additional extrapolation here is that AMD is doing something extra/optimized, which gives them a performance boost with their own cards compared to using an Intel system. However, at least going by Hardware Unboxed's tests from a few months ago, that is not true. In the final performance numbers, an Alder Lake system is giving more or less the same performance boost from Resizable BAR as a Zen 3 system.
>
> And actually, it seems to be more based on whether or not the motherboard vendor spent time tuning the BIOS to properly utilize Resizable BAR and/or Smart Access Memory. In HU's video, at that time, it seems like their Zen 2 3950x was not well supported for it, even though they could technically enable the feature.

Try re-reading what you quoted, which is the reason for my reply, and you'll see exactly how the article I linked is relevant.

Also, are you saying that the data obtained in the article is a lie? It's showing an obvious performance increase that likely makes up for the lower numbers other reviewers got.

And are you seriously posting a video using a 3000 series Ryzen as your proof that it doesn't matter?

> Also, are you saying that the data obtained in the article is a lie? It's showing an obvious performance increase that likely makes up for the lower numbers other reviewers got.

You seem to be talking about 2 separate things: does ReBAR matter, vs. does AMD ReBAR work better in general (with Intel and Nvidia GPUs as well) than Intel ReBAR, or does it work better specifically with RDNA 2/RDNA 3 GPUs?

And people that get ReBAR-less numbers for the 7900xtx would also tend to get ReBAR-less numbers for the 6900xt. Does it hurt one more than the other?

As for the only 30% over a 6900xt, I am not sure I heard that one before. TechPowerUp has +47% over the 6900xt at 4K, and that tends to be the online reference. I would imagine that +30 is over the 6950xt.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,572

> Try re-reading what you quoted, which is the reason for my reply, and you'll see exactly how the article I linked is relevant.
>
> Also, are you saying that the data obtained in the article is a lie? It's showing an obvious performance increase that likely makes up for the lower numbers other reviewers got.
>
> And are you seriously posting a video using a 3000 series Ryzen as your proof that it doesn't matter?

You are confusing yourself.

Schro said this:

> Use of an AMD platform for testing (with Smart Access Memory enabled) makes a fairly significant performance difference - north of 10% in some games and especially at lower resolutions. Thus, if you're comparing a reviewer's numbers with an Intel platform vs AMD, you're going to see wildly different results.

I quoted them and asked them to "prove it" (prove that SAM on an AMD platform performs better than ReBAR on an Intel platform, with an AMD GPU).

So far, I haven't seen any evidence showing that. The article you linked does not show that with numbers, even though they do otherwise seem to say that.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,000

I think we need to establish this:

Is it Resizable BAR that creates a performance difference

or

Is it the AMD Platform that creates a performance difference

We need to isolate these variables.
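One way to separate the two factors is a 2x2 test: same GPU, same game, each platform run with Resizable BAR on and off. Below is a minimal sketch of how such results could be broken down. The FPS values are made-up placeholders (not measurements from any review), and `fps` and `factor_effects` are hypothetical names used only for illustration.

```python
from statistics import mean

# Placeholder average FPS for one game and one GPU. These numbers are
# invented for illustration and are NOT measurements from any review.
fps = {
    ("AMD", True): 104.0,    # AMD platform, Resizable BAR / SAM on
    ("AMD", False): 96.0,    # AMD platform, Resizable BAR off
    ("Intel", True): 103.0,  # Intel platform, Resizable BAR on
    ("Intel", False): 95.0,  # Intel platform, Resizable BAR off
}

def factor_effects(results):
    """Return (rebar_effect, platform_effect, interaction) in FPS.

    rebar_effect:    average gain from turning Resizable BAR on
    platform_effect: average lead of the AMD platform over Intel
    interaction:     how much larger the ReBAR gain is on AMD than on Intel
    """
    rebar_on = mean(v for (_, rebar), v in results.items() if rebar)
    rebar_off = mean(v for (_, rebar), v in results.items() if not rebar)
    amd = mean(v for (plat, _), v in results.items() if plat == "AMD")
    intel = mean(v for (plat, _), v in results.items() if plat == "Intel")
    gain_amd = results[("AMD", True)] - results[("AMD", False)]
    gain_intel = results[("Intel", True)] - results[("Intel", False)]
    return rebar_on - rebar_off, amd - intel, gain_amd - gain_intel

print(factor_effects(fps))  # (8.0, 1.0, 0.0)
```

With these placeholder numbers nearly all of the difference comes from Resizable BAR itself. The claim that SAM on an AMD platform beats ReBAR on an Intel platform would show up as a clearly positive third value.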

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,203

> So overall we are looking at almost 50% over 6900xt and 40+% over 6950xt. Not sure where people keep getting this only 30% over 6900xt. This is a good estimate from multiple sources I guess. So with drivers still maturing, maybe they will get to that performance/efficiency target over 6950xt.

It's only 37% over the 6950xt. Don't move the goalposts. AMD themselves said 50-70% over the 6950xt.

While it might get there eventually, nobody said just 30% over the 6900xt. If so, show links/screen shots.
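The 6900 XT and 6950 XT baselines are easy to mix up, so it can help to convert between them. This is a rough sketch using only figures quoted in this thread (+47% over the 6900 XT at 4K from TechPowerUp, +37% over the 6950 XT). Those come from different sources, so the result is a ballpark at best, and the function names are made up for illustration.

```python
def implied_baseline_gap(uplift_vs_slow, uplift_vs_fast):
    """Percent by which the faster baseline leads the slower one,
    given the same card's uplift (in percent) over each baseline."""
    return ((1 + uplift_vs_slow / 100) / (1 + uplift_vs_fast / 100) - 1) * 100

def rebase_uplift(uplift_vs_slow, baseline_gap):
    """Convert an uplift over the slower baseline into an uplift over
    the faster baseline. Percentages divide as ratios, they do not subtract."""
    return ((1 + uplift_vs_slow / 100) / (1 + baseline_gap / 100) - 1) * 100

# Figures quoted in the thread: +47% vs 6900 XT, +37% vs 6950 XT.
gap = implied_baseline_gap(47, 37)
print(f"{gap:.1f}")  # 7.3
```

So the two figures are consistent with each other if the 6950 XT is roughly 7% faster than the 6900 XT. The same uplift looks about ten points smaller against the faster baseline, which is why the two percentages should not be compared directly.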

> It's only 37% over the 6950xt. Don't move the goalposts. AMD themselves said 50-70% over the 6950xt.
>
> While it might get there eventually, nobody said just 30% over the 6900xt. If so, show links/screen shots.

I get it. I am not here to take up arms over the subject. It's because in some titles the performance is kinda all over the place. In newer titles it does tend to be much faster. AMD did drop the ball there, but yea, people have been saying all over the internet it's 30-35% over 6900xt. I am not really going to go back and search each Reddit or Twitter thread for comments, it's just what I read during browsing.

The Mad Atheist

2[H]4U

- Joined

- Mar 9, 2018

- Messages

- 2,167

AMD should revamp their naming scheme, I already had a Radeon 9700 Pro and an AIW Radeon 9600 XT.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,203

> I get it. I am not here to take up arms over the subject. It's because in some titles the performance is kinda all over the place. In newer titles it does tend to be much faster. AMD did drop the ball there, but yea, people have been saying all over the internet it's 30-35% over 6900xt. I am not really going to go back and search each Reddit or Twitter thread for comments, it's just what I read during browsing.

If you search Reddit and Twitter enough, you could find people saying the card is faster than the 4090, but of course, that's just noise, same as the 'only 30% faster than the 6900xt' crowd.

So why then bring it up? They are insignificant, and people really only care about what is said on the matter by those with credentials.

Logos vs Ethos vs Pathos. Outside of politics, Ethos is tied to Logos. Try and ignore the Pathos.

Looks like BB dropped the ball on my Merc 310 order. I didn’t see the green bar move until yesterday and pickup was today, so I was pretty sure it wasn’t gonna be available on the pickup date. They did confirm it’s on its way to the store when I chatted with Total Tech support. With the winter storm coming up I doubt I'll get it before Monday. Tomorrow is still a chance, but I doubt it. My first delayed pickup, lmao.

> Mesh Shaders Seem Broken on AMD Radeon RX 7900 Series GPUs: Half as Fast as the NVIDIA RTX 3080 Ti in 3DMark MS Benchmark
>
> the GPU ends up slower than every present and last-generation high-end offering, including the RTX 3080, RTX 3080 Ti, and RTX 3090, as well as the RDNA 2-based RX 6800 XT and the 6900 XT. This issue was originally highlighted by @Digidi20 on Twitter, one of the members of the 3DCenter team.
>
> https://www.hardwaretimes.com/mesh-...he-nvidia-rtx-3080-ti-in-3dmark-ms-benchmark/

UPDATE:

AMD Acknowledges Mesh Shader Bug: Engineers Working on a Fix

We reached out to AMD and were informed that the team is working on the issue.

AMD hasn’t admitted anything as of now. The chipmaker is aware of the issue and is actively working on a fix.

https://www.hardwaretimes.com/amd-acknowledges-mesh-shader-bug-engineers-working-on-a-fix/

> UPDATE:
>
> AMD Acknowledges Mesh Shader Bug: Engineers Working on a Fix
>
> We reached out to AMD and were informed that the team is working on the issue.
>
> AMD hasn’t admitted anything as of now. The chipmaker is aware of the issue and is actively working on a fix.
>
> https://www.hardwaretimes.com/amd-acknowledges-mesh-shader-bug-engineers-working-on-a-fix/

It’s good they acknowledge there is an issue, but I don’t like how they don’t specify which team is working on the issue. They also never call it a bug or a problem.

Issues and bugs are two very different things.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,572

> It’s good they acknowledge there is an issue, but I don’t like how they don’t specify which team is working on the issue. They also never call it a bug or a problem.
>
> Issues and bugs are two very different things.

I haven't yet heard any clear, credible leak that the 7900 series actually has hardware issues. For now, I am willing to bet this is a driver issue, as are the performance inconsistencies in some games.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

> I haven't yet heard any clear, credible leak that the 7900 series actually has hardware issues. For now, I am willing to bet this is a driver issue, as are the performance inconsistencies in some games.

This is most likely it. The "broken silicon" was always very farfetched.

> I haven't yet heard any clear, credible leak that the 7900 series actually has hardware issues. For now, I am willing to bet this is a driver issue, as are the performance inconsistencies in some games.

Firmware, driver, hardware, design oversight? They simply could have made a design decision and decided mesh shaders weren’t important at this stage. There are currently only 2 or 3 games that actively make use of them. If you had to choose between mesh shaders or ray tracing at this stage, it’s obvious which gets the cut.

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,011

> Firmware, driver, hardware, design oversight? They simply could have made a design decision and decided mesh shaders weren’t important at this stage. There are currently only 2 or 3 games that actively make use of them. If you had to choose between mesh shaders or ray tracing at this stage, it’s obvious which gets the cut.

While I agree, if this turns out to be a hardware "issue" this makes the 7000 series less future proof than it should be. Once mesh shaders supplant current shader technology, it will leave the 7000 series dead in the water.

- Joined

- May 18, 1997

- Messages

- 55,596

> UPDATE:
>
> AMD Acknowledges Mesh Shader Bug: Engineers Working on a Fix
>
> We reached out to AMD and were informed that the team is working on the issue.
>
> AMD hasn’t admitted anything as of now. The chipmaker is aware of the issue and is actively working on a fix.
>
> https://www.hardwaretimes.com/amd-acknowledges-mesh-shader-bug-engineers-working-on-a-fix/

This conflicts with what THG reported on this.

> While I agree, if this turns out to be a hardware "issue" this makes the 7000 series less future proof than it should be. Once mesh shaders supplant current shader technology, it will leave the 7000 series dead in the water.

While I agree, by the time mesh shaders become the norm the 7000 series will be old enough that it would be like complaining that ray tracing doesn't work on the 1000 series.

erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,868

Scope this out, seems pretty interesting

> UPDATE:
>
> AMD Acknowledges Mesh Shader Bug: Engineers Working on a Fix
>
> We reached out to AMD and were informed that the team is working on the issue.
>
> AMD hasn’t admitted anything as of now. The chipmaker is aware of the issue and is actively working on a fix.
>
> https://www.hardwaretimes.com/amd-acknowledges-mesh-shader-bug-engineers-working-on-a-fix/

Link goes to an article from Oct 2021 on a Win 11/CPU cache bug.

Can't find the quoted article under the GPU section of the site.

- Joined

- May 18, 1997

- Messages

- 55,596

> Link goes to an article from Oct 2021 on a Win 11/CPU cache bug.
>
> Can't find the quoted article under the GPU section of the site.

They deleted the story as it was bullshit.

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

> This is most likely it. The "broken silicon" was always very farfetched.

"Broken" likely isn't the correct way to say it. It wouldn't be the first time this has happened in GPU history, like the FX series fiasco and its less than optimal SM 2.0 support.

Hopefully this is just a driver-level issue, and not something baked into silicon. If it is in silicon, it will present some serious issues as UE5 titles start rolling out.

Bankie

2[H]4U

- Joined

- Jul 27, 2004

- Messages

- 2,467

I went to AMD.com just a bit ago and was placed in queue for an XTX so they might still be available for someone that's interested.

I thought about it but nah. $1000 to play games that aren't even limited by my 2080ti is just dumb in my case. I'd be better off buying a 5800x3d or adding some more RGB.
