MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,466

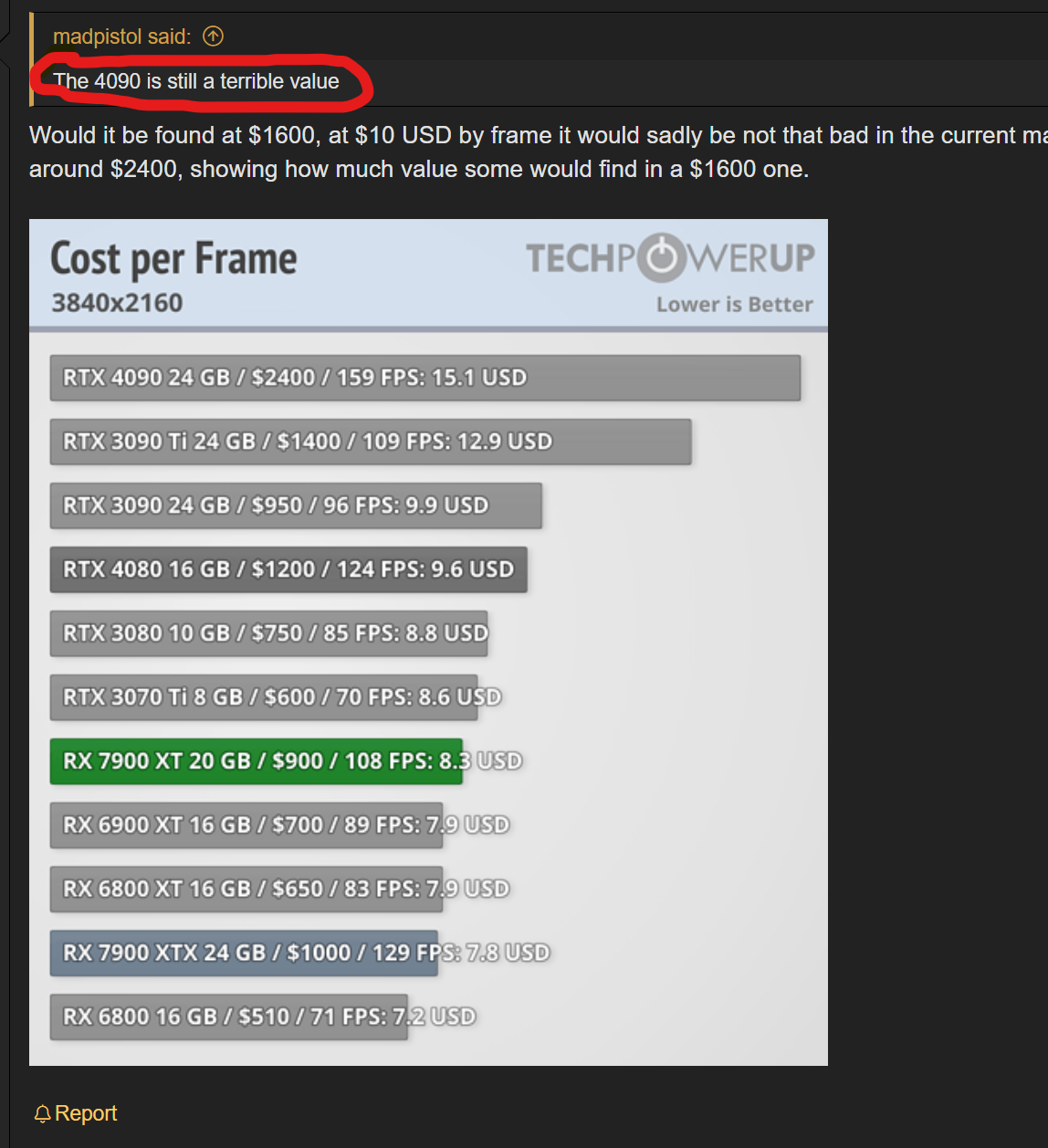

I bet Jensen is wishing he hadn't given us a 102 die in the 4090; he could easily have launched the 4080 as the top dog with no problem.

It's fine; at least he reserved the 102 die for the 90-class GPU this time and didn't put it in a $700 80-class GPU like the 3080, lol.