The 7900xtx and xt are cheaper but I’m not sure how they are exactly beating the 4000 cards.

AMD fans and Nvidia fans are both missing the point here.

AMD was essentially required to put out a card this time of year and so was Nvidia. AMD challenged Nvidia to a game of slam-your-dick-in-a-car-door chicken, and Nvidia did three rails of coke, slammed their dick in the car door, and then hit the power lock. Then AMD said, OMG, you actually slammed your dick in a car door? Fucking outstanding.

Nvidia's like, why aren't you slamming your dick in the door? And AMD says that's retarded, why would anyone slam their dick in a car door?

AMD put out two cards that beat their current line-up, of which there is still quite a lot, and that's it. They have a full stack and it runs all the way up to, but not over, $1K. And it's all profit at the top for them. This makes Nvidia look desperate and greedy to most people.

They're still fighting off the "value" brand burden, on CPU and GPU fronts. I think that changes when AMD doesn't have to compete with existing AMD and Nvidia new-old stock.


Join us on November 3rd as we unveil AMD RDNA™ 3 to the world!

That about sums it up... they probably could have run these at 80% of their current power and used a normal power adapter (or just had no issues with the new connector, since it would be pushed much less). Seems like they probably still would have retained a small lead.

Ya, this is like a sprinter going full tilt while having their head turned to watch the competition that is a few lengths back, and tripping over their laces.

The 4090 performs at, what, 95 percent at 70 percent power? They're running it at 140 percent power for a 5 percent boost in performance? They had to make a cooler for it. They decided to use a weird plug for it.
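A quick sanity check of the 4090 power math above, as a minimal Python sketch. The inputs are the poster's own rough guesses (95% of stock performance at 70% of stock power), not measurements, and stock performance and power are both normalised to 1.0 here as an assumption:

```python
# Rough RTX 4090 power-scaling check using the forum post's own estimates.
# Assumption: stock performance and stock power are both normalised to 1.0.
STOCK_PERF, STOCK_POWER = 1.00, 1.00
LIMITED_PERF, LIMITED_POWER = 0.95, 0.70  # "95 percent at 70 percent power"

# Going from the power-limited setting back up to stock:
extra_power = STOCK_POWER / LIMITED_POWER - 1  # about 0.43 more power
extra_perf = STOCK_PERF / LIMITED_PERF - 1     # about 0.053 more performance

# Performance per watt of the power-limited setting relative to stock.
efficiency_gain = (LIMITED_PERF / LIMITED_POWER) / (STOCK_PERF / STOCK_POWER)

print(f"Stock draws {extra_power:.0%} more power for {extra_perf:.1%} more performance")
print(f"Power-limited perf/W is {efficiency_gain:.2f}x stock")
```

With those inputs, stock draws about 1/0.70 ≈ 1.43x the power of the limited setting, which is roughly where the "140 percent power for a 5 percent boost" figure comes from.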

Nvidia got so scared shitless by AMD's rumor machine, they made a card that now has a rep for catching fire.

They slammed their dick in a card door.

What Nvidia needs to worry about is the first AMD chiplet refresh part. This launch feels very much like Zen's launch. Zen 1 was great but it wasn't a clear Intel killer: better in some ways, better value, but still slower in single core. It was the tech that made people say, oh shit, Intel is in trouble.

Rumors that Nvidia is actually planning another monolithic design after Ada are pretty strong. If that is true, Nvidia is NOT going to get cheaper any time soon.

Even if AMD didn't pour the power in or throw extra chiplets on to beat Nvidia handily this gen... RDNA 3 is still a big ruh-roh for Nvidia.

Axman

VP of Extreme Liberty

- Joined: Jul 13, 2005

- Messages: 17,296

The 7900xtx and xt are cheaper but I’m not sure how they are exactly beating the 4000 cards.

I don't expect them to. "Trade blows" at best. No matter what, Nvidia and their fans will point to "superior ray-tracing," "CUDA," and "DLSS." Even if AMD beat them in raster and RT, Nvidia still has features that AMD can't compete with, marketing-wise. Their strategy here is to make Nvidia look greedy and desperate.

I think they could have charged quite a bit less, but it's also a balancing act. They have to put money in their FSR fund if they want to take on DLSS. I'm seeing AMD fans hurt by the pricing based on their post-crypto expectations; they think it's too high.

They have to price it like it is. Less expensive, less over-the-top, less gaudy, less freaking fire hazard, but still a premium product. I mean, let's assume that AMD could sell these at $750 and $600 and still make a profit. What does that look like when Nvidia is charging double for their high end? It makes AMD look cheap, and not in a way that people associate with affordable.

We should wait for benches before we call AMD the victor under $1500... but I have a feeling that is exactly what is going to happen.

The 7900xtx and xt are cheaper but I’m not sure how they are exactly beating the 4000 cards.

The 4080 will likely trade blows with the 7900. Probably, just like last gen, Nvidia-loving games will be wins for the 4080 and AMD-loving games will go the other way, with the odd outlier where the 7900 may even best the 4090 or get bested by a 3070. lol

EDIT - I changed the last card to 3070.


Very napkin math, but it seems that, roughly, we can expect the 7900xtx to be about 86% of a 4090, while the more expensive 4080 will be about 72% of a 4090.

The 7900xtx and xt are cheaper but I’m not sure how they are exactly beating the 4000 cards.

That seems like a clean win: not just more performance per dollar, but significantly more performance (+20%) for significantly less money (16% less), which would be quite the beating. And that's at 4K; at 1080p-1440p the gap could be significantly larger, but that's maybe not that relevant.
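The napkin math above can be checked in a few lines of Python. The 86% and 72% figures come from the post; the prices are an assumption added here (launch MSRPs of $999 for the 7900 XTX and $1,199 for the 4080 16 GB), since the post doesn't state them:

```python
# Napkin math from the post: 4K performance relative to an RTX 4090 (= 1.0).
XTX_PERF = 0.86
RTX4080_PERF = 0.72

# Assumed launch MSRPs in USD (not stated in the post itself).
XTX_PRICE = 999
RTX4080_PRICE = 1199

perf_advantage = XTX_PERF / RTX4080_PERF - 1    # how much faster the XTX is
price_discount = 1 - XTX_PRICE / RTX4080_PRICE  # how much cheaper the XTX is
perf_per_dollar_ratio = (XTX_PERF / XTX_PRICE) / (RTX4080_PERF / RTX4080_PRICE)

print(f"XTX vs 4080: {perf_advantage:+.0%} performance, {price_discount:.0%} cheaper")
print(f"Performance per dollar: {perf_per_dollar_ratio:.2f}x the 4080")
```

That lands close to the post's "+20% for 16% less": roughly +19% performance for about 17% less money, or around 1.43x the performance per dollar, if the relative-performance estimates hold up in reviews.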

You also get more VRAM and DP 2.1. Both are possibly irrelevant, but all things being equal, better to have them than not. Obviously we need to wait for actual reviews of the actual product, and the RT/DLSS/frame-generation picture could change fast, but look at what Call of Duty just managed to do without RT and frame generation...

Axman

VP of Extreme Liberty

- Joined: Jul 13, 2005

- Messages: 17,296

What Nvidia need worry about is the first AMD chiplet refresh part.

Like I said (maybe in another thread): exactly.

The amount of headroom AMD has in terms of clocks, die size, stacked cache, faster memory ... and that's still on 5nm.

I do wonder if they'll skip the refresh. It's obviously planned, but if they can get Ryzen 8000 out and RX 8000 out in a hurry together, I think that's a possibility for them, too.

Flogger23m

[H]F Junkie

- Joined: Jun 19, 2009

- Messages: 14,351

When will actual benchmarks come out?

pendragon1

Extremely [H]

- Joined: Oct 7, 2000

- Messages: 51,992

They are out on Dec 13, so then, or maybe the 12th.

I expect 8000 will be more refresh-like than any previous gen, just going by what they did with Zen. It will probably still be RDNA 3, and it isn't very important whether they decide to call them 7950s or just go to 8000.


Zen 2 and Zen 3 used the exact same 12nm IO die, about 2 billion transistors. I expect AMD designed the GPU IO to last a few product cycles too. It seems to me that whipping out slightly altered chiplets for a refresh is much more trivial than with non-chiplet chips, where the entire die needs to be retooled and tweaked. I know I have talked about them mixing and matching chiplets from different runs... that might be more than their tech will allow with syncing. I still think it might be a great solution to go hard for datacenter... every second cycle you design a datacenter chip instead and mix and match for each industry. First gen, you do a raster-heavy chiplet for gaming. Second gen, you build an AI powerhouse chiplet. Datacenter parts get a bunch of the latest and greatest AI chiplets plus a few of the last-gen raster chiplets. The gaming refresh part uses the same gaming chiplets as last gen but adds 1 or 2 of the datacenter chiplets.

AMD could do very short runs of lots of small chiplets and just glue them onto the same everything else. If you can do that, it almost seems like a wasted opportunity not to. I almost hope AMD turns out 6-month refreshes for a few years just to really twist Nvidia.

ZeroBarrier

Gawd

- Joined: Mar 19, 2011

- Messages: 1,011

The 7900xtx and xt are cheaper but I’m not sure how they are exactly beating the 4000 cards.

Why wait? The fandom is already doing God's work for AMD and claiming the 7900xtx matches the 4090.

We should wait for benches before we call AMD the victor under $1500... but I have a feeling that is exactly what is going to happen.

The 4080 will likely trade blows with the 7900. Probably, just like last gen, Nvidia-loving games will be wins for the 4080 and AMD-loving games will go the other way, with the odd outlier where the 7900 may even best the 4090 or get bested by a 3080. lol

https://www.thefpsreview.com/2022/1...-benchmarks-comes-close-in-watch-dogs-legion/

jobert

[H]ard|Gawd

- Joined: Dec 13, 2020

- Messages: 1,575

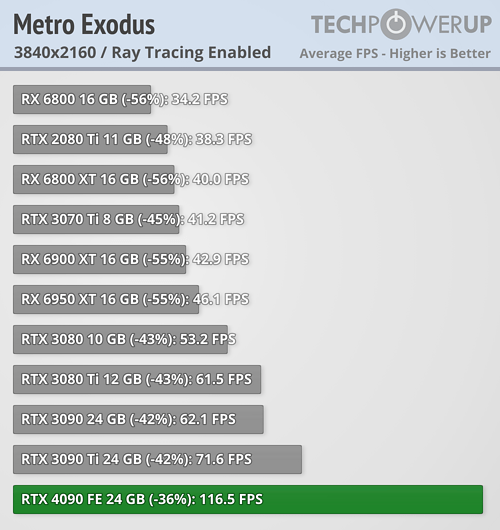

Lol, they actually think the 7900xtx is going to match the 4090 in Metro LL with ray tracing on. The numbers they are basing it on are BS, as they show the 4090 getting 59.9 fps while TechPowerUp shows 116.5 fps. So in reality the 4090 is going to be nearly TWICE as fast as the 7900xtx in some ray tracing games.
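The "nearly TWICE as fast" claim above is just the ratio of the two 4090 numbers. A minimal sketch, assuming (as the post does) that the XTX lands at the slide's 59.9 fps and that both 4090 results were measured at comparable settings, which the post doesn't establish:

```python
# 4090 results in the Metro RT comparison, as quoted in the post (fps).
SLIDE_4090_FPS = 59.9         # figure the post says the comparison was based on
TECHPOWERUP_4090_FPS = 116.5  # figure the post cites from TechPowerUp

# If the XTX only matches the slide's number, the real 4090 lead is this ratio.
ratio = TECHPOWERUP_4090_FPS / SLIDE_4090_FPS
print(f"4090 vs XTX in this title: about {ratio:.2f}x")
```

That works out to roughly 1.94x, hence "nearly twice".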


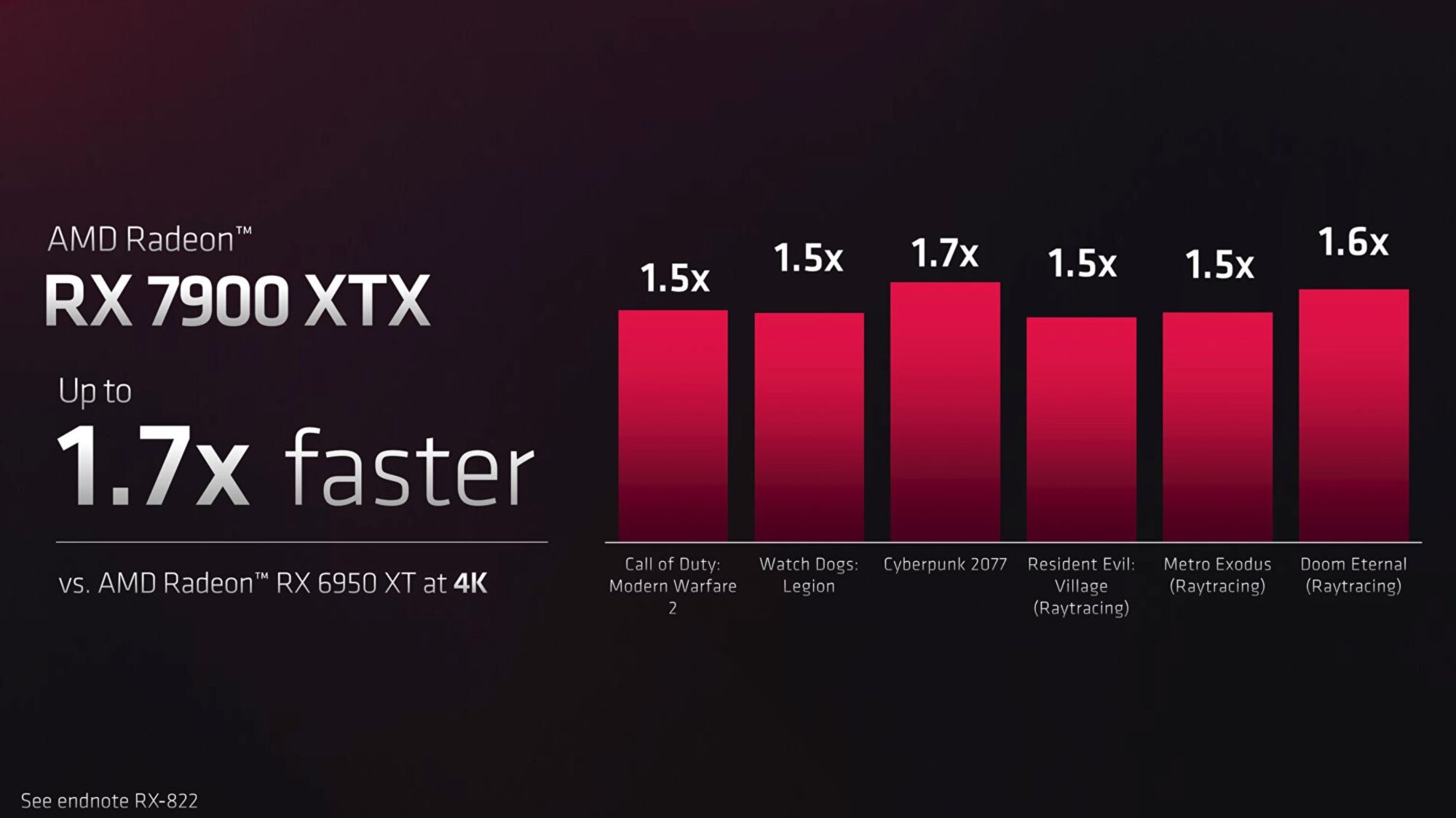

Yep. I noticed this on the slide that shows uplift compared to the 6950 XT.


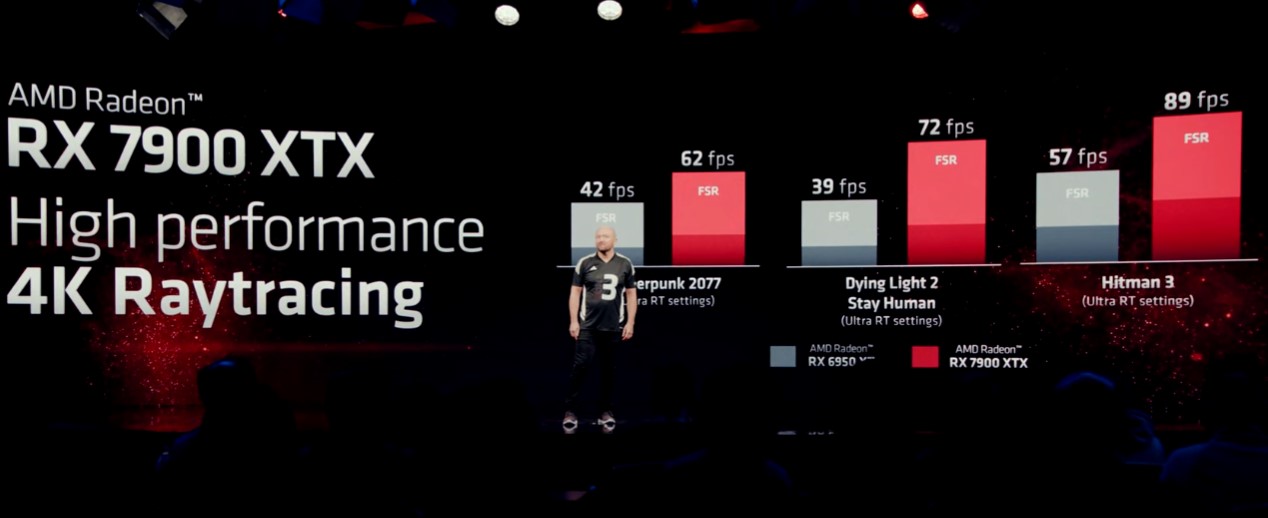

Doom Eternal and Metro Exodus (Enhanced Edition?) aren't super difficult to run with RT enabled. They're both very well optimized. BUT, Watch Dogs: Legion and Cyberpunk 2077 are super demanding RT titles, and AMD shows their uplift without RT. This should concern everyone; AMD is being very careful with their test scenarios for a reason.

For reference, an RTX 4090 is able to crush Doom Eternal and Metro Exodus Enhanced Edition at 4K maxed settings without DLSS enabled.

I agree it's obvious what they did there... and I don't like the stupid Apple-style bars. It's obvious their RT is improved but still not stellar.


Having said that, I still don't think many people really give a toss about RT yet.

The sad fact is that this list of 6 games pretty much sums up the RT titles on the market that aren't remasters or poker titles. I'll care about ray tracing when more than 100 games on the market can actually use it... which at the current pace won't be till 2024. Till then, RT is to GPUs in 2022/23 what TruForm was to GPUs with the 8500 in 2001-2004. Sure, tessellation eventually became ubiquitous and it's used in every game now. But the technology, although cool, was just not a selling feature at all for 4-5 GPU generations... because the number of games that used it could be counted on your physical human digits.

Twisted Kidney

2[H]4U

- Joined: Mar 18, 2013

- Messages: 4,094

As a builder of tiny PCs I'm a bit relieved to see those power numbers.

There's still a part of me that loves the challenge of an 800W PC in a 6-10L case.


jobert

[H]ard|Gawd

- Joined: Dec 13, 2020

- Messages: 1,575

It seems they improved RT performance over the 6950 XT by 1.5x to 1.6x in their best cases. The 4090 is over 2.5x faster than the 6950 XT in most ray tracing titles. Even the 4080 is going to poop all over the 7900xtx in ray tracing games.

jobert

[H]ard|Gawd

- Joined: Dec 13, 2020

- Messages: 1,575

Looking closer at TechPowerUp, the 4090 is just insane for ray tracing, except in Far Cry 6 for some reason.

Of the 8 ray tracing games there, the average increase over the 6950 XT was 2.3x. If you leave out the poor Far Cry 6 result, the overall increase is 2.6x.

I am guessing the 4090 will be at least 60% faster than the 7900xtx in most ray tracing titles at 4K.
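Here's a minimal sketch of how the "at least 60% faster" guess falls out of the uplift figures in this thread, treating both cards' RT results as multiples of the RX 6950 XT. The inputs are the thread's numbers: AMD's best-case 1.5x-1.6x for the XTX and TechPowerUp's 2.3x (all 8 games) or 2.6x (without Far Cry 6) for the 4090. `relative_speedup` is a hypothetical helper name:

```python
# Hypothetical helper: compare two cards whose RT results are both given as
# multiples of the same baseline card (the RX 6950 XT in this thread).
def relative_speedup(a_vs_base: float, b_vs_base: float) -> float:
    """Return how much faster card A is than card B (0.60 means 60% faster)."""
    return a_vs_base / b_vs_base - 1


# 4090 average RT uplift over the 6950 XT, per the TechPowerUp figures quoted above.
RTX4090_UPLIFT = {"all 8 games": 2.3, "excluding Far Cry 6": 2.6}
# AMD's best-case RT uplift for the 7900 XTX over the 6950 XT.
XTX_UPLIFT_LOW, XTX_UPLIFT_HIGH = 1.5, 1.6

for label, uplift in RTX4090_UPLIFT.items():
    # A higher XTX uplift means a smaller 4090 lead, so the bounds swap.
    smallest_lead = relative_speedup(uplift, XTX_UPLIFT_HIGH)
    largest_lead = relative_speedup(uplift, XTX_UPLIFT_LOW)
    print(f"{label}: 4090 about {smallest_lead:.0%} to {largest_lead:.0%} faster than the XTX")
```

Under these assumptions the 60% mark is only cleared when Far Cry 6 is excluded (roughly 62-73%); with all 8 games it comes out around 44-53%. Since AMD's 1.5x-1.6x are best cases, the real gap could be wider.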


Far Cry 6 barely has any ray tracing in it.

jobert

[H]ard|Gawd

- Joined: Dec 13, 2020

- Messages: 1,575

That can't be the only reason, as some of those other ray tracing titles have very few ray tracing effects.

Far Cry 6 uses a very broken approach in how they implemented the DX12 DXR libraries. I'd get into it, but I'm like 6 cans in and just can't. In Far Cry 6 they did some shady shit around how they generate shadows, and it absolutely tanks performance with no visual improvement. The only RT effect worth working with there is reflections, and even then... it's kinda minor.

Far Cry 6 is an AMD title, and the whole engine was tuned around AMD's FidelityFX tweaks; it shows on both console and PC.

d3athf1sh

[H]ard|Gawd

- Joined: Dec 16, 2015

- Messages: 1,232

AMD shows their uplift without RT. This should concern everyone

Anyone who cares about RT. We exist and could be a majority.

NightReaver

2[H]4U

- Joined: Apr 20, 2017

- Messages: 3,788

Could be, sure. Sometime in the future.

GDI Lord

Limp Gawd

- Joined: Jan 14, 2017

- Messages: 305

Definitely not today, though.

I try to care about it. Some games look nice, but in most games I have to look for it. Then someone shows me Cyberpunk without RT and I still think it’s on, so I feel stupid.

I feel like we're not yet at the point where games look like the demos, which do look nice with RT on.

On top of that, in multiplayer shooters etc. it’s pointless: no one cares about looking for RT, or they're dead.

Definitely not today though.

Could be, sure. Sometime in the future.

Some kind of typo in there.

The majority, including me, obviously don't give a rat's ass about RTX.

Rumored AIB models with higher voltage options should be about 15-20 percent faster:

The architecture was designed to run at 3GHz.

This is basically what I assumed this entire time. The VERY interesting part is just how much headroom the XTX will have in AIB partners' hands. Or, to get to the point: just how much it will close any and all gaps. Moving from 2.3 to 3 GHz, I assume no more than MAYBE a 15% uplift (more likely 10-12%). But that is a massive swing against any and all 4080s that will inevitably come down the pike, especially at this price point.

Let's assume a 3GHz Sapphire Toxic costs $1200. I don't think there is any 4080 that Nvidia can launch that will look favorable without a massive price drop and performance bump.

Speculation from Twitter user XpeaGPU (based on an AIB source in Shenzhen)

Bad news: Navi31 needs a respin to fix the issue preventing to reach the 3GHz target at reasonable power level. Hardware team failed this design. Good news: The problem has been identified and corrected for Navi32

RX 7900 XTX AIB cards with 3x8-PIN reaches 2.8GHz at 450W. Less than 5% perf uplift by this OC on synthetic benchmark (TSE) For now, cannot go above 2.9GHz, whatever the voltage, whatever the power, whatever the binned die, because of "instabilities"

https://twitter.com/XpeaGPU/status/1589071686493958145?s=20&t=DJdMZw2pVhx8Et8yzjtUbA

https://twitter.com/XpeaGPU/status/1589075249337085952?s=20&t=DJdMZw2pVhx8Et8yzjtUbA
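A rough way to compare the two clock claims above is "scaling efficiency": the performance gain divided by the clock gain, where 1.0 would mean performance scales perfectly with clock. This is a crude sketch; it assumes the 2.3 GHz baseline from the earlier post applies to both cases and ignores power, memory bandwidth, and the specific benchmark. `scaling_efficiency` is a hypothetical helper:

```python
def scaling_efficiency(base_ghz: float, new_ghz: float, perf_gain: float) -> float:
    """Fraction of a clock increase that shows up as performance (1.0 = perfect scaling)."""
    clock_gain = new_ghz / base_ghz - 1
    return perf_gain / clock_gain


BASE_GHZ = 2.3  # baseline clock used in the earlier forum post

# Forum guess: 2.3 -> 3.0 GHz for roughly 10-15% more performance.
for gain in (0.10, 0.15):
    eff = scaling_efficiency(BASE_GHZ, 3.0, gain)
    print(f"3.0 GHz, +{gain:.0%} perf -> {eff:.2f} scaling")

# Rumoured AIB result: 2.8 GHz for "less than 5%" in Time Spy Extreme (5% used as the upper bound).
print(f"2.8 GHz, +5% perf -> {scaling_efficiency(BASE_GHZ, 2.8, 0.05):.2f} scaling (at most)")
```

By this measure the forum guess of 10-15% at 3 GHz implies roughly 0.33-0.49 scaling, while the rumoured "less than 5%" at 2.8 GHz would be about 0.23 at most.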

crazycrave

[H]ard|Gawd

- Joined: Mar 31, 2016

- Messages: 1,878

I hate to play both sides, but it's why I have 2 rigs: an RX 6700, and an RTX 3070 for when RT matters.

Same. I have one with an RTX 4090 and one with a PS5.

NightReaver

2[H]4U

- Joined: Apr 20, 2017

- Messages: 3,788

If that's what works for you, so be it. My ownership of both brands has been 50/50.

I don't see what the big deal is, or what the hoopla is all about. You get basically bleeding-edge performance without all the super-bloated fantasy and magic buffoonery of DLSS. You get blistering raster perf, a much lower TDP, no melting power connector, and a lower price. What's the big problem?

I can see AMD sales crushing Nvidia on price alone. But fans love their boys in the end.

Anyways, I have a 6900 XT with a waterblock sitting in a box. I moved to a new place and decided to use my 6800H AMD/3070 Ti laptop for a long while. I may get an XTX and rebuild a desktop, but then my $2000 Lenovo Legion would not get used, so I'll pass and just enjoy my laptop with its smooth G-Sync connection to my Odyssey G7.

TaintedSquirrel

[H]F Junkie

- Joined: Aug 5, 2013

- Messages: 12,688

The relative performance of the XTX to the 4090 is worse than the 6900 XT was to the 3090. The raytracing gap is probably going to be even larger this gen. AMD themselves said the XTX competes with the 4080.

The hype behind the XTX is misplaced... People are just overreacting because "Nvidia bad". Same thing happened when the 6900 XT was announced. Once people calm down and see the benchmarks, it will fizzle out.

As for TDP, it's lower but the card is probably not more efficient... Power limit a 4090 to 355W, or overclock the XTX to 450W, and the 4090 will probably still win both scenarios (reminder: the 4080 16 GB is 320W). AMD made the smarter move since it allows them to use smaller heatsinks and keep the old power connectors.

The 7900 XT is a rebranded 7800 XT with a higher MSRP; it belongs in the garbage next to the 4080 12 GB.

The one thing working in AMD's favor is the 4080 16 GB's terrible pricing.


ZeroBarrier

Gawd

- Joined: Mar 19, 2011

- Messages: 1,011

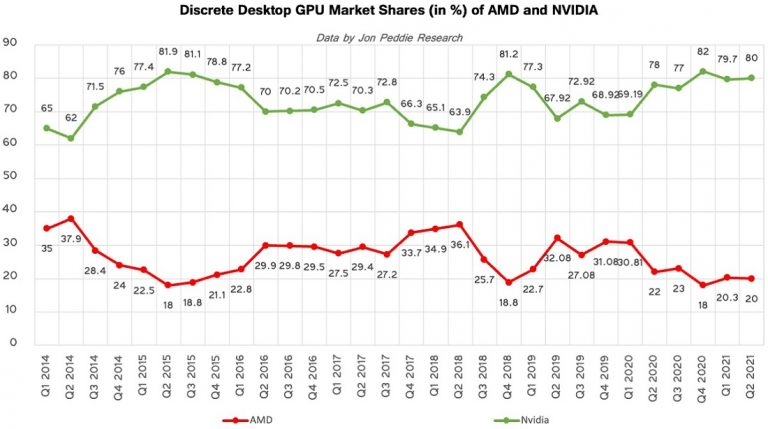

Hate to burst anyone's bubble, but let's wake up from fantasy land. AMD will not be crushing Nvidia's sales this gen; as always, they won't even come close.

Mindshare is a powerful thing.

It's tit for tat. I'm not talking about forever crushing them. It's just that this will be AMD's tat for Nvidia's tit, lol.

Product for product: even if Nvidia sells more cards than AMD, if AMD sells every card they make and Nvidia sells only half the cards they make, then AMD is the clear winner in this tit-for-tat cycle. Gross production volume doesn't always mean sales performance.

It's like this: if Remington makes 1 billion 9mm cartridges but only sells 500 million this year, while Winchester makes 500 million and sells 500 million, then Winchester beat Remington in sales performance in the same market space. As an investor, I'm going to put my money in Winchester because their sales performance is much higher.
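The Remington/Winchester analogy above is really about sell-through rate (units sold divided by units made). A minimal sketch using the analogy's hypothetical numbers; `sell_through` is an illustrative helper, not a formula from the post:

```python
def sell_through(units_sold: int, units_made: int) -> float:
    """Share of produced units that actually sold (1.0 = everything sold)."""
    return units_sold / units_made


# Hypothetical numbers from the Remington/Winchester analogy (9mm cartridges).
makers = {
    "Remington": {"made": 1_000_000_000, "sold": 500_000_000},
    "Winchester": {"made": 500_000_000, "sold": 500_000_000},
}

for name, n in makers.items():
    rate = sell_through(n["sold"], n["made"])
    unsold = n["made"] - n["sold"]
    print(f"{name}: sold {n['sold']:,}, sell-through {rate:.0%}, unsold {unsold:,}")
```

Both sell the same 500 million units; the difference is that Remington is left holding 500 million unsold cartridges, which is the inventory point the analogy is making.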

Axman

VP of Extreme Liberty

- Joined: Jul 13, 2005

- Messages: 17,296

Speculation from Twitter user XpeaGPU (based on an AIB source in Shenzhen)

Maybe for like configurations with locked drivers, but I'm willing to bet that (if they release them in this config) in 1-hi with extra cache it'll be well over 10 percent.

CAD4466HK

2[H]4U

- Joined: Jul 24, 2008

- Messages: 2,691

Jim weighs in.

Is AMD really on the path to glory?


I have been thinking this. This chip is so small when it comes to the GCD, and they can make a bigger chip. Also, did anyone notice that not once did AMD call it Navi31?

I think there is a reason for it. Maybe this time we are going to see a bigger refresh, not just something with higher clocks. They have a lot of room to play with.

Maybe something more refined and bigger in the second half of next year.

There were rumors about a bigger chip with more shaders. That might be it.

The fact that this GCD is only ~300mm² says they have a lot to work with.

Jim weighs in.

Is AMD really on the path to glory?

Lmao, I just linked this. He does have a point. They can easily have a bigger refresh. There were rumors about a chip with doubled shaders; they were calling it 16K. I think that fits, given that AMD is only mentioning the CUs.

They could make a 400-500mm² GCD and still be efficient with more experience on the node. They can really scale RDNA 3 on a refresh before even going to RDNA 4.

Improving raster and ray tracing even further.

Jim weighs in.

Is AMD really on the path to glory?

I think it's obvious they are on a path to beating Nvidia up, assuming they don't screw something up... OR Nvidia manages a switch to chiplets themselves. Reports are that their next gen will be monolithic as well.

AMD has reduced their development costs by an order of magnitude, not just production costs. Not only are these cheaper to fab... it will be much, much cheaper to design new generations. Reusing the 6nm bits for 2-3 generations is viable. They did it with Zen. They only really need a full redesign every couple of gens. They could get even more savings by fabbing the older-process bits all at once, ordering chips for 2 generations of GPUs and just warehousing them for a year.

I am really thinking this time we are going to see a slightly bigger 7950 XT. They can do a lot with the refresh. The rumors of a higher-core-count Navi might be about a bigger chip. I mean, the GCD is only ~300mm², so there's so much to work with there. They can have a big refresh even before RDNA 4.
