Join us on November 3rd as we unveil AMD RDNA™ 3 to the world!

Comixbooks

Fully [H]

- Joined

- Jun 7, 2008

- Messages

- 21,991

AMD still has some good GPU vendors, like XFX and more recently ASRock. I'm not sure of the quality of the cards they produce, but Nvidia is slim pickings.

riev90

Limp Gawd

- Joined

- Oct 21, 2022

- Messages

- 362

> AMD has some good GPU vendors still like XFX and recently ASROCK not sure of the quality of the cards they produce but Nvidia is slim picking.

I have tried these ASRock AMD cards:

RX 5500 XT Challenger

RX 6500 XT ITX

RX 6600 Challenger

They have above average quality in terms of build (heatsink, fan(s), shroud (plastic), with aluminium backplate).

The fans run at high-ish RPM even when force-limited to 70%, so they are not quite silent.

The GPU averages 65-75°C, with the hot spot at 80-90°C and the VRM at 75-85°C.

So pretty much comparable with Sapphire (Pulse), XFX, Asus (Dual series), Powercolor (fighter), and better than MSI (Mech & Armor) and Biostar.

Regarding Nvidia, they still have many famous AIB like Asus, Giga, MSI, Galax, Colorful.

funkydmunky

2[H]4U

- Joined

- Aug 28, 2008

- Messages

- 3,866

> AMD has been doing good on the features and software side recently. They're a bit later, but typically have stuff comparable to DLSS, G Sync, etc. Big down side at the moment is ray tracing performance.

That is why Nvidia is pushing DLSS 3.0 They know that is what AMD won't be able to touch (AMD will be chewing their ass on every other front including $$) despite throwing all previous RTX cards in the toilet as unable to support it. Typical Nvidia all cost for first place even when you abandon your own. WTF tensor cores? Ya could last a few gens?

LoL

> That is why Nvidia is pushing DLSS 3.0 They know that is what AMD won't be able to touch (AMD will be chewing their ass on every other front including $$) despite throwing all previous RTX cards in the toilet as unable to support it. Typical Nvidia all cost for first place even when you abandon your own. WTF tensor cores? Ya could last a few gens?
>
> LoL

Isn't dlss3 not really even dlss? Not fully edumacated on Ada yet

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

> Isn't dlss3 not really even dlss? Not fully edumacated on Ada yet

As far as I've seen it's pretty much frame interpolation.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 41,957

> The only difference is that it did pay off in the end when AMD did get better drivers. Also, wasn't AMD's DX10 cards superior to Nvidia's due to DX10.1? Intel just needs to hire Valve to make drivers for them because Valve seems to be doing a great job at it.

The "superior" case was only in one game (Assassin's Creed), and it turned out as the developer said that they accidentally skipped a rendering path in the D3D 10.1 implementation they said they would fix. Apparently, it couldn't be fixed since Ubisoft just removed 10.1 as an option. This "superior" performance never manifested in any other games that had a 10.1 rendering option.

schoolslave

[H]ard|Gawd

- Joined

- Dec 7, 2010

- Messages

- 1,293

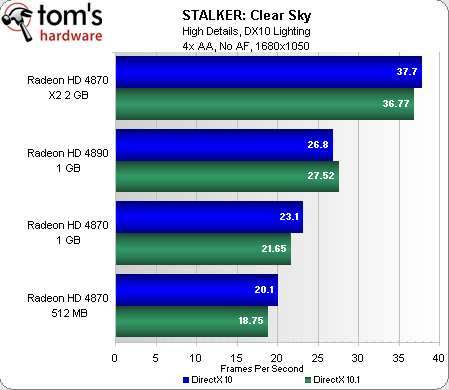

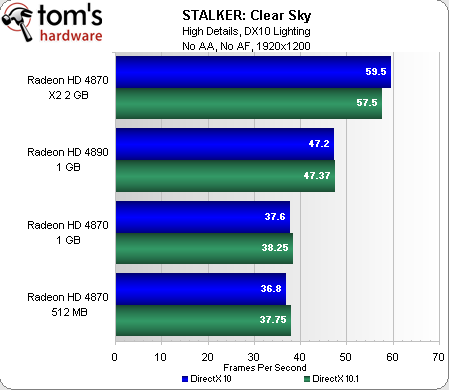

> The "superior" case was only in one game (Assassin's Creed), and it turned out as the developer said that they accidentally skipped a rendering path in the D3D 10.1 implementation they said they would fix. Apparently, it couldn't be fixed since Ubisoft just removed 10.1 as an option. This "superior" performance never manifested in any other games that had a 10.1 rendering option.

Wasn’t there also something similar with STALKER Clear Sky?

Thunderdolt

Gawd

- Joined

- Oct 23, 2018

- Messages

- 1,015

> That is why Nvidia is pushing DLSS 3.0 They know that is what AMD won't be able to touch (AMD will be chewing their ass on every other front including $$) despite throwing all previous RTX cards in the toilet as unable to support it. Typical Nvidia all cost for first place even when you abandon your own. WTF tensor cores? Ya could last a few gens?
>
> LoL

DLSS 2 is still current and is supported on every card which has RTX - that's three generations of cards. There are more 20-series cards in the field which support DLSS 2 than there are total AMD cards sold from the 20-series launch through today.

> The "superior" case was only in one game (Assassin's Creed), and it turned out as the developer said that they accidentally skipped a rendering path in the D3D 10.1 implementation they said they would fix. Apparently, it couldn't be fixed since Ubisoft just removed 10.1 as an option. This "superior" performance never manifested in any other games that had a 10.1 rendering option.

Except 10.1 was superior because it allowed developers to reuse depth buffers.... according to the developer there was ZERO image degradation. The only effect was a massive performance buff.

Why did they choose to remove 10.1 from the game.... HMMM let me think perhaps 10.1 was not the way it was meant to be played or something.

Interview with Ubisoft's tech lead at the time......

TR: Is this "render pass during post-effect" somehow made unnecessary by DirectX 10.1?

Beauchemin: The DirectX 10.1 API enables us to re-use one of our depth buffers without having to render it twice, once with AA and once without.

TR: What other image quality and/or performance enhancements does the DX10.1 code path in the game offer?

Beauchemin: There is no visual difference for the gamer. Only the performance is affected.

I mean I'm sure the bag full of Nvidia cash had ZERO to do with this BS at the time....

TR: What specific factors led to DX10.1 support’s removal in patch 1?

Beauchemin: Our DX10.1 implementation was not properly done and we didn’t want the users with Vista SP1 and DX10.1-enabled cards to have a bad gaming experience.

TR: Finally, what is the future of DX10.1 support in Assassin’s Creed? Will it be restored in a future patch for the game?

Beauchemin: We are currently investigating this situation.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 41,957

> Wasn’t there also something similar with STALKER Clear Sky?

Yes, but there was either no or a negligible uplift compared to D3D 10.0. There were not large gains to be had like the "Assassin's Creed" anomaly.

> Accept 10.1 was superior because it allowed developers to reuse depth buffers.... according to the developer there was ZERO image degradation. Only effect was a massive performance buff.

Second-to-last question says it wasn't done properly.

No denying there were good features in 10.1, as most of them were pushed forward into 11. People just like to look for excuses to say "NVIDIA bad." There are plenty examples of that as exposed on [H], so it seems like this is a weird one to grasp onto.

> Accept 10.1 was superior because it allowed developers to reuse depth buffers.... according to the developer there was ZERO image degradation. Only effect was a massive performance buff.

DX10.1 and its removal and general abandonment by the development community had more to do with Windows Vista than anything else.

But DX10 as a whole was pretty bad to actually use; 10.1 broke even more and required yet another code path. Developers would need to program for DX 9c, 10, and 10.1. It was a bad API and I still have nightmares from the few times we attempted to use it. DX9 was solid, and DX10/10.1 was convoluted on a wildly unpopular OS. DX11 learned a lot of what not to do from DX10 and 10.1.

But 10.1 itself was unstable and very difficult to develop with, it was incredibly finicky and things that should work just wouldn’t. But the times it wouldn’t were more related to what the system was doing elsewhere than what your code was doing. It was infuriating.

funkydmunky

2[H]4U

- Joined

- Aug 28, 2008

- Messages

- 3,866

> DLSS 2 is still current and is supported on every card which has RTX - that's three generations of cards. There are more 20-series cards in the field which support DLSS 2 than there are total AMD cards sold from the 20-series launch through today.

I don't think there should be any reason why previous versions of RTX couldn't support DLSS 3, even if to a lesser extent like the generations of RT. I think at this point in time backwards support would have really hurt the 40 series as the 3090/ti with DLSS 3 would have really crapped on their fancy graphs. You know the graph that got the 4080 12GB un-launched.

cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,074

TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,688

Hope prices are competitive, the retail GPU market is still fucked.

> Hope prices are competitive, the retail GPU market is still fucked.

If performance of top tier is in the ball park as Nvidia's, expect similar pricing. Market has shown it will pay it and possibly more.

> Those are pretty much the only 2 titles I ever see given to show the merits of RT. Yes, even on this very forum.

Agreed. Also the only two AAA titles I've had any interest in - finished Control (worth it), haven't fired up CP2077 yet.

> Metro: Exodus Enhanced Edition is the golden standard, since it is purely ray traced. Control is still hybrid rasterization+ray tracing.

I've never, ever, been able to get into a Metro game. And I loved stalker. Something about that engine just doesn't work with my brain - movement is all ~wrong~

> Lies. Most big new games come with raytracing, and your 6800xt has no DLSS plus suffers at 4k. Nice try.

Meh? (And before you go there, I've got plenty of both last/current generation cards - Ampere and RDNA2 - so no fanboy possible). FSR works fine, as does DLSS - arguably DLSS is a bit better, but since I'm not playing at 4k yet, I haven't needed it or FSR. As for RT - I'm with NightReaver. Tell me a can't-miss game that has it that makes a huge difference outside of Control or (arguably) Metro? CP2077 is supposedly "fine" now, but it's not what I'd put up there as can't miss. And for that matter, Control was still a good game without it when it came out. Good games are good games - RT or not.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 41,957

> I've never, ever, been able to get into a Metro game. And I loved stalker. Something about that engine just doesn't work with my brain - movement is all ~wrong~

It's the narrow default FOV. The default is 45 degrees vertical, which works out to 74 degrees horizontal on a 16:9 widescreen monitor. They upped it to 60 degrees vertical in Exodus by default, which is 90 degrees horizontal. Optimal vertical FOV would be 74 degrees.

> It's the narrow default FOV. The default is 45 degrees vertical, which works out to 74 degrees horizontal on a 16:9 widescreen monitor. They upped it to 60 degrees vertical in Exodus by default, which is 90 degrees horizontal. Optimal vertical FOV would be 74 degrees.

Huh. First time I've seen an answer for that. Lemme go reinstall that and give it a try - I assume there's a change possible?

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 41,957

> Huh. First time I've seen an answer for that. Lemme go reinstall that and give it a try - I assume there's a change possible?

https://www.pcgamingwiki.com/wiki/Metro_2033#Field_of_view_.28FOV.29

https://www.pcgamingwiki.com/wiki/Metro_2033_Redux#Field_of_view_.28FOV.29

https://www.pcgamingwiki.com/wiki/Metro:_Last_Light#Field_of_view_.28FOV.29

https://www.pcgamingwiki.com/wiki/Metro:_Last_Light_Redux#Field_of_view_.28FOV.29

https://www.pcgamingwiki.com/wiki/Metro_Exodus#Field_of_view_.28FOV.29

https://www.pcgamingwiki.com/wiki/Metro_Exodus_Enhanced_Edition#Field_of_view_.28FOV.29

A value of 60 will give you 90 hFOV in 16:9. 74 will give you 106 hFOV. You can go up to a maximum of 90, which is 120 hFOV at 16:9. There are no side effects from changing the FOV, as far as I know.
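For anyone who wants to check these numbers, here is a minimal sketch of the usual vertical-to-horizontal FOV conversion, assuming a standard perspective projection. The function name `vfov_to_hfov` is made up for illustration and is not anything from the game or the wiki. The results land within a degree or two of the figures quoted above, which look rounded.

```python
import math


def vfov_to_hfov(vfov_deg: float, aspect: float = 16 / 9) -> float:
    """Convert a vertical FOV in degrees to a horizontal FOV in degrees.

    Assumes a standard perspective projection, where
    tan(hFOV / 2) = aspect * tan(vFOV / 2).
    """
    half_v = math.radians(vfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_v) * aspect))


if __name__ == "__main__":
    # The vertical values mentioned in this thread, at 16:9.
    for v in (45, 60, 74, 90):
        print(f"vFOV {v} -> hFOV {vfov_to_hfov(v):.1f} at 16:9")
```

Running it gives roughly 73, 91, 107 and 121 degrees horizontal for 45, 60, 74 and 90 vertical.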

> A value of 60 will give you 90 hFOV in 16:9. 74 will give you 106 hFOV. You can go up to a maximum of 90, which is 120 hFOV at 16:9. There are no side effects from changing the FOV, as far as I know.

Since I barely pay attention (obviously), what's a "normal" FOV for most games? assume 16:9.

TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,688

> Since I barely pay attention (obviously), what's a "normal" FOV for most games? assume 16:9.

Most people use 90-100 horizontal fov.

cjcox

2[H]4U

- Joined

- Jun 7, 2004

- Messages

- 2,933

The bad thing about "AMD Announcements"... the let down. Because, by now, we believe it will be 4x faster than a 4090, the size of a small matchbook and actually reduce the overall temperature of your computer by 10C.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 41,957

> Since I barely pay attention (obviously), what's a "normal" FOV for most games? assume 16:9.

Using 90 hFOV as a baseline for 4:3, 74 vFOV would be considered "normal." Most games these days seem to use a hFOV of 80-90 in 16:9 resolutions, which is 50-60 vFOV.
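The same relationship runs the other way, from horizontal to vertical. This is a rough sketch assuming a standard perspective projection; `hfov_to_vfov` is a hypothetical helper name, not an API from any game or engine.

```python
import math


def hfov_to_vfov(hfov_deg: float, aspect: float) -> float:
    """Convert a horizontal FOV in degrees to a vertical FOV in degrees.

    Assumes a standard perspective projection, where
    tan(vFOV / 2) = tan(hFOV / 2) / aspect.
    """
    half_h = math.radians(hfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_h) / aspect))


if __name__ == "__main__":
    # 90 hFOV on a 4:3 display, the baseline mentioned in the post.
    print(f"4:3,  hFOV 90 -> vFOV {hfov_to_vfov(90, 4 / 3):.1f}")
    # The 80-90 hFOV range at 16:9.
    for h in (80, 90):
        print(f"16:9, hFOV {h} -> vFOV {hfov_to_vfov(h, 16 / 9):.1f}")
```

90 hFOV at 4:3 comes out at about 74 vFOV, and 80-90 hFOV at 16:9 comes out at about 50-59 vFOV, in line with the ranges in the post.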

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

> The bad thing about "AMD Announcements"... the let down. Because, by now, we believe it will be 4x faster than a 4090, the size of a small matchbook and actually reduce the overall temperature of your computer by 10C.

....what?

> I don't think there should be any reason why previous versions of RTX couldn't support DLSS 3, even if to a lesser extent like the generations of RT. I think at this point in time backwards support would have really hurt the 40 series as the 3090/ti with DLSS 3 would have really crapped on their fancy graphs. You know the graph that got the 4080 12GB un-launched

It can, it just works very poorly when you are either CPU or GPU bound. The lack of dedicated hardware for optical flow would cause a staggering decrease in FPS.

You are welcome to experiment with how your 900, 1000, 2000 or 3000 series can handle it at a software level.

https://developer.nvidia.com/opticalflow-sdk

The optical flow SDK has been around for a long while now, but it has been limited in its practical usage by how long it takes to process images.

Where I think optical flow can really shine is VR, what if it’s used to alternate the real render image for each eye, so say the left eye is generating a real frame every odd number and the right every even, so every frame has at least 1 real image but then you alternate the eye that the generated frame is displayed on. Would that still have a big increase in latency? Because with the normal generated frame it essentially can’t take input on that frame but in this case for VR every time a pair of frames is displayed one would be real.

> Most people use 90-100 horizontal fov.

> Using 90 hFOV as a baseline for 4:3, 74 vFOV would be considered "normal." Most games these days seem to use a hFOV of 80-90 in 16:9 resolutions, which is 50-60 vFOV.

Gratzi! Maybe I'll finally like it!

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,011

> ....what?

He's pointing out how AMD GPUs are overhyped before release. Way too often do the Radeon rumors over-promise and under-deliver. AMD themselves have over-hyped their next release just to fall flat on their faces, lest we forget the Radeon Rebellion.

> He's pointing out how AMD GPUs are overhyped before release. Way too often do the Radeon rumors over-promise and under-deliver. AMD themselves have over-hyped their next release just to fall flat on their faces, lest we forget the Radeon Rebellion.

Part of me believes that Nvidia pays people to overhype AMD cards so that they are always met with some degree of disappointment when they are actually launched.

Would be pretty cool if they can compete on a level playing field with NVIDIA for once. Or even … ahead … for once. At least in the GPU department.

AMD will likely claim that it can like always and fall short, just like always.

cjcox

2[H]4U

- Joined

- Jun 7, 2004

- Messages

- 2,933

> AMD will likely claim that it can like always and fall short, just like always.

Strange thing is, this time, they don't have to be totally "on par", they can be off (typical), and provide a smaller and less power demanding card and actually "wins" sanity wise. There's a bit more at stake this time around apart from performance.

Tagline: AMD Radeon, the card you can use today.

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,011

> Part of me believes that Nvidia pays people to overhype AMD cards so that they are always met with some degree of disappointment when they are actually launched.

I can believe it, and in my mind it works both ways. Part of me believes this whole melting connector might be sponsored by team red to be completely honest. The fact that multiple technical people have tried to intentionally fuck with the connector and not a single one has been able to even get a spark let alone melt the plastic speaks volumes to me.

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,011

> Strange thing is, this time, they don't have to be totally "on par", they can be off (typical), and provide a smaller and less power demanding card and actually "wins" sanity wise. There's a bit more at stake this time around apart from performance.
>
> Tagline: AMD Radeon, the card you can use today.

Have to disagree. Mind share really works by being top dog over and over. The 6900XT was a beast of a card, and yet collectively the 3090 was the mind share winner no matter how you slice it.

> AMD will likely claim that it can like always and fall short, just like always.

AMD will deliver in all the ways it needs to, I am sure the raster performance will be awesome, and the power-to-performance curve will be great. But AMD does not have the resources to compete with Nvidia on feature sets and stability, Nvidia has huge teams of software and hardware engineers pioneering research in fields that AMD doesn't even bother with and that trickledown is what helps keep them in the lead.

> Have to disagree. Mind share really works by being top dog over and over. The 6900XT was a beast of a card, and yet collectively the 3090 was the mind share winner no matter how you slice it.

It was a really good card that still lost out to the 3080 which all things considered was the "cheaper" option. But the last gen was a wash nothing was really available so the good card was the one you could actually get.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

> He's pointing out how AMD GPUs are overhyped before release. Way too often do the Radeon rumors over-promise and under-deliver. AMD themselves have over-hyped their next release just to fall flat on their faces, lest we forget the Radeon Rebellion.

Were the Navi cards over hyped? I don't remember much about the 5700xt marketing.

Idk, last couple of releases seemed sensible.

> Were the Navi cards over hyped? I don't remember much about the 5700xt marketing.
>
> Idk, last couple of releases seemed sensible.

The hype up to the launch of the 5000 series GPUs was pretty intense and while they didn't fall on their faces when they did launch there were a lot of unhappy people posting about how AMD failed to deliver and didn't meet their own expectations then either. It's been a recurring theme for AMD's GPU launches for a while especially when comparing them against the xx80 class and up.

- Joined

- May 18, 1997

- Messages

- 55,596

Bottom line is that AMD will sell every GPU it makes. Guaranteed.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

> The hype up to the launch of the 5000 series GPUs was pretty intense and while they didn't fall on their faces when they did launch there were a lot of unhappy people posting about how AMD failed to deliver and didn't meet their own expectations then either. It's been a recurring theme for AMD's GPU launches for a while especially when comparing them against the xx80 class and up.

Tbh a lot of people believed (and still believed) that the 5700xt was supposed to compete with the 2080.

The 6000 series competed just fine.

> Tbh a lot of people believed (and still believed) that the 5700xt was supposed to compete with the 2080.
>
> The 6000 series competed just fine.

The last gen was a total shit show and there was no stable price/performance metric to measure off of so the only measurement method really was "does it work for you at that price", because it's not like you couldn't buy the cards at any point it was just a matter of paying for them at specific points.
