Rockenrooster

Gawd · Joined Apr 11, 2017 · Messages 955

Imagine a 5950X3D....

They would have to bin the living crap out of it though..

> Imagine a 5950X3D....
>
> They would have to bin the living crap out of it though..

There wouldn't really be any gains. The cache in a 5950x is split between the CCXs, and it's likely they would also have to do two separate cache stacks, one for each CCX.

> Idk, I don't get the problem some have with this CPU. Wouldn't be the 1st time a company really dialed in a cpu for a specific workload. If it happens to be the best at gaming so be it.

Not often that there is any bad product from those types of companies, only a bad price. With the 5800x at $339, the 5900x at $394, and the 5950x at $536, the $450 5800x3d is not that interesting to some.

> Not often that there is any bad product from those types of companies, only a bad price. With the 5800x at $339, the 5900x at $394, and the 5950x at $536, the $450 5800x3d is not that interesting to some.
>
> At $390 all of a sudden no one would have anything bad to say I imagine.
>
> Could be more to it too, but pricing is often what shifts perception.

Heh. Where's all the people who will pay $500 more for 2% more performance in games if it's a video card?

> Not often that there is any bad product from those types of companies, only a bad price. With the 5800x at $339, the 5900x at $394, and the 5950x at $536, the $450 5800x3d is not that interesting to some.
>
> At $390 all of a sudden no one would have anything bad to say I imagine.
>
> Could be more to it too, but pricing is often what shifts perception.

Yeah, I mean I get that. It's pricey. But if it's "the best", then I know certain people will pay a premium for it.

Heh. Where's all the people who will pay $500 more for 2% more performance in games if it's a video card?

> Milan-X proves the increased cache even on multiple CCXs pays dividends. Just not with gaming. Random I/O workloads like virtualization will see it.

A server with many VMs will see big improvements from the larger cache; I’m holding off on an upgrade especially for them.

Ya know, the extra e cores of the 12700k will pay dividends as well...

> Ya know, the extra e cores of the 12700k will pay dividends as well...

Yep, as heat sinks for the George Foreman Grill p-cores…

But these are power consumption numbers for Prime95, where's the chart comparing power consumption numbers when gaming?

> Don't have one. They have superpi, prime95, and cinebench. Oh, and system idle power, which is just a bit lower for the x3d.

Computerbase did measure 12900KS power consumption during gaming, and it was quite high (no 5800X3D numbers yet as they stick to NDA).

> Yep, as heat sinks for the George Foreman Grill p-cores…

The 12700k is just as efficient as the 5800x3d.

> The 12700k is just as efficient as the 5800x3d.

He's not saying it's not efficient, he's saying the "efficiency" cores are only good for pulling heat away from the p-cores. Part tongue in cheek, I'm sure, but they do do that. They also take load from lower priority processes off of the p-cores, allowing them to sleep more, improving efficiency...when they're being used as intended, anyway. They don't directly help framerate or frametime, but can help indirectly when there are background processes that would otherwise get in the way.

First review for the 5800x3D out. A big meh from me.

> Heh. Where's all the people who will pay $500 more for 2% more performance in games if it's a video card?

Where's the video card that is a $500 upgrade but offers only 2% more performance? In EVGA's lineup, for example, $510 takes you from a $489 3060 Ti FTW3 to a $999 3080 FTW3. That's a 50% jump in performance using real games at real resolutions. Maybe the gap is smaller when using 32x32 pixel AMD benchmarks.
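For anyone who wants to put numbers on the argument, here is a rough Python sketch of the marginal cost per percentage point of extra performance. The helper name `cost_per_percent` is made up for illustration. The $489 and $999 prices and the 50% uplift are the figures claimed in the post above, and the $500-for-2% case is the hypothetical one it is replying to, not a measured result.

```python
def cost_per_percent(price_low, price_high, perf_gain_pct):
    """Dollars paid per percentage point of extra performance (hypothetical helper)."""
    if perf_gain_pct <= 0:
        raise ValueError("performance gain must be positive")
    return (price_high - price_low) / perf_gain_pct


# EVGA example from the thread: $489 3060 Ti FTW3 -> $999 3080 FTW3, claimed ~50% faster.
evga_step = cost_per_percent(489, 999, 50)

# Hypothetical case from the thread: $500 more for 2% more performance.
hypothetical_step = cost_per_percent(0, 500, 2)

print(f"EVGA step:         ${evga_step:.2f} per percent")          # $10.20 per percent
print(f"Hypothetical step: ${hypothetical_step:.2f} per percent")  # $250.00 per percent
```

On those assumed inputs the two cases differ by more than an order of magnitude, which is the gap the post is pointing at.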

> He's not saying it's not efficient, he's saying the "efficiency" cores are only good for pulling heat away from the p-cores. Part tongue in cheek, I'm sure

Der8auer did a video a while back, playing with just the e cores, and said they're actually pretty impressive for what they are:

> Where's the video card that is a $500 upgrade but offers only 2% more performance?

https://www.lmgtfy.app/#gsc.tab=0&gsc.q=hyperbole

> Where's the video card that is a $500 upgrade but offers only 2% more performance? In EVGA's lineup, for example, $510 takes you from a $489 3060 Ti FTW3 to a $999 3080 FTW3. That's a 50% jump in performance using real games at real resolutions. Maybe the gap is smaller when using 32x32 pixel AMD benchmarks.

https://www.tomshardware.com/news/nvidia-geforce-rtx-3080-ti-review

> He's probably referring to the 3090 and 3080Ti "real world" prices vs. MSRP. Some of those price jumps were huge for minimal gains. Things are normalizing, but a lot of that had to do with simply buying whatever you could get your hands on.

Anyone not buying at MSRP today is a sucker. The shortage is over. EVGA was showing both cards I mentioned as in stock when I posted. There is so much inventory available that the 3060 Ti is even on sale at a discount direct from EVGA.

> He's probably referring to the 3090 and 3080Ti "real world" prices vs. MSRP.

Well, I kinda just made up numbers. But there's certainly people here who will buy the top card because it's the fastest one out there, without any regard for any kind of price-to-performance ratio (and that's fine). So for anyone like that to complain about the price-to-performance of the 5800x3d would be silly, assuming there was anyone that met all those criteria.

LTT's review is out.

Spoilers:

As expected, real world performance does not align with AMD's benchmarks except that it is much faster than AMD's previous best. And this second place gaming effort comes with a substantial cost to everything other than gaming.

> Well, I kinda just made up numbers. But there's certainly people here who will buy the top card because it's the fastest one out there, without any regard for any kind of price-to-performance ratio (and that's fine). So for anyone like that to complain about the price-to-performance of the 5800x3d would be silly, assuming there was anyone that met all those criteria.

Think about it this way:

> The $450 5800X3D offers a performance boost only at 720p and below.

Ah, no. "The AMD Ryzen 7 5800X3D primarily focuses its marketing on gaming advancements, which is because the additional L3 Cache layer on the X3D will mostly be visible in gaming scenarios. Impact to certain production applications, like rendering in Cycles (Blender) or Adobe Premiere, will be limited more by frequency and core count than by cache. That said, gaming often benefits from extra cache, and we see that here. The R7 5800X3D puts the brand new Intel i9-12900KS to shame for value, and although the 5800X3D can’t be overclocked, it also doesn’t really need it. Memory tuning is still available, as is Infinity Fabric tuning, and that’s more important for AMD anyway."

> you're buying into a dead platform if you build anything Intel.

Fixed for you

> Glancing at the various video reviews and most print reviews, everyone keeps showing 1080p.
>
> At least Guru shows some #'s for higher resolutions. Not sure their game choices are the best, but at least it's something.
>
> https://www.guru3d.com/articles_pages/amd_ryzen_7_5800x3d_review,24.html

Considering you will be more GPU bound at 1440p/4k, those resolutions would not show how much more performance a CPU offers, since all the CPUs would get right around the same framerate.

> He's not saying it's not efficient, he's saying the "efficiency" cores are only good for pulling heat away from the p-cores. Part tongue in cheek, I'm sure, but they do do that. They also take load from lower priority processes off of the p-cores, allowing them to sleep more, improving efficiency...when they're being used as intended, anyway. They don't directly help framerate or frametime, but can help indirectly when there are background processes that would otherwise get in the way.

Basically this! There are some specifics you can assign them, but that requires the direct intervention of the developers, which will take a few years to work in. Hopefully AMD gets its own big.LITTLE designs into the field, but that's not terribly likely, and depending on what they choose to do for their future designs it may not be needed as much. AMD doesn't have nearly the same presence in the OEM space as Intel, so the EU and California regulations don't impact them to nearly the same degree, and in the laptop space their chips are already efficient enough, for now at least.

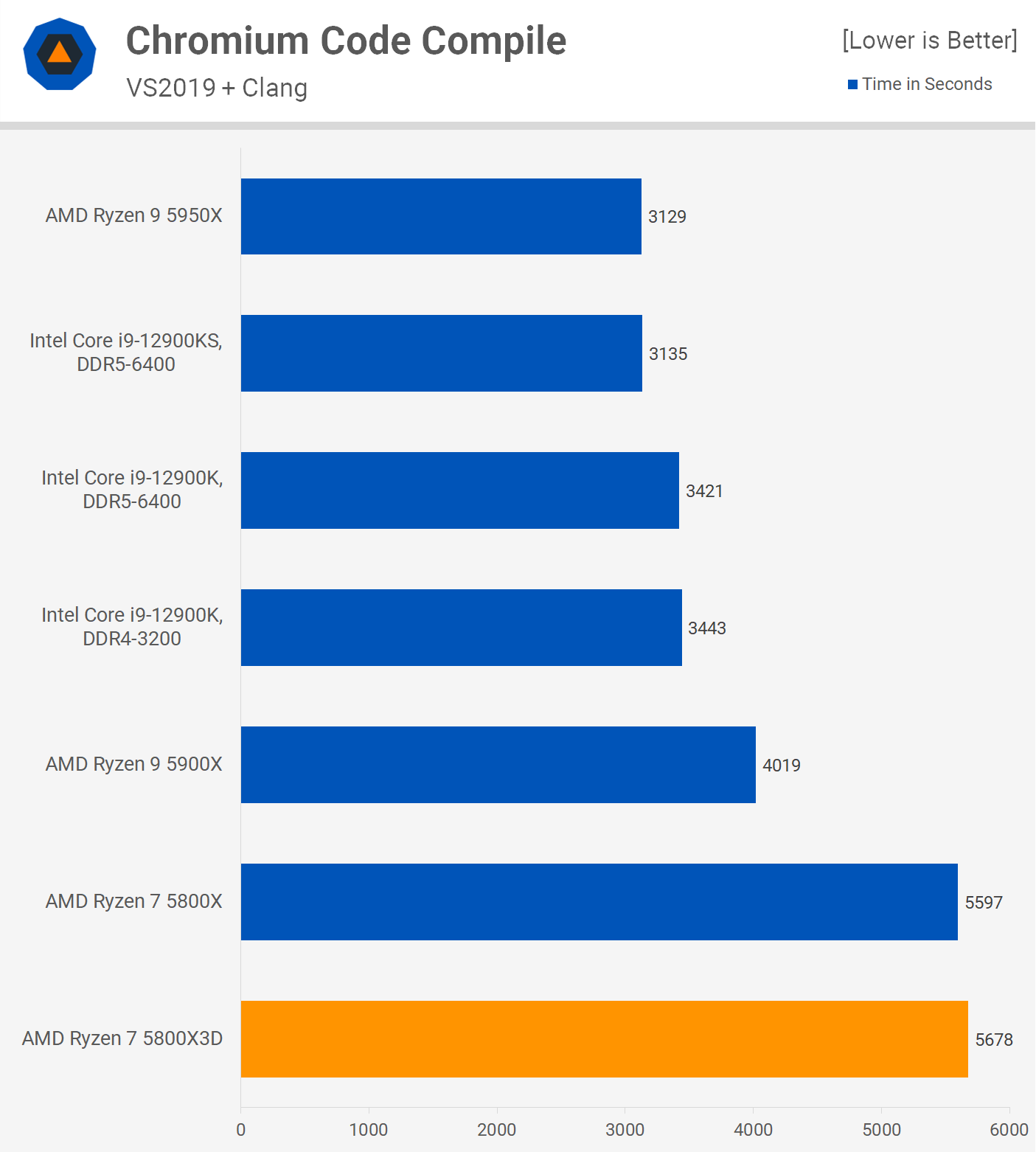

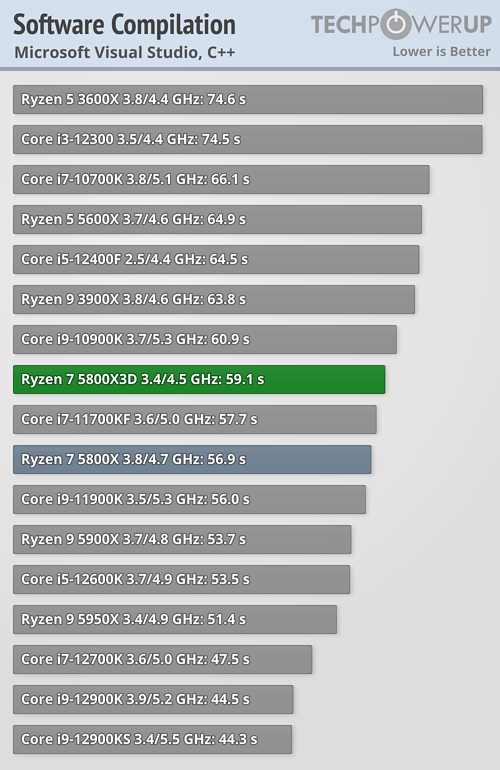

> I'm more interested in how this thing does with code compilation, and in garbage collected languages at runtime. I do not expect a big jump, but didn't see any load-bearing numbers yet.

Would be hard to go faster than the cheaper 5900 if your compilation workload is quite parallelized (not sure if they count as load-bearing):