Amazon’s New World game is bricking GeForce RTX 3090 graphics cards

I'm going to keep away from this beta. I usually use the driver to cap my games at 145 fps and keep my GPU locked at 59% power. In benchmarking I've been able to pull 494 W out of my 3090. My old i7 board had an extra PCIe power connector that let cards which asked for it draw more power through the slot; on my current X570 board it only peaks at 480 W.

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

Honestly, I think the game is just bricking a bunch of shit because of how demanding it is anyways. Besides how poorly designed the power delivery is for some of these more recent cards, you've also just got a pretty typical scenario where a big new demanding game is killing hardware that was likely going to die at some point anyways. Most of the CryEngine based games have always done this. ARK killed a lot of stuff as well.

reddit thread on cards bricking

Looks like it's bricking a huge range of cards. Some that I've seen gamers report complete bricking on:

GTX 970

980 Ti

2070

2080 Ti

3090 (dozens of EVGA FTW3, several Gigabyte, Asus, MSI)

Vega 56

Vega 64

Other than hardware being bricked, the game is causing hard resets on essentially all GPU architectures, from the 900 series to the 3000 series and from Vega to the 6000 series.

This is absolutely nuts.

German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,943

Yeah, it pretty much is definite. The driveshaft will break or the diff will scatter. This is why aftermarket driveshafts and diff braces are some of the first mods done to them.

There is more than one Hellcat, and no, it's not definite that you will break the driveshaft with drag slicks. That sounds like some story you'd hear from people who abuse their cars like idiots. I recall hearing something similar, but then again that can indeed happen if you go around driving the car like a madman on a track (say, jumps).

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,920

At this point I think it has enough media attention that anyone with a dead graphics card is going to report it. Looking at that Reddit thread, only one person with a Vega card (a Vega 64) had an issue, and he was able to get it working again once it cooled down. These cards are old enough that I bet they have a lot of dust on them. There's nothing in that Reddit thread about a dead GTX 970, but lots about EVGA 980 Tis and EVGA 1080s. Why do I feel like EVGA has been allowing these cards to run past their power limit for a long time, and now this game, New World, is exposing the practice?

reddit thread on cards bricking

oldmanbal

2[H]4U

- Joined

- Aug 27, 2010

- Messages

- 2,613

Well from tests people have run, it looks like a few things are happening:

The game is, inconceivably, able to override your system settings and push power well beyond the card's max running specs. (This literally should not be possible or allowed. There's a reason video card tuning utilities lock power limits and voltage above a certain point: too much juice = death.) The result is massive power spikes running through the GPU that fry internal components. Some video cards have less effective means of enforcing a hard upper limit on how much power is delivered, and on the intervals over which it is delivered. Additionally, when these extraordinary loads get forced through inadequate components on the card, any flaw from the design or manufacturing process fails instantly, and you get a scenario like the EVGA 3090 FTW3 cards, which clearly have design faults in their power delivery systems.

Separately: some cards will simply die when pushed too hard due to manufacturing defects unrelated to product design. This is pretty common and explains why you get some random reports of cards dying while playing a certain demanding game. However, those are just due to errors at the factory and aren't repeatable across the entire product line. They don't happen because a game is running the card beyond its hard upper power limits, as was the case with NEW WORLD.

Why a game studio would enable a setting that forces GPUs significantly beyond their locked upper power limits is completely beyond comprehension.
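To illustrate the point about how cards set the hard upper limit "and the intervals for that to be delivered": a limiter that only looks at power averaged over a window can let short spikes far above the limit straight through. This is a toy model, not any vendor's firmware; the sample values, the 350 W limit, and the window lengths are made up, and nothing in this thread establishes how any particular card actually samples or averages its power readings.

```python
from collections import deque


def limiter_trips(samples_w, limit_w, window):
    """Toy power limiter: return the sample indices at which the moving
    average of the last `window` power readings exceeds `limit_w`.

    Hypothetical model for illustration only; real cards use
    vendor-specific firmware with their own sampling and averaging rules.
    """
    recent = deque(maxlen=window)  # keeps only the newest `window` readings
    trips = []
    for i, watts in enumerate(samples_w):
        recent.append(watts)
        average = sum(recent) / len(recent)
        if average > limit_w:
            trips.append(i)
    return trips


# Made-up trace: nine samples at 300 W, then a one-sample spike to 700 W,
# repeated three times.
trace = ([300] * 9 + [700]) * 3

print(max(trace))                                    # peak draw: 700
print(limiter_trips(trace, limit_w=350, window=10))  # long window: []
print(limiter_trips(trace, limit_w=350, window=1))   # per-sample: [9, 19, 29]
```

With the 10-sample window the average never rises above 340 W, so this limiter never reacts even though the card repeatedly hits 700 W; checking every sample catches each spike.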

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

If you're hitting the set FPS limit in NVCP the card isn't going to stay fully powered/clocked. It only runs what it needs to hit the target.
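A rough sketch of why a frame cap lowers load: once a frame finishes early, the limiter waits out the rest of the frame budget instead of immediately starting the next frame, so the GPU sits idle for part of every frame. This is a generic Python illustration, not how the NVCP limiter is actually implemented; `run_capped` and `render_frame` are hypothetical names standing in for the game loop and its per-frame work.

```python
import time


def run_capped(render_frame, target_fps, num_frames):
    """Run `render_frame` num_frames times without exceeding target_fps.

    Returns the wall-clock duration of each frame (work + idle time).
    """
    frame_budget = 1.0 / target_fps  # seconds allowed per frame
    durations = []
    deadline = time.perf_counter()
    for _ in range(num_frames):
        start = time.perf_counter()
        render_frame()                 # hypothetical per-frame work
        deadline += frame_budget
        remaining = deadline - time.perf_counter()
        if remaining > 0:
            time.sleep(remaining)      # idle out the rest of the budget
        else:
            deadline = time.perf_counter()  # running behind: don't try to catch up
        durations.append(time.perf_counter() - start)
    return durations


# A frame that does almost no work still takes ~1/100 s when capped at 100 FPS.
frames = run_capped(lambda: None, target_fps=100, num_frames=10)
print(f"average frame time: {sum(frames) / len(frames) * 1000:.1f} ms")
```

With a 145 fps cap like the one mentioned at the top of the thread, the budget is about 6.9 ms per frame; any of that time not spent rendering is idle time.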

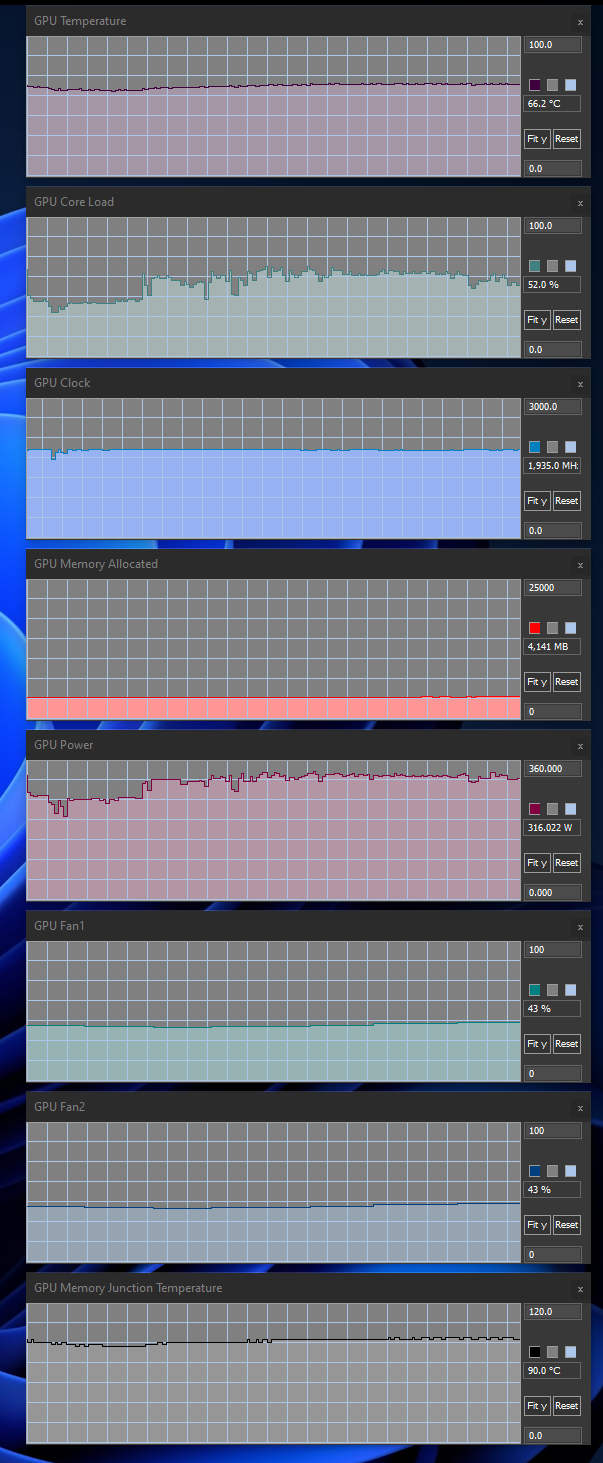

I think he is saying it's odd that the card is close to its full load as far as wattage goes, yet only at 50% GPU load.

If you're hitting the set FPS limit in NVCP the card isn't going to stay fully powered/clocked. It only runs what it needs to hit the target.

Yeah, of course, that's how it always works... except for this game. Look at the clock speed, temp (which again is the highest I've ever seen on this card) and power draw, all while at only 50% load.

If you're hitting the set FPS limit in NVCP the card isn't going to stay fully powered/clocked. It only runs what it needs to hit the target.

Yup, just seems strange.

I think he is saying it's odd that the card is close to its full load as far as wattage goes, yet only at 50% GPU load.

OutOfPhase

Supreme [H]ardness

- Joined

- May 11, 2005

- Messages

- 6,577

I really want to see the post-mortem on this to see what exactly allowed it to bypass all the safety limits.

next-Jin

Supreme [H]ardness

- Joined

- Mar 29, 2006

- Messages

- 7,387

Yeah, of course, that's how it always works... except for this game. Look at the clock speed, temp (which again is the highest I've ever seen on this card) and power draw, all while at only 50% load.

Yup, just seems strange.

Yeah, Jayz2cents had a video where he was actually showing that and explaining how incredibly weird it is (on the EVGA card). He did not experience it with other cards.

IIRC he was getting something like 10-20 W more than what was set in Afterburner, and almost 100% power usage at 60% load, or something strange like that.

I guess it could also be a faulty part on the cards, not necessarily a design fault. You'd think a design fault would have way more people complaining, and it could show up with other manufacturers too if they used a standard design template.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,548

Yeah, it pretty much is definite. The driveshaft will break or the diff will scatter. This is why aftermarket driveshafts and diff braces are some of the first mods done to them.

If you don't know how to launch a car, then yes, you can tear all that up. Losing traction and then having the tires suddenly grip after spinning like that, multiple times, will shatter most rear ends or drivelines.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,548

You would think the Nvidia driver would stop this from happening; hopefully they get the glitch fixed shortly.

Haven't really run into any issues with a 3090 FE. It hits about the same 350 W power use that most games do. The only thing that seems to get hot is the memory, but that seems to happen in all MMORPGs for some reason (Guild Wars 2 gets the memory very hot as well).

The only kinda weird spot is that it maxes out the card while waiting in the queue.

German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,943

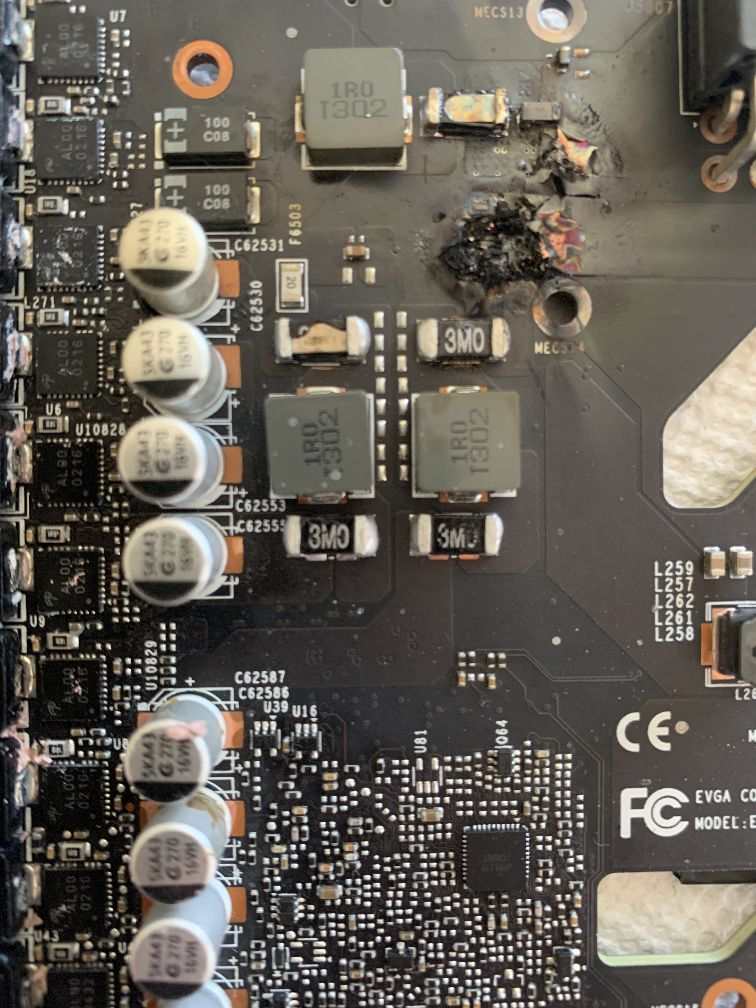

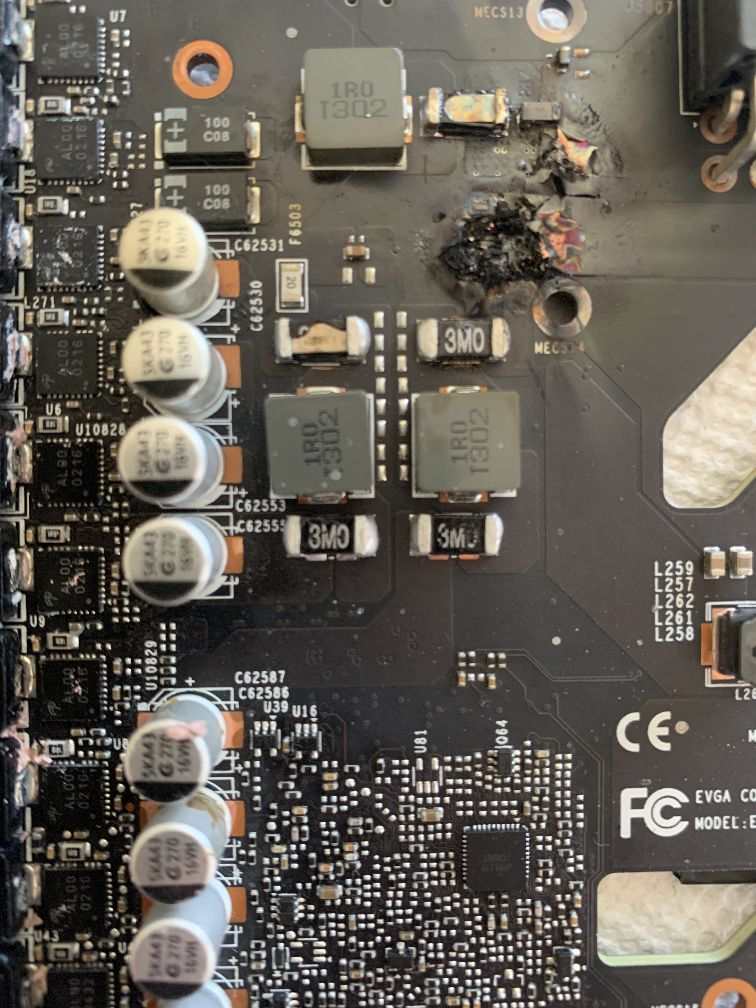

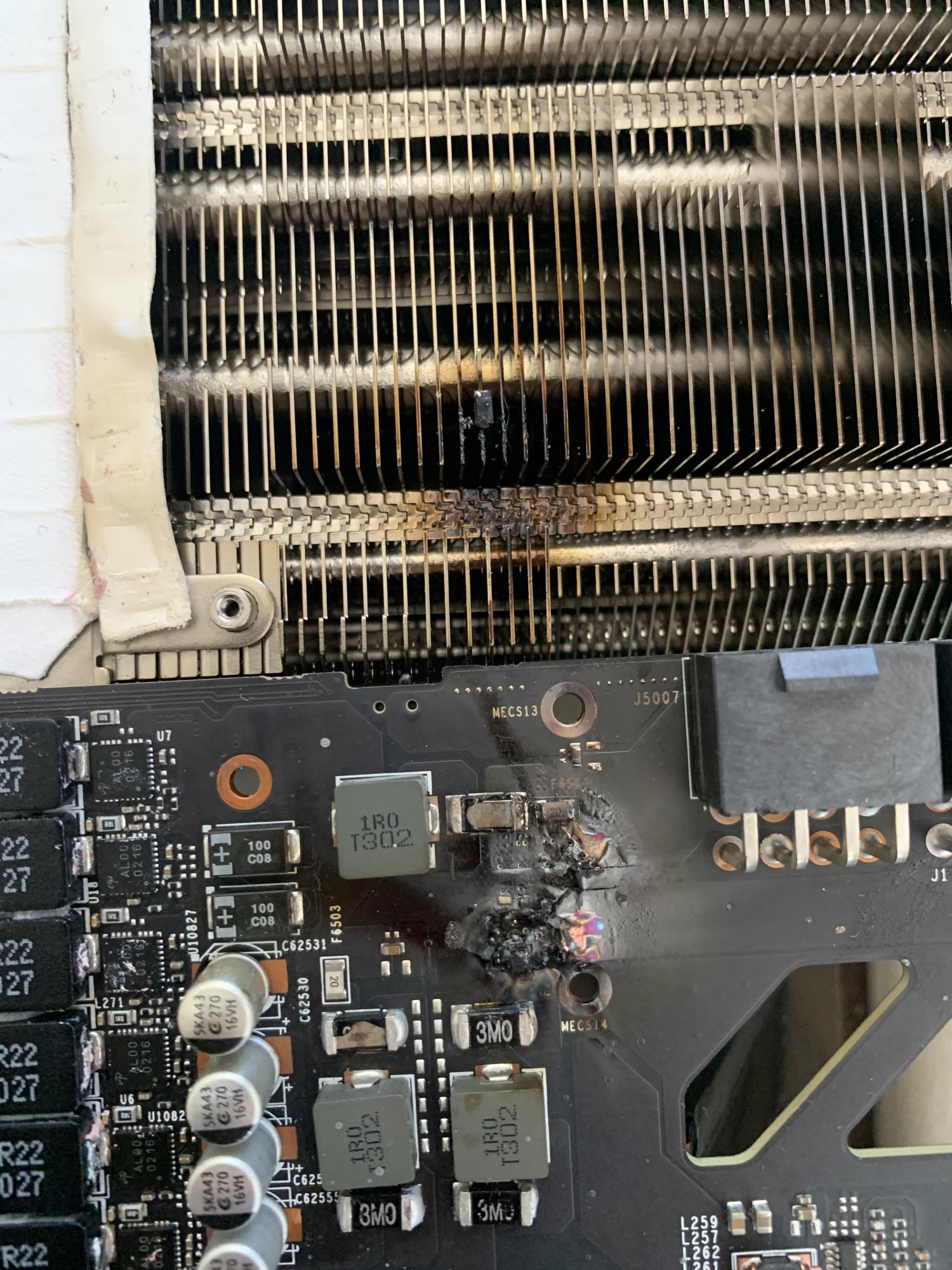

Just an FYI: there is a shitbag going around posting a picture of a blown shunt and trying to pass it off as part of this issue. This is 1000% fake news. That card was sold on eBay as a shunt-modded card a month or two ago. The buyer took the block off, put a stock cooler on it, blew the card up, and fucked my buddy over; he had to take it back and still has the card. Why this dipshit is doing this I'm not sure, as he doesn't even have that card anymore and has nothing to gain from it other than throwing gasoline on a fire. The only reason I'm posting this is because it's gaining traction for some stupid-ass reason.

So if you see this image being passed around as being from New World, it's a lie.

pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,028

They could put in a universal frame cap, but then people would bitch...

You would think the Nvidia driver would stop this from happening; hopefully they get the glitch fixed shortly.

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

It only seems to be the higher-wattage cards with three 8-pin connectors that are really having an issue. The reference cards really can't spike that much, since there isn't much more than 375 W physically available.

Haven't really run into any issues with a 3090 FE. It hits about the same 350 W power use that most games do. The only thing that seems to get hot is the memory, but that seems to happen in all MMORPGs for some reason (Guild Wars 2 gets the memory very hot as well).

The only kinda weird spot is that it maxes out the card while waiting in the queue.

Not true. The only physical limit on available wattage is the power supply. At some point it's no longer able to provide additional amps, so the voltage on the output rails drops. The amperage available on each of the 8-pin cables is not limited in any other way, though. Even a card with only two connectors could conceivably draw 500+ watts (that is, 42+ amps at 12 V) from the power supply; it would just exceed the ratings of the cables and connectors, and at some point the resistance through them causes the voltage to droop.

It only seems to be the higher-wattage cards with three 8-pin connectors that are really having an issue. The reference cards really can't spike that much, since there isn't much more than 375 W physically available.

When current limiting happens, it's on the demand side. Circuitry on the card is actively used to reduce the number of amps that flow through the buck converters and into the logic. It's not necessarily guaranteed that that circuitry will detect and respond to a situation where more current is being drawn than is allowed. There are a few different ways of monitoring current draw, and some of them are more robust than others.
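To put numbers on the connector discussion above: the 375 W figure is just the sum of the ratings (75 W for the PCIe x16 slot plus 150 W per 8-pin connector), and converting power to current at 12 V gives the "42+ amps" for 500 W. As the post says, these are ratings, not hard physical limits. This sketch treats the entire draw as coming from the 12 V rail, which is a simplification (the slot also supplies a small amount of 3.3 V power); the helper names are made up for illustration.

```python
PCIE_SLOT_W = 75    # rated power of a PCIe x16 slot
EIGHT_PIN_W = 150   # rated power of one 8-pin PCIe power connector
RAIL_V = 12.0       # nominal rail voltage; all draw assumed to be on 12 V


def rated_budget_w(num_8pin):
    """Total rated board power for a card with `num_8pin` 8-pin connectors."""
    return PCIE_SLOT_W + num_8pin * EIGHT_PIN_W


def amps_at_12v(watts):
    """Current needed to deliver `watts` at 12 V (I = P / V)."""
    return watts / RAIL_V


for connectors in (2, 3):
    print(connectors, "x 8-pin:", rated_budget_w(connectors), "W rated")

draw_w = 500
print(f"{draw_w} W is {amps_at_12v(draw_w):.1f} A at 12 V, "
      f"{draw_w - rated_budget_w(2)} W over a 2x 8-pin card's rating")
```

This gives 375 W rated for two connectors, 525 W for three, and about 41.7 A for a 500 W draw. Nothing in the arithmetic stops the current; only the card's own limiting circuitry (or the PSU running out of capacity) does.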

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

That's the point, that circuitry seems to be doing the job on the reference design cards. The other cards, not so much.

Not true. The only physical limit for wattage available is that of the power supply. At some point, it's no longer able to provide additional amps, so the voltage on the output rails drops. The amount of amperage available on each of the 8 pin cables is not limited in any other way, though. Even a card with only two connectors could conceivably draw 500+ watts (that is 42+ amps) from the power supply - it would just exceed the ratings of the cables and connectors, and at some point the resistance through them causes the voltage to droop.

When current limiting happens, it's on the demand side. Circuitry on the card is actively used to reduce the number of amps that flow through the buck converters and into the logic. It's not necessarily guaranteed that that circuitry will detect and respond to a situation where more current is being drawn than is allowed. There are a few different ways of monitoring current draw, and some of them are more robust than others.

Falkentyne

[H]ard|Gawd

- Joined

- Jul 19, 2000

- Messages

- 1,868

It would help if the entire original picture were shown, since it shows the heatsink *shorting* the shunt mod.
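For context on what a shunt mod does: one common way a card monitors current draw is to measure the small voltage drop across a low-value shunt resistor and divide by the resistance the controller assumes (I = V / R). Stacking another resistor in parallel lowers the real resistance, so the same current produces a smaller voltage drop and the card under-reports its draw, which lets it pull more than its power limit. The 5 mΩ values and the helper names below are illustrative assumptions, not measurements from the card in question.

```python
def parallel(r1_ohm, r2_ohm):
    """Equivalent resistance of two resistors in parallel."""
    return r1_ohm * r2_ohm / (r1_ohm + r2_ohm)


def reported_current_a(true_current_a, actual_shunt_ohm, assumed_shunt_ohm):
    """Current the monitor reports when it assumes `assumed_shunt_ohm`
    but the shunt path really measures `actual_shunt_ohm`."""
    v_sense = true_current_a * actual_shunt_ohm  # voltage drop actually measured
    return v_sense / assumed_shunt_ohm           # monitor divides by its assumed value


stock_shunt = 0.005                          # assumed 5 milliohm stock shunt
modded_shunt = parallel(stock_shunt, 0.005)  # second 5 milliohm resistor stacked on top

true_amps = 40.0
print(f"stock:  {reported_current_a(true_amps, stock_shunt, stock_shunt):.1f} A reported")
print(f"modded: {reported_current_a(true_amps, modded_shunt, stock_shunt):.1f} A reported")
```

With the shunt resistance halved, a true 40 A reads as 20 A, so a limiter keyed to that reading would allow roughly double the real draw.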

I've been playing the New World beta on my Kingpin 3090, and so far there are no problems that I can see. I have the display/visual settings on Ultra/High, and I get around 60 FPS or so on my G9 monitor (5,120 x 1,440 resolution). Average temps on the card are in the mid-50s.

As long as I don't get this, I'm fine:

German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,943

You're not going to have this issue with a Kingpin. They are built to run at a TDP of 1000 W. This game isn't going to overrun the power delivery on that.

I've been playing the New World beta on my Kingpin 3090, and so far there are no problems that I can see. I have the display/visual settings on Ultra/High, and I get around 60 FPS or so on my G9 monitor (5,120 x 1,440 resolution). Average temps on the card are in the mid-50s.

Falkentyne

[H]ard|Gawd

- Joined

- Jul 19, 2000

- Messages

- 1,868

The orange glow on the RAM and the smoke and fire look scarily like an EAF (electric arc furnace) running at 45 MW! ;-)

That picture was something someone created from a very clever fire animation video. It was only obvious it wasn't real by looking at the speed of the flames.

Of course it's fake, but the proximity of the RAM, its color, and the flames and smoke remind me of an EAF running at full power, which I've witnessed in person.

That picture was something someone created from a very clever fire animation video. It was only obvious it wasn't real by looking at the speed of the flames.

The reality is that OCP (over-current protection) is quick enough that any light emitted by onboard components would only be caught on camera if it were shooting video at a high frame rate. Something for the Slow Mo Guys! ;-)

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,920

I'm surprised this hasn't been mentioned, but how power efficient are the new RTX 3000 cards? Nvidia users were making fun of AMD for using a lot of power, but now these cards, with a new game, can pull enough power to kill themselves. That's nuts.

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

The reference design is pretty good given its performance at a max of 350 W. The issue is the designs with increased power delivery that will do 400 W+. Power use skyrockets for very minimal gains at that point. 400 W+ for an extra 5-8%, tops, over the 350 W reference ain't worth it IMO, and the spikes really only seem to happen on the non-reference cards.

I'm surprised this hasn't been mentioned, but how power efficient are the new RTX 3000 cards? Nvidia users were making fun of AMD for using a lot of power, but now these cards, with a new game, can pull enough power to kill themselves. That's nuts.
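Rough arithmetic behind "ain't worth it": going from 350 W to 400 W is about 14% more power, so a 5-8% performance gain means worse performance per watt. The gain percentages are the post's own estimates, not measurements, and `relative_perf_per_watt` is just a helper name for this sketch.

```python
def relative_perf_per_watt(perf_gain, base_w, boosted_w):
    """Perf-per-watt of the boosted card relative to the base card.

    1.0 means equally efficient; below 1.0 means less efficient.
    """
    return (1.0 + perf_gain) * base_w / boosted_w


for gain in (0.05, 0.08):
    ratio = relative_perf_per_watt(gain, base_w=350, boosted_w=400)
    print(f"+{gain:.0%} perf at 400 W -> {ratio:.3f}x the perf/W of 350 W")
```

That works out to roughly 5.5% to 8% worse efficiency for the 400 W cards, before counting any extra power spikes.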

Ihaveworms

Ukfay Ancerkay

- Joined

- Jul 25, 2006

- Messages

- 4,628

I remember World of Warcraft would hit very high FPS in the menus back in the day as well.

It may not be a matter of how much power it's pulling, but rather a state of oscillation or hysteresis that puts the power delivery circuits into a vicious feedback loop of self-destruction!

I'm surprised this hasn't been mentioned, but how power efficient are the new RTX 3000 cards? Nvidia users were making fun of AMD for using a lot of power, but now these cards, with a new game, can pull enough power to kill themselves. That's nuts.

It could be one of those weird scenarios where this game causes the circuitry to oscillate in a way that makes it self-destruct before any protection circuits kick in. There are so many possible combinations, and this one wasn't uncovered in testing before release. That's where the problem lies, because there's a thin line between normal operating mode, maximum allowable mode, and the semiconductors' absolute SOA (safe operating area) limits. Exceeding those results in immediate destruction, which shuts everything down via OCP and blows fuses on the PCB. In that case it would not matter whether the board was cooled with a standard vapor chamber / cold plate or a custom block. It would be interesting to compare reference designs, FE cards, and so-called fortified designs, aka Kingpin models. Of course, no one wants to sacrifice a GPU they paid thousands for in the name of science; that would be a job for influencers with lots of disposable income, and even then there may be rules about what is considered fair, allowable use of products, etc.

Forcing v-sync is probably a good start, since an uncapped frame rate combined with other things specific to this game could be what's causing these modes. The users whose systems shut down but were able to restart were lucky. Of course, if they tried again and it blew up...

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,920

I mean, yeah, but the amount of power these cards can pull seems very inefficient. To me it seems like board partners are trying to differentiate themselves from each other and from the reference design by allowing these cards to pull more power than they should. Nowadays they differentiate themselves by winning benchmarks by a few frames, but this may come at the cost of you not knowing the card will kill itself because the governor has been turned off, while they make the device cheaper to manufacture by putting in less capable components than the reference design. One thing I've learned from watching Actually Hardcore Overclocking is that board partners almost always use inferior VRMs and other components compared to reference, with the exception of Asus.

It may not be a matter of how much power it's pulling, but rather a state of oscillation or hysteresis that puts the power delivery circuits into a vicious feedback loop of self-destruction!

I bet that, on the 3090 at least, it has something to do with overheating on the back side... my 3090 would just about burn me before I added fans blowing on the back, before I put it on water.

Hrmmm, all those making fun of my 6900 XT... on this site too!

My 6900 XT is not bricking.

While your 3090, well... RIP.

Glad I got rid of my EVGA 3090. AMD has been a winner through and through for me this round.

I believe Kyle did, but he wouldn't say what it was other than that it was fixed.

Speaking of this, did anyone ever figure out what was causing Space Invaders on the 2080 Ti?

EVGA is scalping people big time on the RMA of these cards, lol.

https://www.techpowerup.com/285288/...er-level-pricing-for-advanced-gpu-rma-program

Might as well gift some leftover PowerLink pieces of fiasco shit with them when the cards come back to their owners. EVGA being EVGA, as usual.

So then don't do the advanced RMA, and just RMA it as usual.

EVGA is scalping people big time on the RMA of these cards, lol.

True, after this news you can do a standard RMA on the cards. It still looks bad for that program of theirs, though.
