> Actually, if you did some research, you will know that Nvidia limited their own memory speed and bandwidth; from memory it was only about 3 Gbps less than AMD. Having faster memory won't buy you more VRAM space.

Yes, the modules are 3 Gbps faster, but the bus is also wider.

RX 6800/6800 XT/6900 XT: 512 GB/s

RTX 3080: 760 GB/s

RTX 3090: 936 GB/s

You have to factor in both the per-pin data rate and the bus width to get the actual memory bandwidth, and the 3080 and 3090 have significantly more of it than the RX 6000 cards.
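A quick sketch of that arithmetic, assuming the reference-spec memory configurations (16 Gbps GDDR6 on a 256-bit bus for the RX 6000 cards, 19 Gbps GDDR6X on 320-bit for the 3080, 19.5 Gbps GDDR6X on 384-bit for the 3090). The function name `peak_bandwidth_gbs` is just an illustrative helper, not any vendor tool:

```python
# Peak memory bandwidth (GB/s) = per-pin data rate (Gbps) * bus width (bits) / 8 bits per byte.
# Assumed reference specs: (data rate in Gbps, bus width in bits).
CARDS = {
    "RX 6800/6800 XT/6900 XT": (16.0, 256),
    "RTX 3080": (19.0, 320),
    "RTX 3090": (19.5, 384),
}


def peak_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s for a given data rate and bus width."""
    return data_rate_gbps * bus_width_bits / 8


for name, (rate, width) in CARDS.items():
    print(f"{name}: {peak_bandwidth_gbs(rate, width):.0f} GB/s")
```

This reproduces the 512, 760 and 936 GB/s figures. It also shows why the bus width matters as much as the module speed: hypothetically, even 19 Gbps memory on a 256-bit bus would only reach `peak_bandwidth_gbs(19.0, 256)` = 608 GB/s, still well short of the 3080.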

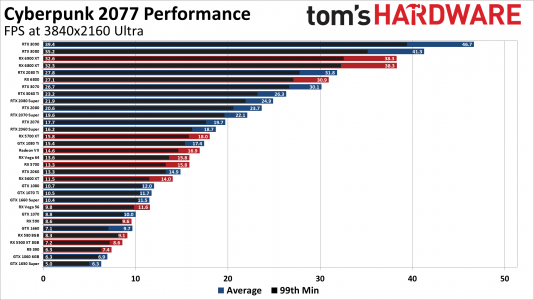

More memory bandwidth = better performance at higher resolutions.

The RX 6000 cards will constantly be limited by their crippled 256-bit memory bus.

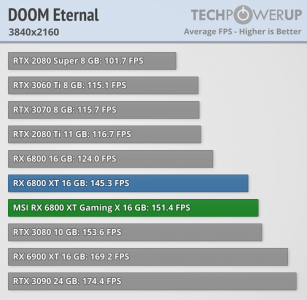

Also, you do realize that the RTX 3080 is faster than the RX 6800 XT in Doom Eternal, Cyberpunk 2077, and Flight Sim 2020, right? That puts a cork in your theory, doesn't it?
