NightReaver

2[H]4U

Joined: Apr 20, 2017 · Messages: 3,799

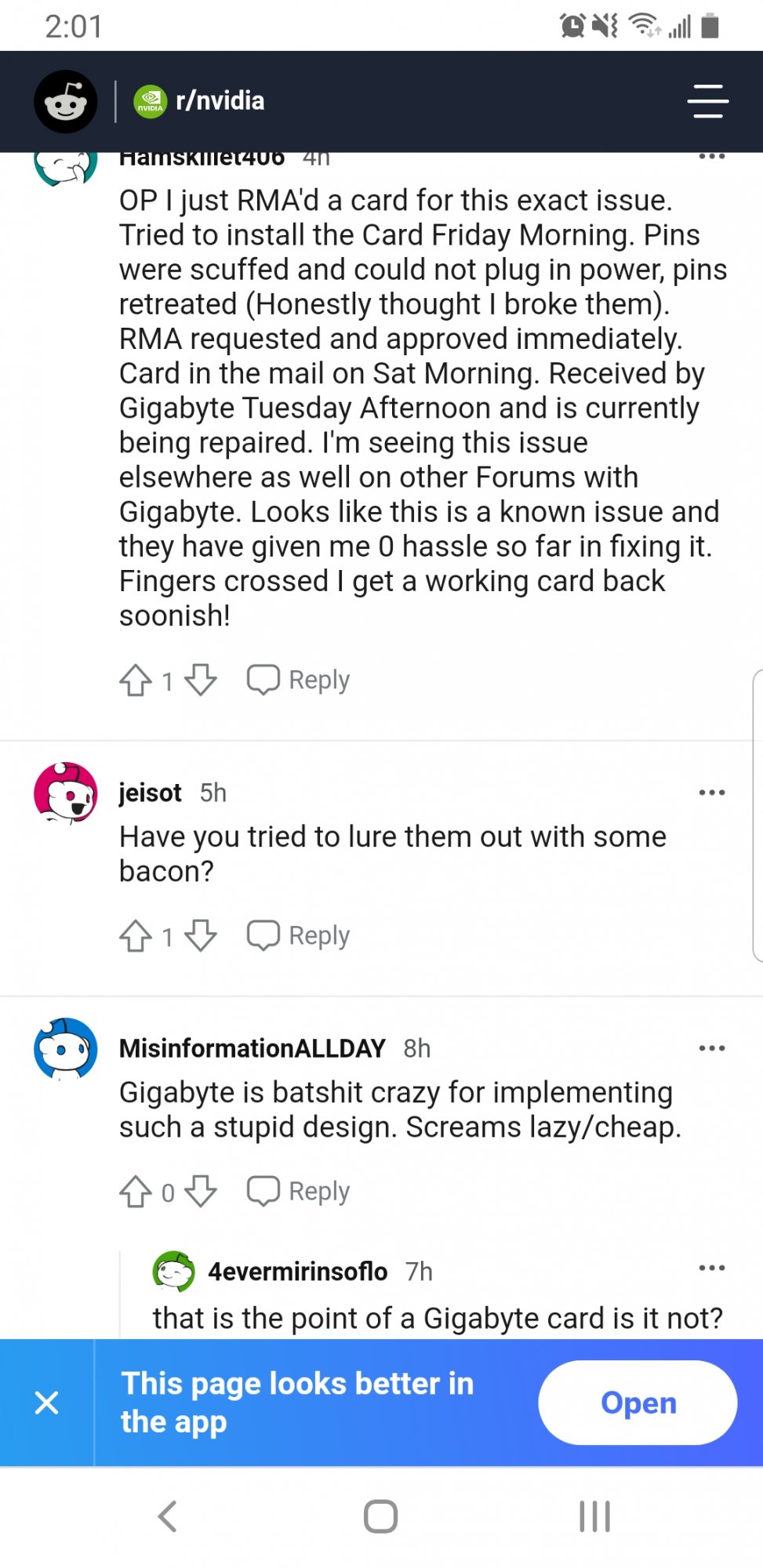

Lol, Gigabyte messing up a simple connector. It doesn't have to be a point of failure, but when you go cheap enough, anything can be.

Gigabyte's breakout power boxes have been failing on users, while the /nvidia mods are doing their PR thing and deleting all the posts with pictures. I took screenshots of this thread, which linked to a larger one that was deleted while I was reading through the comments. Someone there said this wasn't an extra point of failure. Seems that wasn't true. It's a simple repair, but now people are sending cards back because the connectors on the breakout box are being pushed through.

View attachment 284505

View attachment 284506
