Auer

[H]ard|Gawd

- Joined: Nov 2, 2018
- Messages: 1,972

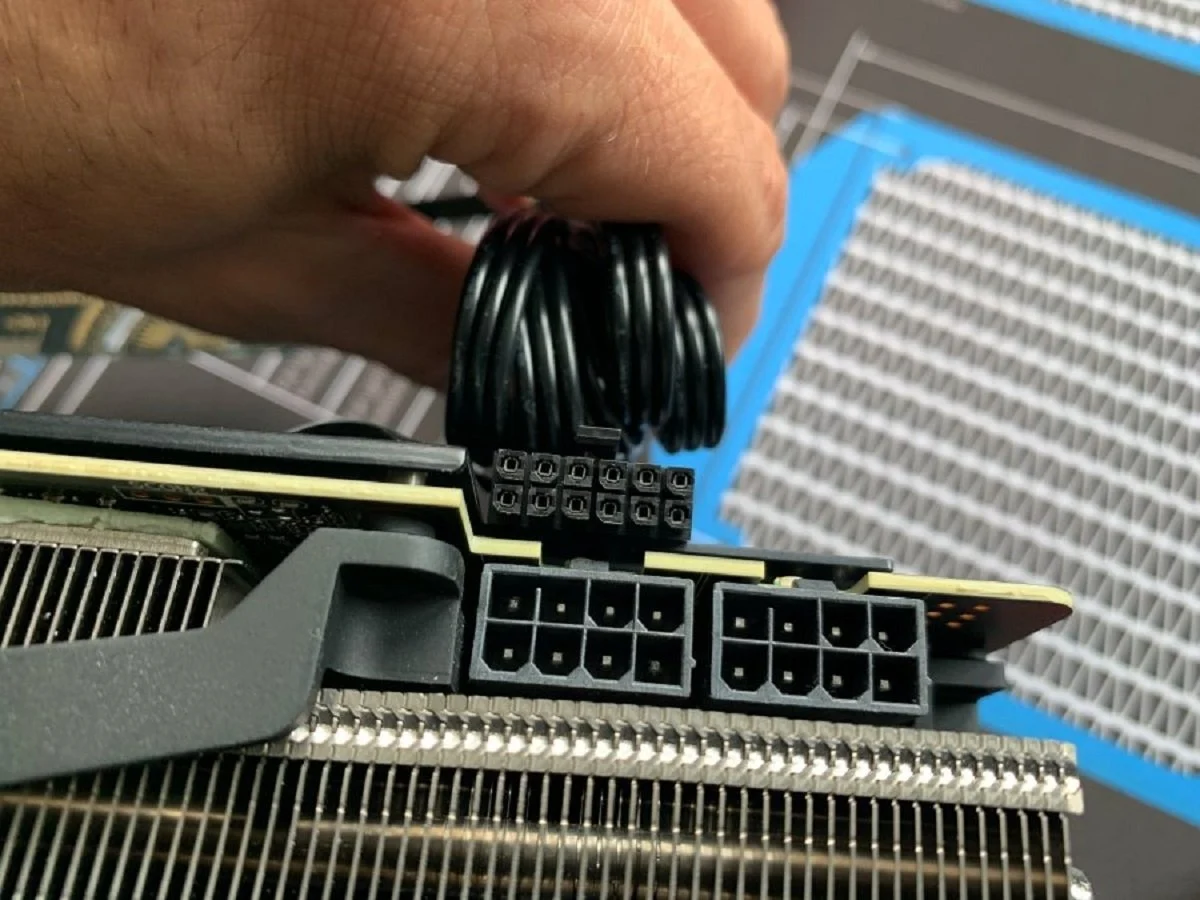

Doubt it. Splitters will be around regardless. Jerry-rigged eBay China crap won't go away just because of a new plug... I wonder if the ultimate goal is to avoid issues caused by users running 6- and 8-pin splitters to power higher-end cards. I seem to vaguely recall that some of the RX 5700 crash issues were being blamed on how the auxiliary power was being delivered to the card.
