GeForstner

n00b

- Joined

- May 28, 2020

- Messages

- 2

"It will not work. Custom resos are limited to 60 Hz for now..."

So there is hope for both the C9 and CX when HDMI 2.1 GPUs arrive? Or what does the 60 Hz custom-resolution limit depend on?

"It will not work. Custom resos are limited to 60 Hz for now..."

Not true. I have not tried that specific resolution at 120 Hz, but I have tried several others.

Did you try a CUSTOM resolution? That's the key thing here: 1440p and 1080p work at 120 Hz because they aren't really custom resolutions, but ones that you could already pick from the TV by default.

K. I've played HLL at 3840x1600 because on epic it's too demanding at 4K. But there was no way I could get it higher than 60 Hz. After that I tried 3840x1440, also with no success. Maybe I'm doing it wrong.

1440p at higher Hz, yes, but only at 2560 wide. Haven't tried 3440.

"This guy is a known idiot."

Yeah, I can't stand that QUANTUMTV channel. He's one of those people who has more rhetoric and controversy than facts/analysis; and he has some weird hard-on about his life's mission to bash LG. In short, a hack.

"Not sure what problems you are experiencing, but maybe your HDMI cable is not good enough to handle the needed bandwidth?"

No. I've just replaced it with a proper 2.1 cable to prep for my 3080 Ti's arrival.

If I set a higher frequency, it won't pass the test: it flickers to black and back. If I set the frequency to 60 Hz, it works.

I really want this. I am most interested in how 4K 120 Hz G-Sync works for those who have it and can report back on the gaming experience.

Looks like ABL (automatic brightness limiter) has come up again. Honestly, for gaming and movies I've never once noticed it, and I have very high standards.

But for windows / office work, my god it's horrible. I'm one of the fortunate ones who could work from home due to COVID 19 for the past 3 months. I've been using my OLED as my secondary monitor since I had a 4x1080p monitor setup at work. It's so annoying using spreadsheets, word documents, or websites in general. The screen constantly changes brightness almost anytime I scroll or do anything.

I'm a fanboy/white knight of OLEDs, but even I couldn't recommend one for any type of PC work.

Can you lower brightness when doing desktop/browsing activities such that the ABL would never actually kick in (for example, say ABL knocks fullscreen white down to 150nits ... what if you set brightness low enough that the screen never went higher than 150 nits period)? For desktop type activities, I imagine 150nits is still reasonably bright and contrasty (especially with OLED blacks and some amount of light control in your space) but I've never had an OLED and have not tried this. I'm curious if any OLED owners can comment on this kind of scenario.
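To make the question above concrete, here is a minimal sketch of the idea. The 150-nit full-white figure is the hypothetical from the post itself; the 400-nit small-highlight peak, the linear curve, and every function name are assumptions for illustration only, not measured behavior of any LG panel.

```python
# Hypothetical ABL model: small highlights may reach `peak_nits`, but the
# allowed white level drops as the average picture level (APL) rises.
# All numbers and the linear curve are assumptions, not LG specifications.

def abl_limit_nits(apl, peak_nits=400.0, full_white_nits=150.0):
    """Brightest white the panel allows at a given APL (0.0 to 1.0)."""
    apl = min(max(apl, 0.0), 1.0)  # clamp to the valid range
    return peak_nits - (peak_nits - full_white_nits) * apl


def displayed_nits(user_cap_nits, apl, **panel):
    """White level actually shown: the lower of the user cap and the ABL limit."""
    return min(user_cap_nits, abl_limit_nits(apl, **panel))


def abl_visible(user_cap_nits, **panel):
    """True if white changes between a nearly empty and a full-white screen."""
    dark_scene = displayed_nits(user_cap_nits, 0.0, **panel)
    white_scene = displayed_nits(user_cap_nits, 1.0, **panel)
    return dark_scene != white_scene
```

Under these assumptions, `abl_visible(150)` is false because the user's cap never exceeds the full-white limit, while `abl_visible(300)` is true because a full-white screen gets pulled down from 300 to 150 nits. Whether a real set behaves this cleanly is exactly what the question asks owners to confirm.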

According to RTings CX review, the CX has aggressive ABL like the C9, E9.

"The CX has decent HDR peak brightness, enough to bring out highlights in HDR. There's quite a bit of variation when displaying different content, and it gets the least bright with large areas, which is caused by the aggressive ABL. "

That is how it is with HDR.

With SDR, there is a Peak Brightness Setting. Since it limits the peak brightness it doesn't seem compatible with HDR.

From the Rtings C9 Review, regarding SDR settings concerning ABL:

"If ABL bothers you, setting the contrast to '80' and setting Peak Brightness to 'Off' essentially disables ABL, but the peak brightness is quite a bit lower (246-258 cd/m² in all scenes)."

Considering the very poor FALD contrast in the 2020 sets with one HDMI 2.1 port, and the glow (or dim corona) halos from FALD zone balancing along with the other weaknesses shown, OLED ABL is a fair tradeoff. I didn't even think it was that bad in the hate video personally, and he was playing an SDR game off of a console or emulator, which means he could have easily set SDR mode to never have ABL. A more relevant example would be ABL kicking in on HDR content, side by side with the color temperature mapped version.

I thought there might be a 2020 version of the Q9 with HDMI 2.1, so I was looking out of curiosity for a future living room purchase. The Q9FN has a native contrast of 6055:1, but more importantly, with FALD active it has a 19,018:1 contrast ratio. The 2020 QLED/LED LCD sets with one HDMI 2.1 port that I mentioned are horrible by comparison, and their game mode makes them even worse.

Edit:

The Q90/Q90R RTings review quotes 11,200:1 contrast ratio with FALD active so there is that one at least.

"The Q90 has a great local dimming feature. There's very little blooming, but it tends to dim the edges of bright objects, causing a vignetting effect, and small highlights like stars are crushed. In Game Mode, the local dimming doesn't react as quickly to changes in a scene, leading to more visible blooming. "

" Unfortunately the TV's 'Ultra Viewing Angle' optical layer makes the pixels hard to see clearly. We observed the same issue on the Q900R pixel photo. "

"HDMI 2.1 : UNKNOWN"

There are no "HDMI 2.1 cables" as such; cables are rated only by bandwidth.

Can't really come up with any good ideas unfortunately, just that I know for a fact that custom resolutions at 120 Hz work.

"I really hope the popularity of these TVs gets Nvidia to reimplement 10-bit support in their consumer GPUs. They must already be aware of the demand. Has anyone posted this to their user forum?"

They have for DP; for HDMI, not yet, but they very likely will for HDMI 2.1 to ensure proper HDR support.

....

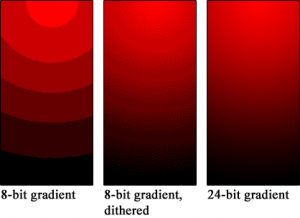

Your viewing distance would definitely matter for how visible the degradation of the original source material is when using 8-bit, 8-bit dithered, or lowered chroma, much like seeing the difference between 1080p, 1440p, 4K, and 8K native panels is relative to your viewing distance. Since some of us are going to use these as monitors at around a 4' viewing distance rather than from 8' to 10' away on a couch, it could be very visible (and even at couch distance people notice and complain about banding).

Lower chroma definitely affects text detail/fidelity and so will affect high detail textures and photos, etc. and as shown in example below it also affects graphics in games. Whether that bothers you or not is another matter.
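As a rough illustration of why lowered chroma hurts fine detail like text, here is a minimal sketch of 4:2:0-style subsampling on a single chroma channel. The tiny 4x4 test pattern, the 2x2 averaging, and the nearest-neighbor upsampling are assumptions chosen for simplicity; real GPUs and TVs use their own filters.

```python
# One chroma channel of a 4x4 image: a one-pixel-wide colored stroke
# (value 200) on a flat background (value 100), like thin colored text.
original = [[100, 200, 100, 100] for _ in range(4)]


def subsample_420(chroma):
    """Average every 2x2 block into a single chroma sample."""
    height, width = len(chroma), len(chroma[0])
    return [
        [
            (chroma[y][x] + chroma[y][x + 1]
             + chroma[y + 1][x] + chroma[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


def upsample_nearest(sub):
    """Stretch each chroma sample back over a 2x2 block of pixels."""
    out = []
    for row in sub:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


restored = upsample_nearest(subsample_420(original))
```

In this sketch the one-pixel stroke no longer exists after the round trip: its color is halved in strength and smeared across two columns, which is the kind of fringing people notice on text at desktop viewing distances. Luma is kept at full resolution in real 4:2:0, so the shape of the text survives while its color edges blur.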

I've done some additional testing. I think all custom resolutions work (I tested many normal monitor formats without scaling), but ONLY if the frequency is 60 Hz or less. I've also tested G-Sync in the pendulum demo, for instance at 3440x1440. It smooths out significantly, but it only works up to 60 Hz; anything above will cause tearing again.

"This is my experience on the C9 as well. Only 1920x1080 and 2560x1440 work at 120 Hz; custom resolutions like 3840x1080, 3840x1440 and 3840x1600 only work at 60 Hz until we get HDMI 2.1 GPUs."

Even with scaling set to GPU? Very odd if so.

Yes. Refuses to output anything higher than 60 Hz.
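For anyone wanting to sanity-check the resolutions discussed above against link bandwidth, here is a rough back-of-the-envelope sketch. The two link figures come from the HDMI specs (18 Gbit/s with 8b/10b coding for HDMI 2.0, 48 Gbit/s with 16b/18b coding for HDMI 2.1). The function names are made up for illustration, and the `blanking` overhead defaults to zero, which is an assumption that understates real timings.

```python
# Usable video data rate after line coding, in Gbit/s.
HDMI_2_0_GBPS = 18.0 * 8 / 10    # 14.4
HDMI_2_1_GBPS = 48.0 * 16 / 18   # about 42.67

# Samples per pixel for each chroma format (full RGB / 4:4:4 has three).
CHROMA_FACTOR = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def data_rate_gbps(width, height, hz, bits_per_channel=8,
                   chroma="4:4:4", blanking=0.0):
    """Approximate uncompressed data rate of a video mode in Gbit/s.

    `blanking` is the fractional overhead of blanking intervals; it depends
    on the exact timing and is left at 0.0 here as a simplification.
    """
    bits_per_pixel = bits_per_channel * CHROMA_FACTOR[chroma]
    return width * height * hz * bits_per_pixel * (1.0 + blanking) / 1e9


def fits(rate_gbps, link_gbps):
    """True if the mode's data rate is within the link's usable rate."""
    return rate_gbps <= link_gbps
```

With these numbers, 3840x1440 at 120 Hz comes to about 15.9 Gbit/s and 3840x1600 at 120 Hz to about 17.7 Gbit/s at 8-bit RGB, so neither fits in HDMI 2.0's 14.4 Gbit/s, while 4K at 120 Hz fits only with 4:2:0 chroma. Note that 3840x1080 at 120 Hz (about 11.9 Gbit/s) would fit on paper, so raw bandwidth may not be the whole story behind the 60 Hz limit people are reporting here; that part is a guess, not something confirmed in this thread.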

I am very confused about 4K 120 Hz G-Sync not working, because it should work? The G-Sync range is 40-120 Hz... Is it possible that it is a firmware bug or an Nvidia driver issue? Because of that, it is a no-buy for me.

In the Nvidia pendulum demo at 4K 120 Hz you cannot enable G-Sync. The motion is smooth though, and there is NO tearing. I've tested it at 40-115 fps.

If you enable the G-SYNC Compatible indicator in the Nvidia control panel, it will NOT show up at 4K 120 Hz; if I switch to supported resolutions, it shows. So I think the pendulum demo's G-Sync indicator matches the one set up through the Nvidia control panel.

If you set the display to 3840x1440, the pendulum demo shows G-SYNC as enabled, but I still get massive tearing. So it might not actually work with custom resolutions.

I use mine for 6-8 hours per day on average since starting WFH in December 2018 and I do notice the ABL if there is a lot of white on the screen, but unlike Seyumi it doesn't bother me in the least. It is good to point out that it exists as it could be annoying to some but I am not bothered by it and usually welcome the reduction in brightness because even at lower brightness settings like I run, these can feel like staring at the sun if you have light sensitivity which my eyes seem to (I frequently find myself squinting when outside on bright days, lol). I would say that this will vary per person. It is certainly not a dealbreaker for PC use for me. I use mine for everything and love it - by far the best monitor I've ever had in 25 years of owning and using PCs. /shrug

"How do we know it does not work?"

Because I've tested it. G-Sync works at 4K60 but not at 4K120.

The key to success is to not run things fullscreen, which in most cases is pointless anyway, even though lots of people still do it because they have always done it. Regardless of whether one is using an OLED or not, I would recommend installing something like PowerToys and dividing the screen into different areas, as it is not that often you really need 4K fullscreen (at least I don't). Besides being more productive, that will also improve the ABL situation, especially with some theme changes. PowerToys is free and it also remembers the position and size of applications (if you want it to), so you won't have to constantly rearrange things.

https://github.com/microsoft/PowerToys

..The 4k quadrants are not the same size intentionally, with one larger quadrant and 3 more tiled to fill the rest, leaving one small window area "gap" in the middle top if I have 4 windows going.

..When I click each named monitor button it moves the active window to a full height centered or other favorite position I set specifically to each monitor on each of those "monitor buttons".

..The corner XL is for media generally when I want a big window but not fullscreen, it leaves about 25% of the screen available at the top for tiling my screenshot app, several chat windows, and foobar across in a row.

..The up left and mid left come in handy for getting a hold of misc apps.

..The 40% left, 60% right buttons are not only useful for splitting the screen perfectly on the fly, but they correspond to the quadrant window widths. That means they can fill the left or right side of a quad of windows without covering the other half of the monitor's 2 open windows.
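The 40/60 split described above is easy to work out by hand, but here is a small sketch of the arithmetic for a 3840x2160 screen. The `Rect` type, the function names, and the equal-height rows are assumptions for illustration; this is not how DisplayFusion or PowerToys store their layouts.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    """Window rectangle in pixels (hypothetical helper type)."""
    x: int
    y: int
    w: int
    h: int


def split_columns(screen_w, screen_h, left_fraction=0.40):
    """Split the screen into a left and a right full-height column."""
    left_w = round(screen_w * left_fraction)
    left = Rect(0, 0, left_w, screen_h)
    right = Rect(left_w, 0, screen_w - left_w, screen_h)
    return left, right


def quadrants(screen_w, screen_h, left_fraction=0.40, top_fraction=0.50):
    """Four tiles whose widths match the two columns from split_columns."""
    left, right = split_columns(screen_w, screen_h, left_fraction)
    top_h = round(screen_h * top_fraction)
    bottom_h = screen_h - top_h
    return [
        Rect(left.x, 0, left.w, top_h),
        Rect(right.x, 0, right.w, top_h),
        Rect(left.x, top_h, left.w, bottom_h),
        Rect(right.x, top_h, right.w, bottom_h),
    ]
```

On a 4K desktop this gives a 1536-pixel-wide left column and a 2304-pixel-wide right column. Because the quadrants reuse the same widths, a window snapped to the 40% or 60% column covers exactly one side of the quad without overlapping the other two tiles.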

I also use a few other arrays of buttons that I get to with that "level up" arrow. I set up some apps so that when I click their streamdeck button it focuses on the app. I'm working on figuring out a script so that when I click the app's stream deck button it will check to see if it's open, open it if not, restore it if minimized, or minimize it.. all with one button. There are a few functions that try to do a few of those but I haven't found a pre-made one that does all of that yet so I'll have to experiment. For now I can for example click the "foobar" icon to focus it, then have play/pause toggle button whose graphic changes ( >, || ), and a fwd and back button.
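The one-button behavior described above boils down to a small decision table. Here is a sketch of just that logic; the `Action` names and `next_action` are hypothetical, the "focus" case for an open but unfocused window is an assumption added to cover the gap, and the actual window calls (however DisplayFusion, Stream Deck, or the OS would do them) are deliberately left out.

```python
from enum import Enum


class Action(Enum):
    """What a single app button should do on the next press (hypothetical)."""
    LAUNCH = "launch"
    RESTORE = "restore"
    FOCUS = "focus"
    MINIMIZE = "minimize"


def next_action(is_running, is_minimized, is_focused):
    """Pick one action from the app's current window state."""
    if not is_running:
        return Action.LAUNCH      # not open yet: start it
    if is_minimized:
        return Action.RESTORE     # open but minimized: bring it back
    if is_focused:
        return Action.MINIMIZE    # already in front: tuck it away
    return Action.FOCUS           # open behind other windows: bring to front
```

Keeping the decision separate from the window handling means the same table could sit behind whatever scripting hook ends up being used; only the three state checks and the four actions would need real implementations.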

The focusing of apps would come in handy with the window management buttons, but as it is now I only have 11 keys at a time with the recursion button available, so I have to jump up to the main directory/level and then back down to the app buttons one. I was thinking of getting a Streamdeck XL someday if they go on sale again since that has a lot more buttons, but now that I've been watching a few YouTube videos about it, it might work better to just add a second Streamdeck so that only half of the buttons change when you recurse directories.

There is a lot more stuff you can do with both DisplayFusion Pro and Streamdeck in themselves and with other software like OBS, etc. if you wanted to put a lot of time into it - but you don't really have to go crazy to get some very convenient functionality. Also worth mentioning that there is a streamdeck app for phones or tablets so I'm pretty sure you don't have to buy one if you just want to try it out that way.

DisplayFusion Pro has a full-featured taskbar that includes all of the Windows taskbar functions and more. I've been using DisplayFusion Pro for years for all of its multi-monitor functions. The DisplayFusion Pro taskbar completely hides itself when set to auto-hide.

https://www.displayfusion.com/Features/Taskbar/

Thread from 2020 with the displayfusion author referencing the 2nd thread as being a valid workaround:

https://www.displayfusion.com/Discu...f39s/?ID=46c7fa88-585f-463a-adec-1acaebb5a1b9

The original displayfusion forum thread with the author detailing how to hide the windows taskbar on the primary monitor completely in order to replace it with displayfusion's taskbar there:

https://www.displayfusion.com/Discu...kbar/?ID=3d0cad5b-2ab0-4b2d-acbc-cd2b45389c38

------------------------------------------------------

Taskbar Magic is referenced by most of the pages that mention completely hiding the default windows taskbar. You can then add the full featured and completely hide-able displayfusion taskbar in its place (or set the primary monitor's apps to show on the displayfusion taskbars on other monitors in an array) .

https://www.404techsupport.com/2015/09/16/hide-taskbar-start-button-startup/

https://superuser.com/questions/219605/how-to-completely-disable-the-windows-taskbar/641719

reddit r/windows/comments/atj5l2/is_there_a_way_to_hide_the_taskbar_completely/

-------------------------------------------------------

You can also use taskbar hider which sets the show/hide of the taskbar to a hotkey toggle, but I don't think this one gets rid of the sliver of taskbar showing by itself.

http://www.itsamples.com/taskbar-hider.html

------------------------------------------------------

Use any at your own risk but they seem to be working methods.

https://www.dropbox.com/s/480hjn1n0thpl3j/Taskbar-Magic.zip

https://www.displayfusion.com/