DoubleTap

2[H]4U

- Joined: Dec 16, 2010
- Messages: 2,990

I have an 8TB Barracuda. It's maybe 6 months old and barely used, but it won't fit in my new ITX system. It mostly holds family archives and backups (photos, etc.).

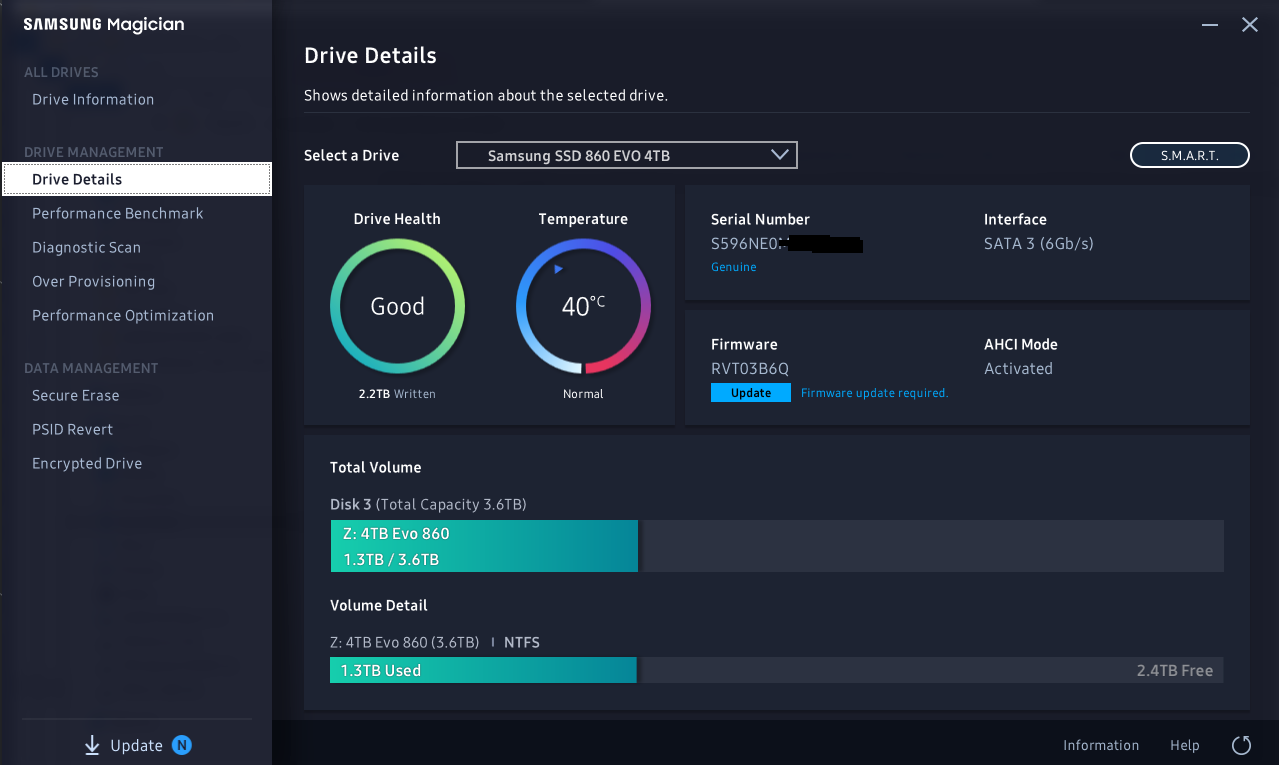

I just got a 4TB Samsung 860 EVO SSD.

About a week ago I manually copied the data from the HDD to the SSD using Windows Explorer. When I was done, I compared the file count and total size, and they matched.
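For anyone who wants to repeat that check from a script instead of Explorer's Properties dialog, here's a minimal Python sketch. The function names `tree_summary` and `compare_trees` are hypothetical, my own. It counts files and sums their sizes (the same comparison described above), and also checks each file by relative path and size, since matching totals alone can hide offsetting differences. It assumes both folders are readable from one machine and compares logical file size, not "size on disk".

```python
from pathlib import Path


def tree_summary(root):
    """Return (file_count, total_bytes) for every regular file under root."""
    count = 0
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file():
            count += 1
            total += path.stat().st_size
    return count, total


def compare_trees(src, dst):
    """Return sorted problems: files missing from dst or differing in size.

    Checking file by file catches cases where matching totals
    would hide a missing file offset by a larger one elsewhere.
    """
    problems = []
    src_root = Path(src)
    dst_root = Path(dst)
    for path in src_root.rglob("*"):
        if not path.is_file():
            continue
        rel = path.relative_to(src_root)  # same relative path on the copy
        target = dst_root / rel
        if not target.is_file():
            problems.append(f"missing: {rel}")
        elif target.stat().st_size != path.stat().st_size:
            problems.append(f"size differs: {rel}")
    return sorted(problems)
```

Usage would be something like `tree_summary(r"D:\DATA")` vs. `tree_summary(r"E:\DATA")`, then `compare_trees(r"D:\DATA", r"E:\DATA")`; the drive letters are placeholders. Size checks won't catch corrupted contents, so hashing each file would be the stricter test.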

Didn't think about it for a week.

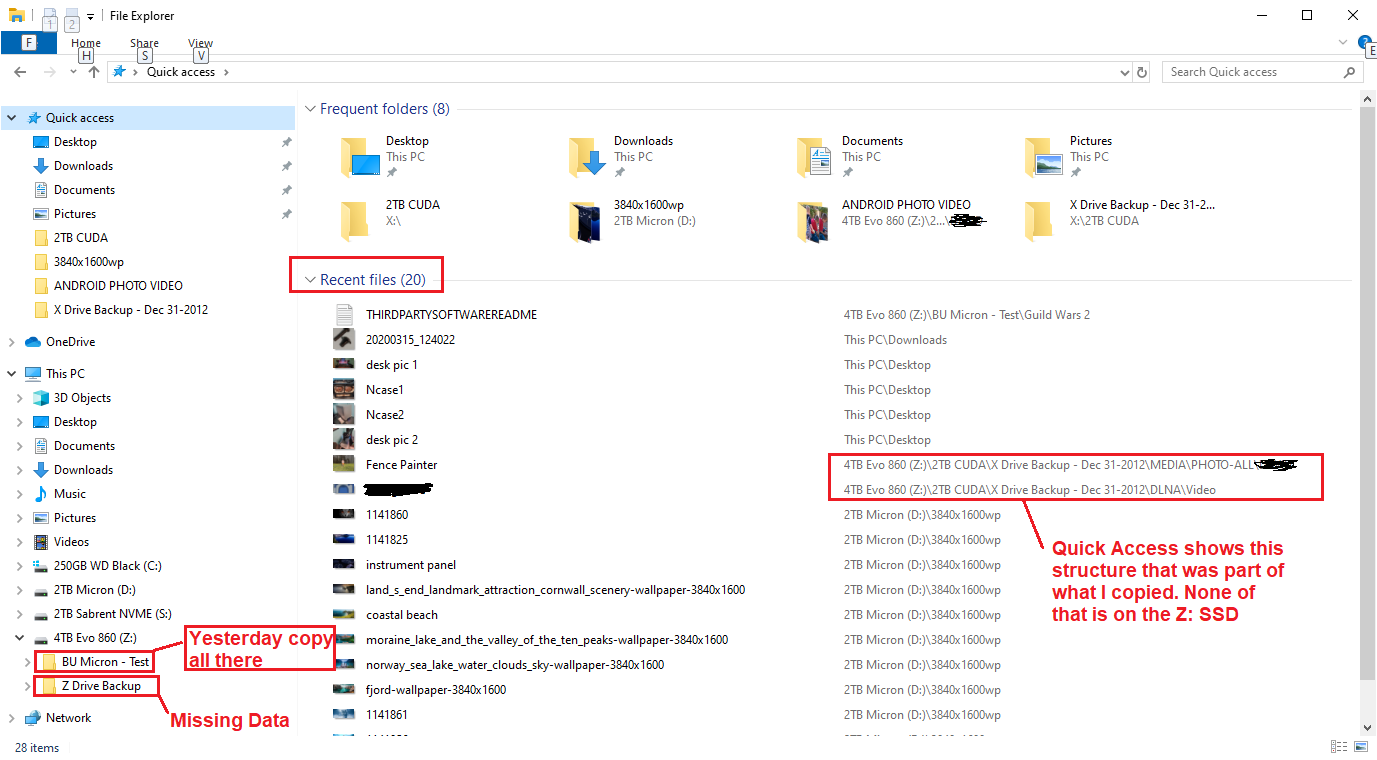

Now when I look at the 4TB SSD, it's basically empty.

There is one folder on the drive (DATA/Apple Backup 2017/Apple Computer), and it contains 0 bytes.

There should be about a dozen folder structures.

Yes, I suppose I could have used a backup program, but it's just a bunch of folder structures. It took half the day; I did it in chunks and kept checking manually to make sure each chunk copied over. Nobody else has access to this system, and everything else seems fine.

Fortunately, I left the original data on the spinner, but this has me a little unnerved and worried about the reliability of the 4TB EVO.

Any ideas on what could cause this?
