AMD RX 5700 XT card is better than a GTX 1080 at ray tracing in new Crytek demo

- Thread starter kac77

- Start date

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

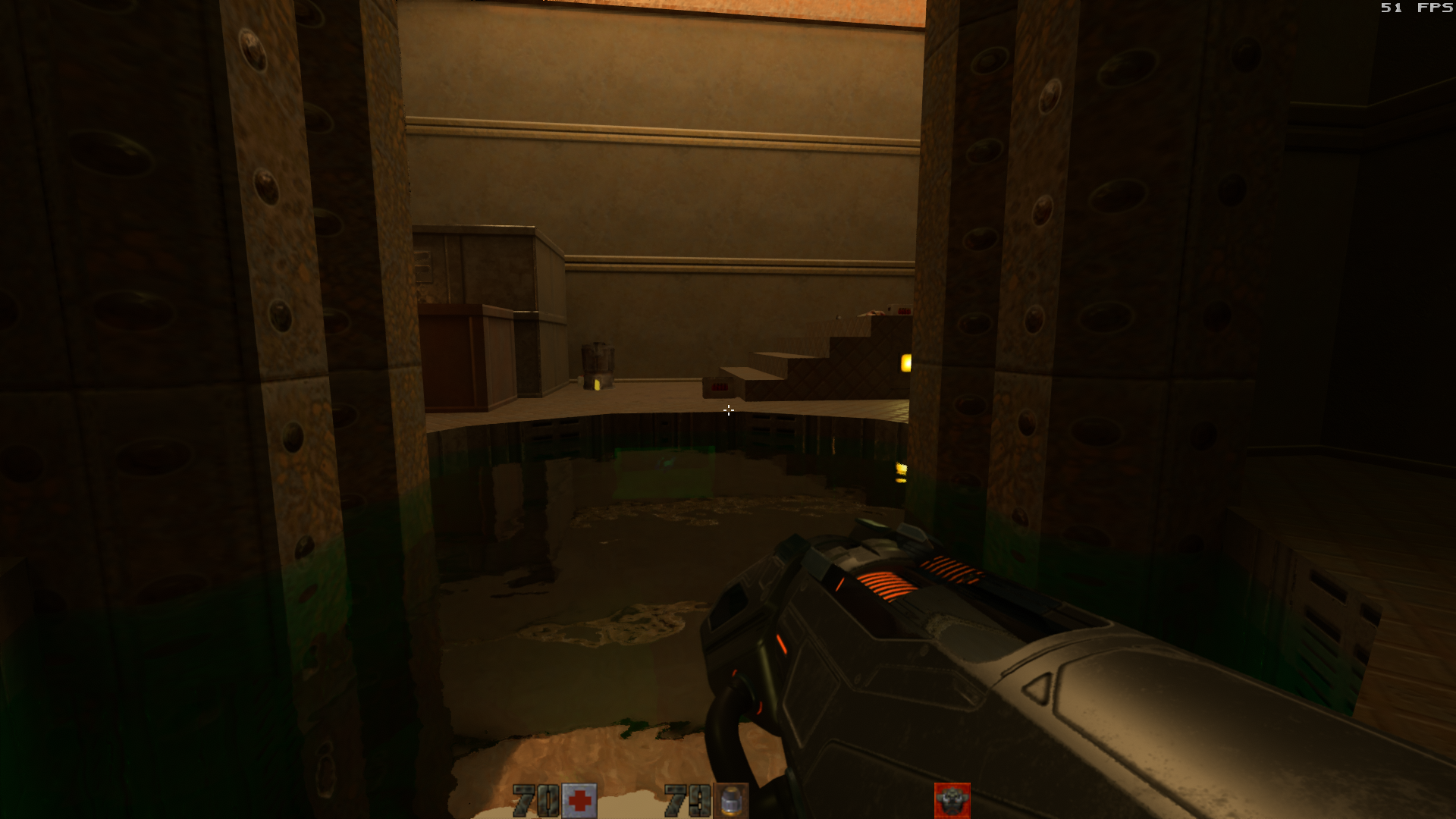

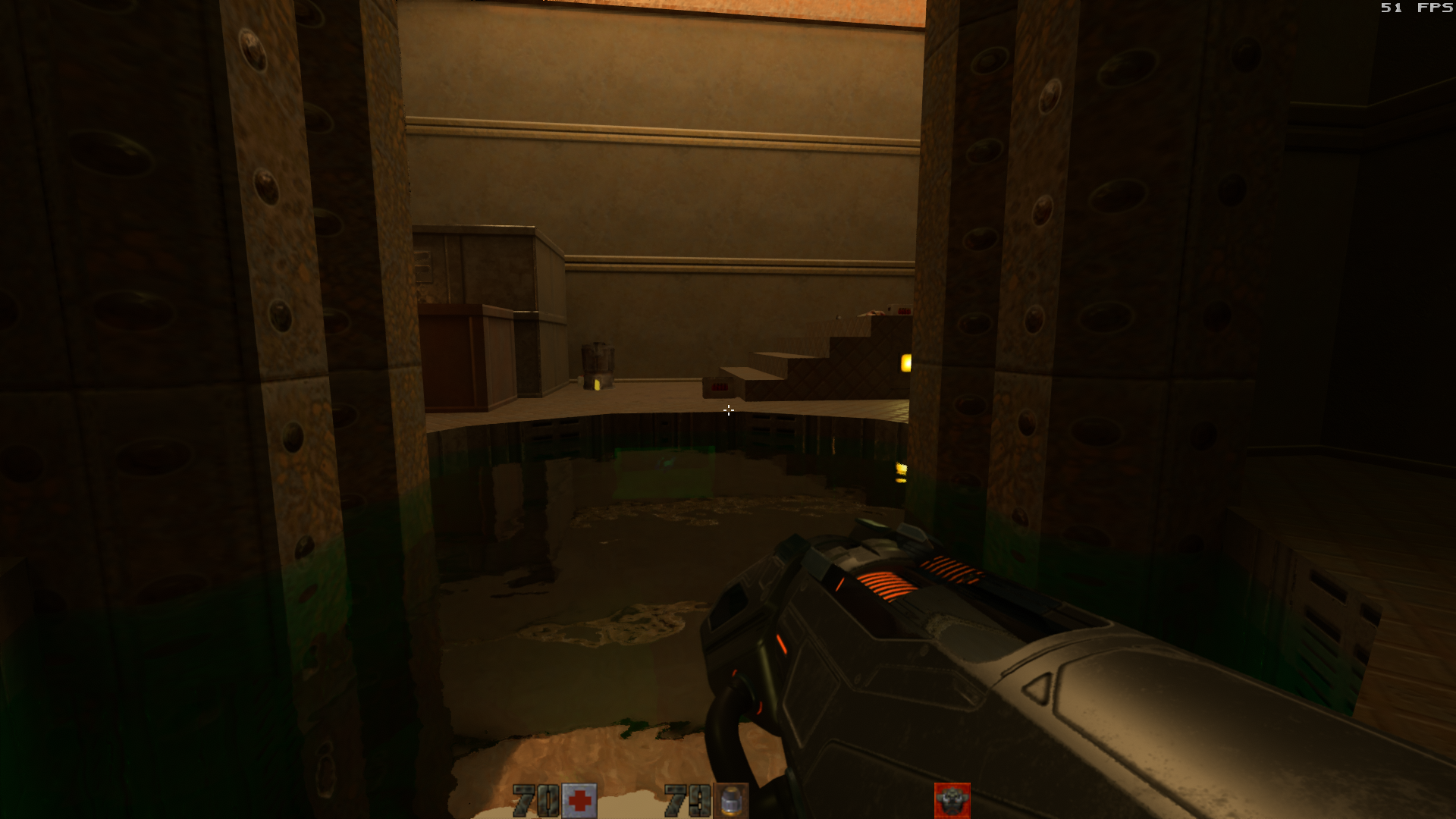

Getting back to the Crytek reflection demo, hey my Radeon 5700xt is doing real time raytracing at very smooth frame rates at 1440p.

Wait till Crytek gets around to using hardware and compare with a 2060 again

Yes maybe Crysis 4

https://play4.uk/crytek-might-just-teased-the-release-date-of-crysis-4-game-news-3020

PontiacGTX

Gawd

- Joined

- Aug 9, 2013

- Messages

- 808

Why would you assume that? I’m not aware of any specific advantage Navi has in DX12/Vulkan. If anything it’s Nvidia that has a driver advantage in DX11 so moving to DX12 should even the playing field.

Because DirectX's rendering pipeline and CryEngine's DX11 aren't optimized for GCN/Navi or compute.

Even some DX12 titles wouldn't get better performance because they aren't 100% native DX12-oriented implementations. Maybe Vulkan would give an advantage to AMD, but afaik I haven't seen the first CryEngine Vulkan implementation.

Nvidia IS the only brand capable of that level of graphics. At least till next year.

Not true and this is why it's deceitful. All DX12 cards are capable of doing this today. In order for a card to be DX12 certified it has to be able to do all of the features. That certification you have to pay for.

Just because Nvidia pays a developer to use their tool kit does not mean all other cards can't perform that feature.

Your response is specifically what I'm talking about. If you think Nvidia is the only chip manufacturer that can do ray tracing you would be majorly wrong.

Because DirectX's rendering pipeline and CryEngine's DX11 aren't optimized for GCN/Navi or compute.

Even some DX12 titles wouldn't get better performance because they aren't 100% native DX12-oriented implementations. Maybe Vulkan would give an advantage to AMD, but afaik I haven't seen the first CryEngine Vulkan implementation.

I meant which aspects of AMD’s architecture lead you to believe it should have an advantage in DirectX and Vulkan? The asynchronous compute hype train moved on a long time ago.

Not true and this is why it's deceitful. All DX12 cards are capable of doing this today. In order for a card to be DX12 certified it has to be able to do all of the features. That certification you have to pay for.

Just because Nvidia pays a developer to use their tool kit does not mean all other cards can't perform that feature.

Your response is specifically what I'm talking about . If you think Nvidia is only chip manufacturer that can do ray tracing you would be majorly wrong.

That's all good and dandy, except that nvidia IS the only manufacturer capable of real time raytracing effects in games

That could change next year, and who knows, maybe AMD and/or Intel could have a better/faster implementation of Raytracing. When/if that day comes, then you'll be absolutely right....

So do an apples to apples? I'm pretty curious myself. Maybe see if they'll let you publish on FPSReview as a news article.

Now you're starting to see the issue. I was never saying that what Nvidia is doing is inherently bad. What I'm saying, and noko is saying as well, is that the fidelity and area affected by the ray tracing is low enough that rasterization of similar objects can be done with accuracy that's pretty darn close, and not only that, but any card that's DX12 especially can recreate the effect without dedicated hardware. It's not completely clear yet just how close other implementations will get, but if reviewers don't start making the distinction now to create a better educated consumer, there will be problems.

Well, it's using ray tracing, and it's real time, but I agree that it should be made clear that the hybrid rendering being done isn't 'real-time full-scene ray tracing'.

If you're not doing full scene rendering then in reality we would call this an effect. Effects can be replicated, full scene rendering largely cannot. You are either doing it or you are not because the amount of objects affected is everything you're seeing on the screen not just one object.

This point is ignored in every review I've seen, and because Nvidia doesn't correct them I see it as deceitful. However, just like in all things, the reviewers are the ones that hold the manufacturers accountable. So someone needs to start analyzing the true effect of a single ray traced object, hopefully before other solutions become visible to the average consumer. If the reviewers don't make a case for the difference your average consumer will never know. This is so problematic.

That's all good and dandy, except that nvidia IS the only manufacturer capable of real time raytracing effects in games. Sure there's the Crytek demo and World of tanks, but none of those are coming this year. And at least Crytek plans to support RTX on its demo.

That could change next year, and who knows, maybe AMD and/or Intel could have a better/faster implementation of Raytracing. When/if that day comes, then you'll be absolutely right....

It's not the only one capable. It's the only one that's shown you an example. There's a big difference, and even that is questionable since the Crytek engine which you mentioned is doing the same thing with other cards that are not RTX cards.

Now you're starting to see the issue. I was never saying that what Nvidia is doing is inherently bad. What I'm saying, and noko is saying as well, is that the fidelity and area affected by the ray tracing is low enough that rasterization of similar objects can be done with accuracy that's pretty darn close, and not only that, but any card that's DX12 especially can recreate the effect without dedicated hardware. It's not completely clear yet just how close other implementations will get, but if reviewers don't start making the distinction now to create a better educated consumer, there will be problems.

If you're not doing full scene rendering then in reality we would call this an effect. Effects can be replicated, full scene rendering largely cannot. You are either doing it or you are not because the amount of objects affected is everything you're seeing on the screen not just one object.

This point is ignored in every review I've seen, and because Nvidia doesn't correct them I see it as deceitful. However, just like in all things, the reviewers are the ones that hold the manufacturers accountable. So someone needs to start analyzing the true effect of a single ray traced object, hopefully before other solutions become visible to the average consumer. If the reviewers don't make a case for the difference your average consumer will never know. This is so problematic.

Where have you been? Rasterization (and more particularly shaders) has been faking RayTracing effects for years. All these fancy lighting and shadow effects try to replicate RT to some extent. You get all of these for "free" with RT, problem is performance. The reason there's hybrid rendering is simply because rasterization is already good enough for most things. But RT still has an edge in lighting, shadows and reflections. (I'm oversimplifying here, but bear with me).

It's not the only one capable. It's the only one that's shown you an example. There's a big difference, and even that is questionable since the Crytek engine which you mentioned is doing the same thing with other cards that are not RTX cards.

Not in games.... Maybe next year.

Geeeez is that so hard to understand?

what I'm saying and noko is saying as well is that the fidelity and area effected of the ray tracing is low enough that rasterization of similar objects can be done with accuracy that's pretty darn close

This is objectively false. It is incredibly expensive and in some cases impossible to replicate the quality of certain raytraced effects via rasterization.

and not only that but any card that's dx12 especially can recreate the effect without dedicated hardware.

Of course they can. But how is that relevant unless they can do so at acceptable performance?

I don’t share your concern that people are so clueless that reviewers need to go out of their way to explain the difference between full scene raytracing and raytraced “effects”. Everything we see in a game is a combination of effects. Why is raytracing special in that regard?

Where have you been? Rasterization (and more particularly shaders) has been faking RayTracing effects for years. All these fancy lighting and shadow effects try to replicate RT to some extent. You get all of these for "free" with RT, problem is performance. The reason there's hybrid rendering is simply because rasterization is already good enough for most things. But RT still has an edge in lighting, shadows and reflections. (I'm oversimplifying here, but bear with me).

I know you're oversimplifying. But I put that bit in there for a reason.

Not in games.... Maybe next year.

Geeeez is that so hard to understand?

I understand it perfectly fine. You were the one that said the only one capable, not me.

Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

I understand it perfectly fine. You were the one that said the only one capable, not me.

I think we all knew what he meant is that nVidia is the only one proven capable, today and as seen by the customer, in actual games to give playable frame rates with RT.

Especially with new tech I don’t buy cards based on what they *might* do. AMD has an awful track record with executing on potentials.

Well Quake II RTX runs pretty darn good on two 1080 Ti considering it is so called raytraced (reality is it is assisted only and still a hybrid). Meaning RTX cards are not the first real time raytracing cards for games -> another falsehood by Nvidia.

The raytraced reflections in the Crytek Demo are just as good or better than the RTX so called reflections in BF5 -> Meaning non RTX cards are indeed capable of real time raytracing, and this is from, for all intents and purposes, a midrange AMD card (still their best at this time). If Nvidia defined real time raytracing as what we see in the 6 titles then so be it. Now calling any of those titles real time raytracing is a joke in my book; only small parts of them are actually enhanced by using the RTX cards' hardware, that is if one can live with the performance impact.

RTX has to be the most overrated, empty promise, hyped tech with the most exorbitant price hike of any GPU line of all time, for a feature that gives minuscule IQ benefits at a huge performance cost for the few titles that use it. Now there are some upcoming titles such as Doom Eternal and Cyberpunk 2077, will those make RTX worth it? Shadow of the Tomb Raider went from a lighting to a shadow only implementation, Assetto Corsa Competizione totally dropped it due to performance and insignificant IQ improvement, Wolfenstein Youngblood -> HUH? nope. The overhyped DLSS is another Nvidia feature that in the end did not improve upon or actually degraded quality when used. One can see why Nvidia desperately needed GPP to try to control the narrative - cough cough - turned out they did not after all - plenty of folks seem to have bowed to whatever Nvidia spewed out of their mouths.

Now CryEngine 5.6 has Vulkan beta while having DX 12 support since 2016. It will be interesting to see if Crytek can leverage the RT cores on RTX cards -> it would be nice to see a good efficient implementation which will allow a broader use of the hardware. For good RTX, it may come down to a game engine designed from scratch (not a tacked-on feature) implementing hardware RT. The Crytek Demo is a rather fantastic software step using raytracing for reflections (really a good use for raytracing).

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

Reflections. (really a good use for raytracing).

What pre-rendered demos are Nvidia passing off as raytracing? The Star Wars thing?

Raytracing is simply sending a ray into a geometry data structure and returning the hit results. What do you mean by “real” raytracing. If you mean that the entire scene has to be raytraced then I’m sure you would agree that’s a pretty silly thing to say in 2019.

So you admit or are saying Nvidia is not doing raytracing?
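For readers who want the quoted definition ("sending a ray into a geometry data structure and returning the hit results") in concrete terms, here is a minimal Python sketch. It is an illustration only: the scene is a plain list of spheres rather than the acceleration structure a real engine would use, and the names `intersect_sphere` and `trace` are made up for this example, not taken from any engine or API discussed in the thread.

```python
import math

def intersect_sphere(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss."""
    # Vector from the sphere center to the ray origin.
    oc = [o - c for o, c in zip(origin, center)]
    # Coefficients of the quadratic |origin + t*direction - center|^2 = radius^2.
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # The ray misses the sphere entirely.
    sqrt_disc = math.sqrt(disc)
    # Try the nearer root first; ignore hits behind (or at) the ray origin.
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t > 1e-9:
            return t
    return None

def trace(origin, direction, spheres):
    """Return (distance, sphere_index) for the closest hit, or None if nothing is hit."""
    closest = None
    # Hypothetical scene: a flat list of (center, radius) pairs, tested one by one.
    for index, (center, radius) in enumerate(spheres):
        t = intersect_sphere(origin, direction, center, radius)
        if t is not None and (closest is None or t < closest[0]):
            closest = (t, index)
    return closest

scene = [((0.0, 0.0, 5.0), 1.0), ((0.0, 0.0, 10.0), 2.0)]
hit = trace((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene)
```

Everything else people argue about here (shadows, reflections, GI) is a question of how many of these queries you can afford per frame and what you do with the hit results.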

Raytracing simulates roughly how light or photons react in a given scene with objects and materials. Light can be absorbed, bounced, bent (refracted), colored, passed through, blocked, etc. In BF5, are the shadows the result of photon calculations from simulated light? No, it uses rasterized light sources (not rays or photons) creating lightmaps/shadowmaps/... to darken the area, with a few other tricks. Are the objects themselves lit by raytracing to figure out the intensity/color etc.? Nope - HDRI maps, pre-rendered baked-in textures and hardware lights are used. Is refraction computed from how light truly bends through materials, or with much simpler math in a bumpmap/normal map shader? It uses shaders and not raytracing. Maybe the best question: what is actually being done in BF5 with raytracing? Basically, a ray goes from the viewpoint to the surface and, if the surface is reflective, goes backwards to find what objects would be visible, getting those texture coordinates to render those objects, rasterized and projected onto the reflective surface with other tricks. Even the reflection is almost 100% rasterized and does not use photon mapping to figure out the light of the pixel - raytracing is only used for finding the coordinates of what object/texture/rasterized lighting to show on the surface. Look at good raytraced reflections and compare them to BFV and/or the Crytek Demo and the quality difference is there. Still probably good enough for gaming.
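The "viewpoint to surface, then onward from the reflective surface" step described above comes down to mirroring the view direction about the surface normal, r = d - 2(d·n)n. The Python sketch below shows only that step, assuming a unit-length normal; `reflect`, `reflection_ray` and the small `offset` value are names and choices made up for this example, and nothing here is taken from BF5 or the Crytek demo.

```python
def reflect(direction, normal):
    """Mirror `direction` about a unit-length `normal`: r = d - 2(d.n)n."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(direction, normal))

def reflection_ray(hit_point, view_direction, normal, offset=1e-4):
    """Build the secondary ray that leaves a reflective surface.

    The origin is nudged along the normal (an assumed, commonly used trick)
    so the new ray does not immediately re-hit the surface it starts on.
    """
    direction = reflect(view_direction, normal)
    origin = tuple(p + offset * n for p, n in zip(hit_point, normal))
    return origin, direction

# A ray travelling down and to the right hits a floor whose normal points up.
origin, direction = reflection_ray((0.0, 0.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
```

Whatever that secondary ray hits is what shows up in the reflection; how the hit is then shaded (rasterized lighting, textures, further bounces) is where implementations differ.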

Jensen Huang was right that raytracing does just work in properly lighting up a whole scene - except he forgot to mention RTX does not do raytracing, it is very much hybrid with 99%+ (my guess) rasterization with some raytracing elements for calculation.

Raytracing itself is also only a simulation or rough approximation. Real life environments have a virtually infinite number of photons that bounce around, get absorbed, reflected etc. Shadows are not maps of objects but areas where there are fewer photons lighting up that area. Even millions of photons per pixel would not be enough to reproduce real world lighting - but it would definitely be close enough for humans not to be able to tell the difference, which for most purposes is good enough - definitely for gaming.

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

So basically where we at now with this thread is "Nvidia doesn't really do ray tracing and whatever it is they're doing AMD can't do it."

No, my take, Nvidia redefined in a broad general way what real time Raytracing means which technically is so far from actual traditional raytracing methods - perverted maybe the better way to look at it. It would be similar if I gave you a glass of distilled water, put one drop of orange juice in it and tell you it is orange juice.

Can it lead to better simulation, graphics and new techniques - yes. Some of us, maybe a few, just find the whole way Nvidia presented it as just plain dishonest: selling something at a much higher price which technically cannot do what was described. Doesn't matter because AMD, Microsoft and probably Sony will just go along with it for marketing purposes and profit. Game developers as well. As for AMD - they can do those effects now with their current hardware lineup so really nothing too special Nvidia brought to the table - the Crytek Demo reflections show this. Interesting is how much faster a lower shader count RDNA card, the 5700 XT, does over a Vega. Putting matrix type math into fixed hardware can speed things up; it will be interesting to see what AMD does and what future Nvidia methods look like. Will Nvidia redo the CUDA cores and incorporate more for RT? AMD? It is definitely worthwhile new tech.

What do you think orange juice really is?

You accuse nvidia of doing fake RT yet claim AMD can do (even "faker") RT effects.

Yeah that makes sense...

Sampling 1000 rays (or whatever low count Nvidia is using) per frame is not true ray tracing. If you don’t understand that, I suggest you go back and do some research.

Technically, even if you just trace 1 ray, it's still raytracing

Yeah just ask Jensen.

nvidia - redefining ray tracing from millions of rays to a couple. Legends in their own minds.

crazycrave

[H]ard|Gawd

- Joined

- Mar 31, 2016

- Messages

- 1,878

Hawaii does DX 12 games on hardware from 2013 if you want to know who builds for the long run .

Yeah just ask Jensen.

nvidia - redefining ray tracing from millions of rays to a couple. Legends in their own minds.

Actually NVidia is allowing millions of rays, but the reality is that even millions is not enough without optimizations. Anyone moaning about NVidia not doing pure 100% ray tracing without any optimizations is clueless.

Without optimization, you could need thousands of rays per pixels, that would be billions/frame. That is really never going to be possible in our foreseeable future, where we keep using technology instead of magic.
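To put rough numbers on "thousands of rays per pixel would be billions per frame", here is a small Python sketch of the arithmetic. The resolution (1440p, as mentioned earlier in the thread), the 60 fps target and the 10 billion rays per second budget are illustrative assumptions, not measurements of any particular card.

```python
def rays_per_frame(width, height, rays_per_pixel):
    """Total rays needed for one frame at a given per-pixel ray count."""
    return width * height * rays_per_pixel

def rays_per_pixel_for_budget(width, height, fps, budget_rays_per_second):
    """How many rays each pixel can get per frame from a fixed rays/second budget."""
    return budget_rays_per_second / (width * height * fps)

# Assumed scenario: 2560x1440 with 1000 rays per pixel.
brute_force = rays_per_frame(2560, 1440, 1000)   # 3,686,400,000 rays per frame

# Assumed budget: 10 billion rays per second, spread over 60 frames per second.
affordable = rays_per_pixel_for_budget(2560, 1440, 60, 10_000_000_000)  # roughly 45
```

Under those assumptions the brute-force approach needs about 3.7 billion rays for a single frame, while the budget works out to a few dozen rays per pixel per frame, which is why the choice of where to spend them matters so much.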

Back in Reality, we need every optimization possible to do Real Time Ray tracing.

NVidia is NOT doing "fake" Ray Tracing. They are accelerating standard RT algorithms, and providing the APIs, that lets developers potentially put millions of rays into a frame. But when you have millions of pixels, millions of rays is still not a lot.

The optimization work mainly falls to the developers, who determine how to use that ray budget that NVidia enables.

Optimizations are not faking it. They are choosing where to spend your budget. You may replace traditional shadows by tracing lights sources out to cast shadows. You can replace screen space reflections, by tracing rays from the reflective surfaces to get more accurate reflections, many effects can be replaced by RT effects, but in modern games at modern resolution, you won't have the ray budget to replace everything with RT effects.

It's too bad that only NVidia has RT HW so far, as it seems to make some anti-NVidia people come up with outlandish theories about what NVidia is "doing wrong" with Ray Tracing.

A small sample is not true ray tracing. That is my point. Passing it off as true ray tracing or people even calling it ray tracing is laughable. It’s a misrepresentation, and to make an argument to the contrary shows a deceptive point of view at the most and a naive one at the least. Your “optimizations” are a hack because of the architecture's failure - it cannot legitimately produce enough rays. Even by factors of 1000.

before Jensen, nvidia and this bullshit - ray tracing was a well understood term.

after Jensen and nvidia. - now we have to qualify every fuking time we use the term ray tracing because of some asshole company has bastardized the term for profit.

I just wish Jensen had called it something else instead of ripping off a mainstream term. It’s like calling a water sprinkler a thunderstorm. Stupid analogy but it’s representative.

It could have been Amd, intel, whoever. Doesn’t matter. Disingenuous and deceiving.

OK, tell me what is being raytraced in BFV, Metro?

You accuse nvidia of doing fake RT yet claim AMD can do (even "faker") RT effects.

Yeah that makes sense...

Both fake so there, neither really doing much ray tracing but it is a start. The reflections in the Crytek Demo are, by Nvidia's definition, real time ray tracing, and AMD does not need to have dedicated hardware that sits idle most of the time in virtually every game made. Anti-Nvidia people lol, how about anti misleading, anti promising more than delivered, just calling it out. If Nvidia has a worthy product for the money I buy normally, but some of their methods make that harder to accept.

If Nvidia hardware could do very effective ray tracing then VRay using RT cores should really be 10x-30x faster vice 1.4x faster with RT on compared to off with RTX cards. To be clear, cool tech just needs much more work at this stage.

A small sample is not true ray tracing. That is my point. Passing it off as true ray tracing or people even calling it ray tracing is laughable. It’s a misrepresentation and to make an argument to the contrary shows a deceptive at the most and naive point of view in the least. Your “optimizations” are a hack because of the architectures failure - as it cannot legitimately produce enough rays. Even by factors of 1000.

before Jensen, nvidia and this bullshit - ray tracing was a well understood term.

after Jensen and nvidia. - now we have to qualify every fuking time we use the term ray tracing because of some asshole company has bastardized the term for profit.

I just wish Jensen had called it something else instead of ripping off a mainstream term. It’s like calling a water sprinkler a thunderstorm. Stupid analogy but it’s representative.

It could have been Amd, intel, whoever. Doesn’t matter. Disingenuous and deceiving.

This is True Ray Tracing, there is no change in understanding. You don't see any of these complaints from industry players versed in the art. They are pretty much universally excited to see more acceleration.

You tend to just see these "fake/untrue" claims from people with no background, and an Axe to grind.

RTX is also being used to accelerate ray tracing render programs like Blender; it's using NVidia's purpose-built HW that improves the ray budgets to render RT scenes faster. Is Blender "fake" Ray Tracing because it can now use RTX HW to accelerate its work?

RTX is a Ray Tracing tool, that provides hardware to accelerate the task of doing real ray tracing.

You can use it to render frames in Blender faster, you can do full scene RT in a much simpler game like Quake 2, or you can spend that budget to add real RT effects to modern games.

It's just an API to boost Real/True Ray tracing.

A finite ray budget, doesn't render it false.

see they did it to you. “Rtx is a api to boost real/true ray tracing”.

Really? Laughable.

True or real full ray tracing does not require rasterization.

See there we go again, qualifying it for your bastardized rasterization/ray tracing terminology.

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

I personally cant wait for some more older games to get RT(X).

So basically where we at now with this thread is "Nvidia doesn't really do ray tracing"

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

I'm just gonna call all RT RTX until something else comes along. Much easier that way.

The DirectX implementation is DXR.

So basically where we at now with this thread is "Nvidia doesn't really do ray tracing"

Maybe the axe grinders are.

Where I am at, is where I stated, NVidia gives you a Ray budget. That budget can be used to render ray traced projects in blender, or it can be used to render some amount of RT effects in games, up to, and including all the effect if the game resolution/poly count is low enough.

At least you’ve backed off from the “real ray tracing” marketing bs to a “ray tracing budget” LoL

Small budget, but great there are things a few ray casts can do to enhance rasterization. And yes, Amd will be doing the exact same thing shortly with DXR as nvidia does with RTX.

So you admit or are saying Nvidia is not doing raytracing?

Nope, not sure how you came to that conclusion.

For folks who think raytracing is "fake" unless every pixel is raytraced with multiple bounces I suggest you come back in a few decades. The rest of us will just have to make do until then with raytraced shadows, reflections, ambient occlusion and GI.

Maybe once AMD also supports RT people won't find it necessary to come up with arbitrary definitions of raytracing just to hate on Nvidia. At least one can hope.

The fact remains that using a few rays to enhance rasterization is not a ray traced image or ray tracing as it’s been known since its inception in the 70s.

RTX and DXR are hybrid, using a few cast rays to enhance rasterization. How did that suddenly become ray tracing? I already told you how. Nv marketing. If you think that’s hating on nvidia, so be it. I don’t give a fuk.

So we’ve now come from billions of rays and no rasterization to a couple to enhance rasterization and it’s now called the same thing “ray tracing”. That’s not ray tracing. That’s RTX and DXR. If you can’t or don’t want to understand the difference then you are obtuse and ignorant of the definition of ray tracing that has been used for fifty years.
