Just about the only thing I've gotten out of this thread so far is that Linus posted a huge whiny rant complaining about the literal exact thing his video was about. It was a six-hour delay, and he had already shot and edited his AMD review. He could easily have done a single head-to-head review video and simply released it 6 hours later than the rant.

I don't care much for Linus, but he had every right to call Intel out on their BS stunt. It was actually quite refreshing in this corporate suck-up culture.

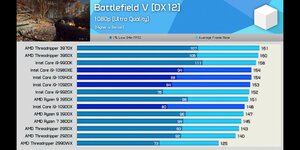

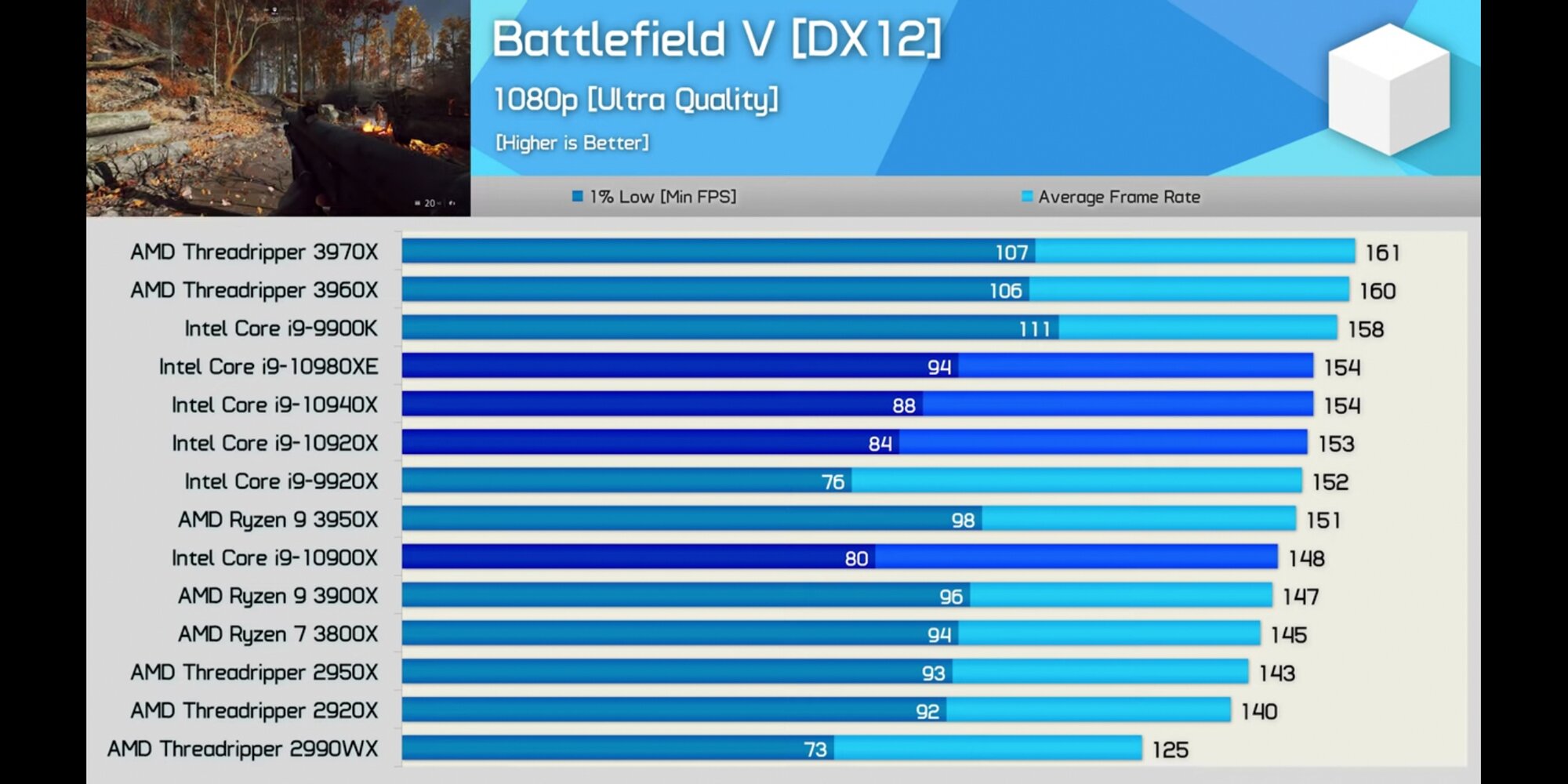

That's certainly... a take.

Oh right, I also learned that Intel's new stuff beats AMD's new stuff in some ways while losing to it in others, and many comparisons could actually go either way depending on the allegiance of whoever is doing the benchmarking. In other words, the two brands have achieved parity.
