But those patents cause a 30% hit in performance. I personally know this, because I have an RTX 2080 and don't use ray tracing because of the performance hit.
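
To put the 30% figure in frame-time terms, here is a small Python sketch. The 100 fps baseline is a hypothetical number, not a measurement from the thread. Because frame time is the reciprocal of frame rate, losing 30% of your frames per second means each frame takes roughly 43% longer to render.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps


def fps_after_hit(base_fps: float, hit: float) -> float:
    """Frame rate left after losing `hit` of it (0.30 means a 30% hit)."""
    return base_fps * (1.0 - hit)


base = 100.0                    # hypothetical frame rate with ray tracing off
rt = fps_after_hit(base, 0.30)  # frame rate with ray tracing on
extra = frame_time_ms(rt) / frame_time_ms(base) - 1.0

print(f"{base:.0f} fps -> {rt:.0f} fps")
print(f"frame time {frame_time_ms(base):.1f} ms -> {frame_time_ms(rt):.1f} ms (+{extra:.0%})")
```

The same reciprocal explains the 1440p/100+ fps target mentioned later: 100 fps leaves a budget of 10 ms per frame.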

What’s an acceptable performance hit for ray tracing?

This is the same thing that happened when shadows were introduced, except the GPU manufacturers didn't make you pay an extra $200 for it.

Might as well go back to selling PhysX co-processors if the feature negates performance upgrade..

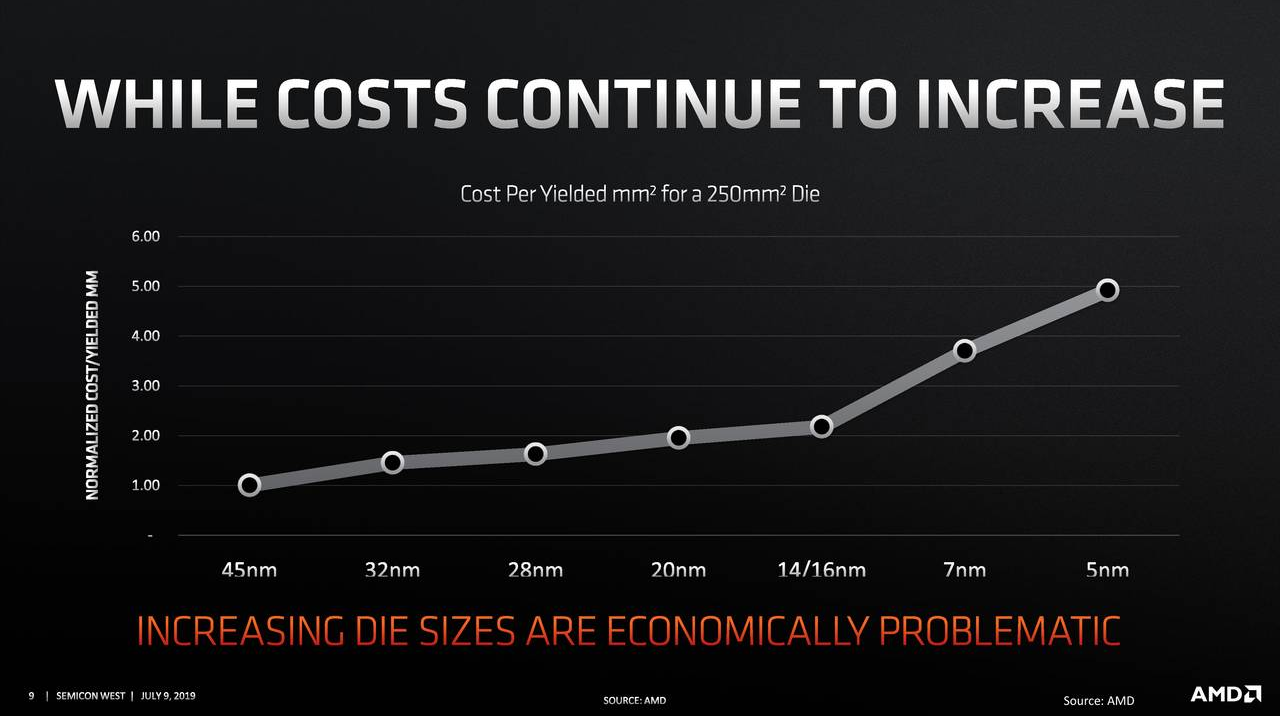

I think what we saw at Computex is the entire gaming industry standing firmly behind AMD and its RDNA architecture. This isn't a tit-for-tat thing for me, as I buy many cards a year, and from whomever. I am speaking about now, until 4K @ 120Hz gaming is truly viable. And having seen the RDNA whitepapers, I think RDNA is the way to go. AMD's new design allows for more parallelism and simultaneous execution...

If what is out there is true, then Infinity Fabric 2.0+ will play a huge part in unifying GPU cache, and you could just order up however many CUs you want on a project. Nifty stuff.

That was a lengthy non-reply...

Wait, are you attacking me?

I clearly stated my standing: "if the feature negates performance upgrade.."

I have an RTX 2080, and if I turn the new features on, my card performs worse than the card I upgraded from. At $800, that is just a marketing gimmick. We all know it, and every owner of an RTX 2080 knows it, which includes many of my 50-year-old friends...

Except RT on RTX is not really RT: it's a whole bunch of rasterization with a simple element of RT added in, tanking performance in many cases for little benefit (more detrimental than beneficial, in other words). The exception would be Quake II, but that game has so few objects, a low polygon count, etc., and if that is what gaming with RTX has to look like, I think I will pass; give me higher-quality rasterization instead. RTX is nowhere near the point of being really usable overall; it's an experiment and a tacked-on feature. If developers can extract more performance and make it shine, that would be wonderful. Quantifying how much it needs to improve to become a real game changer is another matter; my guess, and it is a guess, is 6x-8x its current ability.

I don't know if one could just build highly efficient single-purpose RT, i.e. hardware that does only one aspect, such as shadows, reflections, or GI. For example, the next console has very fast RT for reflections and that is it, or maybe two things that are very fast, rather than trying to do every aspect of RT. That might be a much better approach.

You know that makes no sense right? That's like saying tessellation isn't tessellation but a whole bunch of rasterization with a simple element of tessellation. See how silly that sounds? Completely agree that the IQ improvement has to be worth the performance hit and that's been inconsistent with the few RT titles so far.

RT is just tracing a ray through a scene of geometric objects. Whether you use those rays to render shadows, reflections or GI is up to the developer. The hardware doesn't care what the rays are used for. That's the beauty of RT. It's a very flexible and simple algorithm compared to the crazy hacks that are required with rasterization. The upside is less headache for developers with the downside of being murder on hardware.
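
To make the "hardware doesn't care what the rays are used for" point concrete, here is a toy Python sketch (spheres only, all names hypothetical, nothing like how a GPU actually does it). A single closest-hit query, `trace`, answers both a shadow question and a reflection question. Real RT hardware such as Turing's RT cores accelerates BVH traversal and ray-triangle tests against scene geometry, which this brute-force loop over spheres does not model.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


@dataclass
class Sphere:
    center: Vec
    radius: float


def intersect(origin: Vec, direction: Vec, sphere: Sphere) -> Optional[float]:
    """Distance to the nearest hit in front of `origin` along a unit-length ray, or None."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # small epsilon so a ray never re-hits the surface it starts on
            return t
    return None


def trace(origin: Vec, direction: Vec, scene: List[Sphere]) -> Optional[Tuple[float, Sphere]]:
    """The one query every effect below is built on: the closest hit along a ray."""
    best = None
    for sphere in scene:
        t = intersect(origin, direction, sphere)
        if t is not None and (best is None or t < best[0]):
            best = (t, sphere)
    return best


def in_shadow(point: Vec, light: Vec, scene: List[Sphere]) -> bool:
    """Shadow ray: is anything between `point` and the light?"""
    to_light = sub(light, point)
    hit = trace(point, normalize(to_light), scene)
    return hit is not None and hit[0] < math.sqrt(dot(to_light, to_light))


def reflect(d: Vec, n: Vec) -> Vec:
    """Mirror direction d about unit normal n."""
    return sub(d, scale(n, 2.0 * dot(d, n)))


scene = [Sphere((0.0, 0.0, 5.0), 1.0), Sphere((0.0, 0.0, -5.0), 1.0)]

# Shadow queries from the origin toward two hypothetical light positions.
print(in_shadow((0.0, 0.0, 0.0), (0.0, 0.0, 10.0), scene))  # True: the first sphere blocks it
print(in_shadow((0.0, 0.0, 0.0), (10.0, 0.0, 0.0), scene))  # False: nothing in the way

# Reflection query: the primary ray hits the first sphere, then bounces back.
d = (0.0, 0.0, 1.0)
t, s = trace((0.0, 0.0, 0.0), d, scene)
p = scale(d, t)
n = normalize(sub(p, s.center))
bounce = trace(p, reflect(d, n), scene)
print(f"reflected ray travels {bounce[0]} units to the sphere at {bounce[1].center}")
```

Shadows and reflections differ only in where the ray starts and which way it points; the expensive part, `trace`, is identical. Effects like GI roughly just fire many more such rays per pixel, which is where the cost piles up.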

There is a long history of cutting edge features being murder on frame rates and raytracing is one of the most intensive features released in a long time.

Comparing performance of RT vs no-RT is pointless as RT will always be much slower. The only thing that matters now is how quickly devs can extract the IQ benefits of real-time RT hardware. With both next generation consoles adopting RT next year we’re finally set up for the first true leap in IQ in many years.

Going to be blunt, but everything you are saying is obvious.

It seems you are avoiding the actual conversation, which is that the CURRENT RTX cards don't do anyone justice and nobody uses these ray tracing features. These are facts, and if you owned an RTX 2080 you would understand.

You keep going on about the future, when we already know the direction ray tracing is heading, because we have been watching it come at us for 25+ years. We know an awful lot about ray tracing and hardware that can do real-time ray tracing. So again, WE DO... know the direction ray tracing will be taking in games. We know quite a bit about DirectML and DirectX Raytracing (DXR) and what other open standards can do.

You are not educating anyone with your points, other than to say "In the future.."

Again, most here are talking about now, today. Many (like me) already own RTX cards and don't use the features you are so highly touting. And yes, in the future I can see them being a big thing, but not with Turing in your rig. Nobody is going to spend $1,400+ on a 2080 Ti to play Battlefield V at 1080p @ 90 FPS... lol. Nvidia's ray tracing cards are feeble. Jensen jumped the gun, trying to rekindle the PhysX days, but the industry already has a ray tracing solution in place. Those cards are coming in the next 6 months.

Cool opinions...

... and nobody uses these ray tracing features. These are facts ...

Navi is a very poor upgrade from Vega..

Yes that is why it is an upgrade from Polaris. Never meant to be a high-end card.

Ah... I see the problem now... so when AMD gets ray tracing... it will no longer be a "gimmick"... the grapes are indeed sour.

It's a gimmick to me until I can push games @ 1440p (my current setup) with good fps (say 100+).

That is effing funny...I just watched this video about Cyrix yesterday (going down memory lane):

One of their selling points...was 13 FPS in Quake (some 16 min in, linked to it):

Kids these days...so spoiled they have no respect (or clue) about how far we have come...*sigh*

(Really, I cannot take such statements seriously)

Uh-huh. My very first machine was a Tandy. I am no kid; I had a 486SX, 486DX4, etc... It's not about being spoiled, it's realizing I can get more and not settling for less.

Rather, you're missing out... because you set the bar too high.

My first Computer was the Commodore 64 (I had an Atari console before that, but that was no computer)...

This was the first computer game I ever played:

Having that foundation... I cannot take some people's comments about "unplayable" seriously...

Yeah, but did you start out using the tape drive? Half an hour of loading, and then flip the tape over for the other half hour of error verification.

At first I did... but then I got an after-school job... and saved up for a 5¼" 1541 floppy drive... oh, the speed!

Not missing out on anything. Generally speaking, RT or not, I won't play a game until I can run it smoothly on max settings; I just started working on TW3. I just don't enjoy playing a game that runs choppy.

If the longer frame times can be contained, you can get smooth gameplay, just not high FPS, which is fine for a lot of games where the extra fidelity is desirable.
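
A rough Python sketch of the point about frame times, using two hypothetical ten-frame traces (made-up numbers, not benchmarks). The `summarize` helper is illustrative only; it reports average FPS alongside the worst frame and the spread of frame times, since the average alone hides stutter.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def summarize(frame_times_ms: List[float]) -> Tuple[float, float, float]:
    """Return (average fps, worst frame time in ms, frame-time std dev in ms)."""
    avg_fps = 1000.0 / mean(frame_times_ms)
    return avg_fps, max(frame_times_ms), pstdev(frame_times_ms)


# Hypothetical traces of ten frames each, in milliseconds.
steady = [20.0] * 10               # a locked 50 fps: every frame takes 20 ms
spiky = [12.0] * 8 + [40.0, 48.0]  # mostly fast, with two long hitches

for name, trace in (("steady", steady), ("spiky", spiky)):
    fps, worst, jitter = summarize(trace)
    print(f"{name}: {fps:.1f} fps average, worst frame {worst:.0f} ms, jitter {jitter:.1f} ms")
```

The spiky trace averages more frames per second (about 54 vs 50) yet would feel worse, because its worst frame takes more than twice as long as any frame in the steady one.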