blackmomba

Gawd

- Joined

- Dec 5, 2018

- Messages

- 774

Yeah just the number 1 and 2 most popular gaming engines...

For mobile games and games no one really plays.. ok

Hardly significant


Yeah just the number 1 and 2 most popular gaming engines...

Clueless. They are the biggest engines and are used in many games. EA is also behind it with Frostbite, as is Square Enix.

For mobile games and games no one really plays.. ok

Hardly significant

Do you realize that even the RTX 2060 can calculate 5 gigarays/s, which is ten times the number of ray intersections needed for 4K@60Hz if all it calculated was one primary ray per pixel and it did not have to run any shaders for each intersection? Note: this figure is supposed to be from real workloads. Even if we apply a bullshit-correction factor, it means calculating ray intersections is not an issue for even the weakest RTX card.

Clearly some kind of absurd misinterpretation on your part.

Higher RT performance will require more transistors, thus more die area, and thus a higher price.

Trying to devote the same amount of die area to RT performance across a product line, would make low end cards too expensive, and high end cards under-powered.

RT performance will be segmented just like Raster performance is. It really couldn't be otherwise.

RTX, if anything, was an excuse for a price increase and not the reason.

1080 Ti vs 2080 Ti is, or at least was, a 40% increase in price. Pretty sure you can just look at the prices of both cards and see it is 40%, give or take. I can only guess that 980 Ti -> 1080 Ti was more similar in price; while the 1080 Ti was more expensive for me, it was not by a lot. I don't know what other reason they have to nearly double the price of the same tier of graphics card in one generation; it seems rather special. At best I can get a 2080 Ti for 1,400-1,500 USD as the lowest price in Norway... importing is pointless because the government screws you over on tax and processing. That is pretty much the same as at release, unfortunately.

Let's see what excuse AMD will have for Navi pricing ^_^

Apparently AMD wants to shed its "Value" label tho, at least in the CPU space.

https://www.techpowerup.com/256340/...alternative-x570-motherboards-to-be-expensive

Perhaps AMD GPU prices won't be far off from Nvidia's either.

Navi situation is just hilarious to me because AMD fanboys were the loudest ones to criticize Nvidia for their RTX lineup pricing and so far it seems AMD will do exactly the same move but with less excuses... or more precisely with more sad excuses

They won't need one; plenty of people on these forums are now attacking anybody who wants cheaper products because "businesses exist to make money" and "prices don't live in a vacuum", etc. Bitcoin bought both Nvidia and AMD a year or more of boosted prices and revenue, and I suppose the rise of e-sports over the past 4-5 years has as well. These defensive quotes spread through social media and forums like a virus and somehow manage to normalize exorbitant pricing.

Navi situation is just hilarious to me because AMD fanboys were the loudest ones to criticize Nvidia for their RTX lineup pricing and so far it seems AMD will do exactly the same move but with less excuses... or more precisely with more sad excuses

Do you realize that even the RTX 2060 can calculate 5 gigarays/s, which is ten times the number of ray intersections needed for 4K@60Hz if all it calculated was one primary ray per pixel and it did not have to run any shaders for each intersection? Note: this figure is supposed to be from real workloads. Even if we apply a bullshit-correction factor, it means calculating ray intersections is not an issue for even the weakest RTX card.
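For anyone who wants to check the "ten times" figure, here is a rough back-of-the-envelope sketch. It takes the 5 gigarays/s number quoted in the post at face value (it is a quoted figure, not a measurement) and counts only one primary ray per pixel with no shading cost. The function and constant names are made up for illustration.

```python
# Assumption: 5 gigarays/s is the figure quoted in the post for the RTX 2060.
QUOTED_RAYS_PER_SECOND = 5e9


def primary_rays_per_second(width, height, fps, rays_per_pixel=1):
    """Rays needed per second when every pixel casts a fixed number of primary rays."""
    return width * height * fps * rays_per_pixel


# 4K at 60 Hz, one primary ray per pixel, no secondary rays and no shading cost.
needed_4k60 = primary_rays_per_second(3840, 2160, 60)
headroom = QUOTED_RAYS_PER_SECOND / needed_4k60

print(f"4K@60Hz needs about {needed_4k60 / 1e9:.2f} gigarays/s")
print(f"Quoted throughput is about {headroom:.1f}x that")
```

That works out to roughly 0.5 gigarays/s needed, so the quoted figure leaves about a 10x margin for primary rays alone. It says nothing about secondary rays or the shader work per hit, which is what the rest of the argument is about.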

Isn't that how capitalism works though? That's not AMD being greedy or evil, that's AMD being realistic about being a publicly traded company.

Ideally, if AMD can compete, then as nVidia loses sales they will lower prices, AMD will follow to match, and you'll get in a small price war until someone gets to the point they can't cut the price any further.

And if AMD can't compete, then nVidia stays where they are and continues to sell at high margins. And next-gen nVidia sees if they can raise those margins just a bit further while maintaining sales.

I think the question could answer itself.

There's the GTX 1660 Ti vs RTX 2060. It's too early on the Steam survey to know which has a bigger share (the 2060 is higher on the list for now), but in a few months we'll have a better picture.

Yeah, I'm sure stock holders hate that

Turing SM is not a new design; it is taken from Volta with some tweaks and an RT core added to it.

You do realize that even the 2060 die is very large.

You do realize that NVidia has a proportional amount of RT cores at every level: the Titan RTX has double the shaders of the 2070 and double the RT cores as well. If all the RT cores they needed was the amount in the 2060, they would have stopped there and added more shaders.

Where did this even come from?

But of course, you think you know better than NVidia does when it comes to designing RT GPUs.

I think everyone agrees that adding 50% bloat to the die with next to 0 return for the end user for their money is a brutal design choice. If/when the time is right, I think AMD's implementation will be more elegant and efficient. Maybe some sort of CPU/GPU hybrid approach?

Where did this even come from?

I didn't say they should redesign their chip, but that as it is there is no shortage of ray-intersection processing power to worry about.

Where did I say 2060 RT shader performance was all a card like the 2080 Ti needed? Eh?

If you are claiming 2060 levels of RT HW is all they need, then when they have double this on high end cards it would clearly be a waste, and the implication is that you think you know more about this problem space than NVidia.

Beyond that, we now have Q2 RTX, which is pure ray tracing, and is almost certainly limited by RT HW.

At 1080p, a 2060 squeaks out 60 FPS with global illumination turned down to medium, and a 2080 Ti nearly doubles it.

That isn't shader performance, it's RT HW performance.

That is with an ancient game with ultra low poly counts.

Full Ray tracing on a modern title would almost certainly crush even a Titan RTX.

You are going to see RT HW increase with each generation, to get closer to doing full RT on modern games.

Where did I say 2060 RT shader performance was all a card like the 2080 Ti needed? Eh?

I said RT core performance is more than enough, suggesting that they do not need to increase the RT core count or size any further, at least for the current revision of DXR, which does not have any fixed shader program templates that could be made into an ASIC to save shader performance by not needing to execute them on the shaders.

And what is with your comprehension capabilities? Did I not explain that each ray intersection is handled by a shader program? What do you think a "shader program" is executed on? Quake2 RTX makes the shaders scream on my RTX card just as Stroggs scream dying from my unbeatable blaster... hyper ofc ; )

If you still do not understand how this works, go read some documentation or, to save time, a tutorial like this one https://devblogs.nvidia.com/vulkan-raytracing/ and https://iorange.github.io/

Do your homework and all confusion will leave your mind and you will be enlightened knowing how to shoot rays from your cameras properly =)

Note: It's been some years since I've written any 3d rendering engine, and I did so as a hobby, so do your own research on anything I say.

The problem is in how ray tracing works. Rather than the baked-in static lighting that we're used to with rasterization, ray tracing essentially breaks a scene all the way down to the level of photons. Every single pixel you see is being traced from every light source to the object, right down to the pixel, EVERY SINGLE FRAME. When you move in real life, the angle between the objects you're seeing and the light source (light bulbs, the sun, etc.) is constantly changing, causing a shift in perceived brightness and color. Take your screen resolution (1920x1080) and you'll be at ~2,000,000 pixels; consider how many rays are being cast per pixel each frame, then multiply that by how many frames per second you expect the game to run, and you should now see the problem. That's just scratching the surface, without thinking about how light interacts and scatters, or about moving and static objects casting shadows and reflections. Rasterized games don't have that problem because the lighting doesn't change as you move through a scene, or if it does, it's happening in a pre-calculated (baked-in) manner rather than being computed in real time.
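To put rough numbers on how quickly the ray count grows once you go past one ray per pixel, here is a small sketch. It assumes rays start at the camera (the usual approach in real renderers), that every ray hits something, and that each hit fires one shadow ray toward each light. The bounce and light counts are made-up illustration values, not figures from any game.

```python
def rays_per_pixel(bounces, lights):
    """Rays for one camera path: one ray per path segment, plus one shadow
    ray per light at every hit (simplification: every ray hits something)."""
    path_rays = 1 + bounces           # primary ray plus one ray per bounce
    shadow_rays = path_rays * lights  # one shadow ray per light at each hit
    return path_rays + shadow_rays


def rays_per_second(width, height, fps, bounces=0, lights=0, samples=1):
    """Total rays per second for a given resolution, frame rate and path setup."""
    return width * height * samples * rays_per_pixel(bounces, lights) * fps


pixels_1080p = 1920 * 1080
primary_only = rays_per_second(1920, 1080, 60)
with_secondary = rays_per_second(1920, 1080, 60, bounces=2, lights=2)

print(f"pixels at 1080p: {pixels_1080p:,}")
print(f"primary rays only at 60 fps: {primary_only:,} rays/s")
print(f"2 bounces + 2 lights at 60 fps: {with_secondary:,} rays/s")
```

With these illustrative settings each pixel costs 9 rays instead of 1, so 1080p at 60 fps goes from about 124 million to over 1.1 billion rays per second, before counting multiple samples per pixel or any shading work.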

Hopefully that makes sense. There are many great technical references available online if you want to learn how it actually works.

Nvidia hardware implementation sucks compared to what? We literally have no other RT implementation to compare it against.

and then this fuggin guy just drops the best comment of 2019 possibly the best comment ive ever read on hardocp. i never "got" what this tray-dacing was all about and he just enlightens me like a child.

Not quite true.

I have seen real-time ray tracing done in 3 ways:

RTX hardware - RT cores/Tensor cores on Turing cards doing DXR (NVIDIA)

RT in shaders - Some Turing cards/Pascal cards (NVIDIA)

RT on CPU - Intel has done this for years as a tech concept (Intel)

One player is missing from the "party" though (AMD)


Not sure what the problem is. Look at the title of this thread and then you wonder why I sought a simplified way to explain ray tracing to people who might not understand it? Post wasn't even meant for you.

Apologies if I belittled anybody, but I think you're being impressively overdramatic.

In an actual shipping game...

Not sure what the problem is. Look at the title of this thread and then you wonder why I sought a simplified way to explain ray tracing to people who might not understand it? Post wasn't even meant for you.

Apologies if I belittled anybody, but I think you're being impressively overdramatic.

You're being complimented

[some people haven't drawn the line between the rasterization done in real-time consumer graphics and the type of rendering done for CGI, so when it dawns on them that ray tracing is what they've been staring at for years and that it's moving from being an offline render to a real-time render, they might get over-dramatic]

my comment was ambiguous and unclear when i reviewed it again. it was meant as a 100% compliment, sorry for being a weirdo.

I had always heard that Pixar ray traced their movies, but now that the topic is in the limelight, I'm reading that Toy Story all the way up to Cars were entirely rasterized. I was curious to know how long a single frame from one of those movies would have taken to render in the 90s. When I was in school I recall doing a still image of a few transparent balls (or maybe it was some simple fractal) inside of a cube with one light source, and I remember that taking many hours to render a single frame on a 486 or early Pentium. It would be mind-blowing if a scene as detailed as a modern Pixar movie (or AAA game) could be rendered in real time now.

Ah my fault, guess I missed half the thread and lost the context. Every year that goes by sees me more and more out of touch with what people are saying anymore, especially online... I blame the anxiety.. X_X

Under Galyn Susman and Sharon Calahan, the lighting team orchestrated the final lighting of the shot after animation and shading. Each completed shot then went into rendering on a "render farm" of 117 Sun Microsystems computers that ran 24 hours a day.[36] Finished animation emerged in a steady drip of around three minutes a week.[62] Depending on its complexity, each frame took from 45 minutes up to 30 hours to render. The film required 800,000 machine hours and 114,240 frames of animation in total.[37][57][63] There are over 77 minutes of animation spread across 1,561 shots.[59] A camera team, aided by David DiFrancesco, recorded the frames onto film stock. To fit a 1.85:1 aspect ratio, Toy Story was rendered at a mere 1,536 by 922 pixels, with each of them corresponding to roughly a quarter-inch of screen area on a typical cinema screen
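As a quick sanity check on the quoted figures, the arithmetic can be sketched like this. All inputs come straight from the quote above except the 24 frames per second playback rate, which is an assumption (the standard film rate), and the "whole farm busy the entire time" simplification.

```python
# Figures taken from the quoted passage.
MACHINE_HOURS = 800_000
FRAMES = 114_240
MACHINES = 117
WIDTH, HEIGHT = 1536, 922

# Assumption: standard 24 fps film playback (not stated in the quote).
FILM_FPS = 24

avg_hours_per_frame = MACHINE_HOURS / FRAMES
runtime_minutes = FRAMES / FILM_FPS / 60
# Simplification: all 117 machines busy around the clock for the whole render.
farm_days = MACHINE_HOURS / MACHINES / 24
pixels_per_frame = WIDTH * HEIGHT

print(f"average machine-hours per frame: {avg_hours_per_frame:.1f}")
print(f"runtime at {FILM_FPS} fps: {runtime_minutes:.1f} minutes")
print(f"wall-clock days with the farm fully busy: {farm_days:.0f}")
print(f"pixels per frame: {pixels_per_frame:,}")
```

So the average comes out to roughly 7 machine-hours per frame, which sits inside the quoted range of 45 minutes to 30 hours, and the frame count at 24 fps gives a runtime consistent with the "over 77 minutes" in the quote.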

For mobile games and games no one really plays.. ok

Hardly significant

“Pixar artists already rely on NVIDIA ray tracing, and RTX more than doubles the performance they will see. We’re excited to use RTX on our upcoming films,” said Steve May, CTO of Pixar Animation Studios.

“RTX technology is a game changer for our architectural visualization pipeline,” said Gamma Basra, partner and head of Visualization at Fosters+Partners. “We can iterate options to ascertain the optimal design in real time without the need to wait hours for the render to come back.”

Unity and Unreal?!!

What engines do you think developers use en masse these days? Infinity?

Ok so Unity is geared towards people starting out and indies. I'm not sure that's where we are going to get anything too impressive visually for at least a while

For UE4, sure it's popular, but I don't find myself playing anything that uses it. Lots of second and third tier titles in the list of games that UE4 is used by.

Hopefully it doesn't turn out to be like async compute

How on earth did you come to the notion to compare RT with async compute?

Ok so Unity is geared towards people starting out and indies. I'm not sure that's where we are going to get anything too impressive visually for at least a while

For UE4, sure it's popular, but I don't find myself playing anything that uses it. Lots of second and third tier titles in the list of games that UE4 is used by.

Hopefully it doesn't turn out to be like async compute

So you were wrong.

Then there are two options...happy now?

Thank you for being so concerned about my happiness

Also for agreeing with me that there are no comparable hardware implementations of RT to support the OP.

Where did I mention RT performance of RTX 2060 being most that we will need?

I said RT HW, not shader performance.

What kind of shader work do you think is being done in Q2-RTX??

It's going to be extremely minimal. It's unlikely in the extreme that Q2-RTX is shader limited.

If you are correct, 2060 is the most RT HW we need and NVidia will correct that going forward, by reducing RT HW proportion on high end models.

But I think you have it completely wrong, and RT HW will increase across the board on future models.

Edit:

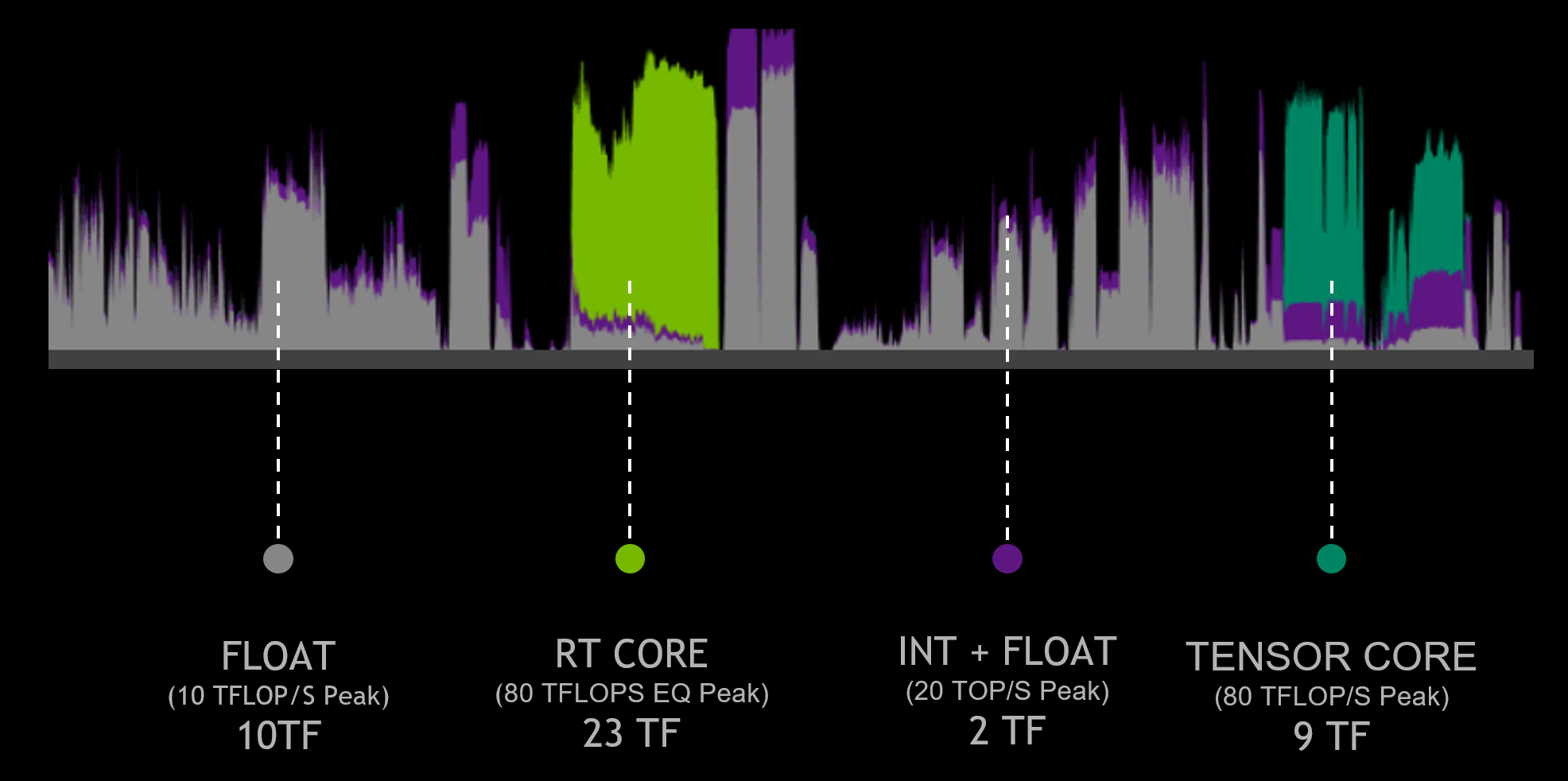

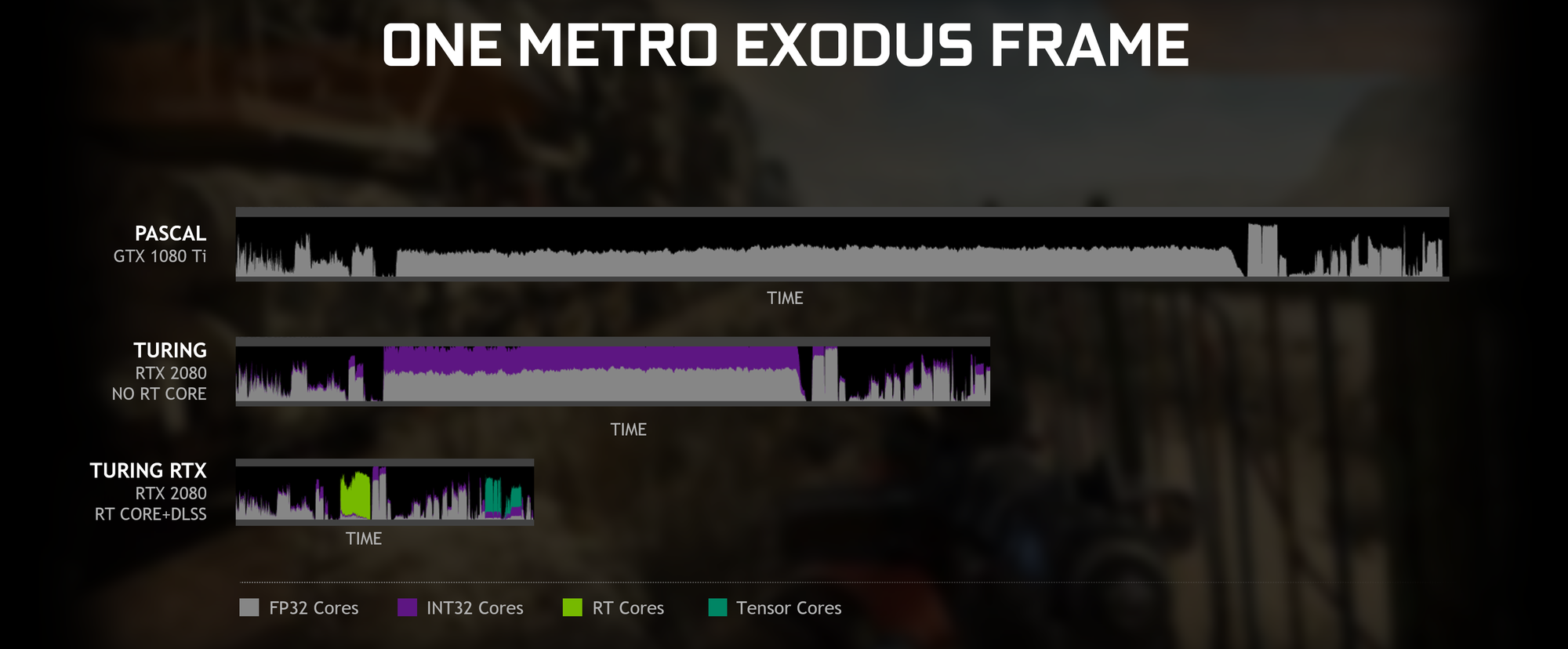

Here is a visual to point out why you are totally off the mark. It's a time/load graph of a Metro Exodus frame.

Note that while the RT cores are busy doing their RT work, FP32 and Int32 cores are extremely lightly loaded. Negligibly so.

Ray Tracing is NOT shader limited.

Where did I mention RT performance of RTX 2060 being most that we will need?

...

Is it not proof of what I was saying all along, that RT hardware is already overpowered?