CAD4466HK · 2[H]4U · Joined Jul 24, 2008 · Messages: 2,686

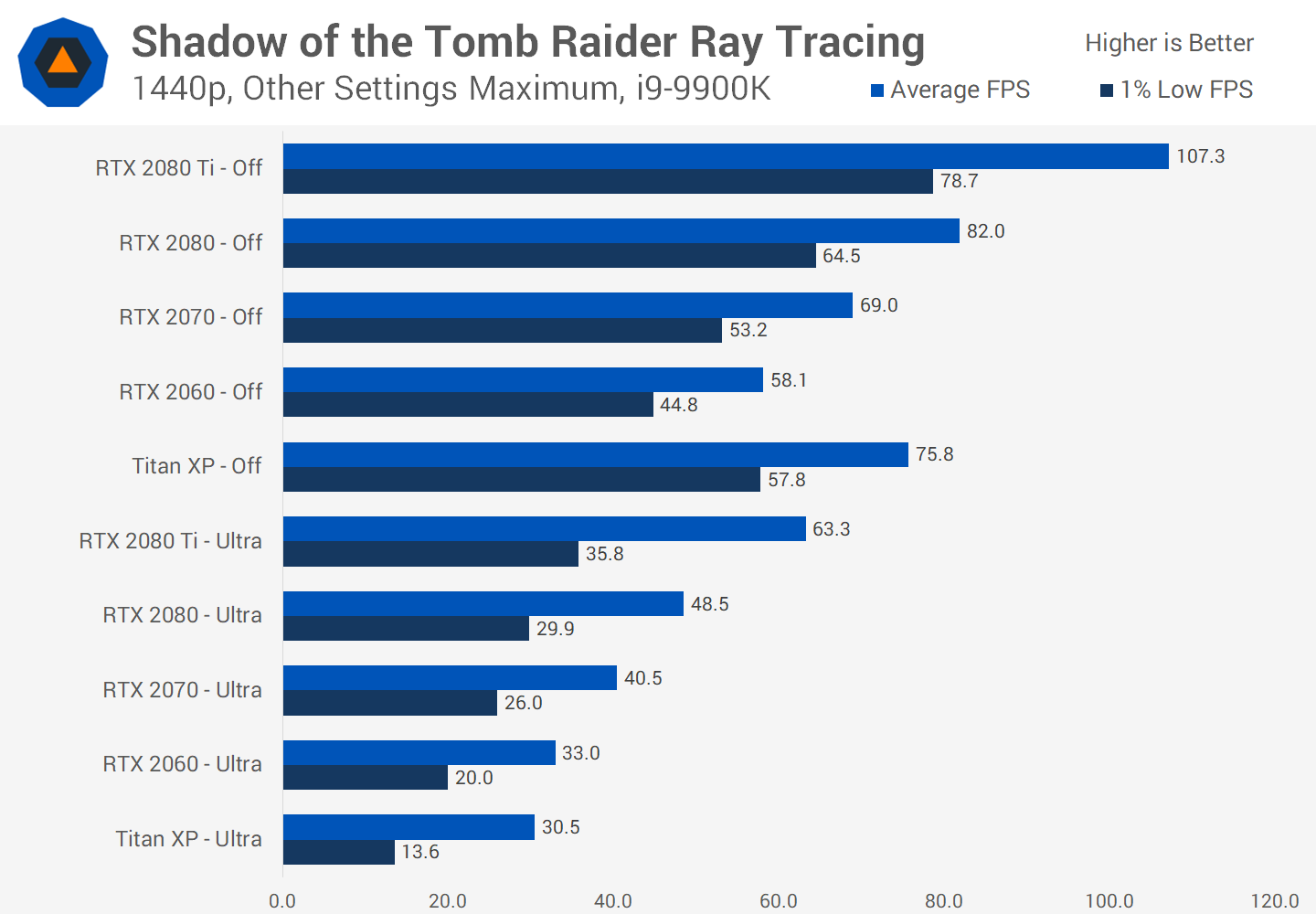

No surprises here. It's slower than an RTX 2060.

https://www.techspot.com/review/1831-ray-tracing-geforce-gtx-benchmarks/

And this begins to illustrate one of the key problems with ray tracing on Pascal: the experience is very inconsistent. This is because there is such a large difference between the capabilities of a card like the Titan X without ray tracing and with ray tracing. So as you move around an environment with varying ray counts, interactions and degrees of ray tracing, the performance of the Titan X fluctuates massively. In areas with little ray tracing, performance is decent but when you’re in an area with lots of shadows, your frame rate will absolutely tank.

