IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003
- Messages: 14,675

I never used to think high refresh made much of a difference, but in recent years I have struggled with eye fatigue even with good glasses. I noticed that after switching to 144Hz and 120Hz monitors I no longer have the issue. I am a productivity user, so I do almost no gaming.

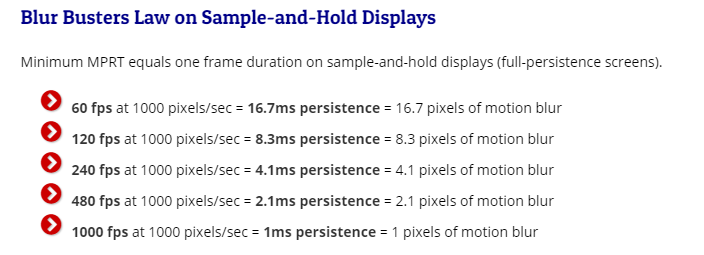

I find that it makes a difference on the desktop. I wouldn't mind pushing to 1000Hz for everything, supposing the panel can keep up.
