TeleFragger

[H]ard|Gawd

- Joined

- Nov 10, 2005

- Messages

- 1,114

I'm getting older and it's starting to frustrate me a bit more, but I still love toying with this..

So this thread is good to keep for archive's sake.. good info here LOL..

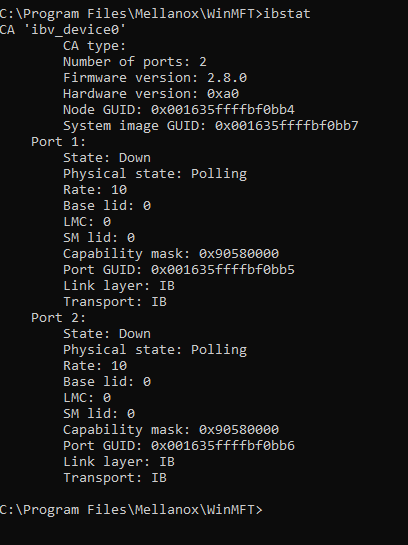

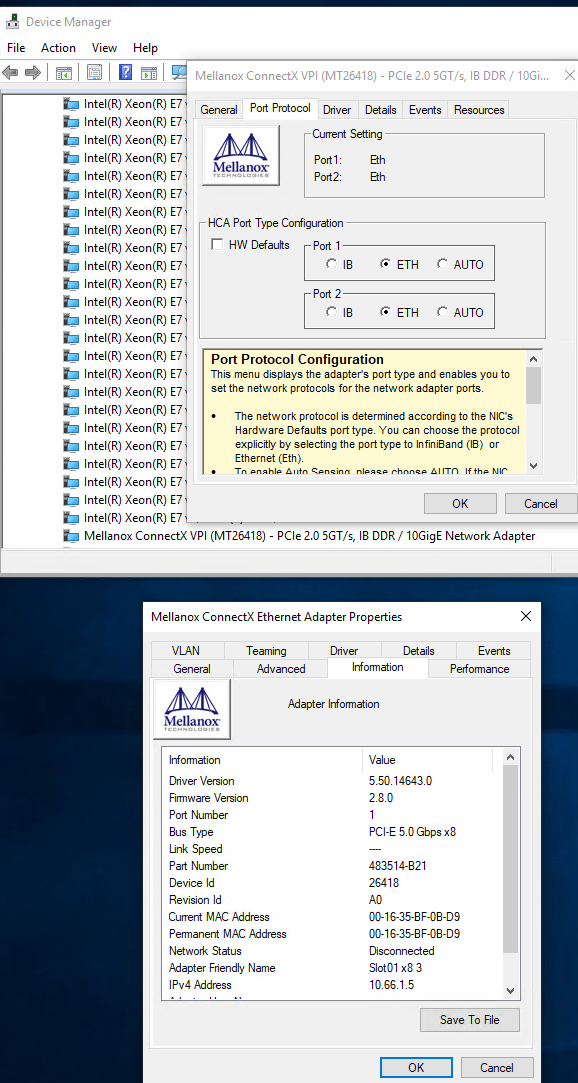

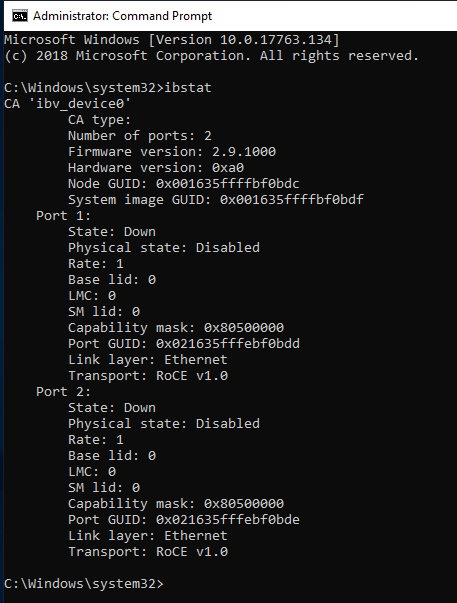

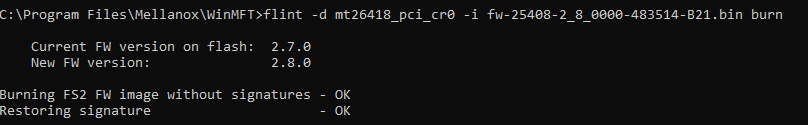

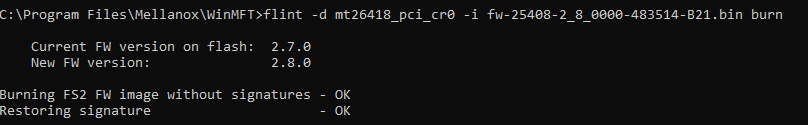

So the post above shows the card working on 2.8 FW and failing on 2.9, which is not HP FW...

I have another card in another machine (the goal is to get 2 machines going with Ethernet)...

So, back to this one first..

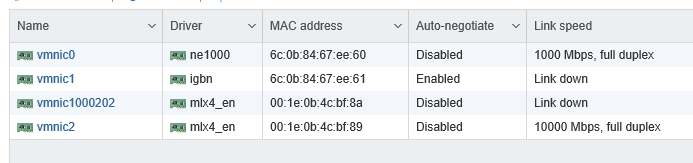

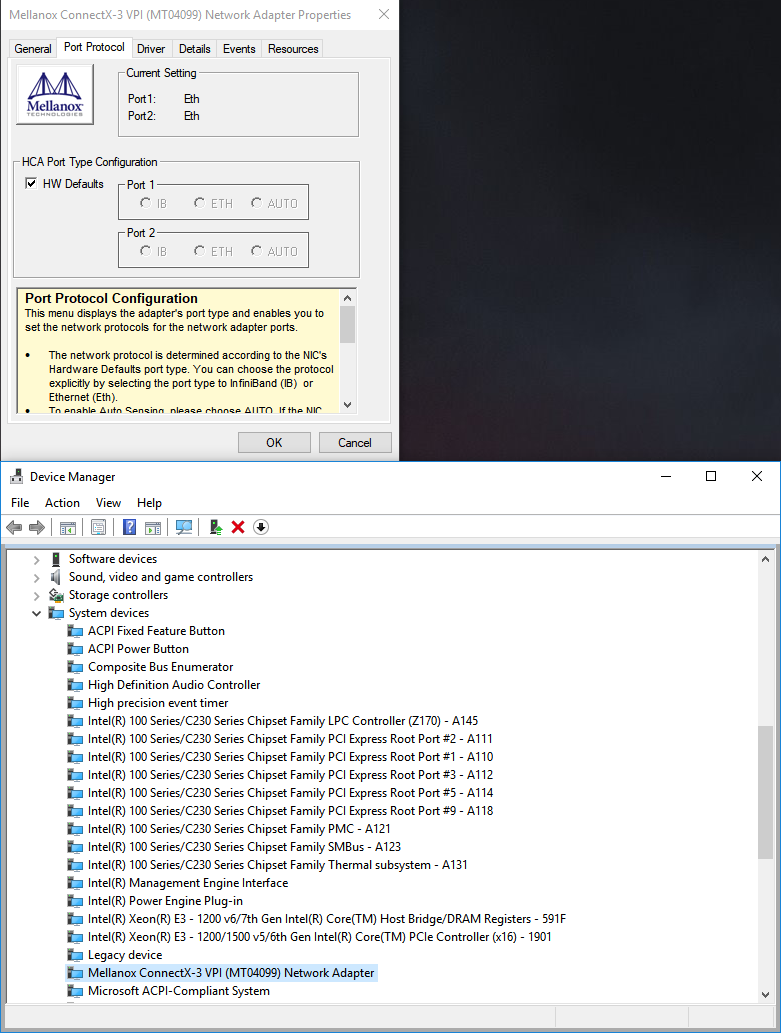

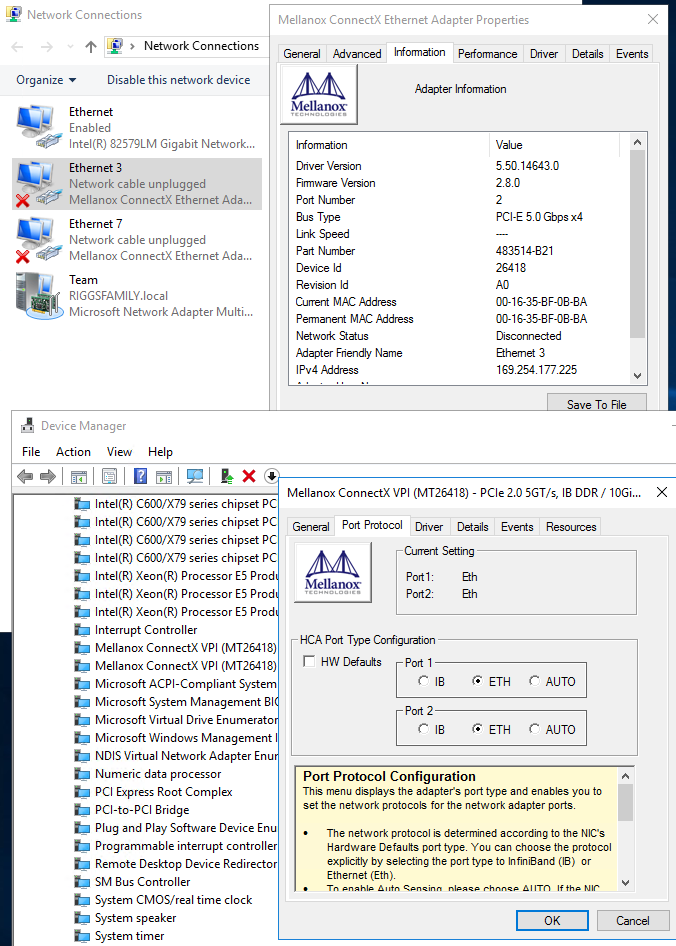

There are 2 NICs shown, like in post 1, but under System devices there is another one... found that out while cruising all over Google!!!!! hah..

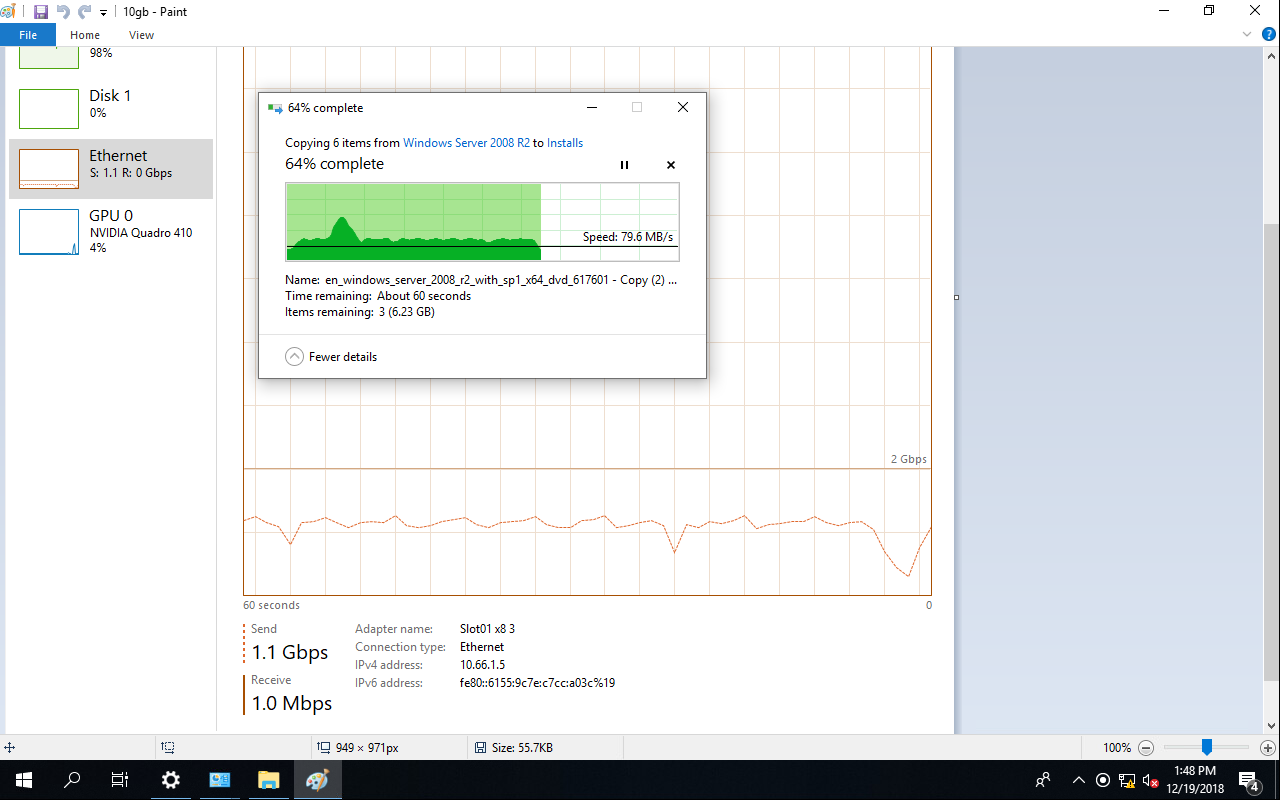

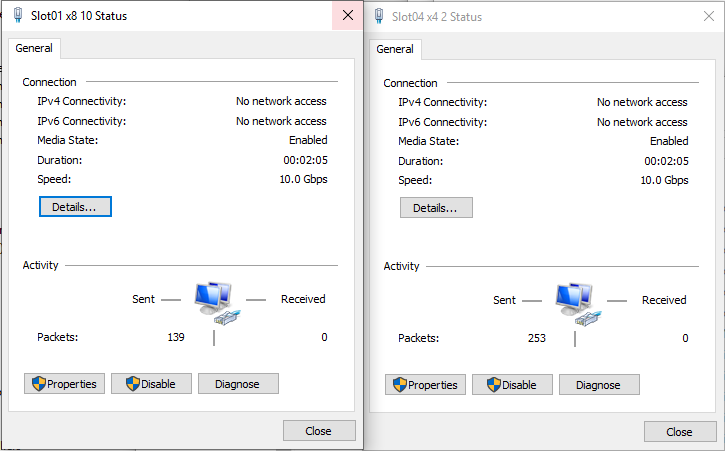

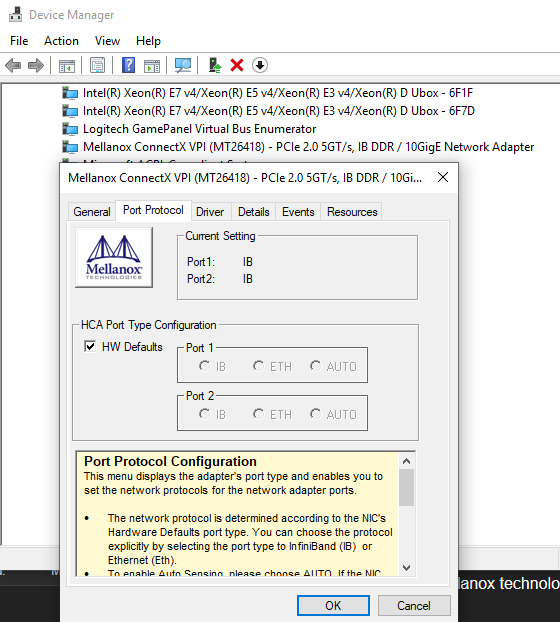

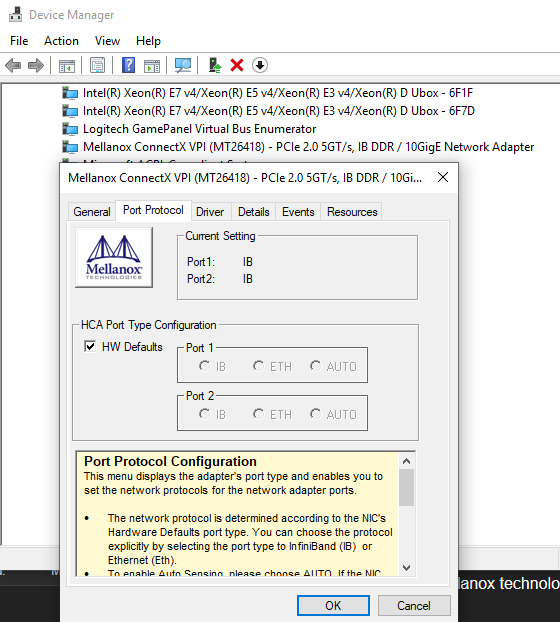

I uncheck HW Defaults, choose ETH for both, and BOOM... all good.. Even the top 2 adapters change from IPoIB to Ethernet adapters. I can post a screenshot later...

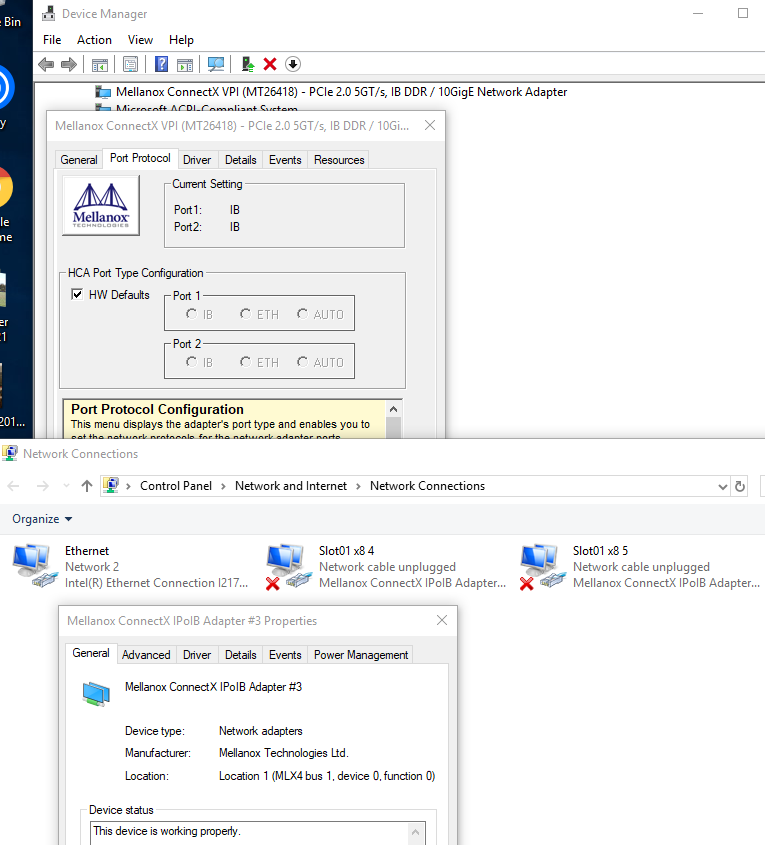

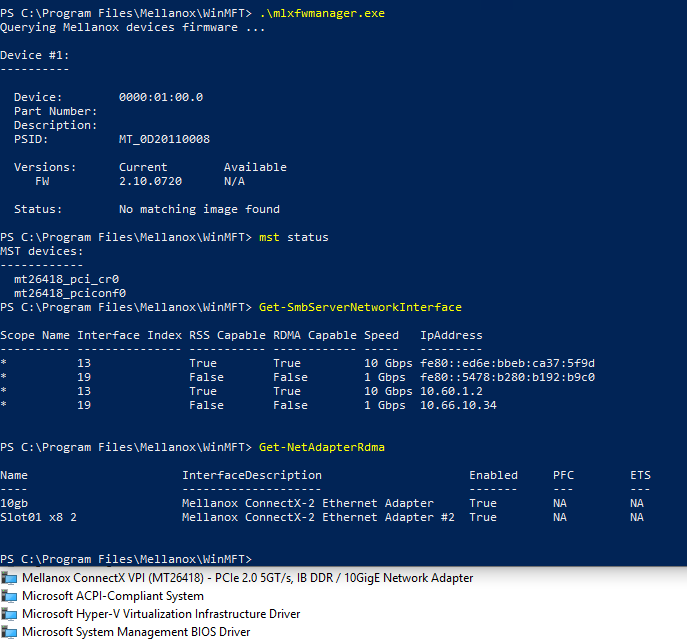

So I have a 2nd machine up and running. If I uncheck HW Defaults and choose ETH, the machine bluescreens and reboots... Doing a FW check, it was on 2.7, and I found the HP 2.8 FW, which is what the good one has. Flashed it.. yup, worked!!!! WOOOOT..

Still bluescreens.. CRAP...

But a side note on flashing... these are old cards...

With WinMFT_x64_4_11_0_103.exe installed, I got an unsupported card error... Google to the rescue once again: it said to install an older version...

So I installed... WinMFT_x64_3_5_0_16.exe

AND!!!!!!!!!!!

Hah.. so I'm still trying to get 2 machines going. I have 3 other cards, but in the same machine where these seem to be working, they won't load drivers, etc., so I may have a few defective cards..
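Since this means comparing firmware across several cards, here's a rough Python sketch of how you could check each card against the known-good 2.8. It assumes you've saved the text output of WinMFT's `flint -d <device> q` (query) for each card, and that it contains `FW Version:` and `PSID:` lines the way flint usually prints them. The device name, the sample text, and all the function names below are made-up placeholders, not anything from the tools themselves. It also assumes HP-branded images carry a PSID starting with `HP_` (stock Mellanox ones usually start with `MT_`).

```python
import re

# Hypothetical sample of saved `flint -d <device> q` output; real output has
# more lines and different values. Only "FW Version:" and "PSID:" matter here.
SAMPLE_QUERY = """\
Image type:      FS2
FW Version:      2.7.0
PSID:            HP_EXAMPLE01
"""

# Matches "Key:   value" lines (assumed layout of flint's query output).
LINE_RE = re.compile(r"^\s*([A-Za-z][A-Za-z ]*?):\s+(\S.*)$")


def parse_flint_query(text: str) -> dict:
    """Collect 'Key: value' pairs from saved flint query text."""
    info = {}
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:
            info[match.group(1).strip()] = match.group(2).strip()
    return info


def fw_tuple(version: str) -> tuple:
    """Turn '2.9.1000' into (2, 9, 1000) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def needs_update(query_text: str, good_fw: str) -> bool:
    """True if the card's FW is older than the known-good version."""
    info = parse_flint_query(query_text)
    if "FW Version" not in info:
        raise ValueError("no 'FW Version:' line found in query output")
    return fw_tuple(info["FW Version"]) < fw_tuple(good_fw)


def is_hp_firmware(query_text: str) -> bool:
    """Assumes HP-branded images carry a PSID starting with 'HP_'."""
    return parse_flint_query(query_text).get("PSID", "").startswith("HP_")


if __name__ == "__main__":
    print("PSID:", parse_flint_query(SAMPLE_QUERY)["PSID"])
    print("HP firmware:", is_hp_firmware(SAMPLE_QUERY))
    print("older than 2.8:", needs_update(SAMPLE_QUERY, "2.8"))
```

This only compares what flint reports; it says nothing about why a card bluescreens or won't load drivers.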

More to come!
