HiCZoK (Gawd). Joined: Sep 18, 2006. Messages: 860

I agree. Especially because plasma and CRT are gone too, and also did other things right.

Uh... not trying to inflame anyone, but did you just say abuse regarding people who do nothing more than display images on their display, which is precisely their main function? It doesn't matter if you display noise 24/7, or blocks of black/white that never, ever move. Displays are built for displaying. So, no sort of displaying of images can possibly constitute abuse. That is literally their only purpose. Excusing some forms of displayed images as abuse tells me you're simply excusing a very real - however infrequent - grave flaw in the technology.

This kind of argument perplexes me. If you use any sort of consumer product outside of what is considered normal operating conditions and expected use (and they all come with them) -- yes, that is abuse.

I do nothing more than drive my car, which is its main function -- to be driven. To use your words, that is literally its only purpose. Are you really telling me that if I act like a speed demon, have a lead foot, slam the brakes, and hit the accelerator that the car should last 150,000 miles? I'd say good luck with that. People would probably tell me I'm a shitty driver, too.

Nonsense. Almost every machine made by humans won't last as long pushing it to 100% of its capabilities versus a lower percentage. Running a bright red stationary CNN logo for 20 hours per day at 100% brightness for months on end is not a normal viewing experience. ALL display types can get burn-in if abused. I don't think I've ever seen an airport LCD that didn't have burn-in.

You'd make a very good point, if it weren't for the fact that these are very different scenarios. My car can go up to 140mph - or whatever, I've never really looked at the end of that thing. However, it was designed to be driven between 10 and 100mph; anything outside that range would be pushing it... (other good stuff)

SDR is a limited (narrow) band. Having a 1000-nit HDR display doesn't push that same rendered band 2x to 3x higher, like ramping up the brightness on an SDR screen would. SDR brightness is relative; HDR uses a different system for brightness, one based on absolute values. It opens up the spectrum so content's highlights, shadows, etc. can go into a much broader band (1000-nit peak to 0.05-nit black depth). When SDR goes "outside" of its narrow band, it crushes colors to white and muddies dark detail to black. HDR will show the actual colors in higher-brightness highlights (gleaming reflections, edges) without crushing to white. HDR shows the same content at the same brightness when that content falls within a calibrated SDR range; it does not scale up the brightness of the whole scene like turning up the brightness of an SDR screen would.

If you viewed HDR content on a 1000 nit display or a 500 nit display, any scene where the peaks are lower than 500 nits should look exactly the same on both displays, due to the way that brightness is now coded. It represents an exact value, not a relative value.

For scenes which have highlights exceeding 500 nits, you will have highlight clipping on the lower-brightness display (everything above 500 nits turns white), which would not be present on the higher-brightness display. (The brighter display will show full color gradations across the spectrum in bright highlights, instead of crushing to white after hitting the SDR ceiling.)
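
To make the relative-vs-absolute point concrete, here is a minimal Python sketch. It assumes the SMPTE ST 2084 (PQ) curve for HDR, a simplified 2.4 power-law gamma for SDR (real BT.1886 also accounts for black level), and hard clipping as the display's only strategy above its peak (real TVs often roll off instead). The function names are illustrative, not from any library.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(code):
    """Normalised PQ code value (0..1) -> absolute luminance in nits."""
    p = code ** (1 / M2)
    return 10000 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def pq_inverse_eotf(nits):
    """Absolute luminance in nits -> normalised PQ code value."""
    y = (nits / 10000) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def hdr_on_display(code, display_peak):
    # Absolute: the requested luminance is the same on any display;
    # this simple model hard-clips anything above the panel's peak.
    return min(pq_eotf(code), display_peak)


def sdr_on_display(code, display_peak, gamma=2.4):
    # Relative: a simplified power-law gamma scaled to the panel's own white,
    # so the same code value gets brighter when the panel gets brighter.
    return display_peak * code ** gamma


for target in (100, 800):
    code = pq_inverse_eotf(target)
    print(f"PQ code for {target} nits: "
          f"{hdr_on_display(code, 500):.0f} on a 500-nit panel, "
          f"{hdr_on_display(code, 1000):.0f} on a 1000-nit panel")
print(f"SDR code 0.5: {sdr_on_display(0.5, 500):.0f} vs {sdr_on_display(0.5, 1000):.0f} nits")
```

The PQ code for 100 nits comes out at 100 nits on both panels, while the code for 800 nits is clipped to 500 on the dimmer one. The same SDR code value, by contrast, doubles in luminance when the panel's peak doubles.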

HDR enables far more vivid, saturated, and realistic images than SDR ever could.

High-brightness SDR displays are not at all the same thing as HDR displays.

Referring to PQ as an 'absolute' standard means that for each input data level there is an absolute output luminance value, which has to be adhered to. There is no allowance for variation, such as changing the gamma curve (EOTF), or increasing the display's light output, as that is already maxed out.

(This statement ignores dynamic metadata, more on which later.)
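
For reference, this is the PQ EOTF as defined in SMPTE ST 2084. It maps a normalised code value E' in [0, 1] to an absolute luminance F_D in cd/m² (nits). Nothing in it depends on the display's own peak, which is what 'absolute' means here; a display that cannot reach F_D has to clip or tone-map.

```latex
F_D = 10000 \left( \frac{\max\left(E'^{1/m_2} - c_1,\ 0\right)}{c_2 - c_3\, E'^{1/m_2}} \right)^{1/m_1},
\qquad
m_1 = \tfrac{2610}{16384},\;
m_2 = \tfrac{2523}{4096} \times 128,\;
c_1 = \tfrac{3424}{4096},\;
c_2 = \tfrac{2413}{4096} \times 32,\;
c_3 = \tfrac{2392}{4096} \times 32
```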

One of the often overlooked potential issues with PQ based HDR for home viewing is that because the standard is 'absolute' there is no way to increase the display's light output to overcome surrounding room light levels - the peak brightness cannot be increased, and neither can the fixed gamma (EOTF) curve.

As mentioned above, with PQ based HDR the Average Picture Level (APL) will match that of regular SDR (standard dynamic range) imagery. The result is that in less than ideal viewing environments, where the surrounding room brightness level is relatively high, the bulk of the PQ HDR image will appear very dark, with shadow detail potentially becoming very difficult to see.

To be able to view PQ-based 'absolute' HDR imagery, environmental light levels will have to be very carefully controlled, far more so than for SDR viewing. This really does mean using a true home cinema environment.

Or, the PQ EOTF (gamma) has to be deliberately 'broken' to allow for brighter images.

Uh... not trying to inflame anyone, but did you just say abuse regarding people who do nothing more than display images on their display, which is precisely their main function? It doesn't matter if you display noise 24/7, or blocks of black/white that never, ever move. Displays are built for displaying. So, no sort of displaying of images can possibly constitute abuse. That is literally their only purpose. Excusing some forms of displayed images as abuse tells me you're simply excusing a very real - however infrequent - grave flaw in the technology.

Take your second point: 14% with image issues, whether burn-in or image retention. Even if we only count the 7% with burn-in, that's unacceptably high. If LG sells 1 million TVs, that's 70,000 people with burn-in. That's a lot of burn-in! For a product whose only function is to display images, having 7% of production fail at its main and only function is really, really bad!

Look, I get it. OLEDs look wonderful. Ignoring their other problems (comparatively low brightness at full white, gradients not up to par with LCD screens), they're a really attractive option. But we gain nothing from excusing their very real flaws. A few months ago I had to choose between a 55" OLED and a FALD LCD. I ended up getting a Vizio P55-F1 because it looks wonderful and only cost me $800. It's not as striking as an OLED, but it's pretty damn close for much less money. And it has none of the flaws that OLED has (like most LCDs, it does have other flaws, but that's not what we're talking about here).

Right now, OLED is still too high-risk, because buying a product that has one main function, where 7% of production is quite likely to fail, is a bad value proposition. There is definitely a luck component, then, because you don't see OLEDs advertised as "great for everything, except if you only watch cable news all day! Then don't buy this!" They're displays. They must be able to display everything, perfectly, to the best of their ability, until they die. Burn-in is a very real situation that proves they fail at their main function, without any abuse of any sort.

Don't take it from me. The market has pretty much agreed with this perspective. Or do you think MicroLED is being aggressively developed just because? MicroLED has the same benefits as OLED, with none of the drawbacks of organic components. If OLED were so great and risk-free, there would be no point in developing MicroLED. And yet, the advantages are obvious to the key market players. Let's not ignore the reasons why that is happening.

Again, not trying to antagonize anyone, just wanted to point out that those 2 arguments, abuse and % of product failures, are simply unacceptable. At least, they are to me... and I'd think they should be to anyone forking out more than a thousand dollars for a display.

Uh, more 27" nonsense. Can we please get more 32"+ monitors, preferably around 40" and in the very near future? 27" is starting to feel very small, especially with 4K. I'm not looking forward to the spate of 27" 8K monitors you know we're going to get.

Yes. For 4K, anything less than 32" is just too small and cramped. Probably need a minimum of 40" for 8K.
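
One way to put numbers on "too small and cramped" is pixel density. A short sketch computing pixels per inch from resolution and diagonal, assuming 16:9 panels; what counts as "cramped" also depends on OS scaling, which this ignores.

```python
import math


def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch measured along the panel diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


for label, (w, h) in {"4K": (3840, 2160), "8K": (7680, 4320)}.items():
    for diag in (27, 32, 40):
        print(f'{label} at {diag}": {ppi(w, h, diag):.0f} PPI')
```

At 100% scaling, 4K works out to about 163 PPI at 27" and about 110 PPI at 40", while 8K at 40" has the same density as 4K at 20".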

It's unclear that microLED will be able to provide a buyable product any time soon, or, even if it does, that it will be anything more than an incremental upgrade on current FALD backlighting.

While you're watching 24/7 CNN, I'll be enjoying HDR movies and games with true blacks.

Uhm... No. Read up on it. You clearly don't understand what microLED is if you think it's just "incremental".

Please don't act like OLEDs are the 2nd coming of Christ. You have nicely deep blacks, but your highlights are garbage. Enjoy your 500nit whites and full screen dimming during daylight viewing while I get 1000+ nits. Both technologies are great, you need to buy depending on when you view content. OLED ain't better, it simply has different advantages. MicroLED will have the best of both worlds: pure blacks and bright highlights.

I'm well aware of what it is. You're assuming that RGB per-pixel microLED is going to be commercialized any time soon, but all indications are that this is 5-10 years away, if not longer. The LEDs are still too big for use in anything under 100 inches, and they are having substantial difficulty making them any smaller. The only possible use of microLED in the next couple of years is improved backlighting for LCDs.

lol no

FYI - you were likely looking at plasma displays. Tons of airports were using them (for whatever reason, never did figure it out). The screens at the airport I've seen were clearly plasma displays with phosphor burn. Again... Not sure who the genius was who picked that display tech for that particular scenario (displaying bright static images) but there you go.

FYI - I know what the difference is between a plasma and an LCD. LCD does and will get burn in after a sufficient amount of time.

Right from the horse's mouth:

The HD Guru spoke to Bob Scaglione, Senior Vice President of Marketing for Sharp Electronics USA. He acknowledged that pixels can get “stuck” on its LCD HDTVs, leaving a retained image.

I'm VERY curious if the eSports version of the JOLED will have a low-persistence rolling scan mode.

Theoretically, rolling scan can be lagless -- by scanning in realtime off the cable -- unlike global strobe (which has to scanout to LCD in the dark, before flashing the backlight). Less lag than ULMB!
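
A simplified timing model of why rolling scan can beat a global strobe on lag. It assumes the frame arrives over the cable top to bottom at an even rate across one refresh period (no blanking interval) and uses a hypothetical `settle_ms` for pixel transitions; real panels and strobe modes such as ULMB have processing and timing details not modelled here.

```python
def row_arrival_ms(row, rows, refresh_hz):
    """Time (ms from frame start) by which `row` has fully arrived over the cable."""
    period_ms = 1000.0 / refresh_hz
    return (row + 1) * period_ms / rows


def rolling_scan_emit_ms(row, rows, refresh_hz, settle_ms=0.0):
    # Each row is lit as soon as its own data has arrived and settled.
    return row_arrival_ms(row, rows, refresh_hz) + settle_ms


def global_strobe_emit_ms(row, rows, refresh_hz, settle_ms=0.0):
    # One flash for the whole panel, after the last row has arrived and settled.
    return row_arrival_ms(rows - 1, rows, refresh_hz) + settle_ms


def lag_ms(emit_fn, row, rows, refresh_hz, settle_ms=0.0):
    """Delay between a row's data arriving and that row emitting light."""
    return emit_fn(row, rows, refresh_hz, settle_ms) - row_arrival_ms(row, rows, refresh_hz)


ROWS, HZ = 2160, 120
for name, fn in (("rolling scan", rolling_scan_emit_ms), ("global strobe", global_strobe_emit_ms)):
    top = lag_ms(fn, 0, ROWS, HZ)
    bottom = lag_ms(fn, ROWS - 1, ROWS, HZ)
    avg = sum(lag_ms(fn, r, ROWS, HZ) for r in range(ROWS)) / ROWS
    print(f"{name}: top {top:.2f} ms, bottom {bottom:.2f} ms, average {avg:.2f} ms")
```

At 120 Hz, the global strobe makes the top of the screen wait almost a full refresh (about 8.3 ms) and the average row about half that, while the rolling scan adds only the settle time.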

The infinite OLED contrast gives a far better "HDR" image feel than having a thousand nits blasted at your face with BLB and hazy blacks.

Besides, the current 2018 OLED models are like 700-800 nits, more than enough. 2019 models coming out will have something like 1500 nits I hear, if you really want to kill your eyes.

2000 nits is for a super bright sunlit room maybe, definitely not something absolutely needed. But I know people complain about their 800-nit OLEDs being too bright in dark-room usage, which is where OLED shines the most.

100% correct. But the post I was responding to wasn't talking about contrast. It was talking about black levels. If black levels are good but highlights are garbage, your contrast is not that great, which shows in OLEDs' widely acknowledged poor gradients. As for LCDs, you get better highlights, but the lack of true black doesn't allow the display to get as punchy. (Also, "hazy blacks"??? You clearly haven't looked at decent ~$1000 FALD LCDs in 2018. Seriously, go to your nearest Best Buy and check out the Vizio P55-F1 that I bought for even less money. And there are better models than that. I watch movies with the usual top/bottom black bars at night, in a pitch-black room, and those borders are indistinguishable from the rest of the room's darkness. Even my husband, who understands nothing about technology, has repeatedly pointed out how strikingly dark blacks look on this TV.)

That "infinite contrast" argument needs to die; it's such marketing garbage. Sure, divide anything by 0 and you get infinity. But you certainly don't get infinite contrast with OLED. There are limits, set by how bright they can get. Funny you say 800 nits is enough, when serious display critics are already saying true HDR benefits only start at around 2000 nits combined with OLED's absolute blacks, and everything else is compromised in one way or another. If 800 were enough, why would Dolby master material at ten thousand nits?
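
The divide-by-zero point can be made concrete. A small sketch, using the 1000-nit peak and 0.05-nit black quoted earlier in the thread as example inputs (not measurements): once black is zero, the ratio alone can no longer tell an 800-nit panel from a 500-nit one, so peak brightness has to be quoted alongside it.

```python
import math


def contrast_ratio(peak_nits, black_nits):
    """Peak-to-black ratio; a true zero black makes the ratio unbounded."""
    if black_nits == 0:
        return math.inf
    return peak_nits / black_nits


def dynamic_range_stops(peak_nits, black_nits):
    """The same ratio expressed in stops (doublings of light)."""
    return math.log2(contrast_ratio(peak_nits, black_nits))


print(f"1000 / 0.05 nits: {contrast_ratio(1000, 0.05):,.0f}:1, "
      f"{dynamic_range_stops(1000, 0.05):.1f} stops")
print("800 / 0 nits:", contrast_ratio(800, 0.0))
print("500 / 0 nits:", contrast_ratio(500, 0.0))
```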

Extra points: don't be ignorant and spread the FUD that more nits will burn your eyes. Unless the creator and viewer are absolute idiots, nobody is going to watch 2000 nits full-screen for hours on end, just like you don't look directly at the sun for minutes on end (much higher nits) to blind yourself. The capability is what matters: to sustain bright highlights when they're needed, which is for brief periods of time (hence why brightness retention is codified into the HDR specs). But for fun, let's actually make a real comparison of brightness. On a regular sunny day, you're looking at 10,000 lumens while you're outside. That's not scorching your eyes, is it? (Granted, we'd have to account for square feet, but we don't need to get that specific for this simple comparison.) Now convert those 10K lumens into nits, and for the equivalent, non-eye-scorching luminance, that'd be 32,910 nits. As in, thirty-two thousand nine hundred and ten. That's equivalent to daylight on a sunny day. So, stop spreading FUD about 2K nits melting your face off. You're not displaying the whole screen at 2K nits. (here's a bit more, easy to follow info on this)
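
The lumens-to-nits step above skips a detail: lumens measure total light output, and turning daylight into a luminance needs an illuminance (lumens per area) plus an assumption about the surface being looked at. A rough sketch, assuming the 10,000 figure means lumens per square foot (foot-candles) falling on an ideal matte (Lambertian) surface, where luminance = reflectance × illuminance / π:

```python
import math

LUX_PER_FOOTCANDLE = 10.763910416709722  # 1 lm/ft² expressed in lm/m² (lux)


def lambertian_luminance_nits(illuminance_lux, reflectance=1.0):
    """Luminance (cd/m², i.e. nits) of an ideal matte surface under the given illuminance."""
    return reflectance * illuminance_lux / math.pi


lux = 10_000 * LUX_PER_FOOTCANDLE
print(round(lambertian_luminance_nits(lux)))       # perfectly white surface
print(round(lambertian_luminance_nits(lux, 0.5)))  # surface reflecting half the light
```

For a perfectly white surface this comes out at roughly 34,000 nits, the same order of magnitude as the figure in the post; darker surfaces scale down in proportion to their reflectance.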

And the real solution to get those 2000nits and absolute blacks? Hint: starts with Micro and ends with LED.

Thanks for clarifying. EDIT: So I'm guessing that the polarizer "switches" or whatever they're called just get stuck in a certain position?

DLPs can have that issue too, where a micromirror gets stuck and a "burn-in" appearance can happen. I didn't realize LCDs could have that too.

You sound like you watch daytime TV outside in Arizona.

Black levels are laughable on LCD even on a 300+ zone FALD. HDR highlights on an LCD LMFAO. LCD is laughably pathetic in every way compared to OLED in a dark room.

Your eye has limited dynamic range itself. If you like to watch dark movies in dark rooms, great, buy an OLED. Otherwise, with the average brightness of content and the average brightness of viewing rooms, I find the blacks on top notch LCDs to be good these days.

The black plastic bezel on my LCD here is actually not as black as the black background of this webpage in a lit room.

Yeah if you view in brightly lit rooms LCDs aren't as terrible by comparison, but you're losing a ton of detail from the ambient light.

In WRGB OLEDs, the introduction of the 'white' subpixel distorts the standard RGB color channel relationship, excessively so at HDR brightness levels. (If you sum the Y of 100% R, G and B primary patches you get 400 nits, while a 100% white patch gives 800 nits, so your color gamut is limited to 400 nits.) This means that WRGB OLEDs can never be calibrated accurately for HDR, but they can be calibrated with a 3D LUT in SDR mode. The recommendation is up to 105-110 nits; there will be no ABL limiting, and displays are more stable over time at these luminance levels.
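
To see why the 400 vs 800 nit split limits color volume, here is an idealised model. It assumes the figures quoted above, Rec. 709 luminance weights, and a W subpixel that can only add neutral light (the same amount to all three channels); real WRGB processing is more involved. It checks whether a target color can be produced at all.

```python
# Idealised WRGB model (hypothetical), using the figures quoted above:
# R+G+B together reach 400 nits at full drive, and the W subpixel adds up to
# another 400 nits of neutral (equal R=G=B) light, for 800 nits total white.
RGB_WHITE_NITS = 400.0
W_MAX = 1.0  # W output in the same units as RGB: 1.0 = 400 nits of neutral light
REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)  # assumed luminance weights


def luminance_nits(r, g, b):
    """Luminance of a linear color in units where (1, 1, 1) = 400 nits."""
    wr, wg, wb = REC709_WEIGHTS
    return RGB_WHITE_NITS * (wr * r + wg * g + wb * b)


def reproducible(r, g, b):
    """Is there a W level w in [0, W_MAX] leaving every RGB drive (channel - w) in [0, 1]?"""
    lowest_w = max(max(r, g, b) - 1.0, 0.0)  # W needed so no channel exceeds full drive
    highest_w = min(min(r, g, b), W_MAX)     # W allowed before a channel would go negative
    return lowest_w <= highest_w


for color in [(2, 2, 2), (1, 0, 0), (1.5, 0, 0), (1.5, 1.0, 1.0)]:
    print(color, round(luminance_nits(*color)), "nits", reproducible(*color))
```

White at 800 nits and a pale red at about 440 nits pass, but a fully saturated red pushed past what the red subpixel can do on its own fails: above the RGB-only ceiling, extra brightness can only come from W, which desaturates the color.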

Each display does different tone mapping according to the movie's metadata. Some roll off sooner when they see 4000 nits (like the 2017 LG OLEDs), others don't do anything (like the 2016 LG OLEDs, whether you send 1000 or 4000), and others, like the Panasonic EZ1000, also take MaxCLL into account, but not all values: it ignores any MaxCLL below 401 nits and above about 5000 nits. If you pause a frame and send a different MaxCLL with an HD Fury, the picture will change, and you can also measure what it's doing by taking a grayscale sweep.

For these reasons, with HDR10 and static metadata there is no golden standard for what tone/gamut mapping an HDR10 display will perform with each movie's incoming metadata; which strategy it follows (clip, soft roll-off, etc.) is up to the display model, brand and firmware.

Dolby Vision bypasses each display model's/brand's tone mapping and uses Dolby's own defined tone/gamut mapping algorithm, per frame, so it's more accurate.
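
A minimal sketch of the two strategies named above, clipping versus a soft roll-off, plus the static/dynamic metadata difference. The knee at 75% of panel peak and the exponential shoulder are hypothetical choices for illustration; they are not LG's, Panasonic's, or Dolby's actual algorithms. Passing the whole film's MaxCLL as `content_peak` mimics static HDR10 metadata; passing the current frame's peak mimics per-frame dynamic metadata.

```python
import math


def hard_clip(nits, display_peak):
    """'Clip' strategy: anything above the panel's peak is simply cut off."""
    return min(nits, display_peak)


def soft_rolloff(nits, display_peak, content_peak, knee_fraction=0.75):
    """Hypothetical 'soft roll-off': unchanged below a knee, compressed above it.

    If the metadata says the content never exceeds the panel's peak, nothing
    needs compressing and the signal passes through untouched.
    """
    if content_peak <= display_peak:
        return min(nits, display_peak)
    knee = knee_fraction * display_peak
    if nits <= knee:
        return nits
    headroom = display_peak - knee
    # Exponential shoulder: slope 1 at the knee, approaching display_peak
    # without reaching it, so highlights keep some gradation instead of flattening.
    return knee + headroom * (1.0 - math.exp(-(nits - knee) / headroom))


panel_peak = 500
film_maxcll = 4000  # example static HDR10 value for the whole film
frame_peak = 400    # example per-frame peak for one darker scene
highlight = 400

print("clip:", hard_clip(highlight, panel_peak))
print("static roll-off:", round(soft_rolloff(highlight, panel_peak, film_maxcll), 1))
print("per-frame roll-off:", soft_rolloff(highlight, panel_peak, frame_peak))
```

With these example numbers, clipping leaves the 400-nit highlight alone (it is under the panel's peak), the static version compresses it to about 398 nits because the film as a whole reaches 4000, and the per-frame version passes it through unchanged.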

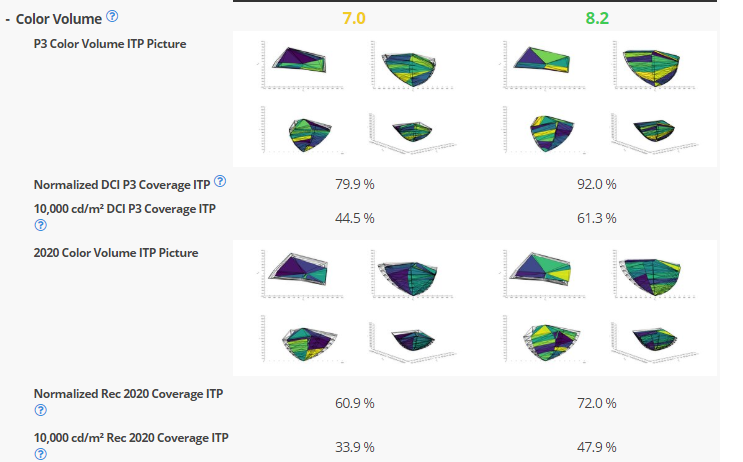

The LG C8 OLED TV is better than the Samsung Q9FN for most people, unless you watch a lot of static content and are concerned about burn-in. The LG C8 has an infinite contrast ratio and no need for a local dimming feature, as well as an ultra-wide viewing angle, but it can experience permanent burn-in. The C8 has a nearly instantaneous response time, although this can bother some people as 24p content can appear to stutter. The Samsung Q9FN has much better color volume and is much brighter.

(all the good things)

Obviously, the LG can’t hit the same 2000-nit peaks as the Samsung Q9FN. Nor can it offer the same lush levels of vividness in bright scenes. Nevertheless, it’s capable of impactful highlights and its colours don’t suffer any issues with saturation. Mostly bright scenes, meanwhile, don’t endure anywhere near the amount of clipping seen on older OLED models.

-- and watch a lot of static content and don't want to blow thousands of dollars on something that risks permanent burn-in. --That being said, I’d still recommend checking out the Samsung Q9FN if most of your viewing is in brightly lit conditions, or if you want that real HDR ‘punch’.

MicroLED seems better.

It won't be definitively better until each subpixel has its own micro LED, like OLED.

It doesn't just seem better. It IS better. But there's no point in talking about MicroLED now, because we won't get monitor-sized mLED displays for many, many years, if ever.

[...] contrary to what I know many people reading this might expect, even the brightest parts of the image on this claimed 10,000 nits screen didn’t make me feel uncomfortable. They didn’t hurt my eyes. They didn’t make me worry for the safety of children, or send me rushing off to smother myself in suntan lotion. Even though the area of Sony’s stand where the screen was appearing was blacked out [...]

These shots showed, too, that it’s only when you’ve got this sort of real-life brightness at your disposal that colors truly start to look representative of reality rather than a construct limited by the screen technology of the day.

It won't be definitively better until each subpixel has its own micro LED, like OLED. Not just each pixel, like what seems to be their first goal.

One LED per pixel wouldn't make sense - how would you make the individual colors? It'd only work as the backlight in an LCD. Maybe you're thinking of Mini LED FALD LCDs? That's going to initially be only a few thousand zones, at most - a long, long way from the 8 million needed for per-pixel backlighting on a 4K display. I think if it ever becomes economical to do 8 million LEDs, it won't be much of a stretch to do 24 million for a properly emissive Micro LED display.
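
The 8 million and 24 million figures in the post above check out for a 3840x2160 panel, assuming three RGB subpixels per pixel:

```python
def pixel_counts(width, height, subpixels_per_pixel=3):
    """Return (pixels, subpixels) for a panel with one emitter per subpixel."""
    pixels = width * height
    return pixels, pixels * subpixels_per_pixel


pixels, subpixels = pixel_counts(3840, 2160)
print(f"4K: {pixels:,} pixels, {subpixels:,} RGB subpixels")
```

That is one LED per pixel for per-pixel backlighting, versus three per pixel for a self-emissive RGB Micro LED panel.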