RTX 2070 vs RTX 2080 vs GTX 1080 Ti vs GTX 1070 @ [H]

SSimmons05

Limp Gawd

- Joined

- Mar 11, 2007

- Messages

- 202

I just got an MSI RTX 2080 Sea Hawk X, and it boosted to 2130 MHz before hitting the power limit according to GPU-Z. Performance at that level was insane at 1440p/144 Hz. It never got over 56°C with the AIO water cooler, and it's dead silent. Highly recommend it over most other 2080s out there.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

Honestly, the 2070 looks kind of nice seeing the review.

It's at least the performance jump from the 1070 people wanted, and the price isn't totally crazy given the current market (meaning versus the cost of a 1080 Ti).

Granted, Nvidia could have priced it better, but that is what it is.

So you didn't read the review?

And you don't understand that 'playable' is subjective?

What do you know about frame delivery?

The challenge with a 'minimum FPS' number is that it doesn't necessarily show that frames are being uniformly delivered at <33ms, which would be necessary to make the 'solid 30+ FPS' claim. Further, depending on the game and the user, 30FPS may very well simply be too slow.

On balance, I don't disagree with your implied point; I've gotten along at 30FPS quite well myself at times. But that's me, not Brent_Justice.
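To put the frame delivery point in concrete terms, here is a minimal Python sketch. The frame times are made up for illustration and are not taken from the review, and `frame_stats` is a hypothetical helper. It shows two one-second traces that a per-second FPS counter would both report as 30 FPS, even though only one of them delivers every frame within the roughly 33 ms budget.

```python
# Hypothetical frame times in milliseconds; not data from the review.
def frame_stats(frame_times_ms, budget_ms=1000 / 30):
    """Summarize a frame-time trace against a per-frame budget."""
    total_s = sum(frame_times_ms) / 1000
    late = sum(1 for t in frame_times_ms if t > budget_ms)
    return {
        "avg_fps": len(frame_times_ms) / total_s,
        "worst_frame_ms": max(frame_times_ms),
        "late_frames": late,
        "late_share": late / len(frame_times_ms),
    }

# Two one-second traces, 30 frames each.
steady = [1000 / 30] * 30            # every frame lands on the budget
spiky = [20.0] * 25 + [100.0] * 5    # 25 * 20 ms + 5 * 100 ms = 1000 ms

print(frame_stats(steady))
print(frame_stats(spiky))
```

Both traces average 30 FPS, but the second one contains five 100 ms frames, which is the kind of hitching a single FPS number does not reveal.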

I fully understand these are subjective things, but when the graphs clearly show that most of the time the games run at above 30 fps I would still call that well within "playable" as 30 fps doesn't exactly stutter or drop inputs etc. I would rather see reviews objectively stating that the game runs at a mere 30 fps rather than call it "unplayable". The review does not state why they considered it unplayable at all.

Based on the results I would say the 980 Ti and 1070 are still good buys on the used market if you want to play at 1080p or 1440p compared to spending a lot more on a 2070.

- Joined

- May 18, 1997

- Messages

- 55,601

I fully understand these are subjective things, but when the graphs clearly show that most of the time the games run at above 30 fps I would still call that well within "playable" as 30 fps doesn't exactly stutter or drop inputs etc. I would rather see reviews objectively stating that the game runs at a mere 30 fps rather than call it "unplayable". The review does not state why they considered it unplayable at all.

Damn shame the guys that produce the content are so unreachable.

/dev/null

[H]F Junkie

- Joined

- Mar 31, 2001

- Messages

- 15,182

I know my 1070s are aging for 1440p, but I'm doing ok without a 2xxx series. Even though SLI isn't "supported" the best, I've found it worked well on quite a few (older) games I want to play. Currently playing through Metro 2033 Redux, then LL Redux, and then probably Shadow of War or perhaps Tomb Raider (2013). No regrets buying them "returned" (With full factory warranty) for $250-$275 ea in Q1 2017.

When this is no longer enough, I've just picked up a GTX 1080 for $260 or so (currently in my Linux box) for 1080p play. I think I'm set for a while...

With my game backlog HUGE, I have no issues playing games I've missed out on with my (quickly aging) gpus. When I catch up to 2016/2017/2018 games in 2020 or 2021 or whatever, I'll buy my RTX 2070/2080 for $250...

dgz

Supreme [H]ardness

- Joined

- Feb 15, 2010

- Messages

- 5,838

Thanks for the article. I think we got the jump we expected, not the one we wanted.

My question is why a 1070 Ti was not used instead of a 1070. If you are using a 1080 Ti, why not use its little brother?

I looked and compared same games used in the ASUS ROG STRIX GTX 1070 Ti GAMING Advanced Edition article https://www.hardocp.com/article/2017/12/05/asus_rog_strix_gtx_1070_ti_gaming_advanced_edition/

and it seemed like the 1070ti was on the same level, not better but in the same range as 2070. I mean no one is buying a 1070. Of the 10 series it seems 1070ti, 1080 and 1080ti are the only pieces worth mentioning.

I've enjoyed visiting this website every day since 2001. I feel like a kid waiting for ice cream while it's loading.

- Joined

- May 18, 1997

- Messages

- 55,601

My question is why a 1070 Ti was not used instead of a 1070. If you are using a 1080 Ti, why not use its little brother?

I looked and compared same games used in the ASUS ROG STRIX GTX 1070 Ti GAMING Advanced Edition article https://www.hardocp.com/article/2017/12/05/asus_rog_strix_gtx_1070_ti_gaming_advanced_edition/

and it seemed like the 1070ti was on the same level, not better but in the same range as 2070. I mean no one is buying a 1070. Of the 10 series it seems 1070ti, 1080 and 1080ti are the only pieces worth mentioning.

I've enjoyed visiting this website every day since 2001. I feel like a kid waiting for ice cream while it's loading.

Thanks for the suggestion.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

the games run at above 30 fps I would still call that well within "playable"

This is [H]. 30fps ain't [H]ard. At that point you are better off getting a PS4 Pro or XB1X for your money. Not a joke, I'm being serious.

- Joined

- May 18, 1997

- Messages

- 55,601

This is [H]. 30fps ain't [H]ard. At that point you are better off getting a PS4 Pro or XB1X for your money. Not a joke, I'm being serious.

In all seriousness, there are some games that are playable at 30, but most are certainly not. We aim for the 60fps mark generally with great IQ settings. If you want to turn your IQ way down and get 100+, it does not require high end hardware, just gotta give up the eye candy.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

With my game backlog HUGE, I have no issues playing games I've missed out on with my (quickly aging) gpus. When I catch up to 2016/2017/2018 games in 2020 or 2021 or whatever, I'll buy my RTX 2070/2080 for $250...

Yeah, I actually love old games, even revisiting ones I've played before. It's nice running them at max settings and getting enough performance to do 4K or Surround at high refresh.

I recently went back to play the F.E.A.R. series and it holds up well. In some ways the graphics were a bit dated, but the gameplay was as good or better than stuff today.

This is [H]. 30fps ain't [H]ard. At that point you are better off getting a PS4 Pro or XB1X for your money. Not a joke, I'm being serious.

I have a PS4 Pro. That doesn't mean I don't also play on PC at higher resolutions and framerates.

Nebell

2[H]4U

- Joined

- Jul 20, 2015

- Messages

- 2,382

Why do you suggest getting 2070 over 1080Ti at the same price point when 1080Ti is 10-20% faster which is huge?

I picked up a 1080Ti for $679 shipped about a month ago. Don't have any regrets for that purchase now.

Also looks like I will be holding onto my Titan X Pascal (2016 version) for another year or so.

2000 series is the first time I'll be skipping a generation in a while. Pretty underwhelming, NVIDIA.

It is almost like the 20 series cards were moved up the product stack vs the 10 series in price and performance. Ex the 2080 ti is like the Titan at $1200 and the performance, the 2080 is like the 1080 ti, etc. We have not heard anything about a Titan card yet, and if you continue down the product stack, it looks like the 2060 would slot in at $450.

sirmonkey1985

[H]ard|DCer of the Month - July 2010

- Joined

- Sep 13, 2008

- Messages

- 22,414

It is almost like the 20 series cards were moved up the product stack vs the 10 series in price and performance. Ex the 2080 ti is like the Titan at $1200 and the performance, the 2080 is like the 1080 ti, etc. We have not heard anything about a Titan card yet, and if you continue down the product stack, it looks like the 2060 would slot in at $450.

at this point i think they'd be better off just not releasing a 2060 at all.

Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

It is almost like the 20 series cards were moved up the product stack vs the 10 series in price and performance. Ex the 2080 ti is like the Titan at $1200 and the performance, the 2080 is like the 1080 ti, etc. We have not heard anything about a Titan card yet, and if you continue down the product stack, it looks like the 2060 would slot in at $450.

2070 is $499 and 2060 will not have RTX features so the die will be maybe half the size and should be significantly cheaper.

Maybe I should say could be... but it should release around 1060 msrp IMO.

GoodBoy

2[H]4U

- Joined

- Nov 29, 2004

- Messages

- 2,749

... We have not heard anything about a Titan card yet....

The Titan V has Tensor cores, it is on Volta but it is basically the 'Titan' match for the Turing cards.

https://www.nvidia.com/en-us/titan/titan-v/

Titan V

21.1 billion transistors

5120 CUDA cores, 1200 MHz base clock, 1455 MHz boost

640 Tensor cores (Raytracing, DLSS)

12 GB HBM2

652.8 GB/s memory bandwidth

$2999

RTX 2080 Ti

18.6 billion transistors

4352 CUDA cores, 1350 MHz base clock, 1635 MHz boost

544 Tensor cores

11 GB GDDR6

616 GB/s memory bandwidth

$1199
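For a quick side-by-side of the two spec lists above, here is a small Python sketch that expresses the 2080 Ti as a share of the Titan V. The numbers are copied from this post; the `specs` dictionary and `ratio` helper are just illustrative names. Raw unit counts across two different architectures are not a performance prediction, so treat this as paper arithmetic only.

```python
# Figures as listed in the post above; field names are illustrative.
specs = {
    "Titan V": {"cuda": 5120, "tensor": 640, "bw_gbs": 652.8, "usd": 2999},
    "RTX 2080 Ti": {"cuda": 4352, "tensor": 544, "bw_gbs": 616.0, "usd": 1199},
}

def ratio(field):
    """RTX 2080 Ti value divided by Titan V value for one spec field."""
    return specs["RTX 2080 Ti"][field] / specs["Titan V"][field]

for field in ("cuda", "tensor", "bw_gbs", "usd"):
    print(f"{field}: 2080 Ti is {ratio(field):.0%} of Titan V")
```

On paper that works out to 85% of the CUDA and Tensor core counts and about 94% of the memory bandwidth, at roughly 40% of the price.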

TheStiggyOne

n00b

- Joined

- Apr 29, 2018

- Messages

- 26

Here are the local brick-and-mortar prices in Vancouver, Canada (all prices in CAD):

GTX 1080 - starting at $630

GTX 1080 Ti - was starting at $830, but now has completely disappeared

RTX 2070 - starting at $770

RTX 2080 - starting at $1080

RTX 2080 Ti - backordered / preorder starting at $1600

Interestingly, the 1080 Ti finally went on sale for a couple of weeks prior to the RTX launch.... And then, once the 2080 was actually available in stock and the 2070 coming, they all vanished.

I was coming from GTX 980 SLI on 1440p@144Hz, so for a single card replacement I had to get the 1080 Ti at the minimum. Alas, by the time I was ready to make the purchase (shodding my car in a set of Conti DWS 06 before the winter truly arrives was more urgent), the 1080 Ti was nowhere to be found. So, I ended up with an Asus RTX 2080 Dual-fan OC for $1100.

My alternative was to go with the GTX 1080, but then I would have had to get 2, thus once again SLI-ing.... Along with the pitfalls of running multi-GPU again, that would have cost me... more?
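The "more?" above is easy to check with the listed starting prices. A tiny Python sketch, using only the CAD figures from this post (the variable names are mine):

```python
# Starting prices in CAD as listed above.
prices_cad = {"GTX 1080": 630, "RTX 2070": 770, "RTX 2080": 1080}

paid_2080 = 1100                         # the Asus RTX 2080 Dual-fan OC
sli_1080 = 2 * prices_cad["GTX 1080"]    # two GTX 1080s at the starting price

print("2x GTX 1080:", sli_1080)
print("Extra over the 2080:", sli_1080 - paid_2080)
```

At starting prices, two GTX 1080s come to $1260, so $160 more than the single 2080, before counting an SLI bridge or the multi-GPU headaches.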

Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

RT cores are different than tensor cores. The 2080 Ti has 68 RT cores (72 on the full TU102 die).

The Titan V also has a larger die.

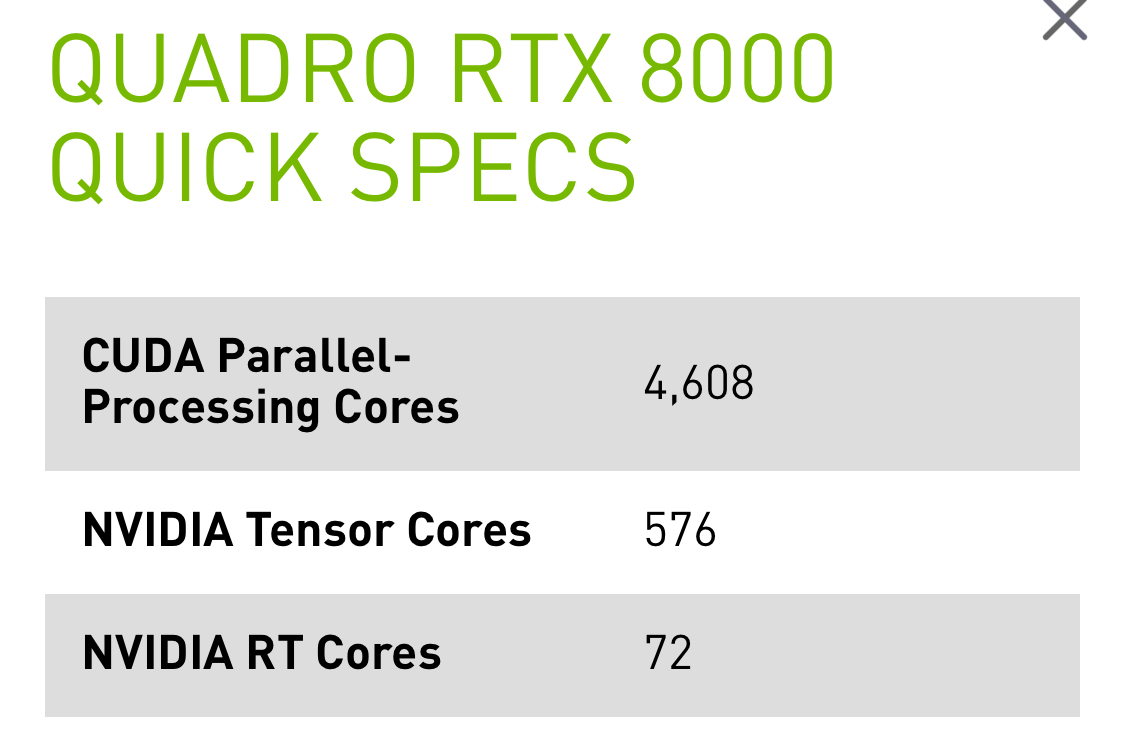

The RTX 8000 is actually what I would expect from a Titan (minus some ram).

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,548

RT cores are different than tensor cores. The 2080 Ti has 68 RT cores (72 on the full TU102 die).

The Titan V also has a larger die.

The RTX 8000 is actually what I would expect from a Titan (minus some ram).

Tensor is tensor cores, Nvidia driver enabled RTX features for Volta https://www.game-debate.com/news/24...-real-time-ray-tracing-support-for-volta-gpus

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

Tensor is tensor cores, Nvidia driver enabled RTX features for Volta https://www.game-debate.com/news/24...-real-time-ray-tracing-support-for-volta-gpus

RTX is more than ray-tracing. DLSS uses the Tensor cores and is part of RTX.

Enabling RTX on a GPU that lacks RT cores means that said GPU will not be doing RT in hardware.

Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

Tensor is tensor cores, Nvidia driver enabled RTX features for Volta https://www.game-debate.com/news/24...-real-time-ray-tracing-support-for-volta-gpus

DICE was using quad Titan Vs and running RT in the teens before they got the 2080tis. A single 2080ti was destroying 4x Titan Vs.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,548

DICE was using quad Titan Vs and running RT in the teens before they got the 2080tis. A single 2080ti was destroying 4x Titan Vs.

Will be interesting to see when they actually release a game with the features.

Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

Will be interesting to see when they actually release a game with the features.

Yeah who the hell knows anymore lol.

I find it interesting they’ve been super quiet about RTX anything....

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,548

RTX is more than ray-tracing. DLSS uses the Tensor cores and is part of RTX.

Enabling RTX on a GPU that lacks RT cores means that said GPU will not be doing RT in hardware.

Yet unless It has been mentioned somewhere for sure, you cant do Ray Tracing and DLSS which makes me think they are using the same part of the chip.

Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

Yet unless It has been mentioned somewhere for sure, you cant do Ray Tracing and DLSS which makes me think they are using the same part of the chip.

Ray tracing uses tensor cores to do denoising on the output of the RT cores. Some games support both but I am hesitant to say they can do both at the same time.

I am 99% sure the Star Wars demo was demoing both RT and DLSS at the same time though.

But like you said... lets see a real game...

Yet unless It has been mentioned somewhere for sure, you cant do Ray Tracing and DLSS which makes me think they are using the same part of the chip.

Tom Petersen stated during an interview with PCPer that RT+DLSS is possible on the RTX.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,548

Tom Petersen stated during an interview with PCPer that RT+DLSS is possible on the RTX.

At the same time? Because I haven't found anything concrete on that, which makes me think the Tensor cores do both.

Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

At the same time? Because I haven't found anything concrete on that, which makes me think the Tensor cores do both.

Tensor cores do the ass end (denoising) of ray tracing and all of DLSS.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,548

Tensor cores do the ass end (denoising) of ray tracing and all of DLSS.

The bigger question becomes: if you try to do both, do you lose 50% performance? I am looking forward to seeing people get to play with this, because I don't think Nvidia is being totally upfront about how it works.

Nasty_Savage

Fully [H]

- Joined

- Mar 19, 2001

- Messages

- 32,633

Thanks for the review. Have a Strix 1080, and was a little leery about this release for once. I was hearing Volta for so long and then all of a sudden RTX. This looks like a padded stopgap for whatever is next. By the time Ray Tracing is available regularly in games, the next gen will be out. I don't follow as close as some of you, but I thought Volta was the next jump up, this Turing seemed to come from out of nowhere. Gonna pass this gen.

Vader1975

Gawd

- Joined

- May 11, 2016

- Messages

- 821

We now have a good view of apples to apples value in current generation gaming. What we don't know is what Tensor is going to do with future titles. I smell the 2070 losing performance once you start trying to push these new features. ETC

Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

We now have a good view of apples to apples value in current generation gaming. What we don't know is what Tensor is going to do with future titles. I smell the 2070 losing performance once you start trying to push these new features. ETC

DLSS would make it faster in traditionally made (rasterized) games, albeit DLSS2X is aimed at IQ. Ray tracing will most likely make it much slower but speed is not the objective at this point.

GoodBoy

2[H]4U

- Joined

- Nov 29, 2004

- Messages

- 2,749

RT cores are different than tensor cores. The 2080 Ti has 68 RT cores (72 on the full TU102 die).

The Titan V also has a larger die.

The RTX 8000 is actually what I would expect from a Titan (minus some ram).

Ahh, I think you are right. But I never saw the RT cores in the specs on the 2080Ti..

Thanks for the review. Have a Strix 1080, and was a little leery about this release for once. I was hearing Volta for so long and then all of a sudden RTX. This looks like a padded stopgap for whatever is next. By the time Ray Tracing is available regularly in games, the next gen will be out. I don't follow as close as some of you, but I thought Volta was the next jump up, this Turing seemed to come from out of nowhere. Gonna pass this gen.

Volta was a step from Pascal to Turing.

Pascal

Volta = Pascal + Tensor cores

Turing = Volta + RT cores

Just a generalization, there were probably other changes as well, updates to CUDA cores, etc. Turing uses the new NVLink as well for SLI, a much faster interconnect compared to the old SLI bridges.
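The same generalization can be written as nested feature sets. This is only a Python sketch of the simplification above, not an official spec breakdown; the set contents and helper names are illustrative.

```python
# Feature sets follow the post's simplification, not official spec sheets.
PASCAL = frozenset({"CUDA cores"})
VOLTA = PASCAL | {"Tensor cores"}
TURING = VOLTA | {"RT cores"}

ARCHITECTURES = {"Pascal": PASCAL, "Volta": VOLTA, "Turing": TURING}

def has_tensor_cores(arch):
    """True if this simplified model gives the architecture Tensor cores."""
    return "Tensor cores" in ARCHITECTURES[arch]

def has_hardware_rt(arch):
    """True if this simplified model gives the architecture RT cores."""
    return "RT cores" in ARCHITECTURES[arch]

for name in ARCHITECTURES:
    print(name, "| tensor:", has_tensor_cores(name), "| hardware RT:", has_hardware_rt(name))
```

In this model Volta has the Tensor cores but no RT cores, which lines up with the earlier point that a GPU lacking RT cores would not be doing RT in hardware.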

Without games where we can turn on new features, it is still too early to tell. I wouldn't write Turing off just yet.

Thanks for the review, guys. I have decided to hang on to my 1080ti's just a wee bit longer...

By way of: GeForce > Community > Forums > Support > GeForce Hardware > GeForce RTX 20-Series

On Reddit: If you ever remember my first PC, the GPU died today...

Excellent and in-depth review (as expected from HardOCP).

I didn't think to look before this review but I checked the top GPU stats on PrimeGrid and with the various algorithms it's also borne out that there just isn't that much difference between the 1080ti and the 2080.

http://www.primegrid.com/gpu_list.php#GFN22

GFN are the most computationally intensive - even on a fast card GFN22 can take the better part of a day to complete one work unit.
