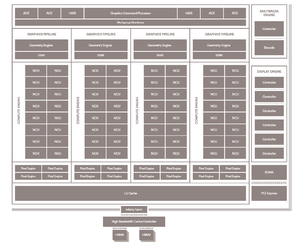

AMD shocked the CPU world when it released the epic Threadripper 2990WX. This 32-core, 64-thread beast crushed Intel's desktop CPUs in multithreaded applications, most notably Cinebench.

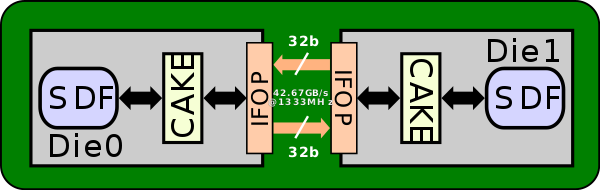

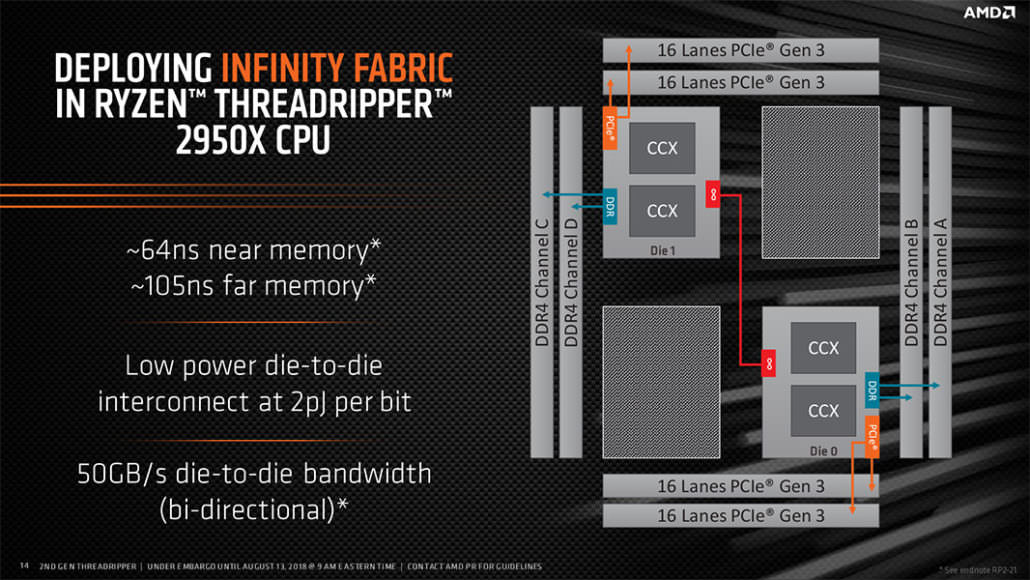

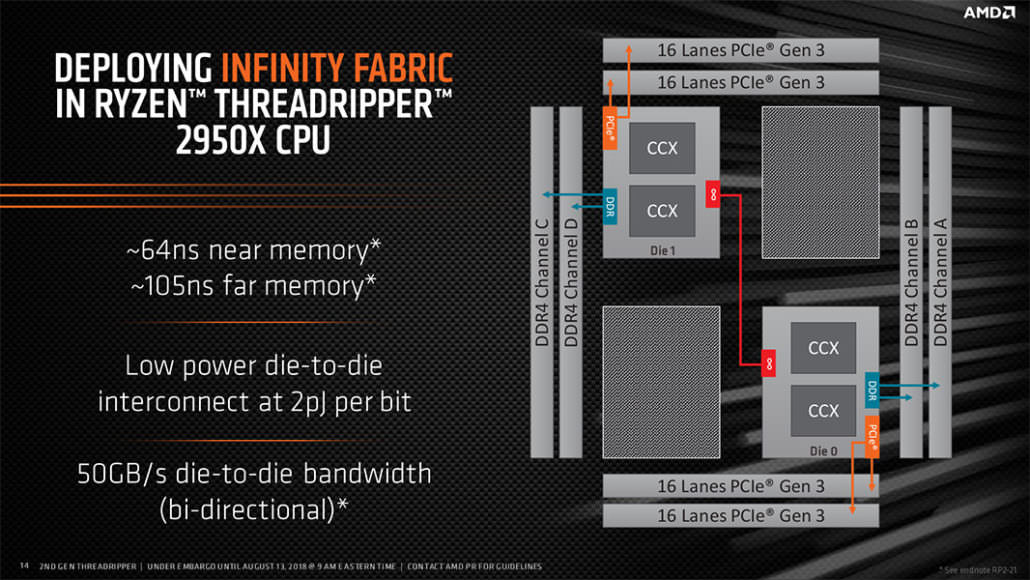

What makes the 2990WX possible is the technology known as Infinity Fabric.

This unique technology links the 2990WX's four dies so that all 32 cores can reach system memory, even though only two of those dies have memory channels attached directly.

If AMD wants to make its mark on the GPU world, it should use Infinity Fabric to build true multi-GPU solutions and just let Crossfire die.

Crossfire hasn't succeeded because developers are too lazy to dedicate coding resources to supporting it when they know that 99% of the market uses single-GPU setups.

To get around this, AMD should leverage Infinity Fabric to create seamless multi-GPU solutions.

Build multi-GPU video cards that use Infinity Fabric to link two GPUs into a single virtual GPU that shares memory access across the fabric. Then write in-house drivers that address and use this 'virtual GPU' as a single unit.

Nvidia's expansion of its price range creates an opportunity gap that AMD can exploit if it can leverage new technology to build a compelling counter-solution.

For example, the RTX 2080 Ti sells for $1,200.

If AMD can build a "VX 1090" from two smaller GPUs (450-500 mm²) that outperforms Nvidia's solution (roughly 750 mm²) at $999, then it is winning the game.

At $699, AMD could again undercut the RTX 2080 with a pair of smaller GPUs; let's dub it the "VX 1080".

As for single-GPU cards, an RX 1070 and RX 1060 would answer the call, bringing Vega-level performance to mainstream consumers at around $299 and $199, respectively.

Put 12 GB on the VX line and 8 GB and 6 GB on the RX lines, and AMD has achieved market segmentation. Of course, skip HBM2 for these consumer lines and go with GDDR6 to keep memory costs affordable. AMD could even drop to GDDR5 or GDDR5X for lower-end models (RX 1050, RX 1030, etc.). Meanwhile, removing Crossfire support from its video cards would reduce manufacturing costs and increase AMD's profit margins.

How does Nvidia counter? It would either need to cut prices or release faster products at reasonable prices. Either way, it's a win for consumers.

Oh, and before I go, what could be AMD's Titan killer? That would be the "AMD Cosmos VX 1099", featuring four GPUs linked the same way as the 2990WX's four dies, with 24-32 GB of HBM2 memory. It would be aimed at the enterprise market, but priced at $2,499 for the 24 GB version and $2,799 for the 32 GB version, it would undercut and potentially crush its competition with sheer brute-force compute power.

