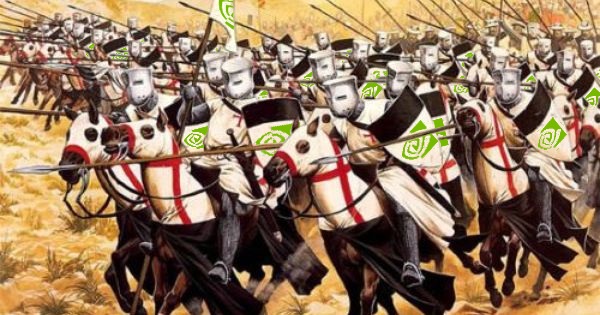

IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003
- Messages: 14,675

A lot of this argument comes from how they try to manipulate the market and lock in consumers.

Aside from GPP (which wasn't 'vendor lock-in'), what you're listing as lock-in are also technologies that they got to market first. The G-Sync case I see repeated here is a great example: they had working hardware while AMD had a hacked-together solution in response, and AMD is still cleaning up the shitshow that is FreeSync so that it's actually competitive with G-Sync across the board.

GameWorks runs on AMD hardware, and sometimes better: it's done in DirectX!

CUDA is another example, but before CUDA, there was nothing. Apple did OpenCL because fruit; AMD picked it up because they had nothing else. Also, CUDA is fast.
