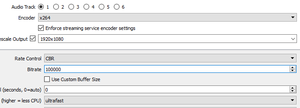

AMD ReLive versus NVIDIA ShadowPlay Performance

We take AMD ReLive, part of AMD Radeon Software Adrenalin Edition, and NVIDIA ShadowPlay, part of GeForce Experience, and find out which one costs less in FPS and CPU usage when recording games while you play. We will compare features and specifications, and determine which one better suits content creators recording gameplay on AMD and NVIDIA GPUs.
