thebufenator

[H]ard|Gawd

- Joined

- Dec 8, 2004

- Messages

- 1,383

Has anyone purchased any of the 8+ GPU mining boards?

I am looking at getting either:

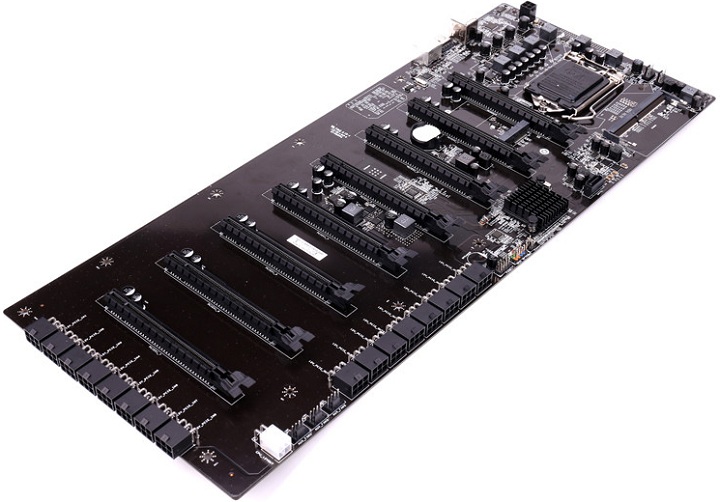

ASRock H110 Pro

https://www.newegg.com/Product/Product.aspx?Item=N82E16813157781

or

Biostar TB250

https://www.newegg.com/Product/Product.aspx?Item=N82E16813138454

Curious if anyone has feedback on them.
