Hi everyone,

I'm new here and new to HW RAID (or any kind of RAID, for that matter), and I recently bought a used Areca 1882ix-24. I haven't installed it yet; it's still waiting to clear customs (taking forever!).

So I have some questions about setting everything up.

For starters, I bought 3 x 10TB HGST drives (model 0F27352), which I'll set up in RAID 5 until I expand to more drives later this year. I wanted 4Kn drives, but when I ordered, these were the only 10TB HGST drives available. Someone more knowledgeable than me has since told me that I won't see any performance loss whether I use 512e or 4Kn drives. But what difference, if any, would I notice between the two in real-world use? This will be a storage server, so no heavy I/O.
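One place where 512e and 4Kn can actually differ is alignment. A 512e drive exposes 512-byte logical sectors on top of 4096-byte physical sectors, so a write that doesn't start and end on a 4096-byte boundary forces the drive into a read-modify-write cycle. Below is a minimal sketch, assuming a 512e drive with those sector sizes; the function names are hypothetical and only illustrate the arithmetic, not anything the Areca firmware exposes.

```python
# Sketch: alignment arithmetic for a 512e drive.
# Assumption: 512-byte logical sectors on 4096-byte physical sectors.
# On a 4Kn drive both logical and physical sectors are 4096 bytes.

LOGICAL_SECTOR = 512      # bytes per logical sector on a 512e drive
PHYSICAL_SECTOR = 4096    # bytes per physical sector (512e and 4Kn)


def is_4k_aligned(start_lba: int, logical_sector: int = LOGICAL_SECTOR) -> bool:
    """Return True if a partition starting at start_lba begins on a physical-sector boundary."""
    return (start_lba * logical_sector) % PHYSICAL_SECTOR == 0


def rmw_needed(offset: int, length: int) -> bool:
    """Return True if a write of `length` bytes at byte `offset` does not start
    and end on physical-sector boundaries, i.e. a 512e drive must read-modify-write."""
    return offset % PHYSICAL_SECTOR != 0 or (offset + length) % PHYSICAL_SECTOR != 0


if __name__ == "__main__":
    # Current partitioning tools typically start the first partition at LBA 2048 (1 MiB).
    print(is_4k_aligned(2048))    # True
    # Older MBR-era tools started at LBA 63, which is misaligned on a 512e drive.
    print(is_4k_aligned(63))      # False
    print(rmw_needed(0, 4096))    # False
    print(rmw_needed(512, 4096))  # True
```

With a properly aligned partition, most writes land on whole physical sectors, which is presumably why the 512e penalty is usually described as small for a mostly-read storage box.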

Another thing: my current system is older and has no free PCIe 2.0 or 3.0 slots except the second PCIe 2.0 slot, which was meant for a second GPU. I do plan to upgrade, but I'm waiting for Cannon Lake to launch. I'm short on funds right now anyway, and I want to see whether they'll change the LGA socket. So my second question is whether the Areca will work properly in this second PCIe 2.0 slot, given that the first one holds the GPU.
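A rough way to think about the slot question is bandwidth. PCIe is backward compatible, so a newer card should negotiate down to 2.0 speeds in that slot; as far as I know the ARC-1882 series is a PCIe 3.0 x8 card, but treat that as an assumption. The sketch below is a back-of-envelope calculation, assuming PCIe 2.0 runs at 5 GT/s per lane with 8b/10b encoding and that each HDD sustains roughly 250 MB/s (an assumed figure, not a spec for these drives). The post doesn't say how many lanes the second slot is wired for, so both x4 and x8 are shown.

```python
# Back-of-envelope: PCIe 2.0 slot bandwidth vs. combined disk throughput.
# Assumptions (not from the original post): 5 GT/s per lane, 8b/10b encoding,
# ~250 MB/s sequential throughput per HDD. Protocol overhead is ignored.

PCIE2_GT_PER_LANE = 5.0        # gigatransfers per second per lane
ENCODING_EFFICIENCY = 8 / 10   # 8b/10b line coding used by PCIe 1.x/2.0
HDD_MB_S = 250                 # assumed sequential MB/s per drive


def pcie2_mb_s(lanes: int) -> float:
    """Approximate one-direction PCIe 2.0 bandwidth in MB/s for a given lane count."""
    bits_per_s = PCIE2_GT_PER_LANE * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e6


def array_mb_s(drives: int) -> int:
    """Best-case combined sequential throughput of `drives` disks in MB/s."""
    return drives * HDD_MB_S


if __name__ == "__main__":
    for lanes in (4, 8):
        print(f"PCIe 2.0 x{lanes}: ~{pcie2_mb_s(lanes):.0f} MB/s")
    for drives in (3, 8, 24):
        print(f"{drives} drives: ~{array_mb_s(drives)} MB/s")
```

Under these assumptions, three drives (~750 MB/s) sit well below even an x4 link (~2000 MB/s), and the slot would only become a bottleneck with many more drives attached.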

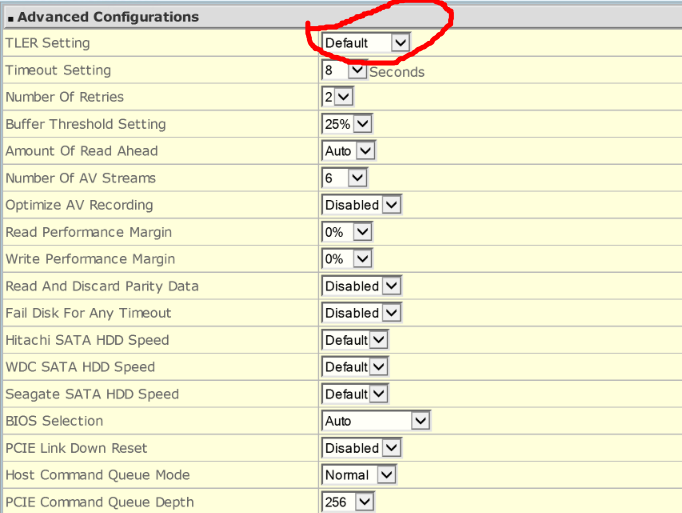

Other than that, I'm not sure what else to ask... any advice on setting up the RAID in the controller's BIOS is appreciated.