Intel(R) Core(TM) i7-8700K CPU @ 3.70GHz (6C 12T 4.3GHz/4.7GHz, 4GHz IMC/4.4GHz, 6x 256kB L2, 12MB L3)

Confirms the specs I leaked.




4.3/4.7 meaning that it can go up to a 4.7 GHz boost? If so, it might be a beast of a chip.

This launch has made me realize what a great investment the 5820K would've been.

A 6C CPU mostly capable of 4.6-4.7 GHz, on the first (?) high-end DDR4 platform. It would've cost an extra $100-$200 over Coffee Lake, but you would've had it a little over 3 years ahead of time. Also, it's soldered, and it's actually better than its replacement 2 YEARS later (the 6800K).

I've been very happy with mine at 4.5 GHz in my Ncase.

The recent PUBG update added 6-core support and actually did noticeably increase my frame-rate minimums, so hopefully (thanks to Ryzen) 6-core support becomes more commonplace.

The 5820K is an unsung hero of the CPU world. If more games could take advantage of it, we'd be calling it the new Q6600 of its time.

What if they announce on the 21st but don't launch until 5 months later? Long wait.


Start planning for what new 8th Gen Intel Core processor-based device to purchase in the holiday season and even before.

We're starting to get Z370 board leaks so it's probably not going to be too long. Also one of the Intel materials mentions the new CPUs being available prior to the holiday season, meaning Sept/Oct most likely.

Thank god. Had Windows and a bunch of other junk software been as optimized as CB15, I'd have gone TR without a second thought. 80% of the software I use is still legacy software that only uses ST and will never get optimization or updates. To make it as fast and as snappy as possible, we need Intel's high (5 GHz) clocks and high IPC. So sad.

CB15 IS legacy. It's 5 years behind their Cinema4D engine, which is now on version 19. And CB15 was made without support for any newer instruction types. It's as legacy as it can be. You can run it on a K6/P3 and still get no performance penalty.

And CB, with its tile-based rendering, can't be used to compare any kind of normal software. It's like claiming the GPU could run all software better and faster than the CPU. It's the same reason nobody uses the CPU for rendering in CB in the first place.

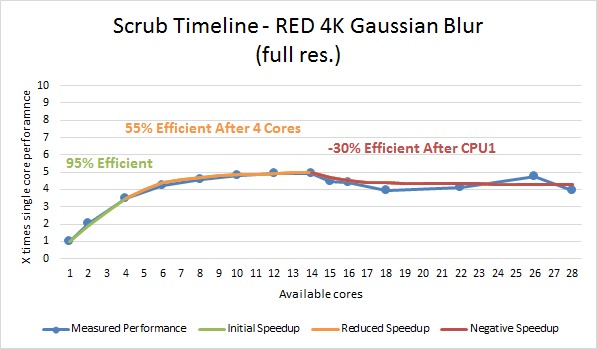

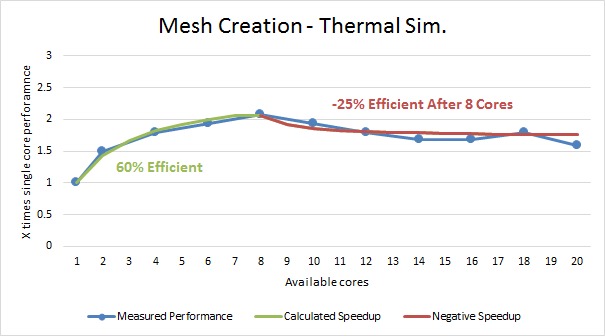

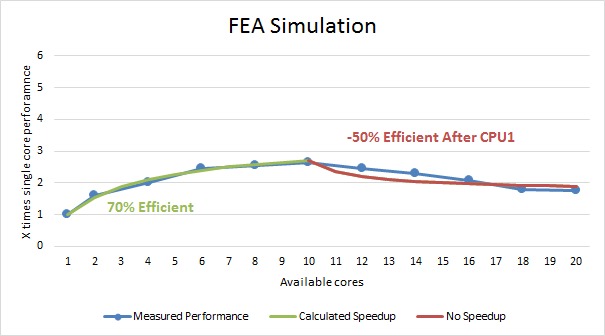

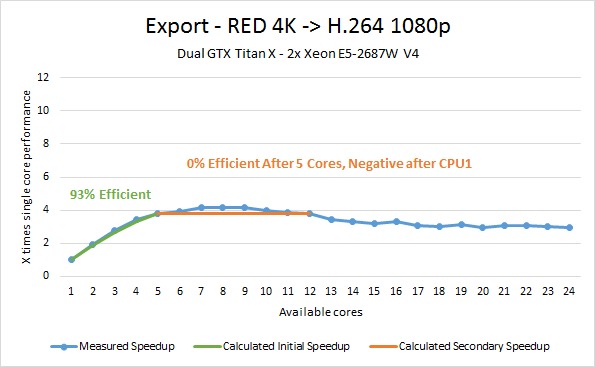

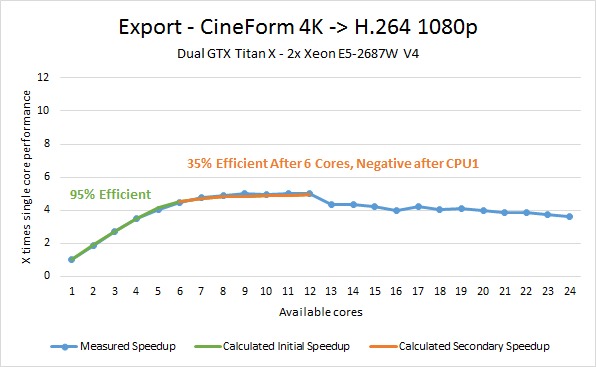

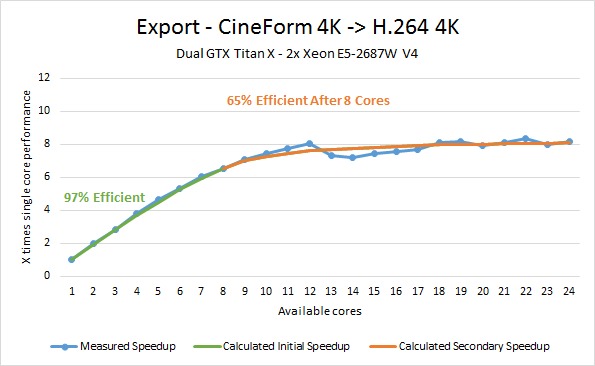

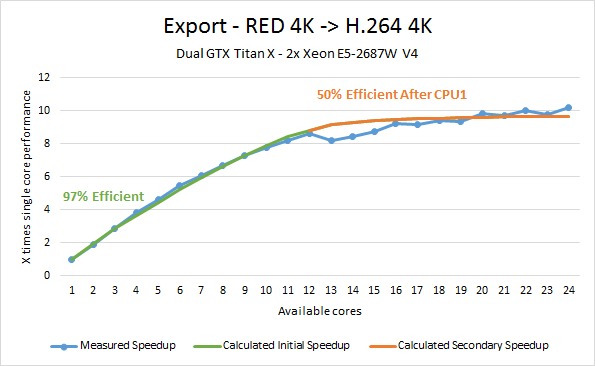

And then there is the fun when multithreading turns into negative performance.

https://blogs.msdn.microsoft.com/dd...ilds-play-part-2-amdahls-law-vs-gunthers-law/

Examples:

Yes, I understand CB is considered "legacy" compared to some other software like Dolphin and enterprise software that uses AVX2 or AVX-512, the newer extensions. However, CB uses AVX and is multithreaded and very well optimized in that regard, whereas the majority of consumer software isn't even close to CB15. Of the programs in the pictures you posted, I use none of them and have actually never heard of them at all rofl. Also, about that efficiency drop after CPU1 as the number of cores grows: wouldn't TSX support in that software help?

CB15 benchmark doesn't use AVX. Cinema4D does.

And using CB15 as an example of multithreading that should be applied to everything else couldn't be more wrong. Also, a quad 8180 system "breaks" CB15.

TSX only works in somewhat limited cases, SQL being one of them. But it doesn't magically solve the issue, and that's in a case where you can scale concurrency.

The reason you have negative scaling is explained in the blog link. Not something you can magically fix.
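The blog post linked above contrasts Amdahl's law with Gunther's Universal Scalability Law (USL). A minimal Python sketch of both curves, assuming made-up contention (alpha) and coherency (beta) coefficients rather than anything measured from a real program: Amdahl's speedup only flattens out as cores are added, while the USL's beta term, which models the cost of keeping cores in sync, makes the curve peak and then fall. That fall is the negative scaling being described.

```python
import math


def amdahl_speedup(cores: int, serial_fraction: float) -> float:
    """Amdahl's law: speedup on `cores` cores when `serial_fraction` of the work stays serial."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores)


def usl_speedup(cores: int, alpha: float, beta: float) -> float:
    """Gunther's Universal Scalability Law.

    alpha: contention (serialization) cost; with beta == 0 this reduces to Amdahl's law.
    beta: coherency/crosstalk cost, which grows with cores * (cores - 1).
    """
    return cores / (1.0 + alpha * (cores - 1) + beta * cores * (cores - 1))


def usl_peak_cores(alpha: float, beta: float) -> float:
    """Core count where the USL curve peaks; adding cores beyond this makes it slower."""
    return math.sqrt((1.0 - alpha) / beta)


# Hypothetical coefficients for illustration only, not measured from any real software.
ALPHA, BETA = 0.05, 0.01

for n in (1, 2, 4, 6, 8, 16, 32):
    print(f"{n:>2} cores: Amdahl {amdahl_speedup(n, ALPHA):5.2f}x, "
          f"USL {usl_speedup(n, ALPHA, BETA):5.2f}x")
print(f"USL peak at ~{usl_peak_cores(ALPHA, BETA):.1f} cores")
```

With these example coefficients the USL curve tops out just under 10 cores, and 16 cores end up slower than 8 (about 3.86x vs 4.19x), while Amdahl's law keeps creeping upward.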

People have to accept that we already have multithreading and it's not going to change much. Multithreading isn't new; it's been around for over 30 years in the professional segments.

In the old days speculative threading was attempted, but you had to "abuse" power to gain a benefit. Think of a 4 GHz 16-core being 20% faster than a 4 GHz quad, but using 300% more power/resources to do it.
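The trade-off in that example is easy to put in numbers. A quick Python sketch, reading "20% faster" as a 1.2x speedup and "300% more power/resources" as 4x the quad's budget (both taken from the hypothetical above); the function name is just for illustration:

```python
def perf_per_watt(speedup: float, power_multiplier: float) -> float:
    """Performance per unit of power, relative to a baseline of 1.0x speed at 1.0x power."""
    return speedup / power_multiplier


quad = perf_per_watt(1.0, 1.0)                # 4 GHz quad-core baseline
sixteen_core = perf_per_watt(1.2, 1.0 + 3.0)  # 20% faster, "300% more" power => 4x

print(f"16-core efficiency vs quad: {sixteen_core / quad:.2f}x")  # prints 0.30x
```

In other words, the speculative-threading 16-core would deliver only about 30% of the quad's performance per watt.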

This is from Adobe Premiere Pro CC 2015.

This is also why an 8700K is the new gaming king without any remotely close competition.

Wait, what? I thought CB15 uses AVX; that's why Sandy Bridge and later CPUs do so much better in ST than the first-gen i7s like the 980 and 990.

Also, I heard this somewhere (can't recall where now, and I don't know if it's true): AVX/AVX2 are memory-intensive, so with only dual channel we usually see a benefit going from non-AVX to AVX workloads, but there's little improvement from AVX to AVX2. Do you know anything about that?

The thing I wanted with the 8700K is the high IPC/core count without the mesh/cache rework, as well as the iGPU, which Skylake-X doesn't have.

AVX isn't used: if you have an AVX CPU, you can run it with AVX disabled and see no difference. Another obvious hint is the power consumption when running CB15: 55-60W on a 95W CPU.

i7 2600 gets ~600 at stock 3.4 GHz.

i7 860 gets ~565 at 3.46 GHz.

i7 980 gets ~790 at 3.33 GHz.
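One way to read those three data points is to normalize each score by clock and core count. A rough Python sketch, assuming the scores are CB15 multi-threaded results (the 6-core i7 980 outscoring the quads suggests so) and using the clocks exactly as quoted, even though they may mix base and turbo figures. The core counts and microarchitecture labels are the chips' published specs, not something stated in the post:

```python
# (CB15 score, clock in GHz as quoted in the thread, physical cores)
CHIPS = {
    "i7 2600 (Sandy Bridge, has AVX)": (600, 3.40, 4),
    "i7 860 (Lynnfield, no AVX)": (565, 3.46, 4),
    "i7 980 (Gulftown, no AVX)": (790, 3.33, 6),
}


def points_per_core_ghz(score: float, ghz: float, cores: int) -> float:
    """Score per core per GHz: a crude per-clock, per-core throughput figure."""
    return score / (ghz * cores)


for name, (score, ghz, cores) in CHIPS.items():
    print(f"{name:<32} {points_per_core_ghz(score, ghz, cores):5.1f} pts/core/GHz")

sandy = points_per_core_ghz(*CHIPS["i7 2600 (Sandy Bridge, has AVX)"])
lynnfield = points_per_core_ghz(*CHIPS["i7 860 (Lynnfield, no AVX)"])
print(f"Sandy Bridge per-clock gain over Lynnfield: {sandy / lynnfield - 1:.0%}")
```

This gives roughly 44.1, 40.8 and 39.5 points per core-GHz, i.e. about an 8% per-clock gain for Sandy Bridge, which is a generational IPC-sized step rather than a sign of heavy AVX use.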

Oh nice, so it's purely an IPC improvement from first gen to Sandy. So what software can benchmark and show the difference in IPC?

The problem is how to measure IPC without the influence of cache, uncore, etc.: chips with eDRAM vs chips without, smaller caches vs bigger ones, and so on.

Cache, one way or the other, wins twice in this example, not actual core performance.

And again here:

Soo..... I know it's been asked, but..... 3770K here for the last 5-plus years.... Really been dying to upgrade and always keep holding back due to these continued new SKUs and release dates.... I want to build a new rig solely for gaming. No streaming, no content creation, etc. Wondering about the PCIe lanes on these mainstream Coffee Lake SKUs, though. I don't think it will make a huge difference, but I would like to keep using my SLI config with two 1080 Ti cards. I am also one of the old die-hard dedicated sound card users, so I want to keep using my X-Fi card in the PCIe x1 slot. I would also like to try out a new NVMe drive to roll a fresh Windows 10 install onto, and keep an SSD or a large 6TB-plus mechanical drive for my gaming needs. I think the upcoming Coffee Lake 8700K would be the best choice. I really don't care about price, but if I can't justify spending over a grand on a CPU just to game, why spend the money if it won't benefit my needs and I don't have to? Thoughts?

Thank god, I will not have to change my mobo. Amazing chip incoming!!

Huh? Unless you have Z370, I believe you do, unless ASRock was wrong.

You probably will.

I think you can swing it with PCIe 3.0 x8 for each GPU and run the X-Fi and an NVMe SSD off the leftover chipset lanes behind DMI 3.0 without much performance loss.
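A back-of-the-envelope lane budget for that build, as a Python sketch. It assumes the usual mainstream desktop layout (16 PCIe 3.0 lanes from the CPU, with everything on the chipset sharing a DMI 3.0 link that is roughly equivalent to PCIe 3.0 x4) and counts per-direction bandwidth at 8 GT/s with 128b/130b encoding. The device list and lane widths are illustrative, not taken from any specific Z370 board manual:

```python
PCIE3_GT_PER_S = 8.0        # PCIe 3.0 transfer rate per lane
PCIE3_ENCODING = 128 / 130  # 128b/130b line-code efficiency


def pcie3_gbytes_per_s(lanes: int) -> float:
    """Approximate one-direction PCIe 3.0 bandwidth in GB/s for a link of `lanes` lanes."""
    return lanes * PCIE3_GT_PER_S * PCIE3_ENCODING / 8  # 8 bits per byte


CPU_LANES = 16
DMI3_LANE_EQUIV = 4  # DMI 3.0 is roughly a PCIe 3.0 x4 link

# Hypothetical allocation for the build described above.
cpu_devices = {"GTX 1080 Ti #1": 8, "GTX 1080 Ti #2": 8}
chipset_devices = {"X-Fi sound card": 1, "NVMe SSD": 4}

assert sum(cpu_devices.values()) <= CPU_LANES, "GPUs need more CPU lanes than exist"

for name, lanes in cpu_devices.items():
    print(f"{name}: x{lanes}, ~{pcie3_gbytes_per_s(lanes):.2f} GB/s")

uplink = pcie3_gbytes_per_s(DMI3_LANE_EQUIV)
# Upper bound: treats every chipset device as running at full PCIe 3.0 speed.
demand = sum(pcie3_gbytes_per_s(n) for n in chipset_devices.values())
print(f"Chipset devices could ask for ~{demand:.2f} GB/s over a ~{uplink:.2f} GB/s DMI link")
```

Under these assumptions, x8/x8 still leaves each card roughly 7.9 GB/s per direction; the shared DMI link only becomes a bottleneck when the NVMe drive is saturating it at the same time as other chipset traffic.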

I can't wait to see these benched vs Ryzen. Man, exciting times!!

Now if only SSDs battled each other to drive down prices...

I'd guess a paper launch.

It's super, super likely an 8700K will outright beat an 1800X in almost all benchmarks (multithreaded included...). Go look at the 1800X reviews from when it came out: in a heap of multithreaded benchmarks, the 7700K was really not that far behind.

That clock speed....

That IPC.

And no, I'm not an Intel fanboy; I buy what's best for my wallet.

If it's backwards compatible, people are going to say Intel changed their mind at the last minute because of all the outrage from the leaks. Mark my words.

Well, let's see: 8 cores is 33.3% more cores than 6. Assuming the 8700K can hit 5 GHz on 6 cores and the 1800X can hit 4 GHz on 8 cores, Intel's frequency is about 25% higher. Intel has about a 6% IPC advantage over Ryzen, so Intel's per-core edge works out to 1.25 × 1.06 = 1.325, just short of the 1.333 core-count ratio. Overall, Ryzen should still have a slight edge in multithreaded scenarios in an ideal situation.

Of course, we know ideal situations don't exist in the real world. CB15 comes very close, as it is well optimized and scales perfectly on Ryzen CPUs, but more cores means worse scaling, so each core in a 6C chip should be more efficient than each core in an 8C chip, unless the CCX design says otherwise.

But yes, I agree a 6C at 5 GHz will come VERY CLOSE to an 8C Ryzen at 4 GHz in multithreaded scenarios. In ST it'll just blow Ryzen away.
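The arithmetic in those posts can be sketched in Python. The first function is the "ideal" model (cores × clock × relative IPC) using the poster's assumptions: 5 GHz on all six 8700K cores, 4 GHz on all eight 1800X cores, and a 6% IPC edge for Intel. The second folds in Amdahl's law with a hypothetical parallel fraction to illustrate the point that more cores scale worse. SMT, memory and the CCX layout are all ignored:

```python
def ideal_throughput(cores: int, ghz: float, relative_ipc: float) -> float:
    """Perfect-scaling multithreaded throughput in arbitrary units: cores * clock * IPC."""
    return cores * ghz * relative_ipc


def amdahl_throughput(cores: int, ghz: float, relative_ipc: float,
                      parallel_fraction: float) -> float:
    """Per-core speed times the Amdahl's-law speedup for the given parallel fraction."""
    speedup = 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)
    return ghz * relative_ipc * speedup


# Assumptions from the post above (not measured): all-core clocks and the ~6% IPC gap.
I7_8700K = dict(cores=6, ghz=5.0, relative_ipc=1.06)
R7_1800X = dict(cores=8, ghz=4.0, relative_ipc=1.00)

intel = ideal_throughput(**I7_8700K)
amd = ideal_throughput(**R7_1800X)
print(f"Ideal scaling: 8700K {intel:.1f} vs 1800X {amd:.1f} ({intel / amd:.1%})")

# Hypothetical workload that is 95% parallel.
p = 0.95
intel_p = amdahl_throughput(**I7_8700K, parallel_fraction=p)
amd_p = amdahl_throughput(**R7_1800X, parallel_fraction=p)
print(f"{p:.0%} parallel: 8700K {intel_p:.1f} vs 1800X {amd_p:.1f}")
```

With perfect scaling the 1800X comes out marginally ahead (31.8 vs 32.0 units), matching the post; at a 95% parallel fraction the faster 6-core pulls ahead (about 25.4 vs 23.7).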