stealthballer123

Limp Gawd

- Joined

- Mar 2, 2017

- Messages

- 299

I want that monitor for 60 bucks.


> Navi wait for Navi!!!!!!!

V-v-volta! Wait for volta giuyz!

Not all FreeSync is "cheap".

See C32HG70, costs the same as XB271HU.

> Uh oh... we need details! But he isn't really allowed to post any. Can he even post what he did without breaking NDA?

I am hoping for 28 MH/s and a break of NDA to make sure all miners know it.

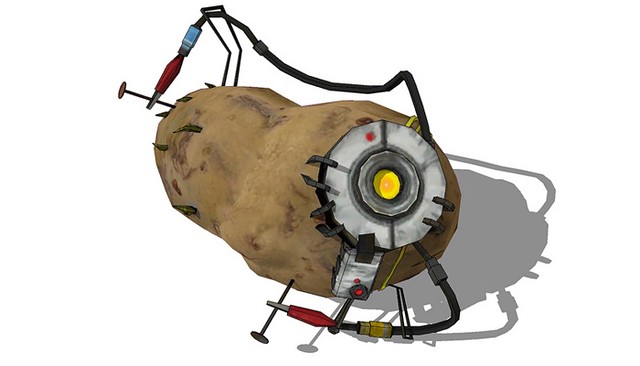

Doom can run flawlessly on a potato.


> I hope HardOCP does some VR testing also, because the VR leaderboard is quite sad to look at if you think of AMD GPUs.

Those leaderboards won't change if this gets bought up by all miners.


> That's actually good advice if someone wants something better than a 1080 Ti.

At this point that is only a real concern for 4K gaming. We are at an inflection point in the technology where you can get by with even lower-end mid-range cards at high settings unless you want 4K.


That can be a serious suggestion even if you are hardcore AMD. Vega prices should drop like a rock when Volta hits.

> That's actually good advice if someone wants something better than a 1080 Ti.

But the same old argument applies: if you don't have a Ti or 1080/Vega 64, you're going to wait at least 4-5 months? I want something better than a Ti too, but I'm not going to wait for god damn Navi lol.


We all want 'the' 4K solution, but as that darn scientist in Half-Life said, 'you'll just have to wait'.

I don't think Volta will be that solution either. Maybe for 4k60Hz but not 120Hz+ and heavy VR games maxed.


https://linustechtips.com/main/topi...ng-performance-is-false-according-to-io-tech/

TweakTown states in the comments that the mining rumor is false, at least according to his testing. A Finnish reviewer is also saying the same thing in the above link.

Also, Austin Evans has backed up the August 28th release date of RX Vega 56 during his Vega unboxing video.

Not false, just misunderstood. Those rates are what the large mining farms will get with drivers they develop, not with what AMD releases.


We don't have evidence that those mining farms have developed better rates. The rumor was based on AMD informing their partners that the cards would have very high hash rates as a result of enabling features in drivers needed for non-mining purposes. If reviewers are using the latest drivers (which they 100% will be), then the rumor is false as is. Unless AMD is holding it back, but I can't think of a reason why. Unless those features don't influence the card's performance except in mining, which is a possibility I guess.


> I believe we are two generations out from a card that can handle 4K 120Hz+

Probably right on the money there. I wonder if Navi could even do it before Nvidia replaces Volta. I hope and pray they have got Navi to at least 4K60-70/Volta and that it can scale like TR; then we might even see 4K120 a little quicker. Either way I want to see the competition. AMD might just have another Ryzen on their hands there; they already have 500 GB/s IF links in Vega.

4-5 months for Volta to launch and Vega prices to be halved might be worth it to the AMD folks. Hell, they've waited years already...

I bought a 1080 on launch so I've been enjoying Vega performance at about the same cost for quite some time now bahahah.

You will never have "evidence" from the mining farms; they have every reason to keep things private. I'm surprised they shared what they can do with AMD, as even knowing what is possible gives an advantage. The rumor was based on an AMD presentation that shared what some of those private mining farm rates are, and it was misunderstood by the audience. The presenter even said those rates are not what AMD could do because they have to enable all features. I talked about this in the RX Vega pre-order thread in regard to the Tom's Hardware article.

The first time this rumor was circulated, it was not to miners or at an AMD presentation that we know of. If you have a link so we can see the dates, that would be great.

That features are disabled, which stops the card from fully utilizing the shader array, is pretty much what this guy is getting at. That would be dumb on AMD's part. The only thing I can think of that would even remotely give that much of an increase is FP16 capability, which I'm pretty sure is enabled. There is nothing else that increases its calculations per second. With that much of an increase in calcs/sec, you would also need increases in cache size and bandwidth proportional to it.

As I mentioned, I talked about it in the pre-order thread. Relevant link from that thread:

http://www.tomshardware.com/news/vega-ethereum-mining-performance-rumor,35160.html

I'm sure it doesn't go into as much detail as you'd like and I don't think that is ever going to happen so it's down to whether you believe the AMD VP or not.

He said nothing about drivers; he talked about changing the firmware. BIOS, man. Yeah, we are talking about RAM timings, functionality, and whatnot. Drivers can't do that.

All miners do that, man. Not only do we do it, we also get a 30% increase in hash rates by doing so. At no time was he talking about Vega specifically; these are just the normal things a miner would do to get the best mining performance out of their cards.

Look up the hash rates for Polaris that are put up on review websites: you will see it gets 21-25 MH/s depending on which memory it comes with. Once you mod it (change its RAM timings), though, it will go up to 29 MH/s easily, and then you overclock it and whatnot to get more.

When I'm single mining I'm getting 32 MH/s. So there ya go, a big difference between default settings and modded ones. And if I don't change the RAM timings, overclocking does almost nothing for the hash rates.

Well, he does go on about public and private hash rates and the private ones being "whoa". Community mining forums must be considered public, and going from ~25 MH/s to ~30 MH/s is not "whoa" to me, and that's what you get with those memory strap mods. Going 2x or 3x in hash rate is "whoa" to me, so I think the 70-100 MH/s is a private rate that is not going to be shared.

I've read of people getting 31-32 MH/s with a 1070 using a recent update to Claymore or ethminer (I think it was the latter that had an updated algorithm with input from Nvidia that sped up rates, and then Claymore followed). You should be able to get mid 30s with your 1080 Ti, assuming you're mining ETH, which might not be the most profitable thing to mine with that card anyway.

Edit: reading the VP quote again, it seems clear that huge increases in hash rate are NOT going to come from an AMD driver update, assuming the quote is genuine and true. He basically says that in order to do that you have to break something that you're not going to be using, and AMD can't do that as they have to enable all features to work. None of the public community mods that I know of required installing a specially tweaked driver. There is a leaked driver that fixes the DAG epoch issue, but that's not a huge hash increase and it doesn't break anything like gaming.

He mentioned OpenCL AND that they go into firmware. They probably also use different algos as well; since large miners solo mine, they can view things differently than those who have to join a pool.

As I said in the pre-order thread:

I'm just trying to point out where the Vega 100 mh/s rumor came from and was misunderstood as coming from an AMD driver update.

It doesn't matter where it comes from: to hit that hash rate, Vega would need 1 terabyte/sec of bandwidth.

All the special bandwidth-saving tech, like compression, doesn't work in compute workloads.
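
A rough back-of-the-envelope check of that bandwidth figure, for anyone who wants to plug in their own numbers. This is only a sketch under stated assumptions that are not from this thread: the Ethash spec constants of 64 DAG accesses per hash at 128 bytes each, and roughly 484 GB/s of advertised memory bandwidth for Vega 64. It ignores caching, overhead, and anything a custom kernel might do differently.

```python
# Rough Ethash bandwidth estimate.
# Assumptions (not from the thread): 64 DAG accesses per hash and 128 bytes
# per access (Ethash spec constants), ~484 GB/s advertised for Vega 64.
ACCESSES_PER_HASH = 64
BYTES_PER_ACCESS = 128
BYTES_PER_HASH = ACCESSES_PER_HASH * BYTES_PER_ACCESS  # 8192 bytes per hash


def required_bandwidth_gb_s(hashrate_mh_s: float) -> float:
    """Memory bandwidth (GB/s) needed to sustain a given hash rate (MH/s)."""
    return hashrate_mh_s * 1e6 * BYTES_PER_HASH / 1e9


def max_hashrate_mh_s(bandwidth_gb_s: float) -> float:
    """Upper bound on hash rate (MH/s) if memory bandwidth is the only limit."""
    return bandwidth_gb_s * 1e9 / BYTES_PER_HASH / 1e6


if __name__ == "__main__":
    for rate in (30, 70, 100):
        print(f"{rate} MH/s needs about {required_bandwidth_gb_s(rate):.0f} GB/s")
    print(f"484 GB/s caps out near {max_hashrate_mh_s(484):.0f} MH/s")
```

Under those assumptions, 100 MH/s works out to roughly 820 GB/s of DAG reads, which is in the same ballpark as the 1 TB/s figure above.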

Apparently even the reviewers' packaging calls for a minimum 1000 W PSU, at least for the one who got the AIO version.

I have a Corsair 750W power supply. Do I need to upgrade this if I want to run a Vega 64 Liquid? Do we know yet, or should I wait to find out on Monday?


I'm not trying to rehash the arguments from when the rumors first came out a week or so ago. I'm not an expert in this area anyway, and if I was and knew how to, frankly I wouldn't be sharing it with you or any open forum. I'd be shopping my resume to the large mining firms for the big bucks.

Our algorithm, Ethash (previously known as Dagger-Hashimoto), is based around the provision of a large, transient, randomly generated dataset which forms a DAG (the Dagger-part), and attempting to solve a particular constraint on it, partly determined through a block's header-hash.

It is designed to hash a fast verifiability time within a slow CPU-only environment, yet provide vast speed-ups for mining when provided with a large amount of memory with high-bandwidth. The large memory requirements mean that large-scale miners get comparatively little super-linear benefit. The high bandwidth requirement means that a speed-up from piling on many super-fast processing units sharing the same memory gives little benefit over a single unit.

> But the same old argument applies: if you don't have a Ti or 1080/Vega 64, you're going to wait at least 4-5 months? I want something better than a Ti too, but I'm not going to wait for god damn Navi lol.

At that time you're also looking at a refresh of Vega. With the typical 3-5 year upgrade cycle for most of the market, 6 months is nothing.

> Which is actually worse than before, because their effectiveness is rather limited right now, i.e. they don't offer any tangible performance increase in current games. Vega appears locked at 4 tri/cycle. At least with developer intervention, hope is there for it to offer more with proper handling. Now it won't even do that.

You act like 4 tri/cycle is actually a problem. That's 4/cycle before you start tessellation and is based on binning geometry into four quadrants in screen space. For almost all titles, excluding CAD, that is more than sufficient. They will offer tangible gains in current games as Mantor explained. Just not in peak geometry rate without explicit programming. That wasn't a possibility before and is something devs have requested.

> Did you read Vega's white paper?

No, it hasn't been released yet. You get an advance copy? All I've seen was the ISA paper.

> There is no damn difference between older GCN and Vega when it comes to instruction sets lol (outside of FP16). You know what that means? Primitive shaders are nothing new. They are just using a different name for what programmers have used in the past.

Beyond the 40ish new instructions that were exposed? That's not necessarily all of them either, just what's released.

> And ya RYS, what he just stated is BS, he lied... He didn't answer the question properly. It doesn't automagically work; AMD has to do the work, and guess what, that is why you won't see more than Polaris's geometry throughput most of the time. I have already confirmed this. So unless the application is changed to take advantage of primitive shaders, we will not see any improvements in Vega when it comes to geometry throughput.

It doesn't work because it just works? The drivers have always done that work, the only difference being they are likely culling a bit more efficiently. The attribute fetching was the big one Mantor mentioned that should work with existing games. It would likely entail their intelligent workgroup distribution as well.


Primitive shaders get rid of the black box and allow a programmable pipeline. Beyond what used to be driver optimizations, they would be limited by the old pipeline structure without game-specific testing. A lot is possible without those limitations, and that's what devs have been presenting papers on.


Eventually primitive shaders will have dynamic memory and scheduling capabilities. They seem to exist to control AMD's binning process in addition to optimizing the culling, passing hints from prior frames to better predict distributions.

The problem with that is people like Claymore take 2% of your mining profits. Anyone who could make mining software run that much faster would make a KILLING by taking that cut from pool miners. The biggest pools have close to 20,000 workers. You know what that means: 2% of those 20,000 workers, at 6 cards per worker, is how much money?

Yeah, shop around to private miners and they will pay you a flat rate plus maybe a small percentage if you are that good. Guess what: when we are talking about hundreds of thousands of graphics cards being used in pools, that 2% will blow away anything one guy can give.
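
To put a number on that question, here is a minimal sketch of the arithmetic. The 2% fee, 20,000 workers, and 6 cards per worker come from the post above; the revenue per card per day is a made-up placeholder (nobody in this thread gave one), so swap in your own figure.

```python
def daily_fee_income(workers: int, cards_per_worker: int,
                     revenue_per_card_usd: float, fee_rate: float) -> float:
    """Daily income from a percentage fee taken across every card in a pool."""
    total_cards = workers * cards_per_worker
    return total_cards * revenue_per_card_usd * fee_rate


if __name__ == "__main__":
    # HYPOTHETICAL: $3 per card per day is a placeholder, not a measured value.
    income = daily_fee_income(workers=20_000, cards_per_worker=6,
                              revenue_per_card_usd=3.0, fee_rate=0.02)
    print(f"Fee income: ${income:,.0f} per day")
```

With that placeholder, 120,000 cards at a 2% fee comes to about $7,200 a day from a single large pool.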

This is why solo mining is not recommended: pool luck will get you every time. You need a butt load of rigs to ensure pool luck for yourself, and even then it still hurts when you have a few hours of bad luck. It can go on for a day or two as well, not just hours.
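
A quick way to see how bad those dry spells can get is to treat block finding as a Poisson process. This is only a sketch: the 15-second network block time and the example share of network hash rate are assumptions for illustration, not figures from this thread.

```python
import math


def expected_blocks(share: float, hours: float, block_time_s: float = 15.0) -> float:
    """Expected blocks found by a miner holding `share` of network hash rate."""
    return share * hours * 3600.0 / block_time_s


def prob_no_block(share: float, hours: float, block_time_s: float = 15.0) -> float:
    """Chance of finding zero blocks in the window (Poisson model)."""
    return math.exp(-expected_blocks(share, hours, block_time_s))


if __name__ == "__main__":
    share = 0.0001  # HYPOTHETICAL: a farm with 0.01% of the network hash rate
    for hours in (6, 24, 48):
        print(f"{hours:>2} h: expect {expected_blocks(share, hours):.2f} blocks, "
              f"{prob_no_block(share, hours):.0%} chance of none")
```

Under those assumptions, even a farm that averages a bit over half a block per day will go a full day with nothing more than half the time, which is the variance a pool smooths out.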

Simple economics.

I think I know enough about programming to know what can and can't reasonably be done. Whoever came up with that 100 MH/s number pulled it out of their ass; it's just not possible to do that much with one card right now.

And if you don't believe me about ETH being bandwidth bound, just look up Dagger-Hashimoto; that is the algorithm ETH's blockchain is based on.

They don't use multiple algorithms per coin; it's one algorithm and that is it (some coins are combinations of previous algorithms, but the mining software must use all of those algorithms as specified by the coin, otherwise it can't mine that coin).