Would be nice to see some 100Hz 4K monitors. I still think HDR monitors will make a bigger impact once they are more readily available and costs come down. Frame delivery tech (FreeSync (2) and G-Sync), I do agree, is excellent at improving the experience. Would most people be able to tell 60Hz FreeSync from 100Hz FreeSync? I am not sure. I would suspect not, but I really don't know, especially if motion blur is used.


Blind Test - RX Vega FreeSync vs. GTX 1080 TI G-Sync

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

100Hz vs. 60Hz?

Night and day.

[I don't condone the use of global motion blur; fine if they use it for cinematics, but leave it off for the point of focus during gameplay, at least]


Very interesting video, which makes one want to see more videos like these to explore what people really experience and think. Very professionally done, to the point (refreshing), and it has good impact. I'd love to see more comparison videos with real folks, for example:

- HDR monitor to SDR

- 60Hz FreeSync or G-Sync to the 100Hz version

- Vive and Rift

- Ultra/Max quality to Very High Quality settings in games, maybe even to high settings

- Gaming on same GPU/Monitor but different CPUs

I believe you, but for most people I'm not sure.

As for motion blur, I too mostly dislike it. The only game I do like it in is Doom, which also happens to be the only game I've ever liked depth of field in as well.

Again: not how it works.

But this whole concept is based on perception, is it not? If you're pushing the frames, it's a non-issue.

As I said previously: every time you pass a multiple of the refresh rate, you get a new tear line.

~360 FPS at 60Hz = 6 torn frames per refresh.

High framerates don't eliminate tearing. Syncing frames eliminates tearing.

VRR allows you to sync frames with negligible latency so long as you keep the framerate below the display's maximum refresh rate.

If you can get the framerate high enough (at least 10x the refresh rate, higher preferred) it stops looking like tearing and just looks like the image is warping, but nobody wants that either.
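The frames-per-refresh arithmetic above can be sketched as follows. It assumes perfectly steady frame delivery with sync disabled and uses the post's rough 10x ratio as the point where tearing starts to read as warping; that threshold is a rule of thumb from this thread, not a measured constant, and `frames_per_refresh`/`appearance` are hypothetical helper names.

```python
# Rough model of unsynced output (V-Sync and VRR both off).
# Assumption: frames arrive at a perfectly steady rate.

WARP_RATIO = 10.0  # rule of thumb from the thread, not a measured constant


def frames_per_refresh(fps: float, refresh_hz: float) -> float:
    """Average number of different frames that appear during one refresh."""
    if fps <= 0 or refresh_hz <= 0:
        raise ValueError("fps and refresh_hz must be positive")
    return fps / refresh_hz


def appearance(fps: float, refresh_hz: float, synced: bool = False) -> str:
    """Very rough description of what the output looks like.

    synced=True means V-Sync on, or VRR with the framerate inside its range.
    """
    if synced:
        return "no tearing"  # only whole frames are ever scanned out
    if frames_per_refresh(fps, refresh_hz) >= WARP_RATIO:
        return "warping"  # slices so thin they blend into a skewed image
    return "tearing"


if __name__ == "__main__":
    for fps, hz in [(360, 60), (650, 100), (1200, 100)]:
        print(fps, hz, frames_per_refresh(fps, hz), appearance(fps, hz))
```

With these numbers, ~360 FPS at 60Hz gives the 6 frames per refresh mentioned above, while 1200 FPS at 100Hz crosses the 10x line.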

I would rather take a slower GPU and a VRR display than a faster GPU and a fixed refresh rate display; e.g. GTX 1070 + 144Hz G-Sync vs GTX 1080 + 144Hz display.

That's not how it works.

The higher the framerate, the more tearing you get. For every multiple of the refresh rate that you cross, you get an extra tear line added on-screen.

What does change is that the gap between tears gets smaller at higher framerates though.

Eventually when the framerate gets high enough (maybe 10x the refresh rate) it just looks like the image is warping when you look around, instead of tearing.

I'm not sure why anyone here is suggesting that VRR and V-Sync should be disabled.

How are you supposed to make a good comparison when the game is stuttering and the screen is tearing?

The flaw with this test was not that VRR was being used; it was not choosing settings demanding enough to keep the fastest GPU below 100 FPS at all times, since these were 100Hz displays.

A couple things.

1.) You are technically correct that in the scenario of high refresh (144Hz) and high FPS (something approaching or above 144 fps), there are technically more torn screens. These are invisible to the naked eye because, despite more of them existing, they are being refreshed with whole frames faster than you can see the intermittent torn frame. It's why freesync/gsync doesn't take off with 'hardcore' gamers. Freesync/gsync makes sense in low-fps/low-refresh situations. Think about it like this: 2 torn frames in one second at 30fps on a monitor with a 60Hz refresh rate is going to look terrible. The 60Hz refresh of the monitor has a bit of visible vertical refresh to it, and the torn screens themselves will also be consistently visible in real time. This situation can definitely be resolved with freesync/gsync. The extrapolation of this effect is quite different in the case of 10 torn frames a second at 100+ fps on a monitor refreshing at 144Hz. It's pretty much invisible to the naked eye. The vertical refreshing isn't visible, and the 10 torn frames @ 144fps in 1 second just can't be seen.

2.) The image does not look like it is warping.

3.) Vsync should always be disabled in dexterity-based games. Do you understand how vsync works? Once you enable vsync, your reactions are always several frames behind because of the frame buffer.

4.) freesync/gsync is not really vsync. It's kinda what vsync should be, and it's pretty cool, but it has a fairly particular use case, which we will get to in a moment. It's not really something people would generally want to use on a high-performance rig in a twitch game, as both have been shown to increase latency to some extent.

5.) 4K gaming is a hard sell. The monitors aren't really on point, and there aren't really single-card setups capable of driving them. Freesync/gsync is the solution to this scenario: it allows you to game smoothly at 4K. It's not optimal, but it's a cool trick. Nobody is using freesync/gsync at 100+Hz/100+fps. It makes no sense, would introduce latency, and doesn't add any appreciable visual fidelity. Freesync/gsync are designed for systems that can't push an optimal fps or monitors that have a sub-optimal refresh rate. It's a good catch-all for high- or low-end hardware that is over-extending itself in a demanding application: it turns what would be a pretty terrible experience into a pretty great one.

DarkLegacy

[H]ard|Gawd

- Joined

- Dec 26, 2004

- Messages

- 1,097

I'm not about smoke-and-mirrors blind tests. Just show me some benchmarks. That is what most PC enthusiasts really want to see.

I also think they are trying to generate excitement without actually spilling the beans on its performance.

My guess... the GPU is lacking on the performance/power metric vs. NVIDIA... so AMD PR is trying to get people to focus on something other than the GPU and performance/Watt... simple as that.

I'm not sure where you're getting "2 torn frames per second" or "10 torn frames a second" from.

If V-Sync is disabled, every frame tears, not just some of them.

What changes is how many tear lines you get. Below the refresh rate you get a single tear line and its position moves up or down with the framerate. Above the refresh rate you get multiple tear lines. (one for every multiple of it)
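To make the tear-line geometry concrete, the sketch below places tear lines within one refresh. It is a simplified model under stated assumptions: scanout spans the whole refresh interval (blanking is ignored), frame times are perfectly constant, and `phase_s` is a hypothetical offset for when the first buffer flip lands. The 1440-row height is only an illustrative value.

```python
import math


def tear_gap_px(fps: float, refresh_hz: float, height_px: int) -> float:
    """Approximate vertical distance between adjacent tear lines."""
    return height_px * refresh_hz / fps


def tear_rows(fps, refresh_hz, height_px, phase_s=0.0, refresh_index=0):
    """Rows (truncated to int) where tear lines fall during one refresh, V-Sync off."""
    frame_time = 1.0 / fps
    interval = 1.0 / refresh_hz
    start = refresh_index * interval
    end = start + interval
    rows = []
    k = math.ceil((start - phase_s) / frame_time)  # first flip at or after start
    t = phase_s + k * frame_time
    while t < end:
        if t > start:  # a flip exactly at the top of scanout leaves no visible tear
            rows.append(int((t - start) / interval * height_px))
        k += 1
        t = phase_s + k * frame_time
    return rows


if __name__ == "__main__":
    print(tear_rows(650, 100, 1440))                       # above the refresh rate
    print(tear_rows(50, 100, 1440, phase_s=0.003))         # below it
    print(tear_rows(50, 100, 1440, 0.003, refresh_index=1))
```

At ~650 FPS on a 100Hz panel this model gives six tear lines roughly 220 rows apart, while at 50 FPS on the same panel a refresh gets at most one tear and every other refresh gets none.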

Screen tearing is a constant thing, and I don't know how anyone would miss it. It certainly does not disappear for me at high framerates/refresh rates.

It does become less obvious as distinct "tearing" and looks more like the image is warping at very high framerates though, unless there are flashing lights which look like flash strobes that are out of sync.

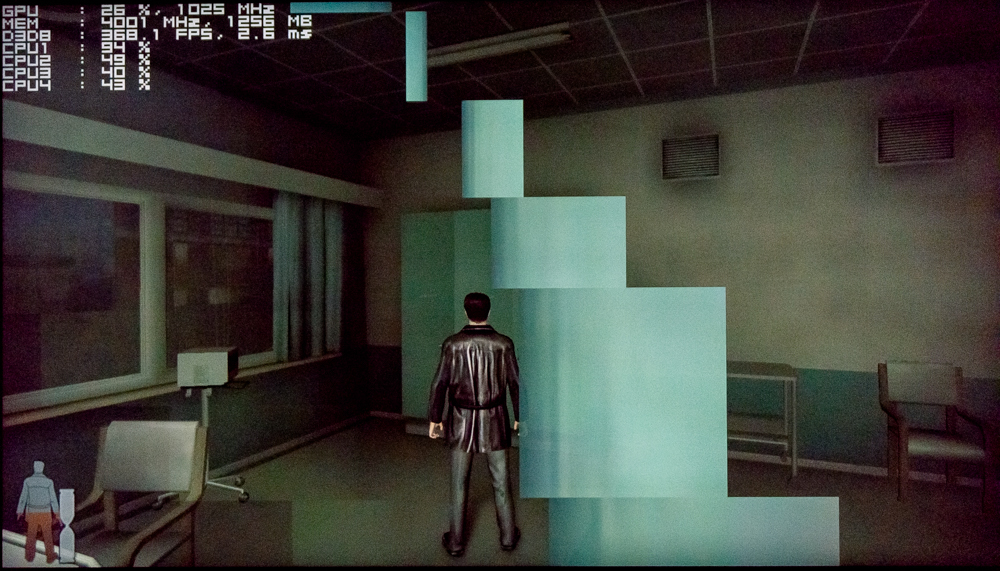

Strafing left while synced at 100 FPS:2.) The image does not look like it is warping.

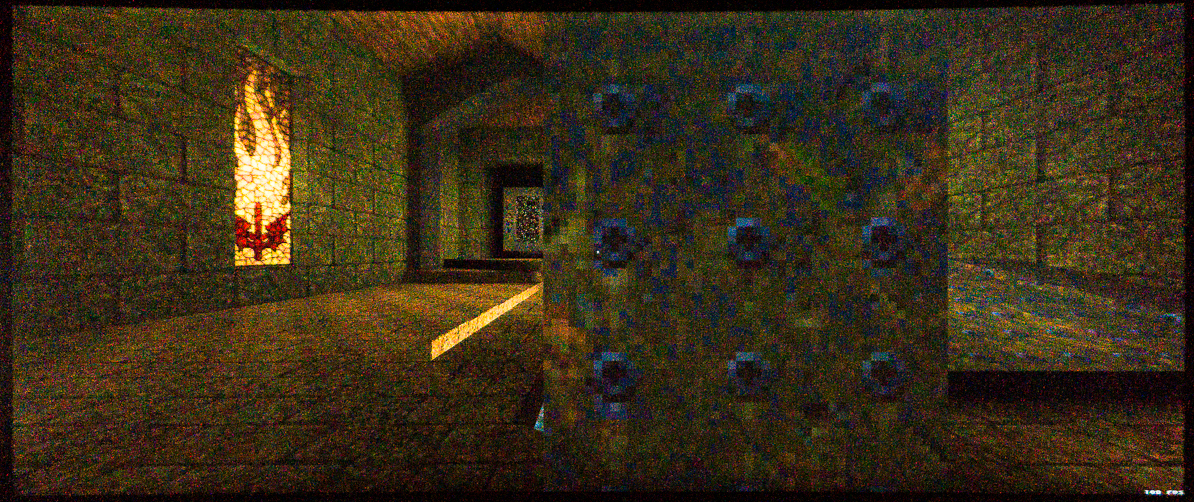

Strafing left with no sync ~650 FPS:

Images are super noisy because Quake is dark and they were shot at 1/800s (ISO 25,600). Monitor is a PG348Q.

It warps much more when aiming with the mouse, since that turns your view many times faster than strafing does.

The comparison was a lot easier to set up with strafing though. I'm honestly shocked at how closely aligned the two photos ended up.

EDIT: If anyone wants to replicate this, you need to enter the following commands into the console:

Code:

cl_rollangle 0

cl_bob 0

r_drawviewmodel 0

/EDIT

3.) Vsync should always be disabled in dexterity based games. You understand how vsync works? Once you enable vsync, your reactions are always several frames behind a frame buffer.

"V-Sync" on a G-Sync display is not standard V-Sync. It's basically acting as frame-time compensation to prevent tearing.

With V-Sync + G-Sync, you can cap the framerate ~3 FPS lower than the maximum refresh rate and you will have effectively the same latency as V-Sync/G-Sync being completely disabled, with the added benefits of accelerated scan-out, and no screen tearing.

If you disable V-Sync while G-Sync is active, you have to set the framerate cap significantly lower than the maximum refresh rate (20 FPS or more) to avoid screen tearing because there is no frame-time compensation. It doesn't offer any latency benefit to do this.
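One way to see why a ~3 FPS cap works with V-Sync's frame-time compensation, while a limiter alone needs a much larger margin, is to compare the capped frame time with the panel's fastest refresh interval. The helper names below are hypothetical, and the 3 FPS margin comes from this post, not from a vendor specification.

```python
def frametime_ms(fps: float) -> float:
    """Time budget for one frame at a given framerate."""
    return 1000.0 / fps


def gsync_cap(max_refresh_hz: float, margin_fps: float = 3.0) -> float:
    """Framerate cap a few FPS under the panel's maximum refresh (rule of thumb)."""
    return max_refresh_hz - margin_fps


def headroom_ms(cap_fps: float, max_refresh_hz: float) -> float:
    """How much longer a capped frame takes than the fastest refresh the panel allows.

    If a single frame arrives more than this many ms early, it is delivered
    faster than the panel can refresh; per the thread, V-Sync then holds it,
    otherwise it tears.
    """
    return frametime_ms(cap_fps) - frametime_ms(max_refresh_hz)


if __name__ == "__main__":
    for hz, cap in [(100, gsync_cap(100)), (100, 80), (144, gsync_cap(144))]:
        print(f"{hz}Hz, cap {cap:g} FPS: {headroom_ms(cap, hz):.2f} ms of headroom")
```

A 97 FPS cap on a 100Hz panel leaves only about 0.31 ms of headroom per frame, versus 2.5 ms at an 80 FPS cap, which fits the larger margin needed when nothing holds early frames back.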

It's much better than V-Sync, and the difference in latency is effectively zero for a high-refresh-rate panel, and minimal for other panels (1-2ms if running at the same framerate).

4.) freesync/gsync is not really vsync. It's kinda what vsync should be, and it's pretty cool, but it has kinda particular usage which we will get to in a moment. It's not really something that people would generally want to use in a high performance rig in a twitch game as both have been shown to increase latency to some extent.

I think I've made a pretty clear case for it not only being about making low framerates smoother.


zone74, we get it, man: your 60Hz panel has like 10+ms response time and looks terrible. You should try to understand what people are saying rather than getting defensive. You might learn something and save yourself some money. Your panel is the issue. I'm sure it's very good at lots of things, but the issue in all your pictures has to do with the panel having a low refresh rate and a very slow response time. Is it an early IPS?

Edit: NM, you gave the model number and it's definitely an IPS monitor. It probably does need freesync/gsync. Well...not everyone pays 1000 dollars for a molasses monitor. I hope the color reproduction on that is exceptional, because you have given everyone several reasons to never purchase that monitor.


Deleted member 278999

Guest

There's a huge article on Blur Busters about this:

http://www.blurbusters.com/gsync/gsync101-input-lag/

I thought that Fast Sync automatically capped your FPS at the maximum refresh rate, essentially dumping frames above what's set and keeping GSYNC enabled?

To me, GSYNC w/ Fast Sync provides the smoothest gameplay.

Unfortunately, it looks like Blur Busters didn't test GSYNC w/ Fast Sync without an in-game FPS limiter for input latency.

Factum

2[H]4U

- Joined

- Dec 24, 2014

- Messages

- 2,455

I also think they are trying to generate excitement without actually spilling the beans on its performance.

You have doubts about the performance?

I know (via AMD's PR) what to expect...and what not to expect.

I suspect that I am not alone either.

Hence why I try to avoid the "debate" right now...


The PG348Q is a 100Hz IPS panel with a 5ms response time. You are looking a hell of a lot more defensive than he is right now, especially as you seem to be getting pissy at someone telling you that you are wrong about something. V-Sync acts how it acts regardless of the monitor.

Nothing I posted has anything to do with response time.

The warped appearance comes from drawing multiple frames in a single refresh when you disable V-Sync.

The more frames being displayed in a single refresh, the more it looks like a warped image rather than a torn image, since the gaps between each torn frame can be close enough to overlap at slower movement speeds.

If you use a triple-buffered mode (Borderless/Fullscreen Windowed Mode, Fast Sync on NVIDIA, or Enhanced Sync on AMD), you can render more frames than the maximum refresh rate, but only the most recent complete frame will be displayed when the screen refreshes, so you don't get tearing/warping.

It's still higher latency than disabling V-Sync or using G-Sync though, and it won't be smooth like G-Sync is.
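The "only the most recent complete frame is displayed" behavior can be sketched as a latest-frame-wins selection. This is a toy model under idealized assumptions (integer rates, perfectly regular frame completion, instantaneous scanout), not NVIDIA's or AMD's actual implementation, and `fast_sync_schedule` is a hypothetical name. The "age" it reports is only one component of the added latency; it shrinks as the render rate rises, which is consistent with the point that Fast Sync needs a much higher framerate before its latency becomes comparable.

```python
import math
from fractions import Fraction


def fast_sync_schedule(render_fps: int, refresh_hz: int, refreshes: int):
    """Model 'latest complete frame wins' presentation over a number of refreshes.

    Assumptions: integer rates, frames finish exactly every 1/render_fps seconds
    (frame 1 at t = 1/render_fps), and refresh n happens at n/refresh_hz.
    Returns (frame shown at each refresh, age of that frame in ms,
    number of rendered frames that were never shown).
    """
    frame_time = Fraction(1, render_fps)  # exact arithmetic avoids float drift
    interval = Fraction(1, refresh_hz)
    shown, ages_ms = [], []
    for n in range(1, refreshes + 1):
        t = n * interval                     # time of the n-th refresh
        newest = math.floor(t / frame_time)  # newest frame finished by then
        if newest < 1:
            continue                         # nothing rendered yet
        shown.append(newest)
        ages_ms.append(float((t - newest * frame_time) * 1000))
    rendered = math.floor(refreshes * interval / frame_time)
    dropped = rendered - len(set(shown))
    return shown, ages_ms, dropped


if __name__ == "__main__":
    print(fast_sync_schedule(250, 100, 4))
```

With 250 FPS on a 100Hz panel, for example, frames 2, 5, 7 and 10 are shown, six frames are discarded, and the displayed frame alternates between 2 ms old and fresh.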

Fast Sync essentially breaks G-Sync when you get near your maximum refresh rate. You shouldn't be combining the two.

It takes a framerate 5x higher than G-Sync for Fast Sync latency to be comparable.

The rules to follow for the smoothest and lowest-latency G-Sync are: keep V-Sync enabled in the NVIDIA Control Panel, and cap the framerate 3 FPS below the maximum refresh rate, using the game's own limiter if it has one or RTSS if it does not.

The only exception to this is if your system can consistently render significantly more frames than the maximum refresh rate of your monitor (at least 2x), and even then only if you disable V-Sync/G-Sync entirely.

That's only going to be 1 frame in the worst-case scenario (60Hz display).

With 144Hz or higher refresh rate G-Sync displays it is less than a frame, and drops to only 1ms at 240Hz.
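Since the worst case here is described in frames, it helps to see how long one frame is at each refresh rate. The sketch below just converts between milliseconds and refresh intervals; it is plain arithmetic with hypothetical helper names and says nothing about how G-Sync is implemented.

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Duration of one refresh cycle (one 'frame' of display time)."""
    return 1000.0 / refresh_hz


def ms_to_frames(latency_ms: float, refresh_hz: float) -> float:
    """Express a latency figure as a fraction of one refresh interval."""
    return latency_ms / refresh_interval_ms(refresh_hz)


if __name__ == "__main__":
    for hz in (60, 100, 144, 240):
        print(f"{hz:>3} Hz: {refresh_interval_ms(hz):5.2f} ms per refresh")
```

For example, `ms_to_frames(1.0, 240)` is about 0.24, so the 1ms figure quoted for 240Hz is roughly a quarter of a refresh interval, while one full frame at 60Hz is about 16.7 ms.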


Deleted member 278999

Guest


Interesting. I will have to give it a try when I get my system back together.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,054

Why have V-Sync enabled at all if you are just going to cap the framerate below the max refresh rate? Doesn't make any sense.


Toriessian

Limp Gawd

- Joined

- Jan 10, 2017

- Messages

- 196

Do we get to see any VR test data in the Vega review? I'm curious.

Because it acts as frame-time compensation when combined with G-Sync.

Without the frame-time compensation, you have to set the framerate limit considerably lower to avoid frame-time spikes causing the game to stutter and tear for a moment.

Yeah, it's going to be interesting.

Honestly, I'm expecting them to be burned at the stake, especially after all the frustration.

It'll be a hoot to see the GPU benchers screaming that Freesync needs to be used in the benches.

Magic Hate Ball

Limp Gawd

- Joined

- Jul 28, 2016

- Messages

- 366

I would like to know what and where FreeSync II is.

Same.

I picked up the C32HG70 for $575 and I'm wondering when my software will say Freesync 2 for it instead of just Freesync... if it ever will?

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,054

If you say so. I have not experienced this in my entire time using G-Sync without V-Sync. You should not be coming even close to the maximum refresh rate when using G-Sync, anyway. If you are, you should be using ULMB instead.

- Joined

- May 18, 1997

- Messages

- 55,601

Hopefully.

- Joined

- May 18, 1997

- Messages

- 55,601

I intend to do another full series of VR testing when these new GPUs are ready.

Time allotted? Maybe better to do a full VR test after?

Long-time reader, first-time poster. Sorry to say, but this test was pointless. It would be like asking drivers which felt better while towing, a Ford truck or a Chevy truck, and then having each truck tow a wheelbarrow containing a 50 lb bag of cement. Since each card can play the game easily, what is the test?

OutOfPhase

Supreme [H]ardness

- Joined

- May 11, 2005

- Messages

- 6,578


To correct your car analogy (why must we do this?) it would be more like both trucks towing another car around some hills. Something they both really should be able to do well of course, and in a road test, actually do.

Actually doing what you'd expect it to do in a live blind test is useful information, if not entirely surprising. If Vega came up wildly short, you'd want to know, right? So, it didn't, and that is a data point. No, it is not the only data point we ever wish to see.

If you follow this site at all, you know Kyle full well knows the limitations of what he tested, and that it is not the only thing you'll read here over the coming weeks.

- Joined

- May 18, 1997

- Messages

- 55,601

If you watched my comments at the end, I think I summed it up rather poignantly.

If you're avoiding the conditions that cause this, you aren't going to see the problem.

You should not be coming even close to the maximum refresh rate when using G-Sync, anyway.

Where I notice it most is in FPS games when flicking the camera around quickly.

With G-Sync + V-Sync, capping to 97 FPS at 100Hz keeps things smooth, low latency, and tear-free.

With G-Sync enabled and V-Sync disabled, I have to reduce the cap to about 80-85 FPS depending on the game, or else it will frequently tear and stutter for a brief moment if I flick the camera around.

As long as you're implementing a framerate limiter, there's no reason to avoid enabling V-Sync in the NVIDIA Control Panel.

There are times when you may want to enable or disable it inside a game though.

ULMB requires that the framerate is kept equal to the refresh rate and never drops; similar to VR, only you don't have reprojection or time warp to fall back on.

The biggest problem with ULMB is that it's so restricted. 85/100/120Hz is no use for games that are capped to 60 FPS for example, and staying above 85 FPS at all times is basically impossible in many new games. (60 FPS at 120Hz = double images with ULMB)
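The double-image effect in that last parenthetical follows from how strobing works: assuming the backlight flashes once per refresh, a frame held for two refreshes is flashed twice, and eyes tracking motion see the two flashes at different positions. The sketch below only counts strobes per frame under that assumption; the function names are illustrative.

```python
def images_per_frame(fps: float, refresh_hz: float) -> float:
    """Approximate number of strobed copies of each rendered frame.

    Assumes one backlight strobe per refresh and that frames above the
    refresh rate are not shown (ULMB runs at a fixed refresh rate).
    """
    return max(1.0, refresh_hz / fps)


def ulmb_verdict(fps: float, refresh_hz: float) -> str:
    n = images_per_frame(fps, refresh_hz)
    if n == 1.0:
        return "clean: one strobe per frame"
    if n.is_integer():
        return f"{int(n)} images per frame"
    return "uneven repeats: some frames strobed more often than others"


if __name__ == "__main__":
    for fps, hz in [(60, 120), (120, 120), (85, 100)]:
        print(fps, hz, ulmb_verdict(fps, hz))
```

60 FPS at 120Hz comes out as two images per frame, matching the post, while dipping to 85 FPS at 100Hz gives an uneven mix of single and doubled frames.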


ULMB is a non-starter for me given the limitations there are on brightness.

Hopefully that's something HDR displays with backlights capable of 1000-nit brightness will help improve.

- Joined

- May 18, 1997

- Messages

- 55,601

Sorry, do not know.

When will it come out, Kyle? Or is it under NDA as well?

Was that really likely, though, given that other AMD cards play Doom well? Please tell me what else we learned, other than yeah, both cards can crush Doom.

OutOfPhase

Supreme [H]ardness

- Joined

- May 11, 2005

- Messages

- 6,578

Was that really likely though given that other AMD cards play Doom well? Please tell me what else we learned other than, yeah both cards can crush Doom.

We learned/confirmed "plays Doom at high refresh rates, at high resolutions, at incredibly high settings in a way which hardcore gamers cannot distinguish from a 1080 Ti", under the direction of a person who is highly critical of PC equipment for a living.

Perhaps you knew this was a foregone conclusion, not everyone did though.

A lot of time, effort, and resources were put into this test. Considering that other AMD cards played Doom extremely well, I am disappointed that this was all we learned. I think using a more demanding game would have made the results more interesting.

GoodBoy

2[H]4U

- Joined

- Nov 29, 2004

- Messages

- 2,751

I like the idea of the blind tests, seemed to work out pretty well.

I don't know the functional differences between G-sync and freesync, if there are any.

The main thing is how much of the monitors' price difference is due to the sync tech. Maybe the panels were different tech too, I don't know.

But the main thing I take from the review is that the price premium on G-Sync isn't necessarily warranted, assuming it is the sole factor in the price difference. I'm sure nVidia wanted to earn as much as possible while the tech was proprietary. At this point they might as well open it up. It makes no sense to me that an LCD couldn't perform both types of sync... unless there really are some underlying tech differences. Would be interesting to learn more about that.

Some posters mention that one type works better when framerates drop; that could be worth another look. Isn't that when frame sync really comes into play anyway? So the frames aren't tearing at 120 fps... not really any major technical accomplishment. But if frame times are controlled through cooperation between GPU and LCD at lower FPS, and the result is smooth frame times, then this seems worthwhile. I seem to remember reading something along these lines years ago, probably in reference to the G-Sync tech. I haven't read anything about FreeSync. Hopefully it does the same thing, and if so, nVidia should just open up the G-Sync tech, or do whatever is needed to allow an LCD panel to support either. I don't like the idea that a panel would only support one or the other, as this locks you into buying a particular brand of GPU.


If you say so. I have not experienced this in my entire time using G-Sync without V-Sync. You should not be coming even close to the maximum refresh rate when using G-Sync, anyway. If you are, you should be using ULMB instead.

You start to get tearing at the upper range of frame-time variance (roughly 120+ FPS on a 144 Hz display) if you don't have V-Sync enabled. FYI, NVIDIA had V-Sync enabled automatically with G-Sync before people delved into the settings and demanded it be exposed in the driver. NVIDIA is on record saying that is how G-Sync was always intended to work. The option for V-Sync off was only added because people weren't capping with RTSS or in-game, which added a lot of input latency once the framerate went past the monitor's refresh rate (capping via the NV driver still added significant latency).

To be fair, yes, ULMB is ideal if you have 120+ FPS consistently (100 may be doable for some people) and if you're in the 300+ FPS range Fast Sync becomes an option. I have a particularly bright monitor so ULMB is tolerable but I still prefer it off, plus it's only applicable to a few games for me (e.g. Rainbow Six Siege). In any case, V-Sync on with G-Sync in the NVCP - but off in games - is the intended and best setting for G-Sync.
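The point about tearing at the upper end of frame-time variance can be illustrated with a short trace: even when the average framerate sits below the maximum refresh rate, individual frames can arrive faster than the panel's shortest refresh interval. The trace below is made-up illustrative data, not a measurement, and the tear/hold behavior noted in the docstring is a simplification of what this thread describes.

```python
from statistics import mean


def min_refresh_interval_ms(max_refresh_hz: float) -> float:
    """Shortest time the panel needs between refreshes."""
    return 1000.0 / max_refresh_hz


def early_frames(frametimes_ms, max_refresh_hz):
    """Indices of frames that arrive faster than the panel's fastest refresh.

    Simplified model (assumption): with G-Sync on and V-Sync off such a frame
    tears; with V-Sync on it is held until the panel can refresh.
    """
    limit = min_refresh_interval_ms(max_refresh_hz)
    return [i for i, ft in enumerate(frametimes_ms) if ft < limit]


if __name__ == "__main__":
    # Hypothetical frame-time trace averaging about 135 FPS on a 144 Hz panel.
    trace = [8.0, 7.5, 6.5, 8.3, 6.9, 7.1]
    avg_fps = 1000.0 / mean(trace)
    print(f"average {avg_fps:.0f} FPS, early frames at {early_frames(trace, 144)}")
```

Here two of the six frames come in under the ~6.94 ms limit of a 144Hz panel even though the average is only about 135 FPS.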

I am betting that a monitor could have both. But Nvidia won't allow their product to be put into a monitor that also supports FreeSync.

I also remember when they said this would add no more than $75 to the price of a monitor (and hinted at closer to $50).

I don't know the functional differences between G-sync and freesync, if there are any.

...

But the main thing I take from the review is that the price premium on the G-Sync isn't necessarily warranted.

In my experience - and there is a $125 difference between the FreeSync and G-Sync versions of my monitor (roughly) - G-Sync is better at the fringes (30-60, 120+ FPS on my 144 Hz display) and when properly set up also adjusts better to frame-time variances. There's also ULMB. Is it worth the premium? That's the big question. I could pay $250 for a wireless, wireless-charging mouse (G903 + pad) for essentially the same experience as a $50 DeathAdder Chroma, and 99% of people would say a $200 difference there is insane. So it is subjective but there is still at least some difference in experience. The fact that AMD is making this a "GPU + monitor" contest tells me everything I need to know about their angle; so, if you want the best, even if almost insignificantly for many people, you'll go NVIDIA + G-Sync, but it's painted as a rich man's game versus the value-oriented AMD + FreeSync option.

Nothing wrong with that, but at the end of the day, if I get a Pepsi when I asked for a Coke, my experience is lessened.
