The longer we wait, the higher the expectations are for a home run. If the launch were around the corner, people would be happier with at least a ground-rule double.

I had money in 2014 for a new GPU. AMD had nothing worth the cost and the Nvidia 9 series was coming. Waited to see what AMD responded with and was let down by the Fury stuff. 1080 came and was great, was hoping for a Ti but with no competition it won't come. Whichever is next from either vendor that has the best bang for my buck will be my upgrade. The games I mainly play are fine on my 7970 for now, though several I cannot run through Eyefinity anymore.

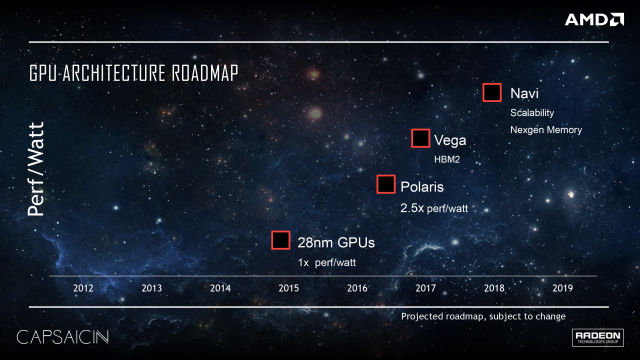

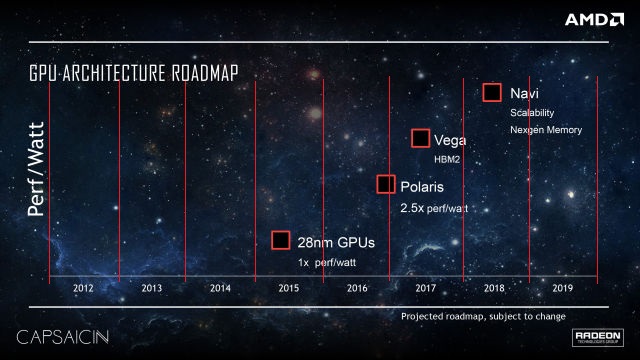

If you have to go now, pick a side and be happy, but if you can afford to wait.... Vega better be solid after the lengthy, teased wait, otherwise camp green will gain a lot more customers this cycle. Good for them, bad for the industry.

