
True 120Hz from PC to TV!!! (Successful Overclocking Of HDTV / Plasmas)

- Thread starter Mark Rejhon


Skakruk: You're still spewing that BS I see. It is not that noticeable in-game unless you're one of those people who are more interested in image artifacts than the game. Not to mention, LCDs have far worse problems (as most LCD TVs are PWM based) and OLED isn't affordable yet.

For the record, Samsung plasmas have better motion than Panasonic PDPs (but with elevated black levels).

Vega: Modern plasmas are extremely difficult to burn in. I leave static pictures on all the time. It's only an issue on LG plasmas.


Mark Rejhon

[H]ard|Gawd

- Joined: Jul 6, 2004

- Messages: 1,395

Skakruk: You're still spewing that BS I see. It is not that noticeable in-game unless you're one of those people who are more interested in image artifacts than the game.

Not necessarily

1. Viewing distance. Sitting at computer-monitor-proportional distances (3 feet from a 42" plasma) can make artifacts easier to see.

2. Sensitivity (see below) to the specific types of artifacts that plasma has, versus sensitivity to the specific types of artifacts that LCD has.

Some people are color blind (reportedly ~8% of men!). Some people are not.

Some people have better hearing. Some people have poorer hearing.

Some people are more sensitive to motion blur. Some people are not.

Some people get headaches from looking at a display. Some do not.

Some people are sensitive to intra-frame temporal effects (rainbow artifacts on DLP, subfield artifacts on plasma). Some are not.

Some people are more uncontrollably distracted by certain display artifacts. Some people are not.

So, if you're all for equality in the diversity of humanity: your sentence clearly assumes that someone is merely "interested" in artifacts, while some people uncontrollably see them as a distraction (not unlike a high-pitched noise screaming annoyingly into your ears while others can't hear the noise at all). It's THAT annoying to a small proportion of people. Some artifacts are THAT uncontrollably annoying to some human eyes, just like high-pitched coil whine. Or like a PWM-dimming headache that you get and others don't. Vision is NOT THE SAME among all humans.

For my eyes (when excluding colors), the LCD look is much, much more pure/natural than plasma. I am sensitive to the high-frequency elements of DLP and plasma (I can see DLP rainbow artifacts easily, and I can see plasma subfield artifacts easily). However, accounting for colors, plasmas are certainly better. This is not a lie for my own specific set of human eyes (and many others'), even if it is not true for your set of eyes. A lot of this comes down to individual eyes' preferences.


Chad_Thunder

Weaksauce

- Joined: Aug 16, 2013

- Messages: 83

Well, I have TestUFO working on the latest Opera, and I have a crazy discovery to share.


First test was tracking the blurtrail line by eye (Running 1440x1080p @ 100hz/100fps on TH-42PF30U)

Results

Pixels per second vs. blur width:

- 500 pps: 1 to 1.5 dots
- 600 pps: 1.5 to 2 dots
- 700 pps: 2.5 dots
- 800 pps: 2.5 to 3 dots
- 900 pps: 3 dots
- 1000 pps: 3.5 dots

*Swapping to a black line halves the blur width.

*At around 800 pps, the rear dot turns a pale yellow.

Here is the interesting bit

If your eye isn't moving, there is ZERO motion blur: the blurtrail, UFOs and every other graphic remain as sharp and clear as any static image at any speed, including 6400pps!

I can reproduce this even at 60Hz; the only purpose higher refresh rates serve is reducing eye-tracking-based blur.

elvn

Supreme [H]ardness

- Joined: May 5, 2006

- Messages: 5,287


Sample-and-hold blur exists and is a bane of displays. It is related to your eyes and their movement, which is why you have to use a pursuit camera to get an approximation of what the blur looks like to your eyes. In real-world screen usage, you are going to get sample-and-hold blur constantly in FoV-movement-based games (1st/3rd-person CGI world perspectives), unless you stare unblinkingly at one point in the middle of the screen and never even judder your eyes slightly at any angle, which is impractical, if even possible (I doubt it).

http://www.blurbusters.com/faq/lcd-motion-artifacts/

http://www.blurbusters.com/faq/oled-motion-blur/

The answer lies in sample-and-hold. OLED is great in many ways, but some of them are hampered by the sample-and-hold effect. Even instant pixel response (0 ms) can have lots of motion blur due to the sample-and-hold problem. Some newer OLEDs use impulse-driving in order to eliminate motion blur, but not all of them do.

Your eyes are always moving when you track moving objects on a screen. Sample-and-hold means frames are statically displayed until the next refresh. Your eyes are in a different position at the beginning of a refresh than at the end of a refresh; this causes the frame to be blurred across your retinas:

[Figure: sample-and-hold diagram 1 (Source: Microsoft Research)]

Vertical axis represents position of motion. Horizontal axis represents time.

Middle image represents flicker displays, including CRT and LightBoost.

Right image represents sample-and-hold displays, including most LCD and OLED.

The flicker of impulse-driven displays (CRT) shortens the frame samples, and eliminates eye-tracking-based motion blur. This is why CRT displays have less motion blur than LCDs, even though LCD pixel response times (1ms-2ms) are finally matching the phosphor decay times of a CRT (with medium-persistence phosphor). Sample-and-hold displays continuously display frames for the whole refresh. As a result, a 60Hz refresh is displayed for a whole 1/60th of a second (16.7 milliseconds).

[Figure: sample-and-hold diagram 2 (Source: Microsoft Research)]

Motion blur occurs on the PlayStation Vita OLED even though it has virtually instantaneous pixel response time. This is because it does not shorten the amount of time a frame is actually visible for; a frame is continuously displayed until the next frame. The sample-and-hold nature of the display enforces eye-tracking-based motion blur that is above-and-beyond natural human limitations.

An excellent web-based animation of sample-and-hold motion blur can be found via the Blur Busters UFO Motion Tests: Eye-Tracking Motion Blur Animation (View this link via a supported web browser on your LCD computer monitor or laptop LCD screen).

Solution to Motion Blur

The only way to reduce motion blur caused by sample-and-hold is to shorten the amount of time a frame is displayed for. This is accomplished by using extra refreshes (higher Hz) or via black periods between refreshes (flicker).
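A minimal Python sketch of the two remedies above, assuming an idealized square-wave display (each frame is either fully lit or fully dark, with instant pixel response). `frame_visible_ms` and `lit_fraction` are illustrative names, not from any tool. At 1000 pixels/sec, each millisecond a frame stays visible corresponds to roughly one pixel of eye-tracking blur.

```python
def frame_visible_ms(refresh_hz: float, lit_fraction: float = 1.0) -> float:
    """How long each frame stays visible (its persistence), in milliseconds.

    lit_fraction = 1.0 models sample-and-hold: the frame stays lit for the
    whole refresh. A smaller value models an impulse/strobed display that is
    dark for the rest of the refresh (hypothetical square-wave strobe; real
    phosphor or backlight decay curves are ignored).
    """
    if refresh_hz <= 0 or not 0.0 < lit_fraction <= 1.0:
        raise ValueError("refresh_hz must be > 0 and 0 < lit_fraction <= 1")
    return 1000.0 / refresh_hz * lit_fraction


if __name__ == "__main__":
    # Both remedies shorten persistence: more refreshes, or black periods.
    for label, hz, lit in [
        ("60Hz sample-and-hold", 60, 1.0),
        ("120Hz sample-and-hold", 120, 1.0),
        ("60Hz strobed, lit 25% of each refresh", 60, 0.25),
    ]:
        print(f"{label}: {frame_visible_ms(hz, lit):.2f} ms per frame")
```

In this idealized model, a 60Hz strobe lit for a quarter of each refresh has the same persistence as 240Hz sample-and-hold, which is why flicker can stand in for extra refreshes.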


I completely disagree that the only purpose of high hz (input+output) combined with high fps is reducing eye tracking based blur.


While I am very interested in blur reduction and optimally blur elimination, there are additional benefits to running high fps and high hz.


When I say "smoothness" I mean something separate from blur reduction. If I were using a general term for blur reduction I would use something like "clarity" or "clearness".


Smoothness to me means more unique action slices, more recent action going on in the game world shown: more dots per dotted line length, more slices between two points of travel per se, more unique and newer pages flipping in an animation booklet, pick your analogy. It means fewer "stops" in the action per second and more defined ("higher definition") animation/action flow, which provides greater aesthetic motion and can increase accuracy, timing, and reaction time.


Disregarding backlight strobing for a moment: as I understand it, where a strobe light in a room someone runs across would show blackouts, a typical LCD, rather than blacking out, just continues displaying the last "frozen" frame of action until it is updated. At 60Hz that is every 16.7ms, of course. At 120Hz with high fps, it would have shown a new state of (peek into) the room and run cycle 8.3ms sooner, instead of freeze-frame skipping over what would have been a new state at +8.3ms to the next state of the room and run cycle a full 16.7ms later. Everything displayed of the animated game world is updated twice as often (and twice as soon), which can increase accuracy, and, by providing more "dots per dotted line", makes movement transitions "cleaner"/aesthetically smoother, providing higher-definition movement and animation divided into 8.3ms updates. This goes hand in hand with blur reduction/elimination to make the entire experience a drastic improvement over 60Hz/60fps.
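A rough sketch of the "freeze-frame" timing described above, assuming fps equals Hz, refreshes start at t = 0, and each frame is held until the next refresh. The function names are hypothetical. `staleness_ms` reports how old the frozen frame on screen is at a given moment.

```python
import math


def frame_times_ms(refresh_hz: float, window_ms: float) -> list[float]:
    """Times (ms, rounded to 0.1) when a new frame appears within the window."""
    period = 1000.0 / refresh_hz
    count = int(window_ms // period) + 1
    return [round(i * period, 1) for i in range(count)]


def staleness_ms(t_ms: float, refresh_hz: float) -> float:
    """Age of the frame being held on a sample-and-hold display at time t_ms."""
    period = 1000.0 / refresh_hz
    return t_ms - math.floor(t_ms / period) * period


if __name__ == "__main__":
    print("60Hz updates: ", frame_times_ms(60, 33.4))
    print("120Hz updates:", frame_times_ms(120, 33.4))
    # At t = 10 ms, how old is the game-world state the viewer is looking at?
    for hz in (60, 120):
        print(f"{hz}Hz: frame on screen is {staleness_ms(10, hz):.1f} ms old")
```

At t = 10 ms, the 60Hz display is still showing the state from t = 0, while the 120Hz display already shows the state from t = 8.3 ms.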


Plasma TVs like the Panasonic VT50 are not fit to display even 60 FPS of fast motion, let alone higher. You get all sorts of weird stuff, like warping effects due to the way the subfields work. They're designed and optimized for 25-30fps Television/Cinema where panning speeds are strictly controlled. Even consoles are generally running at lower framerates and there's no rapid (twitch) panning thanks to the controller handicap. You feed these TVs 60fps of ultra smooth mouse-driven PC motion, and they're well out of their depth.

Add to that the retention, dithering, etc. etc. - I tried really hard to make Plasmas work as PC gaming monitors, but it didn't take me long to realise I was pissing in the wind. Increasing the refresh rate wouldn't have made it any more viable.

While I haven't tested the VT50 for PC use, almost none of that is true for the VT60.

It displays flawless blur-free 120hz if you put it in 3D mode (2D-3D conversion, or feed it 60hz side-by-side which turns into 120hz).

You can count the little windows on the castle at the fastest speed (Mark's moving photo http://www.testufo.com/#test=photo&photo=quebec.jpg&pps=3840&pursuit=0), and the picture looks about as crisp as LightBoost. It is also possible at 3000 pixels/s at 60Hz, although it's certainly not perfectly crisp.

It is, however, far better at motion @60hz than any 60hz IPS/VA out there.

Seeing dithering simply means you are sitting too close. Minimum distance is ~2m for a 50".

The only issue is the little rainbow artifacts (which I perfectly understand could be a dealbreaker for some), but again, they are very rarely visible unless you are far too close to the screen.

That, and the 60Hz flicker (due to short persistence) and general 60Hz choppiness, until/if the refresh rate can be raised.

Burn in seems about as likely as a CRT these days, as in if you really really mistreat it, it can happen.

I haven't even seen any retention yet on mine.

Michaelius

Supreme [H]ardness

- Joined: Sep 8, 2003

- Messages: 4,684

The real deal breaker on those plasmas is that they don't make 30" variants.

Nenu

[H]ardened

- Joined: Apr 28, 2007

- Messages: 20,315

It's not a deal breaker if you can get a room that has enough space to sit further away.

It's more comfortable and better for your eyes too.

Chad_Thunder

Weaksauce

- Joined: Aug 16, 2013

- Messages: 83

elvn said: I completely disagree that the only purpose of high hz (input+output) combined with high fps is reducing eye tracking based blur.

Oops, I meant it's the only solution, not the only purpose.

How did you go with gaming so far? FYI, there should be no dithering on Cinema/True Cinema/Pro1/Pro2 picture modes.

Gaming has been fine on it, but I have a hard time standing 60fps for fast motion.

Haven't had much time to experiment more with timings since I have been very busy lately.

I've mainly watched movies/series on it; the 120Hz 3D is excellent (better than 3D LightBoost, digital cinema, or anything else I've seen), and movies and series are great in 60fps 2D as well.

I have zero issues except the 60hz choppiness for gaming and the 60hz flicker for whites/bright colors. The quality in all other regards is, as most reviewers also seem to agree, reference quality.

Even if I can't get the refresh rate up, I'm still very happy with it for movies/series. We will see.

Chad_Thunder

Weaksauce

- Joined: Aug 16, 2013

- Messages: 83

I know what you mean. I simply run 1440x1080 for certain games because 100Hz is a big improvement for shooters and the like. I'm holding out for a VT70 with DisplayPort, but the future of Panasonic plasma is uncertain with only 2% market share or something.

Mark Rejhon

[H]ard|Gawd

- Joined: Jul 6, 2004

- Messages: 1,395

"Seeing dithering simply means you are sitting too close. Minimum distance is ~2m for a 50"."

That's television viewing distance. Not suitable for some of us.

Common proportional view distance is approximately 1:1 for a primary computer monitor.

24" monitor = common desktop view distance of ~24" (e.g. 24" monitors)

50" monitor = common desktop view distance of ~50" (e.g. HDTV mounted on wall at back of deep desk)

etc.

In fact, many people sit closer to the SEIKI 50" (wall-mounted at the back of their desk, 3 feet away from their head), because of the SEIKI's pixel density. From a computer-monitor usage perspective, where you want to use it as a primary monitor for everything, I don't necessarily buy the "too close" argument for every use case; this is why plasma becomes flawed as a computer monitor. You're forced to sit further back just to gain the full plasma benefits, which is unacceptable for many who like to use Visual Studio, Photoshop, and videogames on the same TV panel as a computer monitor. As a result, for people who are sensitive to plasma artifacts, plasma won't let you sit close enough comfortably without seeing plasma artifacts. It automatically disqualifies plasma for many people who want to scale the angular coverage proportionally to a computer monitor. Plasma is great when it fits your use case. For desktop usage, I find close viewing of LCD motion blur (non-LightBoost) more comfortable than close viewing of plasma artifacts. Your mileage will vary based on your use case.


When people sit that close to a 50" 4k monitor, it is a completely different scenario.

They are effectively using that 50" 4k in the same way and the same PPI/viewing distance as you would use 4x 25" monitors mounted together in a stand, it can't be compared.

When comparing a 50" 1080p to a 24" 1080p, it's a different thing.

The 50" has more than four times the area.

I am currently using only the 50" for a while to see how it works, and sitting 50" away is way too close.

Around 2m for a 50" seems to give the same experience as using a 24" at normal viewing distance.
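To compare these viewing distances in angular terms, here is a small sketch assuming flat 16:9 panels viewed head-on from the center. The function names and the 1920/3840 horizontal pixel counts (1080p vs. 4K) are illustrative assumptions, and pixels per degree is averaged across the screen width.

```python
import math


def screen_width_in(diagonal_in: float, aspect_w: float = 16, aspect_h: float = 9) -> float:
    """Physical width of a flat panel from its diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)


def horizontal_fov_deg(diagonal_in: float, distance_in: float) -> float:
    """Horizontal angle the screen covers, viewed centered and head-on."""
    half_width = screen_width_in(diagonal_in) / 2
    return math.degrees(2 * math.atan(half_width / distance_in))


def pixels_per_degree(diagonal_in: float, distance_in: float, horizontal_px: int = 1920) -> float:
    """Average horizontal pixels per degree of visual angle."""
    return horizontal_px / horizontal_fov_deg(diagonal_in, distance_in)


if __name__ == "__main__":
    two_m_in = 2000 / 25.4  # 2 m expressed in inches
    cases = [
        ('24" 1080p at 24"', 24, 24, 1920),
        ('50" 1080p at 50"', 50, 50, 1920),
        ('50" 4K at 50"', 50, 50, 3840),
        ('50" 1080p at 2 m', 50, two_m_in, 1920),
    ]
    for label, diag, dist, px in cases:
        print(f"{label}: {horizontal_fov_deg(diag, dist):.1f} deg wide, "
              f"{pixels_per_degree(diag, dist, px):.1f} px/deg")
    print(f'Area ratio, 50" vs 24": {(50 / 24) ** 2:.2f}x')
```

Under these assumptions, any panel at a 1:1 diagonal-to-distance ratio covers the same horizontal angle, a 50" 4K panel at 1:1 has twice the pixels per degree of a 50" 1080p panel, and a 50" panel at 2m covers roughly the angle a 24" panel does at about 38" (2m × 24/50).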


Of course it entirely depends on the user. I had to wait for the phosphor trails and flicker to be reduced before I could own a plasma. I was entirely happy with LCD until PWM trailing became an issue (along with uniformity, elevated black level and viewing angle issues due to LED backlighting). Based on my reviews and my colleagues', I feel that LCD TVs haven't improved since 2010 (and in some cases, they have worsened). Back in 2010, I reviewed a 40C580 CCFL-LCD by Samsung. It retailed for ~£380 (a low-end model) and had an ANSI black level of 0.03 cd/m2 (with peak brightness set to 120 cd/m2). To this day, we have yet to review an LCD TV with such a black level. In fact, some have risen as high as 0.07/0.08 cd/m2. Viewing angles have also worsened (possibly due to LED backlighting) and motion hasn't improved at all. Without MCFI, LCDs are only capable of 300 lines of motion. It seems manufacturers have stopped improving LCD TVs and are focusing on design, cost and features. Considering money is being diverted to OLED and low-cost, high-efficiency LCD, I guess this shouldn't be a surprise.

Panasonic plasma is definitely not ideal for PC gaming. Aside from the obvious issues, there's also the 1.5-2 frame lag. My statement was mainly aimed at Skakruk, who seems to think warping is a massive issue.

PS: Samsung plasma could be ideal, since it has very clear motion and very little flicker. Its processor is questionable, though.

I don't quite remember, but I think this was the muppet who claims to be a reviewer, yet has all the discernment of my grandmother when it comes to identifying flaws in the displays allegedly being reviewed. What next, shall we have the hearing impaired reviewing Hi-Fi gear?

Newsflash: This is Hardforum, an enthusiast website. Generally, you can expect the people who post here have a keen interest in things like image artefacts.

I never claimed to be a reviewer. I do actually review for HDTVtest. Here's a little teaser:

http://www.hdtvtest.co.uk/about.php

http://www.hdtvtest.co.uk/news/toshiba-32rl953b-40rl953b-201208242124.htm

http://www.hdtvtest.co.uk/news/panasonic-tx-l42e5b-l32e5b-201207131935.htm

http://www.hdtvtest.co.uk/news/lg-47lw550t-42lw550t-201107081247.htm

Every display has its faults. Some directly impact the quality of viewing, some are minor. Warping falls under minor. The effect has to be looked for in order to be seen. In other words, you have to know when and where it would occur and track its movement.

I've played countless games on my 42G30 (which has far more flaws than the 50 and 60 series) and I haven't seen warping during gameplay (60p) or when viewing high-motion video. There are occasions where distortions can occur, but it's not something I would personally fuss over (especially knowing that the alternatives have far more issues).

Mark Rejhon

[H]ard|Gawd

- Joined: Jul 6, 2004

- Messages: 1,395

Chad_Thunder said: Well I have testufo working on the latest Opera and I have some crazy discovery to share

Actually, I'm already familiar with it.

Chad_Thunder said: If your eye isn't moving there is ZERO motion blur, the blurtrail, UFOs and every other graphic remains as sharp and clear as any static image at any speed including 6400pps!

Correct.

That's how I created www.testufo.com/eyetracking

It's proof that the speed of LCD GtG pixel transitions isn't the limiting factor on modern LCD panels; the sample-and-hold effect is.

Modern LCDs have GtG transitions that are a tiny fraction of a refresh. The remainder of the motion blur is forced upon your eyes by eye-tracking across high-persistence frames (scientific references).

The sample-and-hold effect is always crystal clear if you don't move your eyes, but creates motion blur when you move your eyes. Your eyes are continuously moving, even while static frames are displayed for milliseconds at a time. Your eyes are in a different position at the beginning of a refresh than at the end of a refresh, causing the statically-displayed frames to be blurred across your retinas.

Long-persistence phosphor creates a sample-and-hold effect. A plasma with 5ms of phosphor decay will generate approximately 5 pixels of motion blur during 1000 pixels/second motion, as an example. The phosphor decay curve does soften this somewhat, as eye-tracking motion blur becomes linear-style (brief strobes) or gaussian-style (gradual strobe with fade-in/fade-out). Some CRT tubes have ultrafast short-persistence phosphor that mostly decays in only 0.1 milliseconds. That means ultrafast eye motion is as clear as a static image, with no perceptible motion blur (~0.1 pixel of blurring during 1000 pixels/sec would not be noticeable).
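To illustrate how a decay curve (rather than a hard on/off hold) turns into a softened trail, here is a sketch under a loudly hypothetical model: one flash per refresh followed by a single-exponential decay with time constant `tau_ms`. Real plasma subfield drive and CRT phosphors are more complex, and the tau values below are illustrative only. Under this model, the trail length depends on which brightness cutoff you pick, which is one reason millisecond-to-pixel figures for phosphor displays are approximate.

```python
import math


def trail_profile(tau_ms: float, speed_px_per_s: float, length_px: int) -> list[float]:
    """Relative brightness of the smear trailing an eye-tracked object, per pixel.

    Hypothetical model: one flash followed by I(t) = exp(-t / tau_ms).
    While the eye tracks at speed_px_per_s, light emitted t ms after the
    flash lands speed * t / 1000 pixels behind the object on the retina,
    so the trail's brightness profile mirrors the decay curve.
    """
    px_per_ms = speed_px_per_s / 1000.0
    return [math.exp(-(x / px_per_ms) / tau_ms) for x in range(length_px)]


def trail_length_px(tau_ms: float, speed_px_per_s: float, cutoff: float = 0.5) -> float:
    """Pixels until the trail fades to `cutoff` of peak brightness (0 < cutoff < 1)."""
    return speed_px_per_s / 1000.0 * tau_ms * math.log(1.0 / cutoff)


if __name__ == "__main__":
    for label, tau in [("slow phosphor, tau = 5 ms", 5.0),
                       ("fast phosphor, tau = 0.1 ms", 0.1)]:
        half = trail_length_px(tau, 1000)
        tenth = trail_length_px(tau, 1000, cutoff=0.1)
        print(f"{label}: 50% at {half:.2f} px, 10% at {tenth:.2f} px")
```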

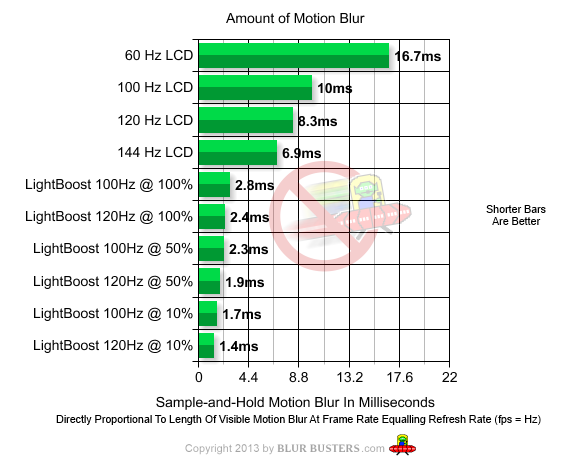

This is exactly how the graphic works at 60Hz vs 120Hz vs LightBoost

When doing a motion at 1000 pixels per second, the milliseconds translates to pixels of motion blur (1 pixel = 1 millisecond during 1000 pixels/sec). TestUFO uses 960 pixels/sec as the default target motion speed, so this is very close to the easy "1 ms = 1 pixel". Therefore, this graph means approximately (+/- 0.5 pixel):

60Hz LCD = ~16.5 pixels of motion blurring

100Hz LCD = ~10 pixels of motion blurring

120Hz LCD = ~8 pixels of motion blurring

144Hz LCD = ~7 pixels of motion blurring

LightBoost 100Hz @ 100% = ~3 pixels of motion blurring

LightBoost 120Hz @ 100% = ~2.5 pixels of motion blurring

LightBoost 100Hz @ 50% = ~2.5 pixels of motion blurring

LightBoost 120Hz @ 50% = ~2 pixels of motion blurring

LightBoost 100Hz @ 10% = ~2 pixels of motion blurring

LightBoost 120Hz @ 10% = ~1.5 pixels of motion blurring

It's similar to photography: longer shutter speeds (longer strobe flashes) create more motion blur (photograph vs. display) with a moving sensor (moving camera versus moving eyeball). The motion blur mathematics is identical.

This is quite obvious, since it is consistent with what is seen at www.testufo.com/ghosting at 960 pixels/sec

The 100Hz LightBoost example would be just very, very slightly blurrier than the last photo above (one added pixel of motion blur, maximum), but much sharper than the 120Hz middle photo. The 144Hz non-LightBoost (7 pixels of blur) would be just very, very slightly sharper than the 120Hz middle photo (8 pixels of blur). Just view www.testufo.com/ghosting; the motion blurring you see will be very similar to one of the above photos. (Different monitor response curves and overdrive settings will affect things such as ghosting and coronas, so your monitor may not match my monitor and overdrive settings exactly, but the sample-and-hold motion blur is unaffected.)

Chad_Thunder said: I can reproduce this even at 60hz, the only purpose higher refresh rates serve is reducing eye-tracking based blur

Correct.

The display is often contributing additional (often unwanted) eye-tracking motion blur that is above-and-beyond natural human limitations. So people like us, who dislike motion blur, want to eliminate display-enforced eye-tracking-based motion blur where possible.

The ideal display has EXACTLY the same motion clarity during fast-moving images, and during static images. With LightBoost=10% and VSYNC ON during framerate=Hz motion, fast motion has darn near ZERO motion blur (almost unnoticeable), like a piece of paper moving past your face and your eyes tracking it. No display-enforced eye-tracking-based motion blur caused by the sample-and-hold effect of finite framerates. Basically, to reach Holodeck perfection, you want a display to have less forced unwanted eye-tracking motion blur (above-and-beyond human eye tracking limitations/saccade inaccuracies), so that a panning motion on a display looks exactly the same as physical panning (e.g. piece of paper moving sideways in front of you). Above a certain limit, you can't track fast motion anymore, but a "Holodeck" display should never force unwanted eye-tracking motion blur upon you.

A good Valve Software article by Michael Abrash discusses eye-tracking motion blur; you will now understand it better with your new enlightenment.

Michael Abrash: Down the VR rabbit hole: Fixing judder

Imagine wearing VR goggles. Imagine turning your head. You get one big display motion blur mess. You can't stare at things while you're turning your head. You can read text on this forum while nodding your head, but you can't read text on a wall in virtual reality while nodding your head, because of the display motion blur on the VR goggles' screen. It can become nauseating and headache-inducing. It's not Holodeck perfection. You need either 1000fps or strobing to fix the VR goggles' panning motion blur problem. See, you _have_ to fix tracking motion blur for many use cases (FPS gaming, VR goggles, sim racing, and many other situations where motion blur is heavily unwanted).

The perfect display is the infinite-framerate, infinite-resolution display, so there are no strobe effects, no flicker, no sample-and-hold effect (externally-forced eye-tracking motion blur), no aliasing effects, no pixellation effects, etc. A perfect zero-motion-blur display showing fast panning motion (e.g. www.testufo.com/photo ...) should be as perfectly clear as a display being physically pushed smoothly sideways, or as turning while holding a tablet/book in your hands (as John Carmack demonstrated on YouTube at 12min00s). But such displays will probably never happen in our lifetimes, so flicker displays (e.g. CRT, plasma, LightBoost) are a good compromise for motion blur elimination during moving images.

ChadThunder: BlurBusters XP Points +10 -- LEVEL UP!

Enlightened: Now understands the concept of eye tracking motion blur: the sample-and-hold effect


Mark Rejhon

[H]ard|Gawd

- Joined: Jul 6, 2004

- Messages: 1,395

"I never claimed to be a reviewer. I do actually review for HDTVtest. Here's a little teaser:"

Cool, I didn't realize that.

I've talked to David Mackenzie, Vincent Teoh and moderator FoxHounder, who are all fans of Blur Busters now (sent a few PMs), plus a few posts. Here's one of my articles that I posted about my tests of Sony Motionflow Impulse on the HDTV Test Forums:

http://forums.hdtvtest.co.uk/index.php?topic=7938.0

BTW, they've (HDTVTest) asked for a unit of my upcoming Blur Busters Input Lag Tester (three-flashing-square method, supports all resolutions and refresh rates; 4K and 120Hz compatible), expected during Winter 2014.

Mark Rejhon

[H]ard|Gawd

- Joined: Jul 6, 2004

- Messages: 1,395

"Without MCFI, LCDs are only capable of 300 lines of motion."

Sony Motionflow Impulse does not use MCFI.

It's 100% pure strobe based on the KDL55W905A, adds less than one additional frame of input lag, and is available in Game Mode!

It returns over 1000 lines of motion resolution on the motion test pattern on the KDL55W905A.

Pure strobe backlights allow breaking the "300 lines of motion resolution" barrier on LCD, without using MCFI!

You may want to be aware that "lines of motion resolution" isn't future-proof.

Standardizing Motion Resolution: "Milliseconds of motion resolution" (MPRT) better than "Lines of motion resolution"

My most refined post on this is the following:

spacediver said: I was thinking more along the lines of devising a test where, instead of deriving the MPRT/MER (which I still don't have a fluent grasp of!), you just find the threshold of that particular test. But in a way that can be measured more accurately, and can be recorded in a number that is test-pattern independent.

One simpler method to interpret MPRT ("Moving Picture Response Time") is that it is simply a reinterpretation of "lines of motion resolution".

Firstly, you can even begin by just using today's "lines of motion resolution" test pattern for now.

-- If a display's known MPRT is 16.7ms, and that display's known "lines of motion resolution" (for one specific Blu-ray test pattern at one specific motion speed) is "300 lines of motion resolution", then it's easy to approximately translate between MPRT and "lines of motion resolution" for that specific test pattern:

MPRT 16.67ms = 300 lines of motion resolution

MPRT 8.33ms = 600 lines of motion resolution

MPRT 4.16ms = 1200 lines of motion resolution

(If the test pattern caps out at 1200 lines of motion resolution, its ability is limited. Thus, for that specific pattern, you can't measure MPRT better than about 4ms, which happens to be conveniently near time of plasma phosphor decay of a very fast plasma phosphor.)

-- Now, if you had a different Blu-Ray pattern where it measures 200 on a fast 60Hz LCD in Game Mode (16.7ms MPRT), with different resolutions, caps out at 1080 lines, and runs at a different motion speed, you might have conversion numbers:

MPRT 16.67ms = 200 lines of motion resolution

MPRT 8.33ms = 400 lines of motion resolution

MPRT 4.16ms = 800 lines of motion resolution

MPRT ~3ms = 1080 lines of motion resolution

(caps out here)

Then suppose you've got a 4K video file whose lines of motion resolution go all the way to 2000 (denser lines), which creates this example:

MPRT 16.67ms = 500 lines of motion resolution

MPRT 8.33ms = 1000 lines of motion resolution

MPRT 4.16ms = 2000 lines of motion resolution

(caps out here)

Now you begin to realize the problem: comparing different "lines of motion resolution" benchmarks becomes problematic!
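The scaling in the three tables above can be sketched in a few lines of Python. This is a minimal sketch, assuming that "lines of motion resolution" for a given pattern scale inversely with MPRT (as the tables do) and are clipped at the pattern's densest line set; the function names are hypothetical and not part of any test-pattern tool.

```python
# Hypothetical helpers: translate between MPRT and "lines of motion resolution"
# for ONE specific test pattern, calibrated from a single reference point.
# Assumption: lines scale inversely with MPRT, and the pattern cannot report
# more lines than its densest line set (the cap).

def lines_from_mprt(mprt_ms, ref_mprt_ms, ref_lines, cap_lines):
    """Estimated lines of motion resolution, clipped at the pattern's cap."""
    if mprt_ms <= 0:
        raise ValueError("MPRT must be positive")
    return min(ref_lines * ref_mprt_ms / mprt_ms, cap_lines)


def mprt_from_lines(lines, ref_mprt_ms, ref_lines, cap_lines):
    """Inverse mapping. Returns (mprt_ms, at_cap); a reading at the cap
    only gives an upper bound on the true MPRT."""
    if lines <= 0:
        raise ValueError("lines must be positive")
    return ref_mprt_ms * ref_lines / lines, lines >= cap_lines


# The three example patterns: (lines at 16.67ms MPRT, cap)
PATTERNS = {
    "pattern A": (300, 1200),
    "pattern B": (200, 1080),
    "4K pattern": (500, 2000),
}

for name, (ref_lines, cap) in PATTERNS.items():
    readings = [round(lines_from_mprt(m, 16.67, ref_lines, cap))
                for m in (16.67, 16.67 / 2, 16.67 / 4, 2.0)]
    print(name, readings)
```

Fed the same 2ms MPRT, the three patterns report 1200, 1080 and 2000 "lines" respectively, purely because of where each one caps out.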

-- MPRT is test-pattern independent

-- MPRT is resolution independent

-- MPRT doesn't cap out. There's no upper or lower bound.

You can see, then:

-- MPRT is a more apples-to-apples comparison.

-- MPRT is future-proof.

-- MPRT is more standardizable.

Also, for MPRT we already know scientifically:

-- 1ms of MPRT equals 1 pixel of motion blur for every 1000 pixels/second of motion

-- Example: That means 6ms of MPRT at 500 pixels/sec creates 3 pixels of motion blur

-- Example: That means 9ms of MPRT at 2000 pixels/sec creates 18 pixels of motion blur
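The rule of thumb is a single multiplication; a minimal Python sketch (the function name is illustrative only) reproduces both worked examples:

```python
def motion_blur_px(mprt_ms, speed_px_per_s):
    """Width of the motion blur trail in pixels for eye-tracked motion.

    Rule of thumb: 1ms of MPRT gives 1 pixel of blur
    for every 1000 pixels/second of motion."""
    return mprt_ms * speed_px_per_s / 1000.0


print(motion_blur_px(6, 500))    # 3.0  (first example)
print(motion_blur_px(9, 2000))   # 18.0 (second example)
```

Nothing in the function depends on the test pattern or the display resolution, only on persistence and motion speed, which is the resolution-independence argued for above.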

Subjective versus Objective

-- MPRT can optionally be used with a pursuit camera.

-- Obviously, various imperfections such as temporal dithering, ghosting and rise/fall times will come into play, but displays keep getting better, and are now so good that measured motion blur very accurately matches MPRT, especially on several display technologies.

-- For example, LCD, OLED and DLP work great with the human-eye-based MPRT test, and the subjectively observed numbers closely agree with the objectively measured numbers in these situations!

-- CRT and plasma are slightly more complicated, but when I run the numbers using "50% rise-fall" cutoff points, the objective and subjective numbers apparently come into closer agreement! The question is whether this is appropriate/good enough (i.e. non-controversial).
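The post doesn't spell out how the "50% rise-fall" cutoff is computed. One plausible reading, sketched below as an assumption, is to measure light output over one refresh (e.g. with a photodiode) and count how long it stays at or above 50% of its peak. For an idealized exponential phosphor decay with time constant tau, that works out to tau * ln(2). The 3ms tau, the sampling interval and both curves are made-up illustration data, not measurements.

```python
import math


def persistence_ms(samples, dt_ms, threshold=0.5):
    """Time (ms) the light output stays at or above threshold * peak.

    samples: light output over one refresh, one sample every dt_ms."""
    peak = max(samples)
    if peak <= 0:
        return 0.0
    lit = sum(1 for s in samples if s >= threshold * peak)
    return lit * dt_ms


DT_MS = 0.01                        # hypothetical sampling interval
N = int((1000 / 60) / DT_MS)        # samples in one 60Hz refresh

# Idealized square strobe: 2ms flash (200 samples), then dark.
square = [1.0] * 200 + [0.0] * (N - 200)

# Idealized phosphor: exponential decay with an assumed 3ms time constant.
TAU_MS = 3.0
phosphor = [math.exp(-i * DT_MS / TAU_MS) for i in range(N)]

print(persistence_ms(square, DT_MS))     # about 2.0
print(persistence_ms(phosphor, DT_MS))   # about TAU_MS * ln(2), i.e. ~2.08
```

A square pulse scores its full width, while a decaying phosphor only scores the part of its tail above half brightness, which is one way the CRT/plasma numbers could be brought onto the same scale as LCD strobes.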

MPRT (full name "Moving Picture Response Time") was originally invented for LCDs, but it is not properly used by monitor manufacturers, because MPRT gives LCDs terrible numbers even after their pixel transitions became far faster than 16ms. MPRT is *not* a GtG transition measurement. Monitor manufacturers instead measure the time it takes for a pixel to transition (GtG), rather than the duration of the sample-and-hold effect, completely ignoring MPRT. However, it's apparently a good standard (the MPRT standard was mentioned to me in an email from no less than Raymond Soneira of DisplayMate); I was trying to find a standard way of measuring motion blur, and that was one of them. I have grown to like the standard very much, and I have learned quite a lot in the last few months, gaining a more intimate understanding of motion blur. Although Raymond and I may have different approaches to motion testing, MPRT is a great standard that deserves a closer look. The MPRT standard is applicable to all displays, not just LCDs, and even retroactively to CRTs. Yes, it's problematic, but no more problematic than the concept of "contrast ratio" (given human vision's limited dynamic range, ambient light interfering with contrast ratio, display non-uniformity, and so on). I argue that this decade is a good time for the industry to begin considering moving to the MPRT standard (even if old test patterns have to be recycled for now).
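The GtG-versus-MPRT distinction can be illustrated with an idealized model, which is an assumption rather than the MPRT standard's actual measurement procedure: on a sample-and-hold display each frame stays lit for the whole refresh period, so persistence is roughly the frame time no matter how fast the pixels transition. The GtG figures below are hypothetical.

```python
def sample_and_hold_mprt_ms(refresh_hz):
    """Idealized MPRT of a sample-and-hold display: the frame stays lit for
    the whole refresh period. Pixel transition time (GtG) is ignored, which is
    a reasonable approximation only when GtG is much shorter than a refresh."""
    return 1000.0 / refresh_hz


# Two hypothetical 60Hz LCDs with very different advertised GtG figures.
for advertised_gtg_ms in (2.0, 5.0):
    mprt = sample_and_hold_mprt_ms(60)
    print(f"GtG {advertised_gtg_ms}ms -> MPRT ~{mprt:.2f}ms")

print(f"120Hz sample-and-hold -> MPRT ~{sample_and_hold_mprt_ms(120):.2f}ms")
```

Both 60Hz panels land at about 16.67ms, which is why a fast GtG number says little about sample-and-hold motion blur.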

To keep things simple, makers of Blu-ray test patterns can also list MPRT numbers alongside the "line density" numbers. That's the simple way, without needing to invent new patterns (we still have the capping-out disadvantage, but at least we're no longer locked to a specific test pattern).

Okay, does that make better sense? Now, moving on:

MPRT is measurable both electronically and by human vision. That's what makes it so promising as a standardized, universal motion blur measurement, much like a contrast ratio measurement (as subjective as contrast ratio measurements can be, with all the complications I've already explained). There are superior ways to measure MPRT than using a "lines of motion resolution" test pattern. That's what some of my discussions in this thread have been about.

Hopefully this de-mystifies MPRT a little bit.

Basically this thread is simply talking about standardizing motion blur measurement.

To the point where it's far easier to get subjective measurements to agree closely with objective measurements.

With no bias to a specific display technology.

And about the arbitrariness of "lines of motion resolution":

spacediver said: Can you explain what "lines of motion resolution" is? Before this thread, I'd never heard of it, and I can't seem to find an answer on Google. I imagine it's something about how many moving lines you can have before they blend into each other?

Ok, I thought you knew before you read this thread.

Let Me Google That For You.

Search: "1080 lines of motion resolution" -avsforum (excludes AVSFORUM posts)

Search: "1200 lines of motion resolution" -avsforum (excludes AVSFORUM posts)

Examples:

- C|Net Review of HDTV measuring motion blur by "lines of motion resolution"

- HDGuru talks about motion blur by "lines of motion resolution"

- HDTV Magazine: Panasonic Viera touting "lines of motion resolution"

Hundreds of home theater magazines sitting here, blabbing about "lines of motion resolution" in their HDTV reviews. Ugh.

Mainstream reviewers are still using "lines of motion resolution" which is not future-proof [you can't easily compare 1080p blur vs 4K blur], is arbitrary, and is not apples-vs-apples [it's very test pattern specific].

Bollocks. How do you think I discovered the bloody issue in the first place? Did I go scanning the set for a phenomenon I didn't even know existed? No. I was playing a game and noticed the vertical boards on a picket fence were curved during pans. Later, I saw straight pillars warping in the same way in another game. Then I noticed that a bright slab of rock on a dark background appeared to be floating when the scene was in motion. Then in F.E.A.R, a game full of sharp contrasts and rich shadows, I saw whole scenes appearing warped/disjointed. THEN, and only then, did I decide to try and figure out the cause. At this point I was ignorant of the mechanism of subfields.

Maybe you're more sensitive to subfield rendering. That doesn't mean everyone will experience the same phenomenon. The same applies to phosphor trailing and noise.

In any case, you should make it clear that its effects vary from individual to individual instead of stating it as a universal problem.

Mark Rejhon

[H]ard|Gawd

- Joined

- Jul 6, 2004

- Messages

- 1,395

Blur Busters Master Listing of Known Ultra High Efficiency Strobe Backlights

Confirmed 100% MCFI-free low-latency (adds under 1 frame) method of breaking the "300 lines of motion resolution" barrier on LCD's.

1. nVidia LightBoost -- www.blurbusters.com/lightboost/howto

2. Samsung "3D Mode" -- www.blurbusters.com/zero-motion-blur/samsung

3. Eizo's FDF2405W blur reduction mode -- page 15 of manual describes LightBoost-style strobing

4. Sony's Motionflow Impulse -- www.blurbusters.com/sony-motionflow-impulse

Monitor and television reviewers who are unfamiliar with low-latency, MCFI-free, CRT-clarity LCDs need to begin studying the science of strobe backlights, as these are gradually becoming more common, with more and more models coming onto the market, including some officially advertised as motion blur reduction backlights. Ultra-high-efficiency strobe backlights outperform yesterday's scanning backlights, which were often gimmicks (inefficient motion blur reduction); TFTCentral explains why strobe backlights outperform scanning backlights, especially for game/computer use.

Mark Rejhon

Correct, Neilo -- scientifically, MCFI-free methods of eliminating motion blur tend to add more visible flicker.

Generally, for MCFI-free operation, motion blur reduction is unfortunately proportional to the amount of flicker (whether human-visible or only seen with a high-speed camera).

Variables: assuming a fixed refresh rate (e.g. 60Hz) without MCFI, it's an unavoidable flicker-versus-motion-clarity tradeoff. You can balance it pretty well by using a slightly longer flicker that's not human-visible (softer phosphor decay). Very good plasmas use approximately 5 milliseconds of phosphor decay, which is just about "perfect" for common video motion speeds, but still limiting for high-end enthusiast gaming.

What this means is that a finely-tuned strobe backlight (long flashes, with capacitor decay to simulate phosphor fadeout) would make an LCD roughly equal to plasma in motion resolution. But it's also easy to flash a backlight more briefly, to get less motion blur than plasma.
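The flicker-versus-clarity tradeoff at a fixed refresh rate can be put into numbers with a simplified model (an assumption, not a measurement): treat the flash as a square pulse, use its length as the persistence, and use the fraction of each refresh that the backlight is lit (duty cycle) as a rough proxy for how much flicker the strobing adds. The 5ms row stands in for the plasma-like phosphor decay mentioned above; 1920 px/s is the speed of the TestUFO panning map test.

```python
def strobe_tradeoff(refresh_hz, flash_ms, speed_px_per_s):
    """Return (duty_cycle, blur_px) for an idealized square strobe flash.

    duty_cycle: fraction of each refresh the backlight is lit (flicker proxy).
    blur_px: blur trail width at the given eye-tracked motion speed."""
    frame_ms = 1000.0 / refresh_hz
    if not 0 < flash_ms <= frame_ms:
        raise ValueError("flash must fit inside one refresh period")
    duty_cycle = flash_ms / frame_ms
    blur_px = flash_ms * speed_px_per_s / 1000.0
    return duty_cycle, blur_px


# 60Hz, panning at 1920 px/s
for flash_ms in (1000 / 60, 5.0, 2.0, 1.0):   # first entry = no strobing
    duty, blur = strobe_tradeoff(60, flash_ms, 1920)
    print(f"{flash_ms:5.2f}ms lit: {duty:4.0%} duty cycle, ~{blur:4.1f}px of blur")
```

Halving the flash halves the blur but also halves the lit fraction, which is the proportionality described above; a real strobe backlight would also need a brighter flash to keep the same average brightness.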

No plasma displays can successfully pass the TestUFO Panning Map Test at 1920 pixels/second

www.testufo.com/photo#photo=toronto-map.png&pps=1920

Only a few displays can. (e.g. CRT displays, LightBoost strobe-backlight displays, Eizo FDF2405W professional strobe-backlight LCD, etc).

This means there exist a select few LCDs that actually create less motion blur than a plasma (without needing to use MCFI).

Statistically, it was once measured that the average motion speed of video was approximately 6.5 pixels per frame (this is how the FPD Benchmark came up with the motion speed of some of its tests). However, videogames don't have built-in camera motion blur (making display motion blur easier to see), videogames are often viewed closer than television (making motion blur easier to see), and videogames move much faster (20-30 pixels per frame during FPS games, which at the high end approaches a full 1080p screen width per second at 60Hz during fast strafing/turning), making motion blur easier to see. So the bar for motion blur is much higher, and motion blur stands out far more. That's when strobe backlights really sing their praises, unlike when watching video material.
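Converting those motion speeds into pixels per second, and then into blur with the 1ms-per-1000px/s rule, shows why games raise the bar. A minimal sketch, assuming 60Hz and a 1920-pixel-wide 1080p screen; the 5ms figure is the plasma-like persistence mentioned earlier in the post, and the helper names are illustrative.

```python
REFRESH_HZ = 60
SCREEN_WIDTH_PX = 1920   # 1080p


def px_per_second(px_per_frame, refresh_hz=REFRESH_HZ):
    return px_per_frame * refresh_hz


def blur_px(mprt_ms, speed_px_per_s):
    # 1ms of persistence -> 1px of blur per 1000 px/s of motion
    return mprt_ms * speed_px_per_s / 1000.0


for label, ppf in (("average video", 6.5), ("fast FPS turning", 30)):
    speed = px_per_second(ppf)
    share = speed / SCREEN_WIDTH_PX
    print(f"{label}: {speed:.0f} px/s ({share:.0%} of the screen width per second)")
    for mprt_ms in (1000 / REFRESH_HZ, 5.0):   # sample-and-hold vs. ~plasma
        print(f"  {mprt_ms:5.2f}ms persistence -> ~{blur_px(mprt_ms, speed):.1f}px blur")
```

On 60Hz sample-and-hold, the blur width simply equals the motion per frame: about 6.5px for average video versus about 30px for fast FPS turning.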

As you can see from www.blurbusters.com/about/mark -- I used to work in the home theater industry creating video processor boxes (I invented the world's first open source 3:2 pulldown deinterlacer, found in dScaler, a PC-based video deinterlacing program that was released in the late 1990s, when Faroudja boxes still cost several thousand dollars! It's also mentioned in "PCinema", page 38 of Stereophile Guide To Home Theater, November 2001 issue). I've got an intimate familiarity with motion blur science, and the uncanny ability to explain motion blur to the masses (e.g. LightBoost). The video processor I worked on long ago (TAW ROCK) won 2003 Editor's Choice in Stereophile Guide To Home Theater, as it was one of the first video processors to convert 3:2 pulldown to 3:3 pulldown for 72Hz output to a CRT projector. I owned an NEC XG135 CRT projector, became an expert at convergence, keystone, bow, astig and focus, and was able to get the beam spot size damn tight for 1080p in 1999, long before 1080p was thought of as a standard.

Cool, I didn't realize that.

I've talked to David Mackenzie, Vincent Teoh and moderator FoxHounder, who are all fans of Blur Busters now (I sent a few PMs, plus a few posts). Here's one of the articles I posted on the HDTVTest forums about my tests of Sony Motionflow Impulse:

http://forums.hdtvtest.co.uk/index.php?topic=7938.0

BTW, they've (HDTVTest) asked for a unit of my upcoming Blur Busters Input Lag Tester (three-flashing-square method, supports all resolutions and refresh rates; 4K and 120Hz compatible), expected during Winter 2014.

I've been off for a year. With uni and now the Army, I don't have much time. Besides, I got sick of reviewing the same garbage over and over. Prettier design with performance similar to 2008/9 models. It also got too messy due to panel and processor lottery.

David reviews all the high-end gear because he has all the equipment. I'm not sure if I'll be reviewing any more TVs, but may help out Vincent if David were to go. Now with OLED, the differences between various sets are going to be even narrower (maybe nonexistent).

I haven't tested nor seen it, so can't really comment. My biggest issue with any kind of pulsing is maintaining sync, which can lead to multiple ghost images (frame doubling/tripling/quadrupling). I can see it working with TN since TN can respond within 8ms, but not sure about VA and IPS (especially VA, since most VAs still struggle to respond within 16ms). Not to mention that ambient temperature directly affects pixel response. FoxHounder did point out some downsides, so I'm guessing pulse-based Motionflow still isn't as good as Samsung and Panasonic plasmas. At least for now. The other issue is black level. ATM, plasmas are 6-7 times better than the leading LCD. That needs to be addressed as well, but I don't see it happening.

Sony Motionflow Impulse does not use MCFI.

It's 100% pure strobe based on the KDL55W905A, adds less than one additional frame of input lag, and is available in Game Mode!

It returns over 1000 lines of motion resolution on the motion tests pattern on the KDL55W905A.

Pure strobe backlights allow breaking the "300 lines of motion resolution" barrier on LCD, without using MCFI!

You may want to be aware that "lines of motion resolution" isn't future-proof.

Standardizing Motion Resolution: "Milliseconds of motion resolution" (MPRT) better than "Lines of motion resolution"

Lines of motion isn't future-proof, but I don't think Vincent will change that any time soon. It still gives a pretty good indication, though.

Mark Rejhon

I haven't tested nor seen it, so can't really comment. My biggest issue with any kind of pulsing is maintaining sync, which can lead to multiple ghost images (frame doubling/tripling/quadrupling). I can see it working with TN since TN can respond within 8ms, but not sure about VA and IPS (especially VA).

Yeah -- that's the faint crosstalk ghost effect that also happens on several LightBoost displays. Basically, a trailing sharp (non-blurred) ghost image, roughly similar in intensity to 3D crosstalk. I already explain this in the HDTVTest posting about Sony's Motionflow Impulse, and explain exactly why it's a non-issue for gaming. An easy way to test strobe crosstalk (a faint, sharp, trailing ghost double image, similar in faintness to 3D crosstalk) is the TestUFO Eiffel Tower Test.

Fortunately, VA has made some amazing progress in the last 2 years alone. It's finally possible for strobed VA to have less ghosting than older strobed TN (e.g. the XL2420T Revision 1.0 from early 2012). MCFI has forced VA LCDs to refresh faster (240Hz VA LCDs now exist). This gives the perfect opportunity for a creative trick called the double-pass refresh, already documented on page 15 of the manual of the released FDF2405W LCD (which costs several thousand, mainly for what are probably enterprise customers). It uses a 240Hz two-pass refresh (one heavily overdriven refresh in total darkness and one regular clean-up refresh, followed by a strobe near the end of the second refresh, which is free of overdrive artifacts, as explained in Eizo's manual), doing 120 strobe flashes per second. The already-released, professional-market-only Eizo FDF2405W would have less sharp double-image ghosting than LightBoost displays because of this very creative VA refreshing technique.
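The double-pass timing can be laid out as a simple timeline. This is a sketch based only on the description above, not on Eizo's manual: the 1ms flash length, and placing the flash so it ends exactly at the end of the second refresh, are assumptions, and the function name is hypothetical.

```python
def double_pass_schedule(panel_hz=240, flash_ms=1.0):
    """Hypothetical timeline (ms) of one strobe cycle in a two-pass refresh.

    Each source frame is scanned twice: first heavily overdriven in darkness,
    then a clean-up pass, with the flash at the end of the second pass."""
    refresh_ms = 1000.0 / panel_hz
    if not 0 < flash_ms <= refresh_ms:
        raise ValueError("flash must fit inside the clean-up pass")
    events = [
        (0.0, "pass 1: overdriven refresh, backlight off"),
        (refresh_ms, "pass 2: clean-up refresh of the same frame, backlight off"),
        (2 * refresh_ms - flash_ms, f"backlight flash ({flash_ms}ms)"),
        (2 * refresh_ms, "cycle ends, next frame starts"),
    ]
    strobes_per_second = panel_hz / 2   # one flash per two refreshes
    return events, strobes_per_second


events, rate = double_pass_schedule()
for t_ms, what in events:
    print(f"{t_ms:6.3f}ms  {what}")
print(f"{rate:.0f} flashes per second")
```

The full cycle is two 240Hz refreshes (about 8.33ms), which matches the 120 flashes per second described above; the second pass is what lets the flash land after the overdrive pass has done its work.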

FoxHounder did point out some downside so I'm guessing it's still not as good as Samsung and Panasonic plasmas.

Yep, he didn't test 60fps videogames, which is where Impulse shines massively (IMHO -- it benefits 60fps games massively more than video -- I'd even argue a 10x bigger benefit to games than to video). FoxHounder agreed with me that Motionflow Impulse could potentially be good for videogames, as he hadn't closely considered that use case during his tests. (Videogames use sharper graphics, closer view distances, and faster motion, all of which make motion blur easier to see than with video.)

Lines of motion isn't future-proof, but I don't think Vincent will change that any time soon. It still gives pretty good indication tho.

Agreed, it's probably okay for the average home theater audience. That said, I'm trying to contact several test pattern manufacturers to get them to consider MPRT as a unified motion blur measurement standard. But I'm just a small drop in a big ocean. So if a test pattern disc maker gives you a free sample, please tell them about a future-proof motion blur measurement such as MPRT by providing a link to my articles and the MPRT scientific papers. Thanks!

Yes, and maybe you're less sensitive to subfield rendering, but that doesn't mean that no one else will see it or that it's "BS". Better to overstate potential issues than understate them, and I expect supposed 'reviewers' to approach this sort of thing with a more critical eye. This is why I'd take any review from the likes of you with a grain of salt.

At the end of the day, we need to strike a balance. We don't have a fault-free display technology, and we don't have an alternative to LCD and plasma (at least not yet). Overstating a problem that doesn't affect everyone is not a wise move. You can advise them of possible issues that are inherent to various technologies, but that's it.

As things stand, plasma has the upper hand. Its blacks are 6-24 times better than LCDs (no, that's not a typo), it has cleaner and better motion than LCD (generally speaking), and it has better uniformity and a wider viewing angle. Given the pros and cons of both technologies, PDP easily wins. But in the end, it all depends on which technology you can tolerate better. Personally, I can tolerate plasma more than LCD. I also don't have to deal with the panel and processor lottery.

Mark Rejhon

As things stand, plasma has a major upper hand. Its blacks are 6-24 times better than LCDs (no, that's not a typo)

Agreed - especially in space movies.

(Though for gaming -- black levels are far, far, far less annoying than motion blur for me)

has better uniformity and wider viewing angle.

Agreed -- except for Game Mode motion uniformity.

But in the end, it all depends on which you can tolerate better. Personally, I can tolerate plasma more than LCD.

Agreed.

It has cleaner and better motion than LCD (for now)

Not for computer use at 1:1 viewing distances.

Not in Game Mode with plasma processing turned off.

Compared to the better strobe-backlight computer displays. I can read the tiny text on the TestUFO Panning Map Test at 1920 pixels/second on my LightBoost display (configured to 10%):

www.testufo.com/photo#photo=toronto-map.png&pps=1920

Just try that on any plasma. You can't read any text. Games have fast panning speeds (fast turning, fast strafing, fast panning) and it's lovely to be able to identify fine details without stopping turning/strafing first. And when you use Game Mode on plasmas, you often reduce plasma processing in order to reduce input lag -- so you often get more plasma artifacts in Game Mode (e.g. more banding, because anti-banding algorithms often add input lag).

At 1:1 viewing distance from a plasma, I can see:

-- plasma christmas pixel noise in dark shades of dungeon FPS games (yes, even on Pioneer Kuro, yes even on Elite LCD HDTV)

-- plasma temporal color effects (also seen in high speed camera). (I also see DLP rainbow artifacts even on 6X colorwheels)

-- plasma banding during fast motion

All of them are quite annoying during computer gaming at traditional proportional computer monitor viewing distances (1:1 ratio). As a result, even blurry LCD motion looks much, much cleaner (and I still hate the motion blur). The 50" SEIKI 4K is nicer to stare at on a computer desk because of this (e.g. wall-mounted at the back of a deep desk), and it even supports true 120Hz from a computer at 1920x1080 to solve a little bit of the LCD motion blur. Mind you, crappy black levels, though. But for gaming, LCD blacks are the lesser evil for me, at least.

Plasma motion looks rather nice at regular sofa viewing distance, mind you, and they are quite numero uno for a lot of television material, but plasma limitations really stand out when you attempt to use them as 1:1-view-distance computer monitors for gaming, if you have any slight sensitivity to motion-related artifacts.

Chad_Thunder

Weaksauce

- Joined

- Aug 16, 2013

- Messages

- 83

No. I was playing a game and noticed the vertical boards on a picket fence were curved during pans. Later, I saw straight pillars warping in the same way in another game. Then I noticed that a bright slab of rock on a dark background appeared to be floating when the scene was in motion. Then in F.E.A.R, a game full of sharp contrasts and rich shadows, I saw whole scenes appearing warped/disjointed. THEN, and only then, did I decide to try and figure out the cause. At this point I was ignorant of the mechanism of subfields.

This is video processing, which can be turned off.

You are describing the artifacts caused by the interpolation algorithm Panasonic used on the 30 and 50 series. They use subfield and full-frame motion prediction, which has some really weird effects with CG content (in Counter-Strike the stationary text 'shoots' or streaks at random times, as you turn corners you see warping, and so on).

I think most people are comparing plasma in its worst modes for CG: 4:2:2 chroma subsampling (half horizontal chroma resolution) ON, interpolation ON, low gradations ON, none of which are representative of the technology.

Chad_Thunder

Probably the same on the V20. I know exactly what warping you are talking about, and it's not present on my PF30 unless frame creation is on. I don't even know why they would use subfield interpolation; it doesn't make any sense unless you were trying to save power.

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

On my UK plasma, interpolation automatically turns on when I use a 50Hz refresh (TH 42PZ80).

Some US TVs might have a similar method when using 60Hz.

Chad_Thunder

Good point, Nenu.

I have read that NTSC (60Hz) uses 10 subfields and PAL (50Hz) uses 12.

I see major flicker at 56Hz and under, so it makes perfect sense to interpolate up to 100Hz.
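Two quick numbers help connect subfields to the artifacts discussed in this thread. If the 10 and 12 subfield counts above are right and the field time were split evenly (a simplification; real panels use unequal subfield durations plus addressing periods), each subfield slot would last the same ~1.67ms at both 60Hz and 50Hz. The sketch also uses a textbook 8-subfield binary weighting, which is an assumption and not Panasonic's actual scheme, to show how adjacent gray levels such as 127 and 128 light completely different subfields. When the eye tracks a moving edge between such levels, it integrates light from mismatched subfields, which is the classic mechanism behind plasma's dynamic false contours.

```python
BINARY_WEIGHTS = [1, 2, 4, 8, 16, 32, 64, 128]   # textbook 8-subfield scheme (assumed)


def subfield_pattern(level, weights=BINARY_WEIGHTS):
    """Which subfields are lit (1) or dark (0) for a gray level, smallest weight first."""
    if not 0 <= level <= sum(weights):
        raise ValueError("level out of range")
    bits = []
    for w in reversed(weights):       # greedy from the largest weight down
        if level >= w:
            bits.append(1)
            level -= w
        else:
            bits.append(0)
    return list(reversed(bits))       # back to smallest-weight-first order


def avg_subfield_ms(refresh_hz, n_subfields):
    """Average slot length if the field were split evenly (simplification)."""
    return 1000.0 / refresh_hz / n_subfields


print(subfield_pattern(127))   # [1, 1, 1, 1, 1, 1, 1, 0]
print(subfield_pattern(128))   # [0, 0, 0, 0, 0, 0, 0, 1]
print(avg_subfield_ms(60, 10), avg_subfield_ms(50, 12))
```

Levels 127 and 128 differ by one step in brightness but share no lit subfield at all, so a small spatial offset in what the eye integrates can produce a visibly too-dark or too-bright band at the edge.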

Mark Rejhon

As much as I hate motion blur, I prefer viewing an LCD at a 1:1 distance over viewing a plasma at 1:1 or closer. For example, sitting a mere three to four feet from a 50" television mounted at the rear of a deep desk. Here, plasma motion artifacts are so unnatural-looking that even plain LCD motion blur looks more natural. It's as annoying as coil whine screaming into my ears; it's not something I'm intentionally paying attention to.

Human vision and real world don't use subfields or temporal methods of color generation. It's totally artificial and unnatural if you have a vision sensitivity to those, especially at 1:1 monitor view distances.

Sofa viewing distance, I love plasma, though. Movies are amazing on plasma! Especially on Kuro and Elite. My two favourite plasmas.

Plasmas will never be good close-view-distance desktop computer monitors for me. I'm amazed how "dirty" looking plasma refreshes look, and high-speed video even agrees too (Have you seen HDTVTest's high speed videos of plasma refreshes? Versus LCD refreshes? Exactly. My eyes notice this, especially when I'm saccading my vision around as I'm a continual moving-objects-tracker. That's not good for a plasma that's sitting right in front of my nose like a computer monitor. LCD refreshes are more natural to humans that are very perceptive of high-speed temporal artifacts). Plasma is beautiful when pixels are too small to be seen, or when your vision system can't see the artifacts.

The perfect Holodeck display is a flicker-free (no subfields, no strobes, no temporal color), infinite-refresh-rate, adjustable-gamut, infinite-resolution display. No motion blur (caused by discrete refresh rates on sample-and-hold displays). No interpolation. No flicker. No strobing. Perfectly solid and flicker-free even in 1000fps high-speed video. A display that looks the same as real life in a 1000fps video comparison of the display operating versus real-life scenery. LCD and plasma are neither, I know. The perfect Holodeck display won't happen for a long time. Until then, no display is one-size-fits-all.

No plasma displays can successfully pass the TestUFO Panning Map Test at 1920 pixels/second

www.testufo.com/photo#photo=toronto-map.png&pps=1920

The VT60 cannot do that perfectly at 1920 pixels/s at 60Hz; it's simply too blurry. But in 2D-3D conversion (120Hz refresh, 60Hz per eye, plus brain post-processing to meld the images), some of the text is easily readable, some is hard to read but doable, and some is not readable at all.

It only looks a touch worse than on VG248QE.

At 1440 pixels/s 2D-3D everything is readable though some text is still a bit hard.

Still hoping this can in some way achieve 96hz or more, and still pretty sure it will perform as excellently in 2D as it does in 3D.

Is the text readable at 1900 pixels/s even on a CRT at 60Hz?

Chad_Thunder

When you are talking about 2D-3D conversion, does that mean you are using 3D goggles?

If you are able to read 1440pps with 60 unique frames and chroma softening (the VT60 uses the 4:2:2 color space), imagine what is technically possible with 125fps+ 4:4:4 2D with full gradations (no noise). It makes me sad thinking about what is being thrown away (I probably need another hobby).


When you talk about 2D-to-3D conversion, does that mean you are using 3D goggles?

If you can read 1440 pps with 60 unique frames and chroma softening (the VT60 uses 4:2:2 chroma subsampling), imagine what is technically possible with 125+ fps 4:4:4 2D with full gradations (no noise). It makes me sad thinking about what is being thrown away (I probably need other hobbies).

Yes, 2D->3D using the 3D goggles (this is the only way I can get it to run at 120 Hz: 60 fps per eye, with half the frames faked for the 3D effect). I think it uses 4:4:4; at least text and images look normal and crisp, and reviews say so. I recall reading a review stating 4:2:2, but the official Panasonic product page says:

1080p Pure Direct

"Offering colours that are faithful to the original 1080 content, Pure Direct increases the transmission efficency of high quality 30 Bit YUV 4:4:4 content. YUV refers to the three types of data the TV receives; the more data transmitted and displayed the higher the quality picture.

Y = Brightness

UV = Colour Signal

YUV = Image produced

*VT60, WT60, DT60"

"The VT60 also adds a 1080p Pure Direct mode that enables support of a 4:4:4"

So, as far as I know, it supports 4:4:4. I have also set the HDMI range to Full both in the NVIDIA control panel and in the display's settings, with 1080p Pure Direct on. I could definitely be wrong, though. I searched for some tests, and the best thing I found was to create a colored background in Paint and draw single-pixel-wide lines/scribbles on it. Blue on red looked normal; red on blue looked a touch odd, as if vertical lines were a touch brighter red than horizontal lines, but every pixel was certainly displayed as red. Black on white and white on black look normal. I don't know much about chroma subsampling, and I'm not very picky about colors unless they're quite far off, such as IPS/TN being completely unable to display black.
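The single-pixel-line test above can be reasoned about with a small simulation. In 4:2:2, every pixel keeps its own luma but each horizontal pair of pixels shares one chroma sample, so a 1-pixel vertical line (whose color differs from its horizontal neighbour) gets its chroma blended with the background, while a 1-pixel horizontal line keeps its color because both pixels of every pair match. A minimal sketch, assuming BT.709 full-range YCbCr, a plain two-pixel box average for subsampling and pixel replication for reconstruction; a real TV's filters differ, so the exact tint won't match what the set shows. The useful signal is the asymmetry: if vertical red-on-blue lines look different from horizontal ones, horizontal chroma subsampling somewhere in the chain is a plausible cause. All function names are hypothetical.

```python
# BT.709 luma coefficients; RGB values are normalised to 0..1 (full range)
KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB


def rgb_to_ycbcr(rgb):
    r, g, b = rgb
    y = KR * r + KG * g + KB * b
    return y, (b - y) / (2 * (1 - KB)), (r - y) / (2 * (1 - KR))


def ycbcr_to_rgb(ycc):
    y, cb, cr = ycc
    r = y + 2 * (1 - KR) * cr
    b = y + 2 * (1 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    # Clamp out-of-gamut results back into 0..1
    return tuple(min(1.0, max(0.0, c)) for c in (r, g, b))


def roundtrip_422(image):
    """RGB -> YCbCr, halve horizontal chroma resolution, -> RGB.

    Luma stays per-pixel; each horizontal pixel pair gets the box-averaged
    chroma of the pair (a simplified, hypothetical filter).
    """
    out = []
    for row in image:
        ycc = [rgb_to_ycbcr(px) for px in row]
        new_row = []
        for x in range(0, len(row), 2):
            pair = ycc[x:x + 2]
            cb = sum(p[1] for p in pair) / len(pair)
            cr = sum(p[2] for p in pair) / len(pair)
            new_row.extend(ycbcr_to_rgb((p[0], cb, cr)) for p in pair)
        out.append(new_row)
    return out


RED, BLUE = (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)


def line_image(width, height, vertical, pos):
    """Blue background with a 1-pixel red line at column (or row) `pos`."""
    return [[RED if (x == pos if vertical else y == pos) else BLUE
             for x in range(width)] for y in range(height)]


def max_error_on_line(width=8, height=8, vertical=True, pos=3):
    """Largest per-channel error on the red line's pixels after 4:2:2."""
    src = line_image(width, height, vertical, pos)
    dst = roundtrip_422(src)
    err = 0.0
    for y in range(height):
        for x in range(width):
            if src[y][x] == RED:
                err = max(err, max(abs(s - d) for s, d in zip(src[y][x], dst[y][x])))
    return err


if __name__ == "__main__":
    print(f"vertical line:   {max_error_on_line(vertical=True):.3f}")
    print(f"horizontal line: {max_error_on_line(vertical=False):.3f}")
```

With this simplified filter the vertical line's pixels drift strongly toward purple while the horizontal line comes back essentially unchanged.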


Chad_Thunder

Well, thanks to the Custom Resolution Utility (CRU), I have successfully added a wider range of resolutions at 120 Hz, and the performance is just phenomenal: input lag has been cut to virtually nil, and there is absolutely zero perceptible flicker or motion artifacts in games.

I do not remember CRT being this good


Well, thanks to the Custom Resolution Utility (CRU), I have successfully added a wider range of resolutions at 120 Hz, and the performance is just phenomenal: input lag has been cut to virtually nil, and there is absolutely zero perceptible flicker or motion artifacts in games.

I do not remember CRT being this good

Could you PM me some of those timings, in case any of them would work on mine?

Chad_Thunder

Yes, 2D->3D using the 3D goggles (this is the only way I can get it to run at 120 Hz: 60 fps per eye, with half the frames faked for the 3D effect).

According to a graphic I found earlier, the subfield order is reversed in 3D mode, so you get the brightest pulses first instead of last as at 60 Hz. It was not made clear whether the hold time was shortened, though I don't see why it would need to be.

So, as far as I know, it supports 4:4:4. I have also set the HDMI range to Full both in the NVIDIA control panel and in the display's settings, with 1080p Pure Direct on. I could definitely be wrong, though. I searched for some tests, and the best thing I found was to create a colored background in Paint and draw single-pixel-wide lines/scribbles on it. Blue on red looked normal; red on blue looked a touch odd, as if vertical lines were a touch brighter red than horizontal lines, but every pixel was certainly displayed as red. Black on white and white on black look normal. I don't know much about chroma subsampling, and I'm not very picky about colors unless they're quite far off, such as IPS/TN being completely unable to display black. (I see color errors briefly; after a minute or so they fade away as I adjust to them, I guess.)

I think there is probably some mild chroma softening with RGB input, but it's nothing to worry about if it does not bother you.

Chad_Thunder

Could you PM me some of those timings, in case any of them would work on mine?

Yeah, sure. They are just auto-calculated by CRU; I didn't tweak them.

http://oi41.tinypic.com/2uzfj21.jpg

Maybe start with 800x600, 1024x768 or 1280x720 and work up; you don't need aggressive CRU timings for those (the NVIDIA Control Panel is handy for calculating safe timings).
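Why lower resolutions are an easier place to start comes down mostly to pixel-clock arithmetic: the clock a mode needs is the total raster (active pixels plus blanking) times the refresh rate. A minimal sketch using the standard CEA-861 totals for 720p and 1080p; the totals CRU or the NVIDIA Control Panel generate (especially reduced-blanking ones) will differ, so these numbers are illustrative, not the timings in the screenshot above. The names are hypothetical.

```python
def pixel_clock_hz(h_total: int, v_total: int, refresh_hz: float) -> float:
    """Pixel clock = total pixels per frame (active + blanking) x refresh rate."""
    return h_total * v_total * refresh_hz


# Standard CEA-861 raster totals (active + blanking). Reduced-blanking modes,
# like the aggressive ones CRU can produce, have smaller totals and lower clocks.
CEA_TOTALS = {
    "1280x720": (1650, 750),
    "1920x1080": (2200, 1125),
}

if __name__ == "__main__":
    for mode, (h_total, v_total) in CEA_TOTALS.items():
        for hz in (60, 120):
            mhz = pixel_clock_hz(h_total, v_total, hz) / 1e6
            print(f"{mode} @ {hz} Hz: {mhz:g} MHz")
```

With these totals, 1280x720 at 120 Hz needs 148.5 MHz, the same as standard 1080p at 60 Hz, while 1920x1080 at 120 Hz needs 297 MHz, twice the standard 1080p60 clock. Whether a particular TV's HDMI input accepts the higher clocks is what has to be found by trial.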

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

According to a graphic I found earlier, the subfield order is reversed in 3D mode, so you get the brightest pulses first instead of last as at 60 Hz. It was not made clear whether the hold time was shortened, though I don't see why it would need to be.

I imagine it's to reduce ghosting.
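A toy model of why subfield order could matter for ghosting: reversing the order moves where, within each refresh, most of a bright pixel's light is emitted. A hypothetical sketch, assuming 8 binary-weighted subfields whose sustain periods last in proportion to their weights, and ignoring address periods and phosphor afterglow; the thread doesn't give the VT60's real subfield layout, and real plasma drives use more subfields with non-binary weights. It computes the emission "centroid" of one refresh as a fraction of the refresh period.

```python
# Hypothetical 8-subfield, binary-weighted drive (not the VT60's real layout).
WEIGHTS = [1, 2, 4, 8, 16, 32, 64, 128]


def emission_centroid(level: int, brightest_first: bool) -> float:
    """Mean emission time within one refresh, as a fraction (0..1).

    Each subfield's sustain period is assumed to last in proportion to its
    weight; the pixel emits during every subfield whose bit is set in
    `level` (1..255).
    """
    order = sorted(WEIGHTS, reverse=brightest_first)
    frame_len = sum(WEIGHTS)
    t = 0.0       # start time of the current subfield
    light = 0.0   # total light emitted this refresh
    moment = 0.0  # light-weighted sum of emission times
    for w in order:
        if level & w:  # weights are powers of two, so each is one bit of level
            centre = t + w / 2
            light += w
            moment += w * centre
        t += w
    if light == 0:
        raise ValueError("level 0 emits no light")
    return moment / light / frame_len


if __name__ == "__main__":
    for level in (128, 192, 255):
        asc = emission_centroid(level, brightest_first=False)
        desc = emission_centroid(level, brightest_first=True)
        print(f"level {level}: brightest last {asc:.3f}, brightest first {desc:.3f}")
```

In this model, for any level that uses the top subfield, putting the brightest pulses first shifts the light toward the start of the refresh, leaving the end of it (just before the glasses switch eyes) comparatively dark, which fits the ghosting guess. For very dim levels that only use the small subfields, the shift goes the other way.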
