Cerulean

[H]F Junkie

- Joined

- Jul 27, 2006

- Messages

- 9,476

now just imagine what performance you'd get if you rendered a video with avisynth+x264+MeGUI, and with Adobe After Effects.

How does one get 80 cores in one machine? Or is it a cluster of ESXi boxes, with Windows using up all the resources? LOL.

Four 10-core CPUs with HT, or eight 10-core CPUs without HT (7000/E7 LGA1567 chips).
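For anyone who wants the arithmetic spelled out, here is a quick sketch of how both configurations land on 80 logical CPUs. The function name `logical_cpus` is made up for illustration, and it assumes HT exposes exactly two threads per core.

```python
def logical_cpus(sockets: int, cores_per_socket: int, hyperthreading: bool) -> int:
    """Count the logical CPUs the OS would see (hypothetical helper).

    Assumes Hyper-Threading exposes two threads per physical core.
    """
    threads_per_core = 2 if hyperthreading else 1
    return sockets * cores_per_socket * threads_per_core


if __name__ == "__main__":
    # Four 10-core CPUs with HT
    print(logical_cpus(4, 10, hyperthreading=True))
    # Eight 10-core CPUs without HT
    print(logical_cpus(8, 10, hyperthreading=False))
```

Both calls give the same total, which is why either setup shows up as 80 in Task Manager.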

you could fold like 1 protein in seconds!

do i dare ask the cost of one of those suckers?

I was goofing off on the Dell Enterprise site and set up an R920 with 40 cores (80 with HT), 2TB of RAM, and a bunch of SSDs and 15k SAS drives in near-line storage for $220,000 retail. (OS and DB software extra.)

I printed it to PDF and sent it to my boss saying that I'd found a replacement unit for our data warehouse build. I suggested that five should suffice for our SQL cluster.

LOL, no, he doesn't win. Maybe among those who can post pictures, but no -- not overall.

So,

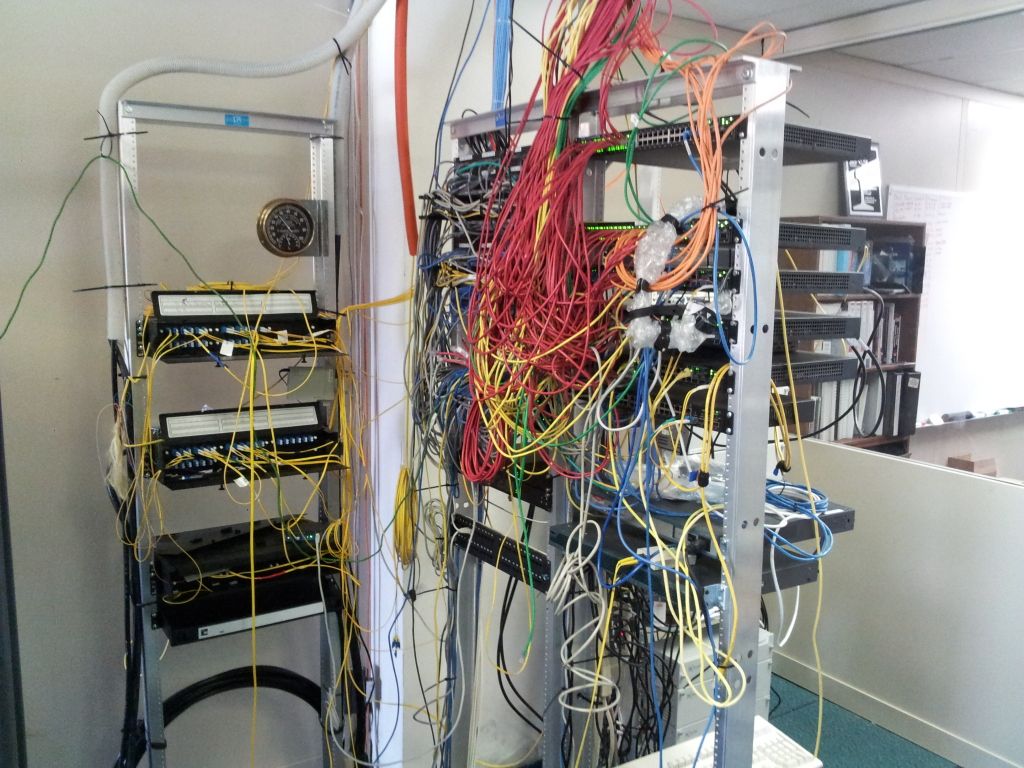

I figured I'd share my network at work. Please keep in mind that I inherited what you see in the picture. I've only been here since April in my current capacity. Yes, I'm very aware that we have a lot of work left to do... a lot...

I work for the group that supports the network for the world's fastest supercomputer now.

I guess I win?

Lawrence Livermore, or IBM?

SonicWall makes good firewalls, but their wireless APs suck.

Why don't you like them?

I finally rebuilt my home lab's SAN. 4x 160GB SSD and 4x 1.5TB spindle in RAID 10/5(Z) respectively. 200Mbps read/600Mbps write on some older SATA 2 controllers/drives. Not bad. Still loving OI151a/Napp-It.

This is tied via a dual-port Intel NIC to my ESXi host. More than I need.

Have you got your home lab's SAN (OI151a/Napp-It) installed on the ESXi host, or is it installed on another server? I'm just having terrible performance with my HP MicroServer/FreeNAS/ESXi installed on the same host.

Why don't you like them?

Way too fucking overpriced for what they are. I just installed one today. $300 for an AP, what a rip-off!

Out of curiosity, for those of you with data centers: do you stack your gear (firewalls, routers, load balancers, etc.) directly on top of each other in your network racks, or do you leave at least 1U of spacing between devices? I'm thinking of doing the following, from the bottom up (heaviest to lightest):

- etc.
- 1U blocking panel or cable mgmt
- 2U appliance
- 1U blocking panel or cable mgmt
- 2U appliance

No, because no photo attached!

It was IBM; they backed out, and now it's Cray. It hasn't fully launched yet thanks to the contract dispute. Supposed to be 1 petascale when done.

Huh? I thought the LLNL/DOE system was already running in excess of 15 petaflops.

48 port patch panel

48 port switch

48 port patch panel

48 port switch

48 port patch panel

48 port switch

Then 1 foot patch cables between them. Looks ugly, but easy to trace a cable from patch panel to switch port.
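If you want to plan an elevation like this before racking anything, a rough sketch along these lines would print the alternating layout. The function name `interleaved_layout` is hypothetical, and it assumes every panel and switch has the same port count and that the list reads top to bottom.

```python
def interleaved_layout(pairs: int, ports: int = 48) -> list[str]:
    """Return rack rows, top to bottom, alternating patch panel and switch.

    Hypothetical planning helper: assumes each panel sits directly above
    the switch it feeds, so a short patch cable reaches port to port.
    """
    rows = []
    for _ in range(pairs):
        rows.append(f"{ports} port patch panel")
        rows.append(f"{ports} port switch")
    return rows


if __name__ == "__main__":
    for row in interleaved_layout(3):
        print(row)
```

Calling it with `pairs=3` reproduces the six rows listed above. Keeping each panel right next to its switch is what lets the short patch cables work.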
