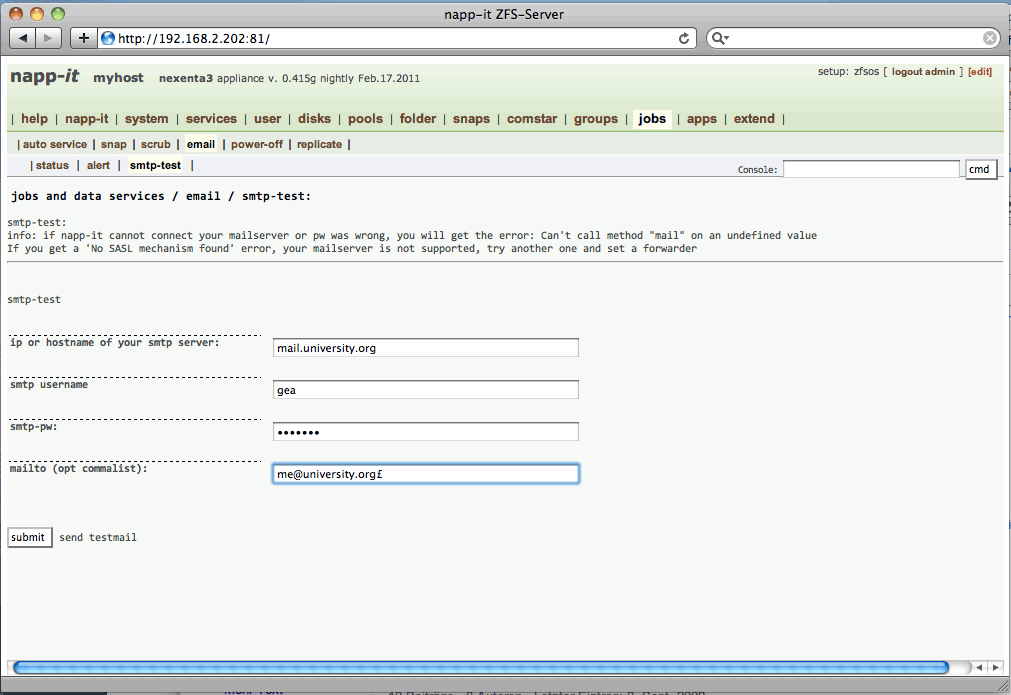

Heya Gea, loving napp-it so far! Any chance of a better configuration of the mail system?

For example, better configuration of the SMTP server (authentication). Or is that already in there somewhere and I'm just looking in the wrong place? I'd love to use my Gmail account.

Thanks !

I have included the missing SASL libraries in the current napp-it 0.415g nightly.

It now supports SMTP with authentication, at least with my own mailer.

Gea
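For anyone who wants to check their Gmail credentials before setting them in napp-it, here is a minimal Python sketch of what authenticated SMTP involves: connect to the submission port, upgrade with STARTTLS, authenticate (SMTP AUTH), then send. This is not napp-it's own mail code, which isn't shown in this thread. The function names are hypothetical, and `smtp.gmail.com` on port 587 is assumed to be Gmail's standard STARTTLS submission endpoint. Gmail may also require an app password rather than your normal account password.

```python
import smtplib
import ssl
from email.message import EmailMessage


def build_alert(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Build a plain-text alert mail (hypothetical helper, for illustration)."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_authenticated(msg: EmailMessage, user: str, password: str,
                       host: str = "smtp.gmail.com", port: int = 587) -> None:
    """Send msg over SMTP with STARTTLS and AUTH (assumed Gmail settings)."""
    context = ssl.create_default_context()  # verifies the server certificate
    with smtplib.SMTP(host, port, timeout=30) as server:
        server.ehlo()
        server.starttls(context=context)  # encrypt before sending credentials
        server.ehlo()                     # re-announce; AUTH is offered after TLS
        server.login(user, password)      # smtplib picks a SASL mechanism the server advertises
        server.send_message(msg)
```

Usage would look like `send_authenticated(build_alert("me@gmail.com", "me@gmail.com", "napp-it test", "pool ok"), "me@gmail.com", "app-password")`. If `login()` raises `smtplib.SMTPAuthenticationError`, the credentials are the problem rather than the mail setup.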

