HardOCP News


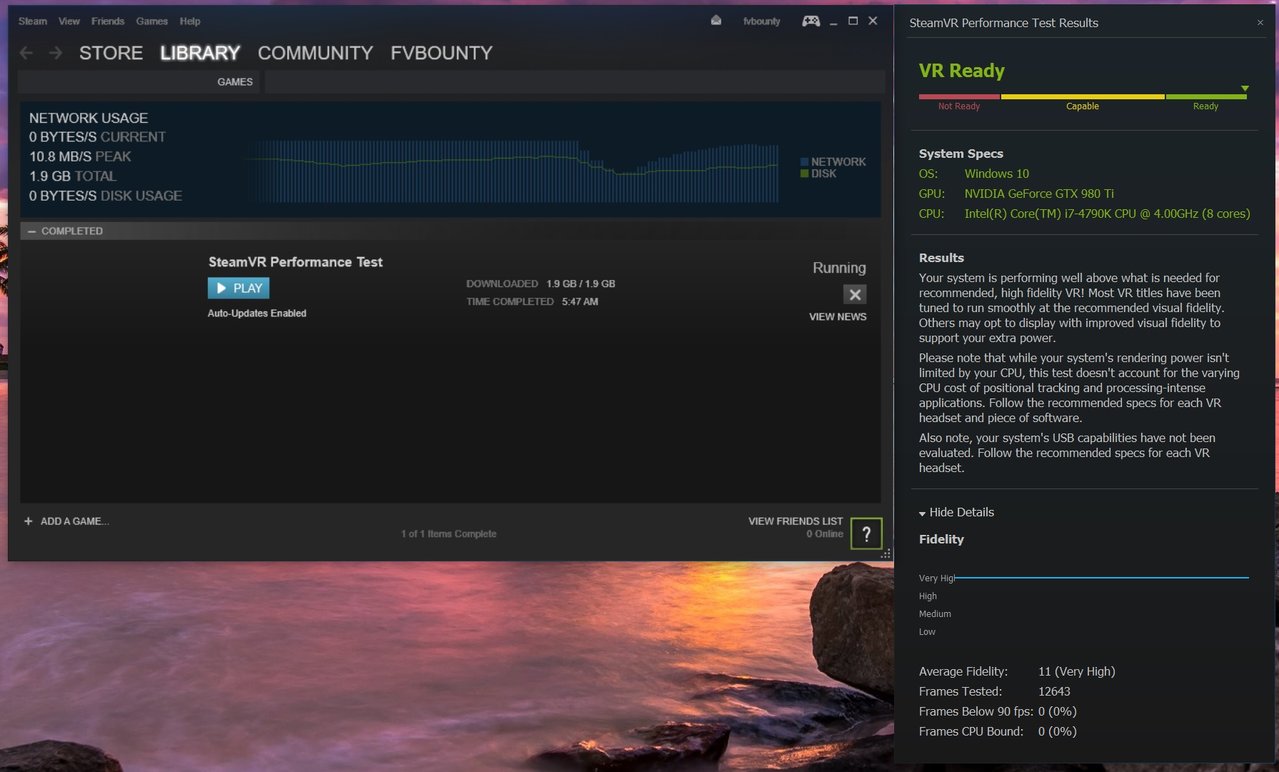

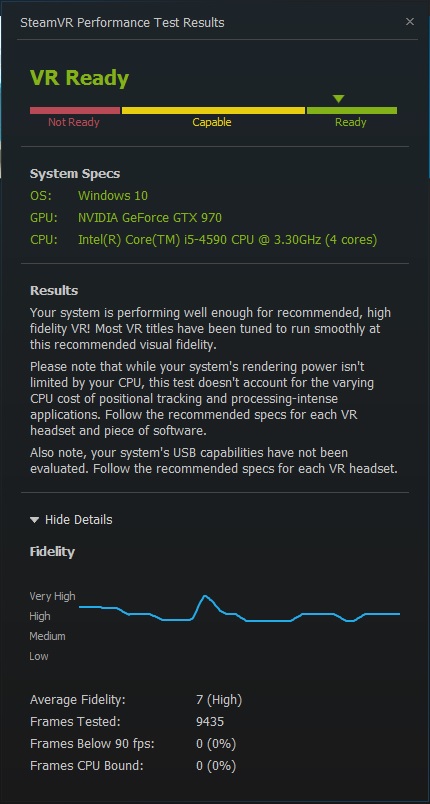

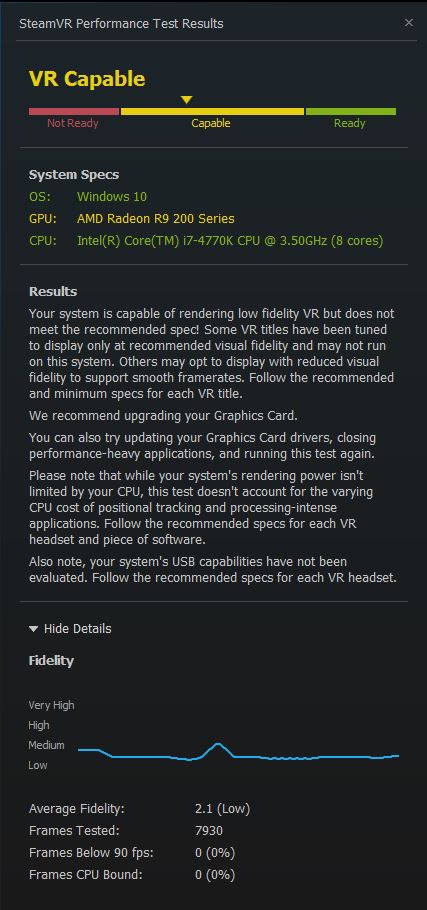

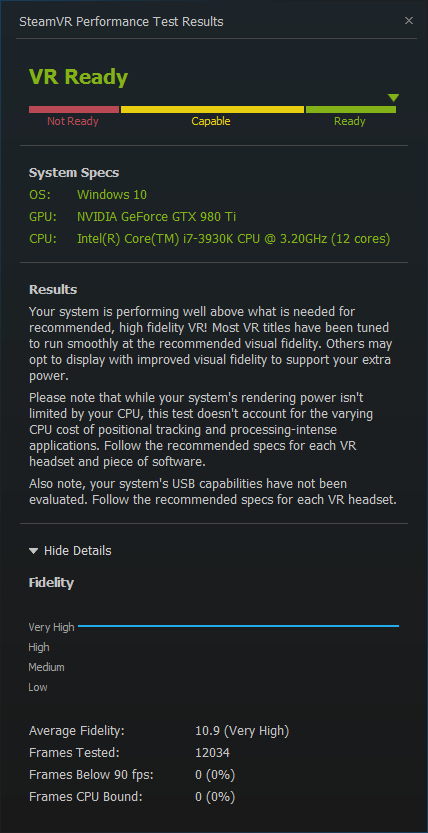

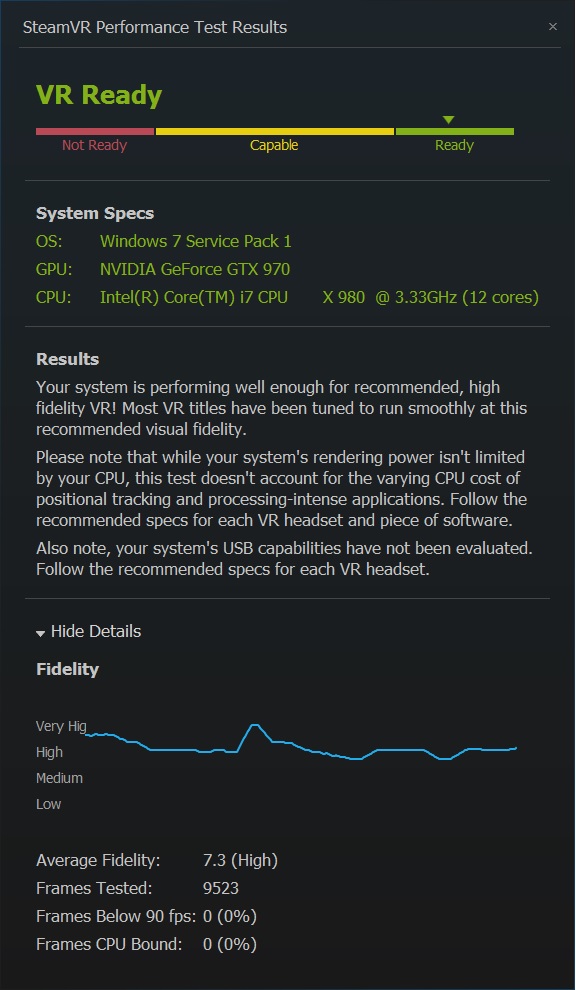

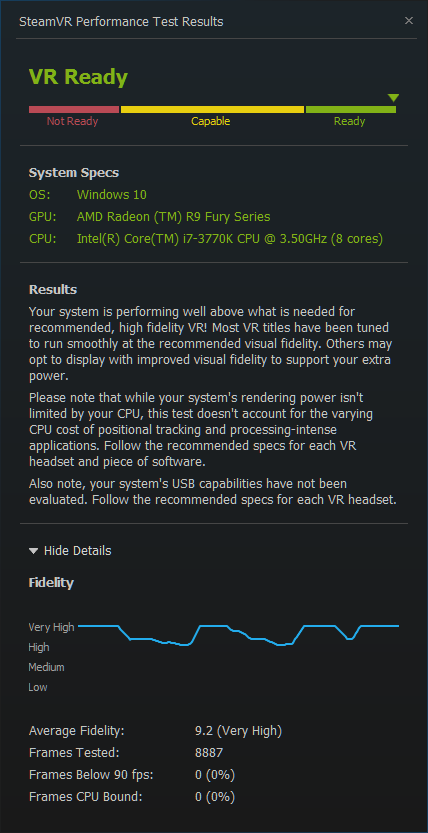

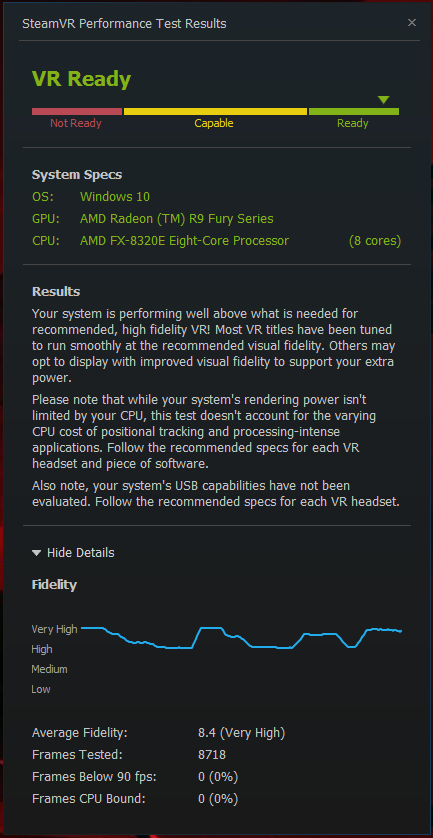

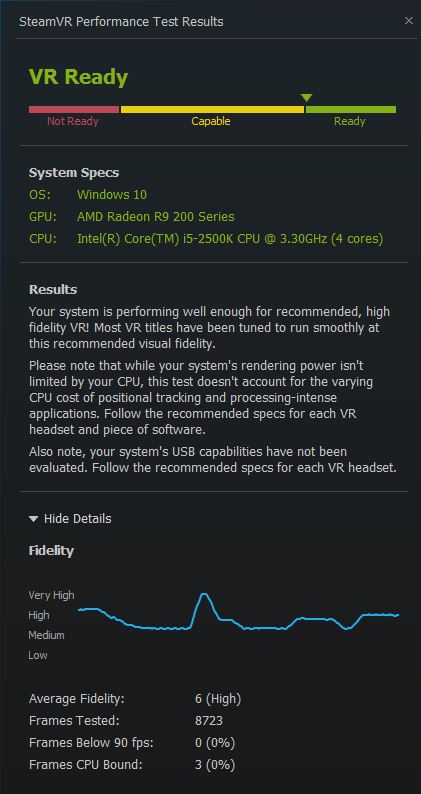

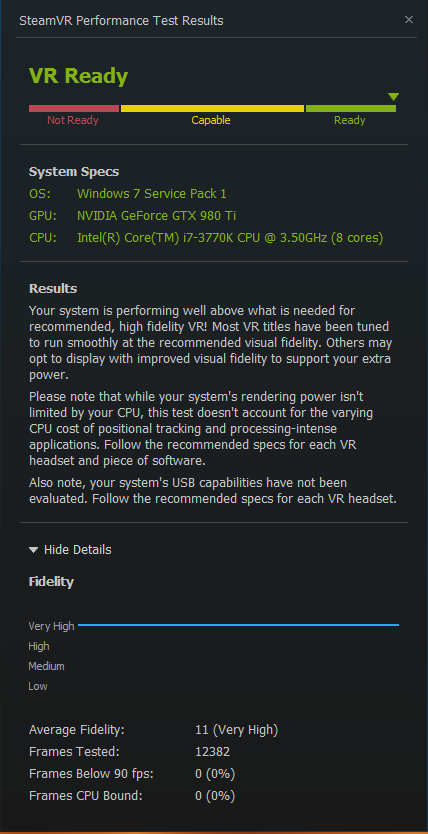

Those of you wondering whether your system has what it takes to be "VR Ready" can use the SteamVR Performance Test to find out. If your system doesn't pass, the test will also tell you whether it is bound by the CPU, the GPU, or both.
