cjcox

2[H]4U

- Joined

- Jun 7, 2004

- Messages

- 2,947

Education is somewhat of a partnership. Up to you if you think "cheating" (whatever that might mean to you) is what you want to do.


I went to a community college which, I was told, didn't allow graphing calculators in tests. You needed a graphing calculator for class, but it wasn't allowed in tests. Outside of community colleges it maybe depended on the teacher.

Just an aside.

Using calculators on a test is not a college policy, that's 100% on the teacher involved. If your college is small and only one teacher is teaching that level then yeah you could be hosed big time if you have a "this is the way I did it when I was a student" type of teacher. I went to a $10/unit community college to take Calculus back in the early 90s (i.e. not prestigious at all) and a graphing calculator was actually a requirement for the class (was most expensive thing for the class at the time too!)

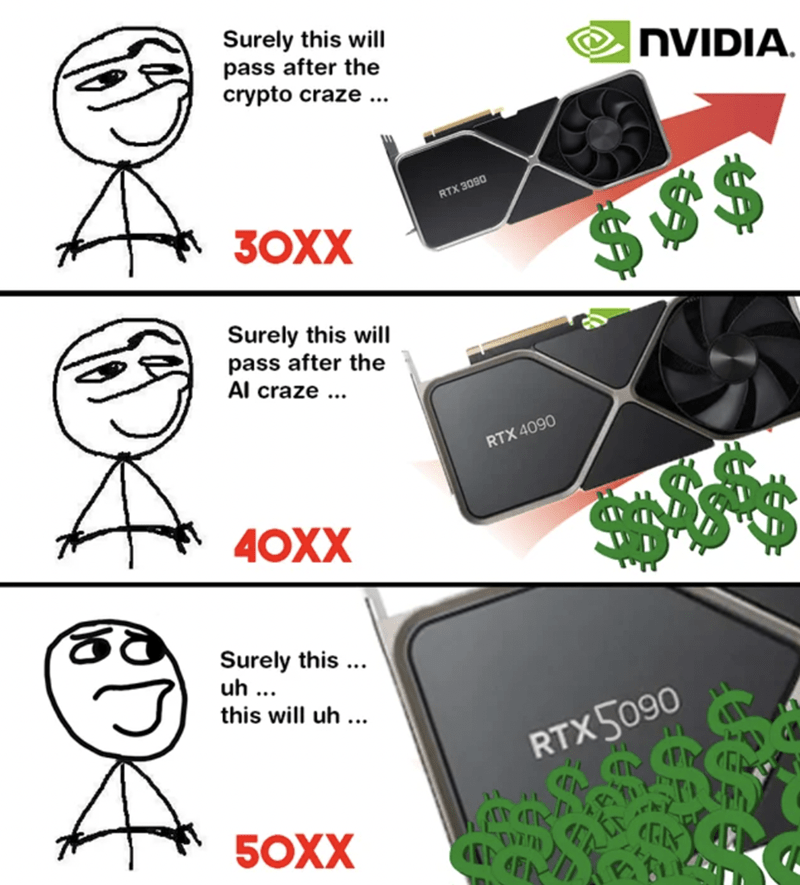

It's very amusing (mostly annoying) how you attempt to downplay the profound implications of AI by comparing it to past instances of cheating and plagiarism with books, calculators, and the internet. Those comparisons utterly fail to grasp the magnitude of what AI (and particularly ChatGPT) is capable of in the grand scheme of things.

When I was in college I was struggling with Calculus, because my college wouldn't let us use graphing or even regular calculators for tests. I then found out that other colleges let you use them during tests. A friend of mine had the most expensive graphing calculator money could buy, and he used it in tests. The more prestigious the college, the more lenient they were with calculators during tests. Remember when people would write book reports by using the blurb part of the book? People plagiarized all the time long before the internet as well as AI. Plagiarism online was out of control long before AI. Nothing changed but the tools. Maybe the problem isn't ChatGPT, but the method we use to teach students?

I'm not downplaying AI, just the reaction people have to using it. If you're worried about what AI can do, then students not properly learning is the least of our concerns.

View: https://youtu.be/Ug4--gTy-sA?si=2RBeJy85C2JddlmX

View: https://youtu.be/Sq1QZB5baNw?si=Qg0-nizOP_AGEtSs

“Larry Summers, Now an OpenAI Board Member, Thinks AI Could Replace 'Almost All'”

This thread randomly reminded me of a comic my friend made when we were both in grad school for Mechanical Engineering (would have been 2009, I think?).

View attachment 644230

So kind of to say, yea, people are always going to find other ways to do work.

That being said, pretty much every job is going to use LLMs/AI of some sort for the most part, so I don't think it's necessarily a bad thing to teach people how to use it. Obviously not to just flat-out use it for everything and not learn, but there is still a good way to do both.

Jensen was on one of our company sales meetings talking with our CEO a few months back, and I kind of like how he put it: it's just a new/different form of computing.

Dang.. forgot about that song!! https://yewtu.be/watch?v=ixe8Snxu3wo Guess ChatGPT made me forget!!

"We'll make great pets."

ChatGPT is terrible at coding. ChatGPT can also do infinitely more things than rudimentary coding.

Just an update to this, but I'm not the only one who made this correlation.

"There is a common analogy between calculators and their impact on mathematics education, and generative AI and its impact on CS education. Teachers had to find the right amount of long-hand arithmetic and mathematical problem solving for students to do, in order for them to have the “number sense” to be successful later in algebra and calculus. Too much focus on calculators diminished number sense. We have a similar situation in determining the “code sense” required for students to be successful in this new realm of automated software engineering. It will take a few iterations to understand exactly what kind of praxis students need in this new era of LLMs to develop sufficient code sense, but now is the time to experiment."

https://cacm.acm.org/opinion/generative-ai-and-cs-education/

https://news.slashdot.org/story/24/...kened-to-calculators-impact-on-math-education

and when we got calculators we wrote 55378008 on them, you expected more from humans? haha

ChatGPT is terrible at coding. ChatGPT can also do infinitely more things than rudimentary coding.

My point wasn't humans. My point was Duke doesn't seem to have any clue what ChatGPT is capable of.

and when we got calculators we wrote 55378008 on them, you expected more from humans? haha

You have any idea what calculators can do? You're missing the point in that this has happened in the past and we're not worse for it. The genie is out of the bottle and it ain't going back in.

My point wasn't humans. My point was Duke doesn't seem to have any clue what ChatGPT is capable of.

You'll love how well ChatGPT did as a lawyer.

I am a professor of anatomical sciences (i.e., gross anatomy, histology, embryology, and neuroanatomy) at a well-known US medical school. Here are a few of my observations:

- ChatGPT 3.5 and 4 can write well enough to pass general freshman-level English composition courses

- They begin struggling with details of anatomical sciences past the introductory level

- By first-year training for MD/DO/PA/other graduate-level allied health sciences professions, their miss rates are high enough to cause frequent problems (i.e., they produce so many errors so often that they're not a useful resource)

- AI's misses, at least in terms of what I do, seem to be either bizarre errors no human would make or total failure to appreciate how science is not always black and white - it is often nuanced and highly dependent upon many, many other considerations

- A study I recently completed with a student demonstrated an error rate that would keep an undergraduate student from successfully completing an honors thesis

There are a lot of lower-level white collar jobs that AI will obsolete in the coming decade. I hope the rise of AI will encourage more young people to attain valuable vocational training instead of pursuing a BA/BS that is often simply no longer a good investment.

I'm in my mid-40s, and I am not particularly concerned about an AI replacing higher-level professionals in my lifetime. I'm VERY fortunate that my folks, growing up in Michigan in the 50s and 60s, saw robots replace line workers in the auto industry; they encouraged me to train for a job that was unlikely to get automated. AFAIK, AI does not (yet) ask interesting, useful questions. Students answer questions while masters ask them.

You have any idea what calculators can do? You're missing the point in that this has happened in the past and we're not worse for it. The genie is out of the bottle and it ain't going back in.

View: https://youtu.be/XmY83eCzHwI?si=fVUL4STw8dY6cVGi

You'll love how well ChatGPT did as a lawyer.

View: https://youtu.be/oqSYljRYDEM?si=JB5rtBHa1tGy9V_T

The point is that calculators also disrupted the learning experience and nothing happened. Also yes, that video was a joke. Are you expecting people to be dumber with ChatGPT?

That video has literally nothing to do with ChatGPT. Your responses are always so wildly off the mark. I could say I hate France, and you'll post a video about how amazing Italy is.

Could be half a joke, and obviously the cherry-picked case was ridiculous here, but

Anyone using ChatGPT for their court case deserves to be removed from the practice.

Not nothing, at least if we are to believe some studies; maybe even more than anyone expected happened to humans using calculators:

The point is that calculators also disrupted the learning experience and nothing happened.

Does anyone take studies seriously?

Not nothing, at least if we are to believe some studies; maybe even more than anyone expected happened to humans using calculators:

If you do something often enough then your brain will adjust. Do we really need people's brains adjusted for stuff that is now considered unimportant? I took Calculus classes and I'm pretty sure I forgot everything I learned. You know how many teachers were upset when Wikipedia came out? I remember being told that real research was done at the library reading books, using the Dewey Decimal system. Google now rewires your brain. There's a lot of stuff they want you to learn that really isn't important. To some people it's really important, but those same people are also employed doing this. Call it what it is: an article that's meant to get you upset with a clickbait subject about AI, because AI is now on everyone's lips.

https://www.nature.com/articles/s41598-017-08955-2

Brain scans of people who use a mental-abacus strategy seem to show a difference. A lot of the mental tricks for doing calculations in one's head or with physical objects disappeared, and people got much worse at it, as expected.

I'm more worried about a society that's complacent with technology that's become a black box. I learned a lot about computers by just being able to build one, set up the OS, etc. Now you get an Android or iPhone that you'd better not try to modify, or else. The stuff is technically cumulative, which means it applies to technology even today. I feel bad for Gen Z because they missed out on this. Meanwhile schools want me to memorize every president of the United States? You think people want to know this? The only time this ever becomes useful is in a trivia contest. No wonder people have memory loss, because they never wanted to know this to begin with. The less time they take doing it, the more likely they won't remember it, but that's intentional. That's why students use ChatGPT.

A bit like the worst of what the Greeks feared would happen because of the youth writing and reading did happen (our memory and all the memory tricks disappeared for the most part; the parts of the world where paper became cheap first lost the oral history tradition, and so on). The obvious negative effects predicted for a technology often do occur; what they mean for society as a whole, and on balance with the much-harder-to-imagine benefits of the new tech, is another story.

Yes, that article and the study with self-reported, non-randomly-assigned GPT users are ridiculous; brain scans have more chance of pointing to actual things.

If you do something often enough then your brain will adjust. Do we really need people's brains adjusted for stuff that is now considered unimportant? You know how many teachers were upset when Wikipedia came out? I remember being told that real research was done at the library reading books, using the Dewey Decimal system. Google now rewires your brain. There's a lot of stuff they want you to learn that really isn't important. To some people it's really important, but those same people are also employed doing this. Call it what it is: an article that's meant to get you upset with a clickbait subject about AI, because AI is now on everyone's lips.

Phones were an enthusiast affair: people were in clubs and knew how they worked, and then they became a black box. Microwaves are black boxes. At first with cars you either were a mechanic-enthusiast or had one at your service (maybe an exaggeration); over time they became black boxes for most users. Planes too. Many technologies become black boxes over time. We notice it for computers because we lived through it and were enthusiasts, but that's probably just the norm. A lot of watch users no longer knew how they worked once they became just normal, easy-to-use machines.

I'm more worried about a society that's complacent with technology that's become a black box. I learned a lot about computers by just being able to build one, set up the OS, etc.

Or they want you to develop memory tricks, train the brain and use anything as an excuse.

Meanwhile schools want me to memorize every president of the United States? You think people want to know this? The only time this ever becomes useful is in a trivia contest.

Yes, that article and the study with self-reported, non-randomly-assigned GPT users are ridiculous; brain scans have more chance of pointing to actual things.

Is it needed, does it help? That's a second step and much harder to predict, and if it did help, is the overall benefit worth the trade-off? It seems that learning that involves multiple senses and physical spatial position is something most people remember more. I can imagine that doing things is what you remember the most: interviewing a bridge engineer is something you remember more than books, physical books more than a webpage, and web research more than a GPT query. It is easy to predict that extremely short content with multiple dopamine hits can lead to shorter attention spans and make long, forced attention toward boring things harder; what the effects of those are becomes much harder to predict, and is not a "scientific question".

We can imagine that in civil engineering, people will often learn rough values and orders of magnitude for a ton of stuff, even though they will always use a reference when they want to know a value and aren't sure. To have a gut feeling that something is wrong just by looking at someone else's plan, you need to have a lot of knowledge without having to look anything up. The same will be true, in less obvious ways, about everything in life.

To take your Wikipedia example, we can imagine most of the things teachers did not like about it became true, maybe worse than their worst fears; the consequences of those things becoming true are a very different thing to try to predict.



We learn to do that throughout the first 12 years of our education. Why is more needed in college or university? I understand teaching something like technical writing if you're going into a field that requires it, but why do you need to be beaten over the head again with basic writing skills as a fucking adult?

Specialized in a subject, yes. But still able to string together a coherent sentence without help from a fucking AI. Isn't that the hallmark of an educated person throughout the ages? Being literate and able to communicate effectively is the basic standard regardless of what major you went for.

not any more and the ai bullshit is only going to make it worse.

We learn to do that throughout the first 12 years of our education.

Yep. The retardification of human civilization is being fast-tracked by AI.

not any more and the ai bullshit is only going to make it worse.

I actually think we probably will come into a renaissance period again soon. Or a Mad Max style period; we are on a road to either.

Yep. The retardification of human civilization is being fast-tracked by AI.

At least for me, when AI does math like this I will always have trust issues with the algorithms that are out there right now.

not any more and the ai bullshit is only going to make it worse.

Math cannot really be done well by a pure LLM (they need a calculator and other plugins), maybe for a while or forever. LLMs are much better either for things that do not need to be precise (text, images, etc.) or for things that do, like code, but whose output can be validated to detect whether it works (a compiler, running a test suite). For a lot of stuff it will need to talk with specialized sub-agents (math, physics, chemistry) that have a non-LLM part to either participate in the generation or validate it. GPT-5 will apparently sometimes ask itself the question 10,000 times and use a validation tool to take the best of the 10,000 answers, which will remove the very common scenario we have now, where you can ask it "are you sure?" and it will often correct itself, finding something better or an error in its previous answer.

At least for me, when AI does math like this I will always have trust issues with the algorithms that are out there right now.
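For anyone curious what "generate a bunch of answers and let a validation tool pick the best" could look like, here is a rough sketch. It is only an illustration, not how any real model does it: the function names, the hard-coded list of "sampled" answers, and the multiplication checker are all made up for the example, and the checker stands in for the non-LLM validator (calculator, compiler, test suite) mentioned above.

```python
from typing import Callable, Iterable, Optional


def best_of_n(candidates: Iterable[int], score: Callable[[int], float]) -> Optional[int]:
    """Return the candidate the validator scores highest, or None if there are none."""
    return max(candidates, key=score, default=None)


def make_multiplication_validator(a: int, b: int) -> Callable[[int], float]:
    """Hypothetical non-LLM checker: scores an answer by closeness to the exact product."""
    exact = a * b
    return lambda answer: -abs(answer - exact)


# Hypothetical answers a model might give for 17 * 23 over several attempts.
sampled_answers = [381, 401, 391, 390]
best = best_of_n(sampled_answers, make_multiplication_validator(17, 23))
print(best)
```

The point of the sketch is that the picking is done by something that can actually check the answer, so the quality of the result depends on the validator rather than on the model being right on its first try.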

Mostly just for software dev stuff, which could be an important distinction: the amount of easily available online content in that space is particularly giant, making them particularly good at it, and the scenarios can be fully self-contained versus dealing with the physical world.

I'm not asking software / web dev type q's, but very "standard" industry spec stuff & most AI is lame.