agrikk

Gawd

- Joined

- Apr 16, 2002

- Messages

- 933

In this thread I take a look at the performance capabilities of three free iSCSI Target software platforms: FreeNAS, Openfiler, and Microsoft iSCSI Target.

I will be comparing file copy performance as well as raw Input/Output Operations Per Second (IOPS) in various test configurations.

Background:

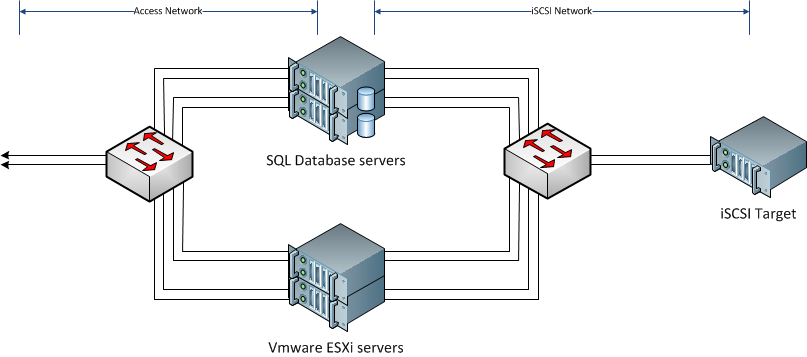

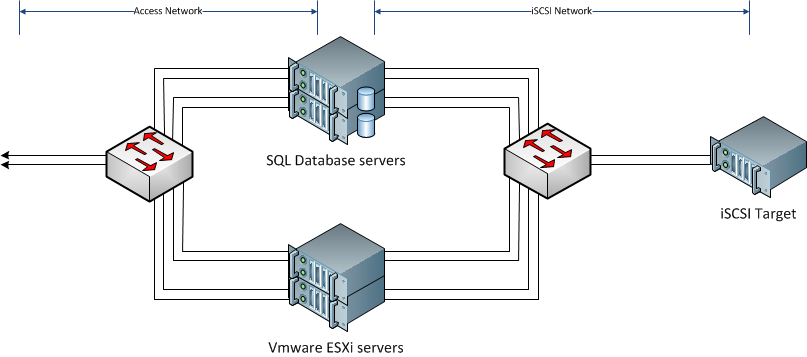

I am building a clustered lab consisting of two Windows Server 2008 R2 boxes that will be running the SQL Server 2008 R2 suite of products (SQL Server, Analysis Services and Integration Services) and two ESXi 5.1 boxes that will be running some Windows VMs (for a SQL Server Reporting Services web pool) and Linux boxes (for monitoring and other web services). I had planned on building an iSCSI-based SAN to provide shared storage for both clusters and needed a free iSCSI target solution.

I wanted to use iSCSI for the additional technological challenge of setting it up in a load-balanced/highly-available configuration to make my cluster as "production ready" as possible.

After doing some research, I found three products that I wanted to take a look at:

FreeNAS - FreeNAS is an Open Source Storage Platform based on FreeBSD and supports sharing across Windows, Apple, and UNIX-like systems. FreeNAS 8 includes ZFS, which supports high storage capacities and integrates file systems and volume management into a single piece of software.

Openfiler - Any industry standard x86 or x86/64 server can be converted into a powerful multi-protocol network storage appliance, replete with an intuitive browser-based management interface, in as little as 15 minutes. File-based storage networking protocols such as CIFS and NFS ensure cross-platform compatibility in heterogeneous networks - with client support for Windows, Linux, and Unix. Fibre channel and iSCSI target features provide excellent integration capabilities for virtualization environments such as Xen and VMware.

Microsoft iSCSI Target - Microsoft iSCSI Software Target provides centralized, software-based and hardware-independent iSCSI disk subsystems in storage area networks (SANs). You can use iSCSI Software Target, which includes a Microsoft Management Console (MMC) snap-in, to create iSCSI targets and iSCSI virtual disks. You can then use the Microsoft iSCSI Software Target console to manage all iSCSI targets and virtual disks created by using iSCSI Software Target.

I have used FreeNAS and Openfiler before, but this was my first look at Microsoft's product as it was formerly only available via Microsoft Storage Server installed on OEM boxes.

The Setup:

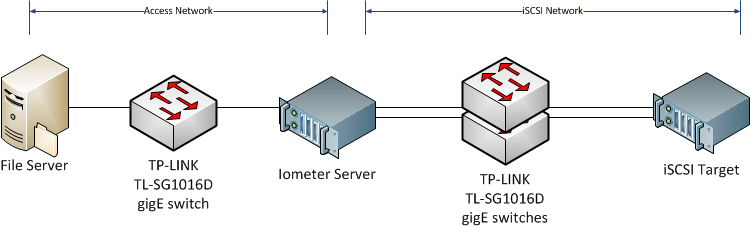

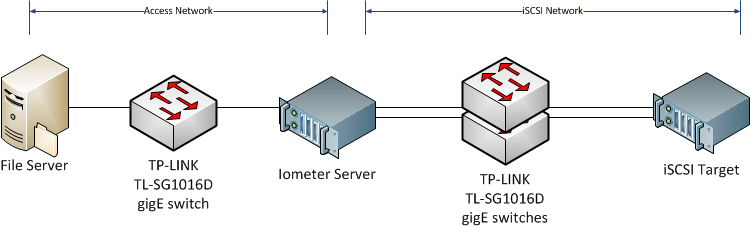

I used some spare gear I had lying around and kept the setup as stock as possible:

- The file server is a whitebox setup that is part of my home network.

- The switches are three TP-LINK TL-SG1016D unmanaged switches.

- The Iometer server that runs my IOPS tests and file copy tests:

-- AMD Athlon X2 5200+ CPU

-- ASUS M2N-E motherboard

-- 8GB Crucial RAM

-- 2x WD Blue 500GB Hard drives (RAID-0)

-- Access network NIC: nForce gigE

-- iSCSI network NIC: Intel 2-port gigE PCIe

- The iSCSI Target server:

-- Supermicro AS-1012G-MTF 1U Rackmount Server

-- AMD Opteron 6128 Magny-Cours 2.0GHz 8-Core CPU

-- 16GB (8x2GB) Kingston unbuffered ValueRAM

-- 5 x Western Digital WD Blue WD6400AAKS 640GB 7200 RPM HD (1 OS, 4 JBOD data)

The Iometer server is running Windows Server 2008 R2 SP1.

While the Supermicro H8SGL-F motherboard has an on-board Adaptec RAID controller, it is a software solution that requires non-native drivers. To keep things simple and as apples-to-apples as possible, I configured the BIOS to force the controller into AHCI-SATA mode instead of RAID and configured the data array in RAID-0 through the OS.

Since this was a performance test in a lab environment, I opted to configure the data array on the filer in RAID-0 to give as much juice as possible to the disk subsystem. FreeNAS has some advanced configuration options with RAID-Z and RAID-Z2, and the software RAID drivers for Windows provided by Supermicro are awesome, but to keep the playing field as level as possible, RAID-0 configured through the OS was what I chose. For the love of god, please never use RAID-0 for a data storage volume unless you know what you are doing and have a solid backup strategy in place!

After installing the Filer OS on disk0, disks 1-4 were configured through the OS as RAID-0, meaning that all I/O operations were handled through the OS. No hardware acceleration was used.
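For anyone unfamiliar with what the OS is doing in a striped set, here is a minimal sketch of how RAID-0 maps a logical offset onto four data disks. The 64 KiB stripe unit and the function name are assumptions for illustration only; the stripe size actually used by each filer OS was not recorded in this test.

```python
def locate_block(offset, stripe_size=64 * 1024, n_disks=4):
    """Map a logical byte offset on a RAID-0 volume to (disk index, byte offset on that disk).

    stripe_size is an assumed value; n_disks=4 matches the four data disks above.
    """
    stripe_index = offset // stripe_size   # which stripe unit the offset falls in
    disk = stripe_index % n_disks          # stripe units rotate across the disks
    row = stripe_index // n_disks          # full rows of stripe units before this one
    return disk, row * stripe_size + offset % stripe_size


# Consecutive stripe units land on consecutive disks, then wrap around.
for off in (0, 64 * 1024, 4 * 64 * 1024 + 100):
    print(off, locate_block(off))
```

Because consecutive stripe units sit on different disks, a large sequential transfer keeps all four spindles busy at once, which is where the extra "juice" comes from. It is also why losing any one disk loses the whole volume.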

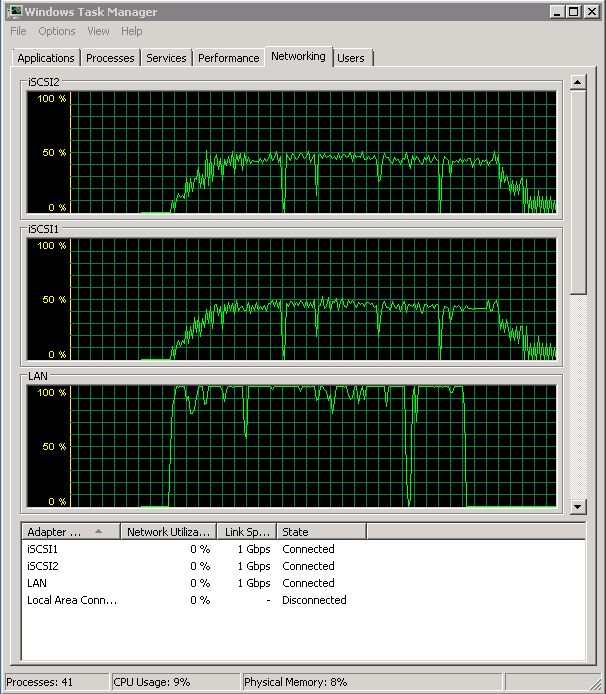

With each Filer setup the Iometer server was configured to mount three 50GB volumes using the native iSCSI Initiator feature that comes bundled with Windows 2008 R2. The Multipath I/O (MPIO) feature was also enabled to allow multiple paths to the filer from the server across two different switches, both to increase available bandwidth to the target and to provide fault tolerance like you would find in a production environment.

After doing some research, I discovered that using the Multiple Connections per Session (MCS) functionality in Microsoft's iSCSI Initiator should be avoided as it isn't implemented on some systems and isn't supported by VMware.

Some further reading:

link1

link2

The Tests:

I came up with a set of nine tests that I hoped would give a good representation of lab tests and real-world scenarios. The file copy tests were performed using the robocopy.exe found in Windows 2008 R2. The Iometer tests were performed using version 2006.07.27 of Iometer installed on the test box. The Iometer tests were run for a period of 30 minutes each.

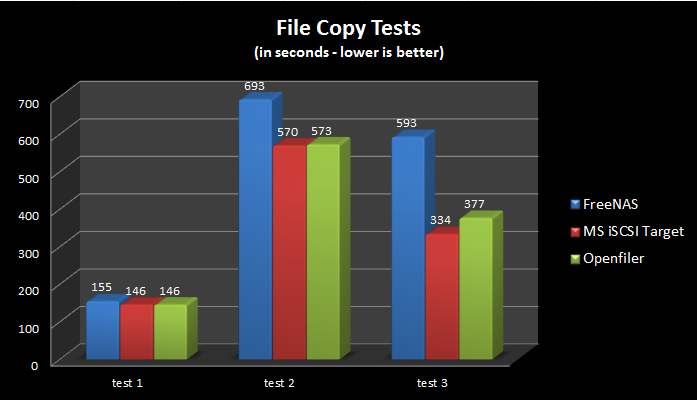

The File Copy tests:

Each of the next three tests involved a robocopy job copying files from an external file server to one or more iSCSI volumes mounted locally on the Iometer test server. These would test the write ability of the filer and its ability to handle multiple streams at once.

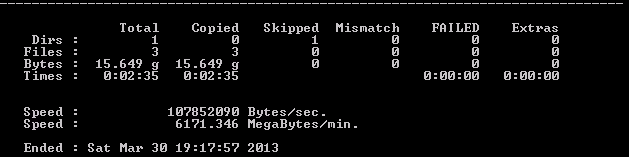

Test 1 - A single robocopy job that copies 3 large files (~15.5GB) from a file server to an iSCSI volume. This setup tests the raw sequential write ability of the filer.

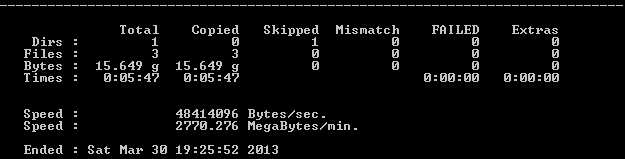

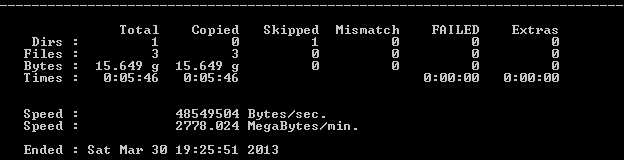

Test 2 - Two robocopy jobs copying three large files (~15.5GB) to each of two iSCSI volumes. Another sequential write test, but with two different write streams occurring simultaneously. The results are the sum of the duration of both copy jobs.

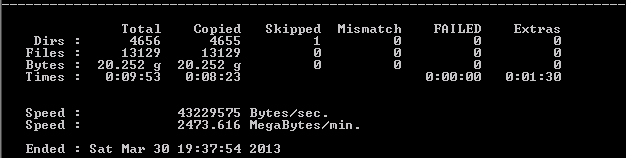

Test 3 - A single robocopy job copying a large amount of data in various file types (13,000 files / 20GB data). I wanted to see the throughput of a simulated robocopy-based backup job.
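Since the file copy results are reported in seconds, here is a small sketch for turning a duration into an average throughput, plus the average file size in the Test 3 mix. The function names are mine, decimal units (1 GB = 1000 MB) are assumed, and the 200-second duration is a made-up example, not a result from these tests.

```python
def copy_throughput_mb_s(total_gb, seconds):
    """Average copy throughput in MB/s, assuming decimal units (1 GB = 1000 MB)."""
    return total_gb * 1000 / seconds


def average_file_mb(total_gb, file_count):
    """Average file size in MB for a data set of total_gb spread over file_count files."""
    return total_gb * 1000 / file_count


# Test 3 mix from the description above: 13,000 files / 20GB.
print(round(average_file_mb(20, 13000), 2))   # 1.54

# Hypothetical duration, for illustration only: ~15.5GB copied in 200 seconds.
print(copy_throughput_mb_s(15.5, 200))        # 77.5
```

The average of roughly 1.5 MB per file is why Test 3 behaves differently from Tests 1 and 2: the job spends proportionally more time on per-file overhead than on streaming data.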

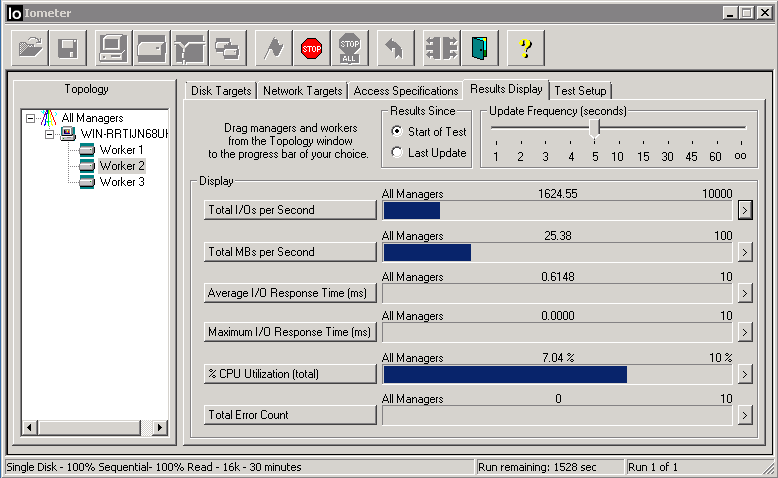

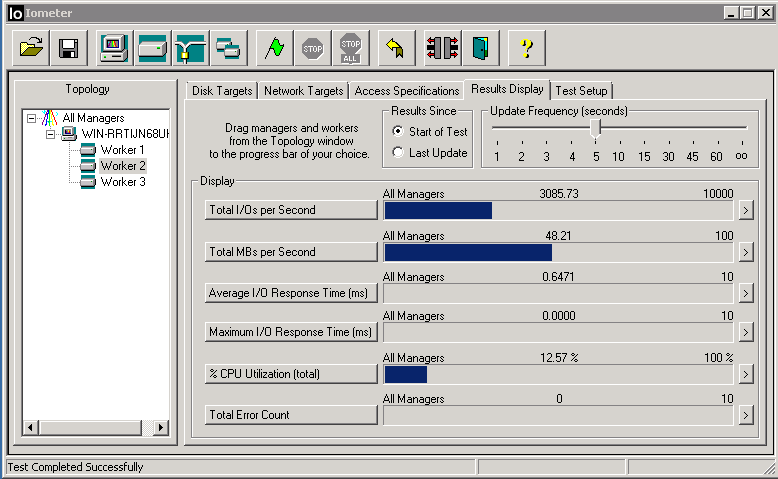

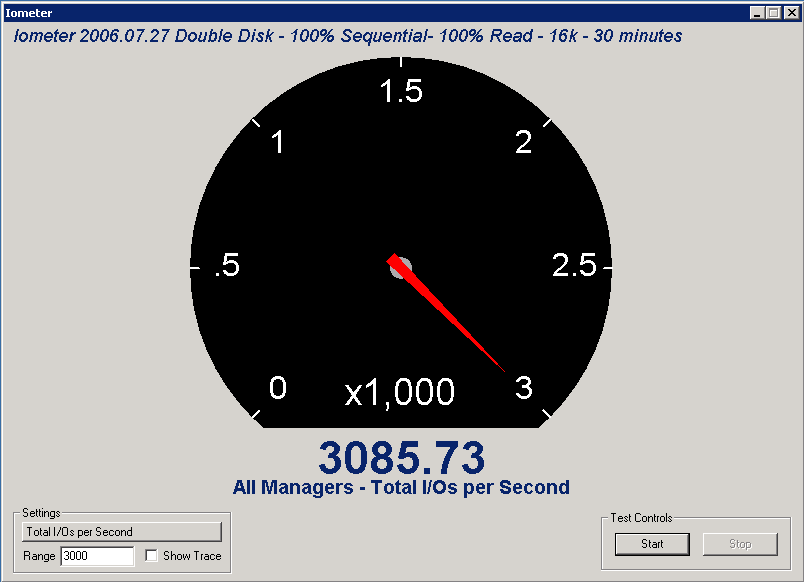

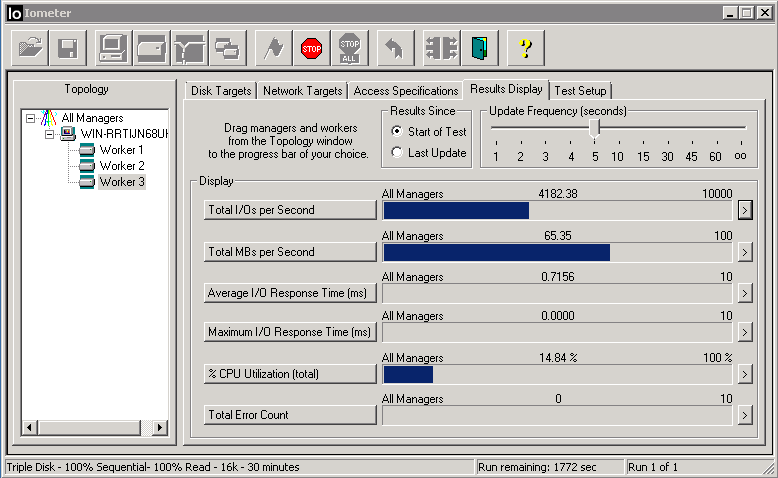

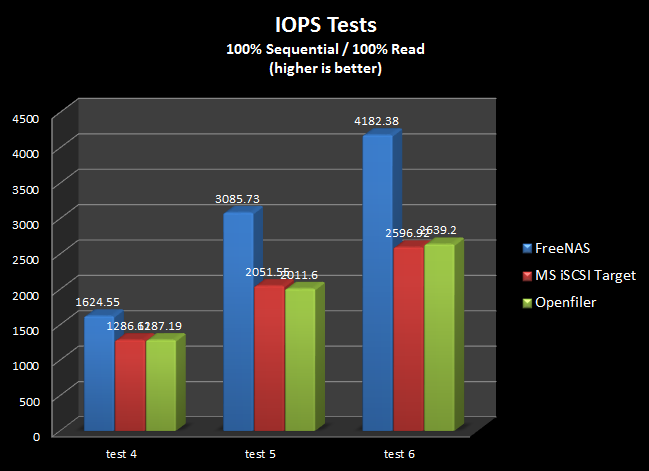

IOPS tests - Sequential Read

These are the kind of tests that typically put up the big numbers that storage vendors like to post on their web sites. A 100% sequential read takes out nearly all of the seek time latency and removes any mechanical latency caused by the write process. Iometer was configured on the test server to place a read load on one, two, or three volumes simultaneously. I wanted to see how the IOPS score scaled as multiple volumes were accessed simultaneously.

Test 4 - 100% Read / 100% Sequential on one iSCSI volume mounted locally

Test 5 - 100% Read / 100% Sequential on two iSCSI volumes mounted locally

Test 6 - 100% Read / 100% Sequential on three iSCSI volumes mounted locally
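To put an IOPS score in perspective, it helps to convert it to bandwidth and to check how well it scales with the number of volumes. The sketch below is illustrative only: the function names are mine, the transfer size is an assumption (it is not stated in this write-up), the sample IOPS figures are invented, and the scaling check assumes the score is the total across all volumes.

```python
def iops_to_mb_s(iops, block_size_bytes):
    """Bandwidth in MB/s (decimal) implied by an IOPS score at a given transfer size."""
    return iops * block_size_bytes / 1_000_000


def scaling_efficiency(iops_one_volume, iops_n_volumes, n):
    """1.0 means total IOPS grew linearly when going from one volume to n volumes."""
    return iops_n_volumes / (iops_one_volume * n)


# Invented numbers, not results: 3000 IOPS at an assumed 32 KiB transfer size.
print(iops_to_mb_s(3000, 32 * 1024))        # 98.304

# Invented numbers: 3000 IOPS on one volume, 4500 IOPS total on two volumes.
print(scaling_efficiency(3000, 4500, 2))    # 0.75
```

An efficiency well below 1.0 when adding volumes suggests that something shared, such as the links or the disk array, is already saturated.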

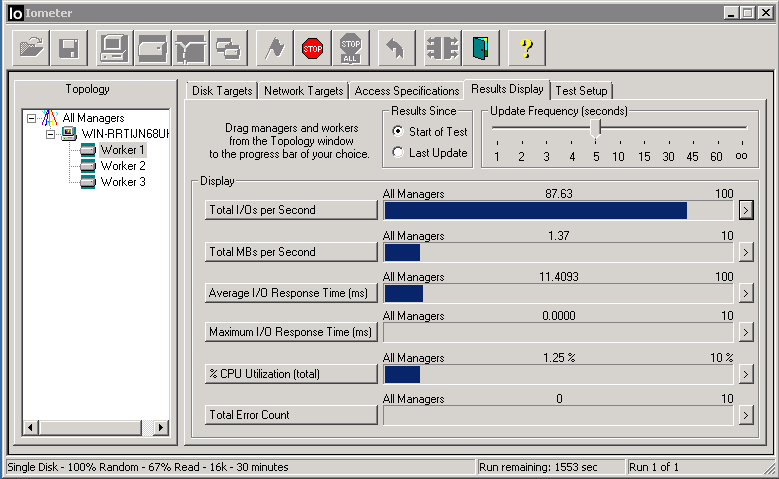

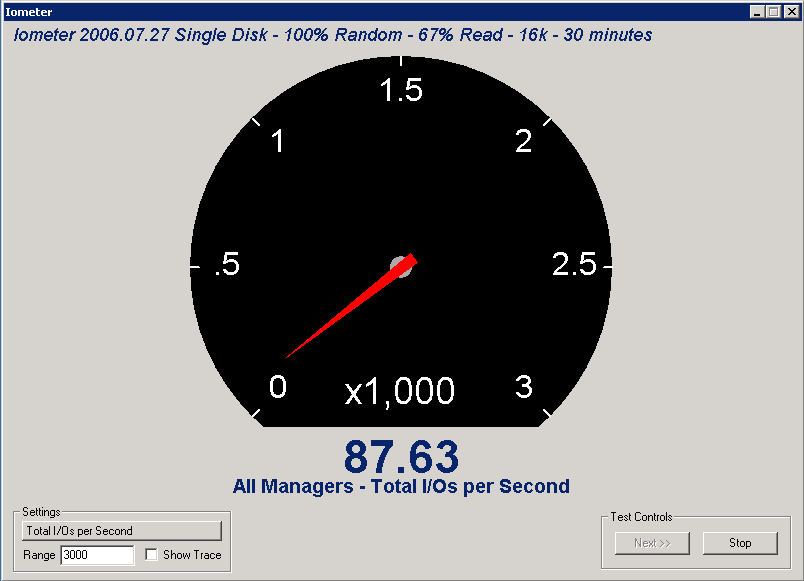

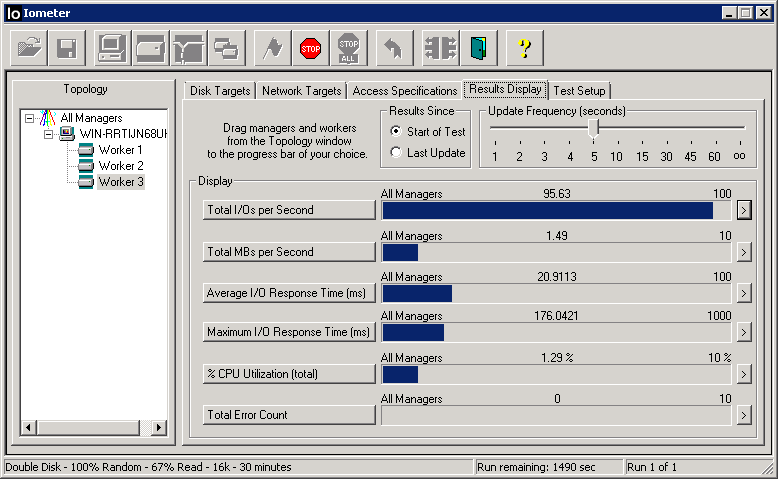

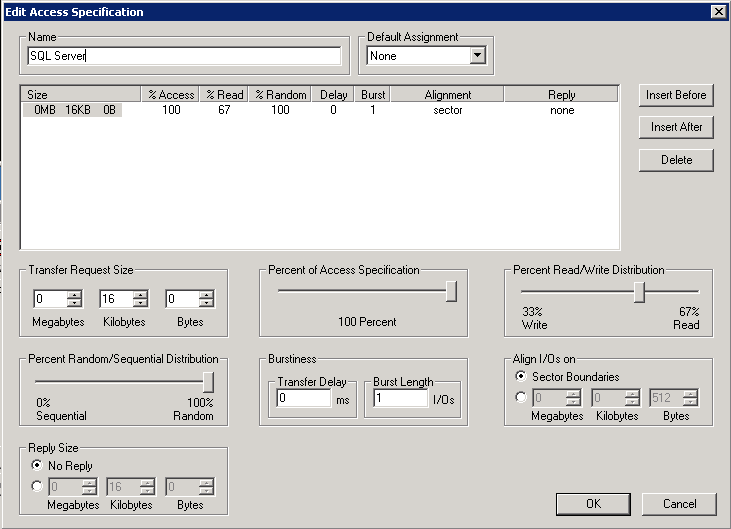

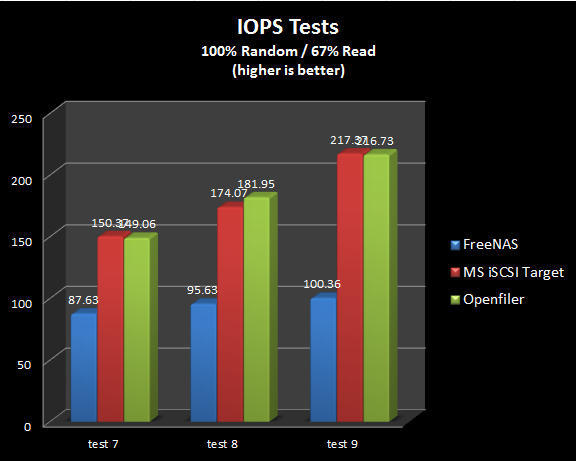

IOPS tests - SQL disk access pattern

These are more real-world tests, involving read/write patterns that approximate the disk usage pattern of a large SQL database:

In this test case, access patterns are completely random across the entire volume, and involve mostly read operations but contain some write operations as well. These tests put a serious strain on the disks. Judging by the massive clicking coming from the disk array as the heads seeked across the platters, I thought I would kill a disk for sure.

Test 7 - 67% Read / 100% Random on one iSCSI volume mounted locally

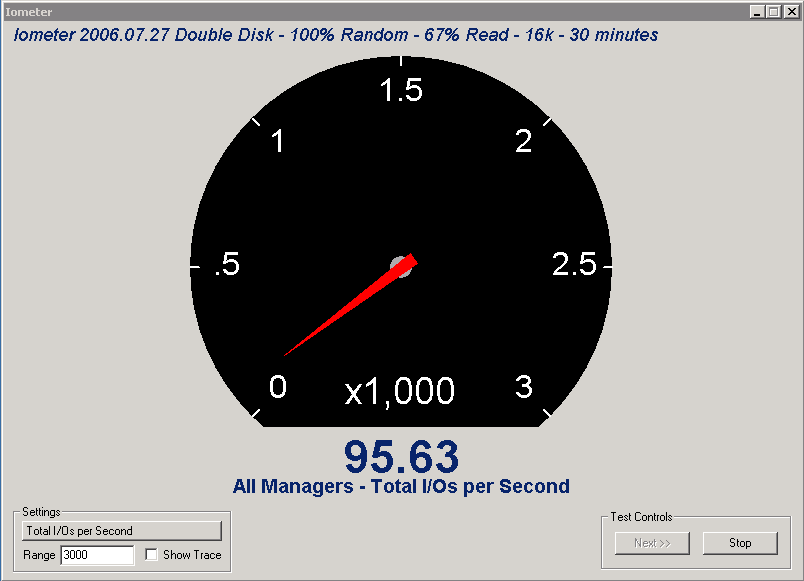

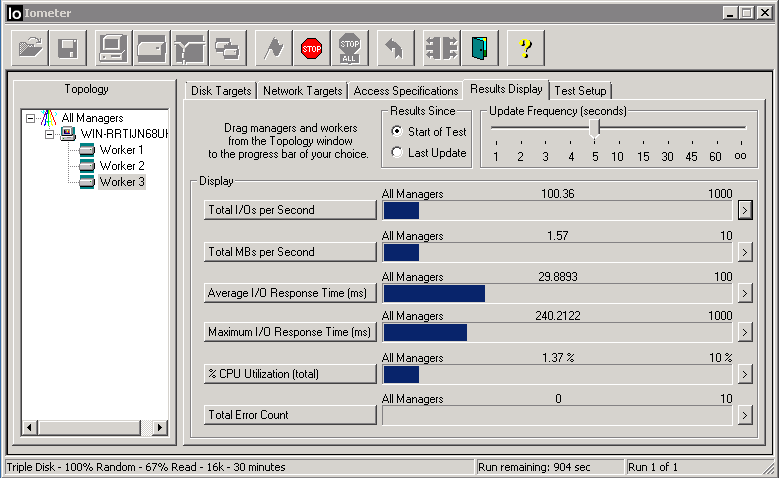

Test 8 - 67% Read / 100% Random on two iSCSI volumes mounted locally

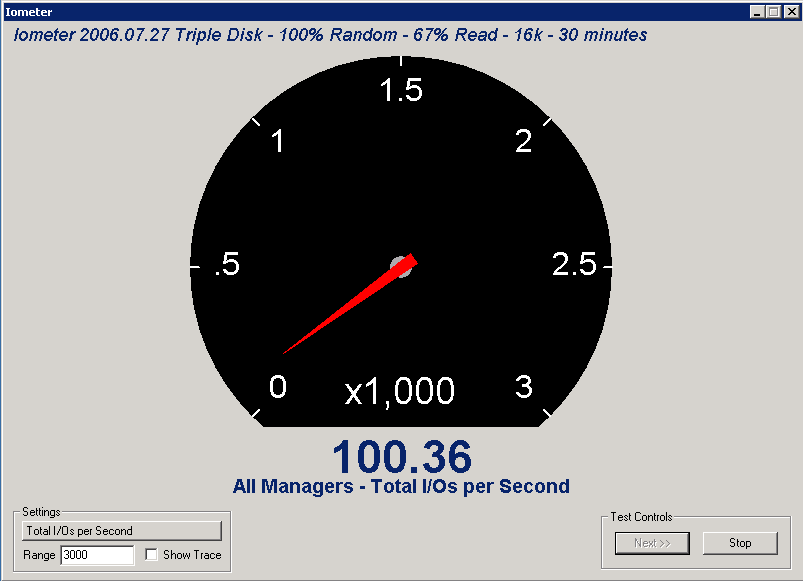

Test 9 - 67% Read / 100% Random on three iSCSI volumes mounted locally
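To make the 67% read / 100% random pattern concrete, here is a rough sketch of the kind of request stream it describes. This is not how Iometer works internally; the function name and the 8 KiB transfer size are assumptions for illustration, and the 50GB volume size matches the volumes described in the setup.

```python
import random


def sql_like_pattern(n_ops, volume_bytes, block_size=8 * 1024, read_fraction=0.67, seed=0):
    """Yield (operation, byte offset) pairs: mostly reads, uniformly random offsets.

    block_size is an assumed transfer size, not a setting taken from the tests.
    """
    rng = random.Random(seed)                  # seeded so a run is repeatable
    n_blocks = volume_bytes // block_size      # block-aligned positions on the volume
    for _ in range(n_ops):
        op = "read" if rng.random() < read_fraction else "write"
        yield op, rng.randrange(n_blocks) * block_size


ops = list(sql_like_pattern(10_000, 50 * 10**9))
reads = sum(1 for op, _ in ops if op == "read")
print(reads / len(ops))    # close to 0.67
print(ops[:3])             # offsets jump all over the volume
```

Every request lands at an unrelated offset, so each one costs a head seek on a spinning disk. That is why these scores come out so much lower than the sequential ones.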

The Results:

The charts below show direct comparisons of the test results.

The file copy tests (results in seconds - the lower the better):

In the single copy of large files, all three post similar scores as the access network becomes the bottleneck. But in the multiple copy streams test and the small files test, FreeNAS lags behind and surprisingly the Microsoft iSCSI target edges out Openfiler.
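A quick back-of-the-envelope check on the network bottleneck: the source file server sits behind a single gigabit access NIC, so there is a floor on how fast ~15.5GB can arrive no matter which filer is on the other end. The sketch assumes decimal units and ignores all protocol overhead, so real copies will take longer than this.

```python
def min_transfer_seconds(gigabytes, link_gbit_s=1.0):
    """Best-case seconds to move `gigabytes` (decimal GB) over a link of `link_gbit_s` Gbit/s.

    Ignores TCP/IP, SMB and iSCSI overhead, so this is only a lower bound.
    """
    return gigabytes * 8 / link_gbit_s


print(min_transfer_seconds(15.5))   # 124.0
```

Any filer that can sustain writes faster than the access link delivers data will finish Test 1 near this floor, which would explain the near-identical scores.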

The sequential read tests (Raw IOPS scores - the higher the better):

Here FreeNAS is the clear performance leader, with Openfiler and Microsoft coming in neck and neck. Unfortunately, unless you are running a software jukebox or some kind of media server, this is a synthetic score with very few real-world implications.

The random read/write tests (Raw IOPS scores - the higher the better):

In these tests, FreeNAS gets left behind by both Openfiler and Microsoft, which trade the performance lead between the tests.

Conclusion:

The purpose of this test was to find the best performing iSCSI target with all other things being equal. By this criterion alone, it would be a split decision between Openfiler and Microsoft.

However, the Microsoft solution requires the purchase of a licensed copy of Windows Server 2008 R2 to run its filer software, so it isn't exactly free. In addition, Openfiler can be run from a USB key, leaving all disks available for storage, whereas the Microsoft target cannot easily be run from anything other than a hard drive, which takes up storage space for the OS.

With these added criteria, Openfiler emerges as the iSCSI champ.

Postscript:

However, since I already have a Windows file server running with a beefy RAID card sitting underutilized and with ports available, I am considering adding 4 drives in a hardware RAID-10 array and dedicating that volume to iSCSI storage using the Microsoft iSCSI target software. This would remove the requirement for a dedicated iSCSI target, which would save space in my rack and potentially save power. Then again, my file server stays on 24/7, so the energy drain of four drives spinning all the time may be significant, and a dedicated iSCSI target would be powered off most of the time, so...

